MatSpray: Fusing 2D Material World Knowledge on 3D Geometry (2512.18314v1)

Abstract: Manual modeling of material parameters and 3D geometry is a time consuming yet essential task in the gaming and film industries. While recent advances in 3D reconstruction have enabled accurate approximations of scene geometry and appearance, these methods often fall short in relighting scenarios due to the lack of precise, spatially varying material parameters. At the same time, diffusion models operating on 2D images have shown strong performance in predicting physically based rendering (PBR) properties such as albedo, roughness, and metallicity. However, transferring these 2D material maps onto reconstructed 3D geometry remains a significant challenge. We propose a framework for fusing 2D material data into 3D geometry using a combination of novel learning-based and projection-based approaches. We begin by reconstructing scene geometry via Gaussian Splatting. From the input images, a diffusion model generates 2D maps for albedo, roughness, and metallic parameters. Any existing diffusion model that can convert images or videos to PBR materials can be applied. The predictions are further integrated into the 3D representation either by optimizing an image-based loss or by directly projecting the material parameters onto the Gaussians using Gaussian ray tracing. To enhance fine-scale accuracy and multi-view consistency, we further introduce a light-weight neural refinement step (Neural Merger), which takes ray-traced material features as input and produces detailed adjustments. Our results demonstrate that the proposed methods outperform existing techniques in both quantitative metrics and perceived visual realism. This enables more accurate, relightable, and photorealistic renderings from reconstructed scenes, significantly improving the realism and efficiency of asset creation workflows in content production pipelines.

Explain it Like I'm 14

Overview

This paper introduces MatSpray, a method that turns regular photos of an object into a high‑quality 3D model you can light in different ways. It focuses on getting the object’s “materials” right—things like its true color, how shiny or rough it is, and whether parts are metal—so the 3D model looks realistic under new lighting.

What questions did the researchers ask?

They set out to answer three questions:

- How can we use smart 2D AI tools (that understand materials from photos) to build better materials on 3D objects?

- How do we make sure material guesses from different photos agree with each other in 3D?

- Can this make 3D models faster to build and more realistic when “relit” (shown under brand‑new lighting)?

How did they do it?

Think of building a 3D model like making a statue out of lots of tiny, soft paint dots. Then you “spray” the right material info onto those dots and adjust it until it looks right from every angle.

Step 1: Build a 3D shape with Gaussian Splatting

- Gaussian Splatting represents a 3D object using many small, fuzzy blobs (called “Gaussians”) instead of triangles or voxels. This gives the basic shape and the surface directions (normals).

Step 2: Predict materials from 2D photos using diffusion models

- A diffusion model is a type of generative AI that learns to create images by removing noise step by step; here it is used to predict material maps from photos.

- From each photo, the AI predicts three “PBR” material maps:

  - Base color (also called albedo): the true color of the object without shadows or glare.

  - Roughness: how smooth or rough the surface is (smooth = shiny, rough = matte).

  - Metallic: whether a surface acts like metal (reflective) or not.

- Different photos can give slightly different guesses for the same spot on the object.
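To make the three maps concrete, here is a minimal sketch of how a renderer commonly turns base color and metallic into a diffuse color and a specular reflectance at normal incidence (F0). This follows the widely used metallic/roughness convention, not necessarily the paper's exact shading model. The function name `split_base_color` and the default `dielectric_f0=0.04` are assumptions made for illustration.

```python
def split_base_color(base_color, metallic, dielectric_f0=0.04):
    """Split a base color into (diffuse, F0) using the common metallic workflow.

    Assumption: non-metals reflect about 4% at normal incidence (0.04),
    which is a conventional default and not a value taken from the paper.
    """
    # Metals have no diffuse component, so diffuse fades out as metallic -> 1.
    diffuse = tuple(c * (1.0 - metallic) for c in base_color)
    # F0 blends from the dielectric default to the base color as metallic -> 1.
    f0 = tuple(dielectric_f0 * (1.0 - metallic) + c * metallic for c in base_color)
    return diffuse, f0


# A red plastic (non-metal) and a gold-like metal, both with made-up colors.
plastic_diffuse, plastic_f0 = split_base_color((0.8, 0.1, 0.1), metallic=0.0)
gold_diffuse, gold_f0 = split_base_color((1.0, 0.77, 0.34), metallic=1.0)
```

For the plastic, the base color ends up entirely in the diffuse term. For the metal, it ends up entirely in the specular term, which is why a wrong metallic guess changes the look of an object so strongly under new lighting.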

Step 3: “Spray” those materials onto the 3D model with ray tracing

- Ray tracing casts virtual rays from each photo’s pixels into the scene to see which fuzzy blobs they hit.

- For each blob, they gather the material values seen in each photo and attach them to the blob.

- Now each blob has several per‑view material suggestions—but they’re not perfectly consistent yet.
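The bookkeeping in this step can be sketched as follows. The ray tracing itself is not implemented here: the `hits` list stands in for its output, and the tuple layout `(gaussian_id, view_id, value)` is a hypothetical format. Reducing the samples of one view with a median is also an assumption. It is one of several aggregation choices and is not confirmed as the paper's.

```python
from collections import defaultdict
from statistics import median


def gather_per_view(hits):
    """Group ray-hit samples into one material value per (Gaussian, view).

    hits: iterable of (gaussian_id, view_id, value) tuples, a hypothetical
    stand-in for what a Gaussian ray tracer would report.
    Returns {gaussian_id: {view_id: median of the values seen in that view}}.
    """
    samples = defaultdict(lambda: defaultdict(list))
    for gaussian_id, view_id, value in hits:
        samples[gaussian_id][view_id].append(value)
    return {
        gaussian_id: {view_id: median(values) for view_id, values in views.items()}
        for gaussian_id, views in samples.items()
    }


# Made-up roughness samples: Gaussian 0 is seen in two views, Gaussian 1 in one.
hits = [
    (0, "view_a", 0.30), (0, "view_a", 0.34), (0, "view_a", 0.90),
    (0, "view_b", 0.40),
    (1, "view_b", 0.75),
]
per_view = gather_per_view(hits)
```

After this step, Gaussian 0 holds two suggestions (one per view) that do not agree, which is the inconsistency the next step addresses.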

Step 4: Make the materials consistent with the Neural Merger

- The Neural Merger is a small neural network that decides how much to trust each photo’s material suggestion for a blob.

- It uses a softmax (think: “smart weighting”) to blend only the given suggestions—so it doesn’t invent wild new values—and ensures materials agree across views.

- There’s one Neural Merger per material type (color, roughness, metallic), helping keep things physically realistic.
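The softmax blending idea can be illustrated with a few lines of plain Python. This is only a sketch: in the paper the per-view scores come from a small neural network, whereas the `scores` below are hard-coded placeholders, and the names `softmax` and `merge_views` are chosen here for illustration.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def merge_views(values, scores):
    """Blend per-view material values with softmax weights."""
    weights = softmax(scores)
    return sum(w * v for w, v in zip(weights, values))


# Three roughness suggestions for one blob; the third view is an outlier.
roughness_per_view = [0.30, 0.35, 0.90]
scores = [2.0, 2.0, -3.0]  # placeholder scores, not real network outputs
merged = merge_views(roughness_per_view, scores)
```

Because the weights are positive and sum to 1, the merged value always lies between the smallest and largest suggestion. That is the sense in which the merger cannot invent values outside what the views proposed.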

Step 5: Check and refine by rendering (PBR)

- PBR (Physically Based Rendering) simulates how light behaves on real materials.

- They render the object using the merged materials and compare the result with the original photos.

- Differences guide small adjustments to make the final materials more accurate.
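The render, compare, adjust loop can be shown with a deliberately tiny toy. It assumes a single unknown albedo value, a simple "pixel = albedo × incoming light" shading rule, and known lighting. None of these simplifications come from the paper, which refines full material maps together with an environment map. The function name `refine_albedo` and all numbers are made up.

```python
def refine_albedo(observed, irradiance, albedo=0.5, lr=0.1, steps=200):
    """Fit one albedo value by gradient descent on the mean squared error
    between rendered pixels (albedo * irradiance) and observed pixels."""
    n = len(observed)
    for _ in range(steps):
        # Derivative of mean((albedo * e - o)^2) with respect to albedo.
        grad = sum(2.0 * (albedo * e - o) * e for e, o in zip(irradiance, observed)) / n
        albedo -= lr * grad
        albedo = min(1.0, max(0.0, albedo))  # keep the value physically plausible
    return albedo


# Synthetic "photos": four pixels lit with different amounts of light.
irradiance = [0.2, 0.8, 1.0, 0.5]
true_albedo = 0.6
observed = [true_albedo * e for e in irradiance]
estimate = refine_albedo(observed, irradiance)
```

Starting from a guess of 0.5, the estimate moves toward the value that reproduces the observed pixels, which mirrors how rendering differences guide the material adjustments.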

What did they find?

- More realistic relighting: Their 3D models look better when shown under new lighting, especially for shiny or metallic objects.

- Cleaner materials: The base color has fewer “baked‑in” shadows, and roughness/metallic maps are more consistent across views.

- Better accuracy: Their results beat other methods on standard image-quality metrics (PSNR, SSIM, and LPIPS) for many test objects.

- Faster: On average, the method was about 3.5× quicker than a strong baseline (IRGS), making it more practical for real projects.

Why does it matter?

- For games and movies: Artists spend lots of time making materials look right. This tool speeds up building realistic, relightable 3D assets from ordinary photos.

- Uses existing AI “world knowledge”: It plugs in any good 2D material predictor (present or future) and lifts its knowledge into 3D.

- More realism, less effort: By fusing 2D material understanding with a solid 3D shape and a smart merging step, the final models look more natural under different lights.

A simple takeaway

MatSpray takes what a smart photo‑based AI knows about materials and “sprays” that knowledge onto a detailed 3D shape, then carefully blends and checks it so everything stays consistent and physically believable. The result: better‑looking, relightable 3D objects, created faster.

Knowledge Gaps

Below is a single, consolidated list of concrete knowledge gaps, limitations, and open questions that the paper leaves unresolved. Each point is intended to be actionable for future research.

- Reliance on 2D diffusion predictors: How to systematically handle poor or biased material predictions, including uncertainty quantification and confidence-aware weighting in the Neural Merger.

- Limited BRDF expressiveness: Extension beyond basecolor/roughness/metallic to handle anisotropy, clearcoat, specular tint, IOR, normal/displacement maps, subsurface scattering, transmission, and emissive materials.

- Lighting modeling scope: Incorporation of local lights, occlusions, shadowing, interreflections, and light transport beyond a single environment map to avoid compensating material parameters for missing global illumination.

- Camera pipeline factors: Treatment of exposure, white balance, tone mapping, and color space calibration across views to prevent material and environment map estimates from absorbing photometric inconsistencies.

- 2D-to-3D projection robustness: Occlusion-aware assignment and visibility reasoning during Gaussian ray tracing to avoid mixing materials across overlapping footprints and partially self-occluded surfaces.

- Hyperparameter sensitivity: Lack of analysis for ray-tracing falloff parameter λ, footprint supersampling rates, and merger network capacity; no guidelines for tuning or stability under different scenes.

- View-dependent effects removal: Explicit algorithms to detect and suppress specular highlights and baked-in shadows before projection, rather than relying solely on Neural Merger and photometric supervision.

- Neural Merger inputs: Evaluation of adding per-view geometry/visibility features (normals, view angle, depth ordering, shading context) and per-pixel confidence to improve weighting decisions beyond position and material values.

- Uncertainty and probabilistic fusion: A principled Bayesian or ensemble-based merger to propagate and resolve per-view material uncertainty, including outlier suppression and calibrated confidence intervals.

- Geometry dependence: Strategies to mitigate failures when R3DGS produces inconsistent geometry/normals, including joint refinement with SDFs/meshes or geometry regularizers that improve material-geometry disentanglement.

- Missed Gaussians: A concrete mechanism (e.g., projection transformer or assignment optimization) to recover very small or flat Gaussians missed by ray tracing, and quantitative evaluation of the resulting coverage.

- Scalability: Performance and memory scaling analyses with number of views, image resolution, scene complexity, and object count; methods to maintain speed and quality on commodity hardware.

- Large scenes and clutter: Extension from single-object assets to multi-object, cluttered, or outdoor scenes with complex occlusions and varying backgrounds, including segmentation and instance-level material attribution.

- Segmentation reliance: The pipeline’s dependence on accurate object masks is not specified; robust integration of automatic segmentation and foreground/background separation is needed.

- Real-world evaluation: Absence of quantitative material metrics for real scenes and lack of perceptual/user studies to substantiate “perceived visual realism”; benchmarks and protocols are needed.

- Baselines and fairness: Broader comparisons to mesh/SDF-based inverse rendering and recent relightable NeRF/GS methods; controlled fairness regarding environment map occlusions and diffusion-free baselines.

- Domain generalization: Robustness of material fusion under different capture conditions (indoor/outdoor, dynamic lighting, handheld cameras, motion blur), and cross-domain validation beyond Navi and selected synthetic assets.

- Material plausibility checks: Enforcement and evaluation of physical constraints (energy conservation, Fresnel consistency, plausible roughness-metallic relationships) to avoid visually plausible but physically invalid parameters.

- Normal/microgeometry handling: Joint estimation of normal maps and microstructure (bump/height) from 2D priors to better capture fine-scale specular behavior and reduce reliance on geometry-only normals.

- End-to-end training: Exploration of differentiable integration with diffusion predictors (e.g., fine-tuning or adapter layers) to co-train 2D priors and 3D fusion for improved cross-view consistency.

- Aggregation alternatives: Systematic study of projection aggregation strategies (median vs. opacity/distance-weighted, robust M-estimators) and their impact on outlier resistance and material sharpness.

- Environment map estimation: Methods to jointly optimize spatially varying illumination or per-view lighting with occlusion-aware environment visibility, rather than a single global environment.

- Failure characterization: Detailed analysis of common failure modes (e.g., highly specular dielectrics, transparent/partially transmissive objects, complex patterns) with targeted ablations and remediation strategies.

- Metric design: Development of multi-view consistency metrics for materials (not just image PSNR/SSIM/LPIPS), including cross-view material variance and physically motivated error measures.

- Generalization to video: Explicit handling of temporal consistency across longer sequences, quantifying how batch-wise diffusion predictions drift and how the merger adapts over time.

- Hypernetwork design: Investigation of per-channel mergers vs. shared architectures, cross-channel coupling, and regularization (e.g., sparsity/entropy constraints) to avoid over-smoothing and retain material detail.

- Open-source and reproducibility: Availability of code, pre-trained merger models, and standardized datasets/material annotations to facilitate benchmarking and reproducibility across labs.

Glossary

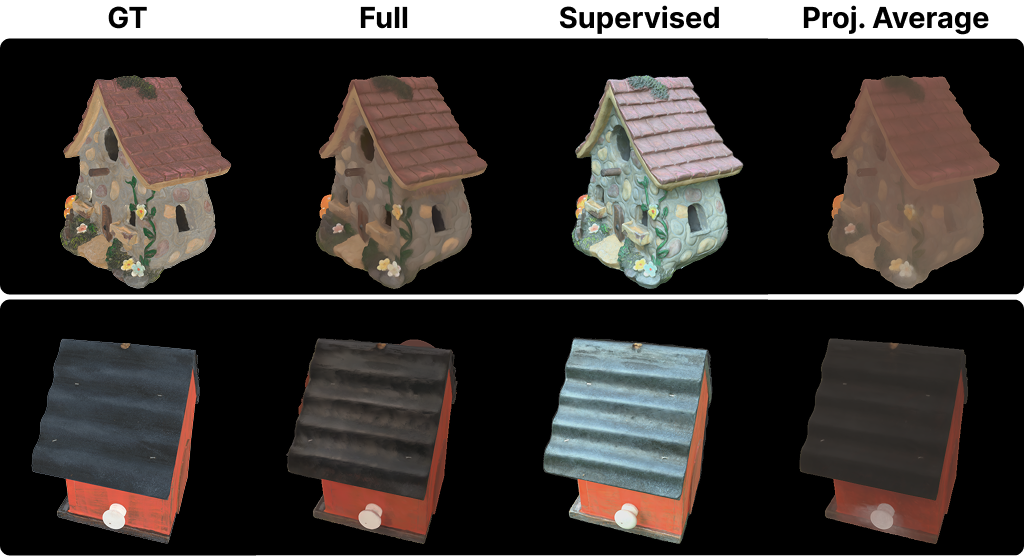

- Ablation study: a systematic evaluation where components of a method are removed or altered to measure their impact on performance. "Quantitative Ablation study on a subset of the evaluated objects."

- Albedo: the intrinsic diffuse reflectance of a surface used in PBR; often referred to as the color independent of lighting. "This combination yields cleaner albedo, more accurate roughness, and informed metallic estimates"

- Base color: the PBR parameter representing the surface’s intrinsic color without lighting effects. "base color, roughness, and metallic parameters"

- Bidirectional reflectance distribution function (BRDF): a function that describes how light is reflected at an opaque surface, depending on incoming and outgoing directions. "Spatially varying BRDFs (svBRDFs) have long been studied"

- COLMAP: a structure-from-motion and multi-view stereo pipeline for estimating camera poses and sparse geometry from images. "Real-world scenes use structure-from-motion reconstruction from COLMAP~\cite{schonberger:16} for both initialization and camera inference."

- Cook–Torrance model: a physically based microfacet reflectance model widely used in rendering. "we adopt a Cook–Torrance variant, which is based on the widely used Disney principled BRDF"

- Deferred rendering: a rendering technique that defers shading calculations to a second pass using intermediate buffers (e.g., normals, material parameters). "The materials maps and normals are further refined based on a rendering loss, evaluated with deferred rendering."

- Deferred shading: computing lighting in a later stage using G-buffers with material and geometric information. "Additionally, using deferred shading we supervise by a PBR-based photometric rendering loss with the multi-view ground truth images of the object."

- Diffusion models: generative models that learn data distributions via iterative denoising, used here to predict material maps from images. "diffusion models operating on 2D images have shown strong performance in predicting physically based rendering (PBR) properties such as albedo, roughness, and metallicity."

- DiffusionRenderer: a specific diffusion-based predictor for PBR material maps. "Of particular relevance is DiffusionRenderer by Huang et al. \cite{huang:25}, whose results on high-quality material maps motivated this work."

- Environment map: an image-based representation of surrounding illumination used to light a scene. "The illumination is modeled by an optimizable environment map during refinement."

- Environment map optimization: the process of adjusting an environment map so that renderings match observed images. "stabilizing joint environment map optimization."

- Gaussian ray tracing: ray tracing techniques that intersect rays with Gaussian primitives to aggregate or assign properties. "We exploit Gaussian Ray Tracing as a mechanism for transferring 2D material data to 3D."

- Gaussian Splatting: a scene representation and rendering method using 3D Gaussians as primitives for fast reconstruction and view synthesis. "Scene geometry is reconstructed via a relightable Gaussian Splatting pipeline (R3DGS) \cite{kerbl:23, gao:24}"

- Grid-based supersampling: sampling multiple sub-pixel points on a grid to reduce aliasing and improve stability. "Grid-based supersampling per pixel is used to ensure stable Gaussian hits."

- Inverse rendering: recovering scene parameters (materials, lighting, geometry) from images by optimizing to match renderings to observations. "Classical inverse rendering requires strong assumptions about lighting and exposure and remains fragile when materials vary spatially."

- IRGS: a Gaussian-based inverse rendering method that extends 3DGS with 2D Gaussians and deferred shading. "IRGS \cite{gu:25} extends this with 2D Gaussians and deferred shading for improved appearance modeling."

- LPIPS: a perceptual image similarity metric based on deep features. "We evaluate material estimation using PSNR, SSIM~\cite{wang2004ssim}, and LPIPS~\cite{zhang2018lpips} between predicted and ground-truth material maps."

- NeRF: Neural Radiance Fields, a neural representation for photorealistic view synthesis. "NeRF and Gaussian Splatting providing strong foundations for radiance-based scene representations"

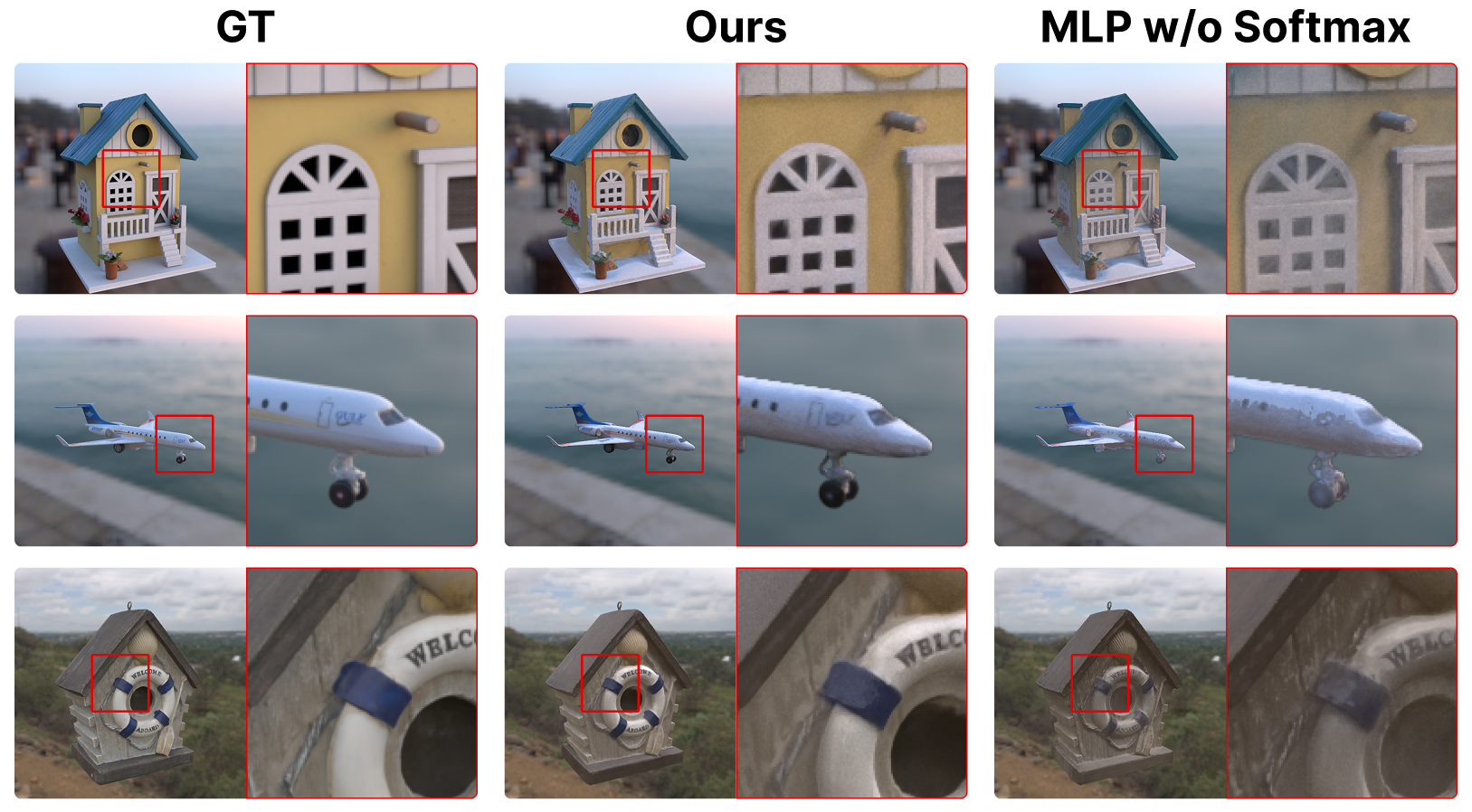

- Neural Merger: a lightweight MLP-based module that fuses multi-view material estimates via learned weights and softmax. "To reduce inconsistencies across views, we introduce the Neural Merger, which predicts weights per view for the material parameters..."

- Opacity (α): a per-Gaussian parameter controlling contribution/visibility; here related to density in Gaussian ray tracing. "Because the opacity α used in Gaussian Splatting~\cite{kerbl:23} does not directly correspond to a physical density, we adopt the formulation by Moenne-Loccoz et al.~\cite{moenne-loccoz:24}"

- Physically Based Rendering (PBR): rendering paradigm using physically grounded material and lighting models. "physically based rendering (PBR) properties such as albedo, roughness, and metallicity."

- Positional encoding: a technique to encode spatial coordinates with sinusoidal features to help neural networks capture position-dependent patterns. "encoded using a positional encoding."

- PSNR: Peak Signal-to-Noise Ratio, a fidelity metric for image reconstruction. "We choose DiffusionRenderer because it achieves about thirty percent higher PSNR than the other methods."

- Rasterization: converting primitives (here Gaussians with materials) into pixel-based images or maps. "which are then rasterized into material maps."

- Relighting: rendering objects under novel illumination conditions by separating materials from lighting. "Editing and relighting real scenes captured with casual cameras is central to many vision and graphics applications."

- Signed distance field (SDF): a scalar field giving distance to the nearest surface; useful for geometry and physically plausible shading. "where coupling with signed distance fields (SDFs) improves physical plausibility"

- Softmax: a normalization function mapping scores to a probability distribution over views. "A softmax function then converts these outputs into normalized weights:"

- Specular: mirror-like reflective component of materials that produces highlights. "particularly for specular objects."

- SSIM: Structural Similarity Index, a perceptual metric assessing structural fidelity between images. "We evaluate material estimation using PSNR, SSIM~\cite{wang2004ssim}, and LPIPS..."

- svBRDF (spatially varying BRDF): BRDFs whose parameters change across the surface to capture local material variation. "Spatially varying BRDFs (svBRDFs) have long been studied"
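As a worked example for one of the metrics above, PSNR can be computed from the mean squared error as 10 · log10(MAX² / MSE). The sketch below assumes pixel values in [0, 1] stored in flat Python lists. The function name `psnr` and the sample values are illustrative.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak Signal-to-Noise Ratio in decibels for two equal-length sequences."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0.0:
        return math.inf  # identical inputs: no error at all
    return 10.0 * math.log10(max_value ** 2 / mse)


# Each pixel is off by 0.1, so MSE = 0.01 and PSNR is about 20 dB.
score = psnr([0.5, 0.5], [0.6, 0.4])
```

Higher values mean the predicted map is closer to the reference. Because the scale is logarithmic, halving the mean squared error adds about 3 dB.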

Practical Applications

Immediate Applications

Below are specific, actionable use cases that can be deployed with today’s toolchain by leveraging MatSpray’s fusion of 2D diffusion-based PBR priors with 3D Gaussian Splatting and the Neural Merger for multi-view consistency.

- Sector: Games/Film/VFX — Automated relightable prop digitization

  - What: Convert casual multi-view captures of props into high-quality, fully relightable PBR assets (base color, roughness, metallic) with multi-view-consistent maps, particularly robust on specular/metallic objects.

  - Tools/workflow: Capture → camera poses (COLMAP) → 2D PBR prediction (e.g., DiffusionRenderer) → MatSpray (Gaussian ray tracing + Neural Merger) → export to USD/GLTF with PBR → DCC/engine import (Blender/Unreal/Unity).

  - Assumptions/dependencies: Multi-view images with reasonable coverage; accurate camera calibration; availability of a GPU; opaque surfaces; quality of the chosen 2D material predictor influences fidelity.

- Sector: E‑commerce/Product visualization — Scalable 3D product assets for web/AR

  - What: Build consistent, relightable assets from standard product photo sets to power 360 viewers, AR try-ons, and lighting-consistent catalogs without hand-authored materials.

  - Tools/workflow: Photo studio capture → 2D PBR diffusion → MatSpray → automated export and CDN deploy to web viewers (GLB/USDC).

  - Assumptions/dependencies: License to capture; standard, repeatable studio capture; cloud or on-prem GPUs for throughput; materials are opaque or minimally translucent.

- Sector: Advertising/Packaging — Rapid relighting for campaign variants

  - What: Generate photoreal composites by relighting captured products under campaign-specific HDRIs; iterate creative lighting without re-shooting.

  - Tools/workflow: MatSpray asset → HDRI library → batch renders for variants → compositor pipeline.

  - Assumptions/dependencies: Accurate environment maps; consistent geometry; limited support for transparent/volumetric materials.

- Sector: Interior design/AEC — Furniture and fixture digitization

  - What: Turn on-site captures of furniture/fixtures into relightable assets that maintain accurate metallic/roughness for design reviews and virtual staging.

  - Tools/workflow: Mobile multi-view capture → MatSpray → DCC import → scene relighting with project-specific HDRIs.

  - Assumptions/dependencies: Sufficient viewpoints; controlled exposure helps; large/glossy surfaces benefit from normal guidance as described (R3DGS + Diffusion normals).

- Sector: Cultural Heritage — Artifact preservation with physically meaningful materials

  - What: Produce relightable museum artifacts that preserve realistic surface appearance (without baked-in lighting) for education and digital exhibits.

  - Tools/workflow: Capture in galleries → MatSpray → web/VR exhibit deployment.

  - Assumptions/dependencies: Permission and capture logistics; opaque materials; high-frequency details may need more views.

- Sector: XR/AR content — Fast asset creation from casual capture

  - What: Convert handheld phone captures into PBR assets suitable for AR apps with convincing relighting across environments.

  - Tools/workflow: Mobile capture → cloud MatSpray service → AR asset export (USDZ/GLB).

  - Assumptions/dependencies: Cloud compute; accurate camera poses; sufficient coverage.

- Sector: Software tooling — DCC and engine integrations

  - What: Plugins and nodes for Blender/Unreal/Unity to “spray” 2D diffusion materials onto Gaussian reconstructions with a softmax-based Neural Merger for consistency.

  - Tools/workflow: “MatSpray for Blender/Unreal” add-on; USD/MaterialX export; batch processing UI.

  - Assumptions/dependencies: Maintenance with evolving diffusion models; GPU acceleration.

- Sector: Research/Academia — Inverse rendering and dataset generation

  - What: Create multi-view-consistent PBR supervision datasets; benchmark 2D diffusion material models by lifting them into 3D; test environment map optimization with constrained PBR materials.

  - Tools/workflow: Controlled datasets → MatSpray outputs (PBR + normals) → quantitative and perceptual evaluation.

  - Assumptions/dependencies: Ground-truth or reference renderings for metrics; reproducible capture.

- Sector: Photogrammetry/Scanning — Material-aware scan post-processing

  - What: Enhance photogrammetry pipelines by replacing baked textures with PBR parameter maps, improving relightability and downstream editing.

  - Tools/workflow: Photogrammetry mesh or Gaussian Splatting → MatSpray material fusion → PBR texture baking to mesh UVs.

  - Assumptions/dependencies: Mesh/Gaussian alignment; robust UV baking; opaque materials.

- Sector: On-set Previs — Rapid prop capture and relighting on set

  - What: Provide previs-ready relightable assets from quick multi-view shoots; iterate look-dev under stage HDRIs within minutes.

  - Tools/workflow: On-set capture → fast MatSpray run (≈25 minutes on RTX 4090) → previs tool ingest.

  - Assumptions/dependencies: Access to compute on set or edge; fewer views may reduce fidelity.

- Sector: Synthetic Data for Vision — Physically plausible appearance for data generation

  - What: Use realistic PBR assets to reduce the domain gap when training detectors/segmenters by rendering under diverse, physically correct lighting.

  - Tools/workflow: MatSpray assets → randomized HDRIs and cameras → dataset renders with labels.

  - Assumptions/dependencies: Labeling pipeline; PBR fidelity more critical than sub-mm geometry detail for many tasks.

- Sector: Lighting/Look‑dev — Constrained environment map estimation

  - What: Improve robustness of HDRI estimation during inverse rendering by constraining materials via the Neural Merger’s softmax interpolation rather than free-floating parameters.

  - Tools/workflow: MatSpray refinement stage with deferred shading; compare L1/SSIM vs ground truth.

  - Assumptions/dependencies: Stable geometry; well-exposed input images.

Long-Term Applications

These opportunities are promising but require further research, scaling, or productization (e.g., broader material models, mobile deployment, or scene-level robustness).

- Sector: Mobile/Edge — Real-time or near‑real-time on-device capture-to-PBR

  - What: Smartphone apps that produce relightable PBR assets on-device or with minimal cloud compute.

  - Tools/workflow: Optimized diffusion predictors; quantized MatSpray; incremental Gaussian updates.

  - Assumptions/dependencies: Model compression; efficient camera pose estimation; thermal/power constraints.

- Sector: Scene-scale Relightable Reconstruction (Indoors/Outdoors)

  - What: Extend from single objects to entire rooms/facades with consistent, spatially varying materials and HDRI estimation.

  - Tools/workflow: Multi-room capture; hierarchical Gaussians; global illumination-aware refinement.

  - Assumptions/dependencies: Handling indirect lighting, shadows, and interreflections; scalable memory/compute; robust occlusion reasoning.

- Sector: Advanced Material Models — Beyond metallic/roughness

  - What: Add normal/displacement, clearcoat, anisotropy, sheen, subsurface scattering, and translucency; move toward full Disney BRDF or SVBRDF/BTF.

  - Tools/workflow: Extended diffusion predictors; multi-channel Neural Merger heads; volumetric/participating media handling.

  - Assumptions/dependencies: Training data diversity; physics-aware losses; capture protocols for non-opaque materials.

- Sector: Language‑guided material editing and semantic part controls

  - What: Couple consistent material/geometry fields with language features for intuitive edits (e.g., “make the handle matte”); leverage proposed future segmentation.

  - Tools/workflow: Vision‑LLMs + MatSpray outputs; editable masks; constrained optimization.

  - Assumptions/dependencies: Reliable part segmentation; aligned language embeddings; UX and safety constraints.

- Sector: Manufacturing/Metrology — Surface finish QA from casual capture

  - What: Estimate roughness/metallic proxies for pass/fail checks of coatings and finishes without lab-grade devices.

  - Tools/workflow: Standardized capture rigs; MatSpray material inference; tolerance dashboards.

  - Assumptions/dependencies: Calibration protocols; domain adaptation for specific materials; ground-truth correlation studies.

- Sector: Robotics/Industrial Simulation — Physics-grounded appearance for sim‑to‑real

  - What: Improve sim realism for vision policies and photometric sensors using accurate reflectance; support friction/appearance estimation links.

  - Tools/workflow: MatSpray assets → simulators (Isaac/Unreal/Unity) → policy training.

  - Assumptions/dependencies: Mapping roughness/metallic to sensor models; large‑scale asset ingestion.

- Sector: Autonomous Driving/Urban Twins — City‑scale material mapping

  - What: Build relightable digital twins of streetscapes for sensor simulation and perception research.

  - Tools/workflow: Vehicle-mounted capture; distributed processing; material atlases.

  - Assumptions/dependencies: Massive compute; dynamic object handling; weather/time‑of‑day variability.

- Sector: Live Mixed Reality — On‑the‑fly materialization of surroundings

  - What: Continuously estimate surroundings’ PBR to achieve lighting-consistent MR insertions and occlusion with minimal drift.

  - Tools/workflow: SLAM + MatSpray-like fast material fusion; rolling HDRI estimation.

  - Assumptions/dependencies: Low-latency processing; temporal consistency; moving light sources.

- Sector: Asset Marketplaces — Automatic standardization and verification of PBR assets

  - What: Normalize community assets to consistent PBR conventions; verify metallic/roughness plausibility; flag baked-in lighting.

  - Tools/workflow: Marketplace ingestion pipeline with MatSpray checks; metrics (PSNR/SSIM/LPIPS proxies).

  - Assumptions/dependencies: Reference capture or priors; content provenance and consent.

- Sector: Policy/Standards — Guidelines for capture, licensing, and energy budgeting

  - What: Establish capture standards for PBR fidelity, recommended HDRI sets, disclosure of generative priors used, and sustainability reporting for large-scale digitization.

  - Tools/workflow: Industry consortia (USD/MaterialX) incorporating PBR provenance fields; audit trails.

  - Assumptions/dependencies: Cross‑industry coordination; legal/IP frameworks; data governance.

- Sector: Education and Training — Interactive material literacy

  - What: Learning kits where students capture objects, produce PBR assets, and explore how illumination interacts with materials.

  - Tools/workflow: Classroom-friendly capture+processing bundles; curated HDRIs; lesson plans.

  - Assumptions/dependencies: Simplified UI; limited compute; curated assets.

Common assumptions and dependencies across applications

- Inputs: Multi-view RGB images with adequate coverage and accurate camera poses (e.g., via COLMAP).

- Models: Availability and reliability of a 2D diffusion-based PBR predictor; domain gaps (e.g., unusual materials) may degrade quality.

- Compute: GPU acceleration for reasonable turnaround; cloud deployment for scale.

- Materials: Best for opaque, non-transmissive surfaces; extensions needed for glass, subsurface scattering, or volumetrics.

- Geometry: Quality depends on Gaussian Splatting reconstruction; specular objects benefit from normal guidance; small/flat Gaussians can be missed.

- Lighting: Environment map optimization is synergistic but assumes relatively stable exposure; extreme lighting changes add difficulty.

- Legal/Operational: Rights to capture and use images; privacy/brand compliance; HDRI licensing; reproducibility of capture protocols.

By combining swappable 2D “world material knowledge” with 3D Gaussian ray tracing and a softmax-based Neural Merger, MatSpray reduces manual material authoring time, improves relightability, and accelerates asset pipelines—making many of the immediate applications above both technically and economically compelling today.
