- The paper introduces an end-to-end multi-view PBR diffusion model that generates illumination-invariant textures while mitigating artifacts.

- It leverages reference attention, multi-channel aligned attention, and consistency-regularized training to enhance spatial alignment and texture fidelity.

- Evaluations on FID, LPIPS, and CLIP-I show that it outperforms state-of-the-art methods, and qualitative results are globally consistent and realistic.

MaterialMVP: Illumination-Invariant Material Generation via Multi-view PBR Diffusion

Introduction

"MaterialMVP: Illumination-Invariant Material Generation via Multi-view PBR Diffusion" introduces an approach for generating physically-based rendering (PBR) textures from a 3D mesh and an image prompt. The model targets the main difficulties of multi-view material synthesis, producing textures that are illumination-invariant and geometrically consistent across views. To mitigate artifacts and resolve material inconsistencies, MaterialMVP combines a consistency-regularized training strategy with dedicated attention mechanisms (Reference Attention and Multi-Channel Aligned Attention).

Methodology

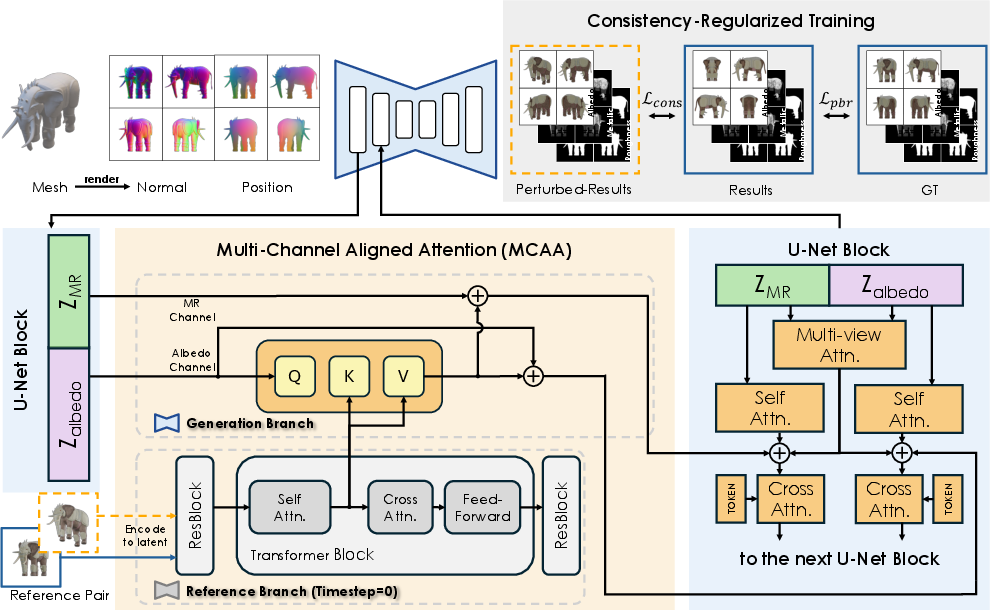

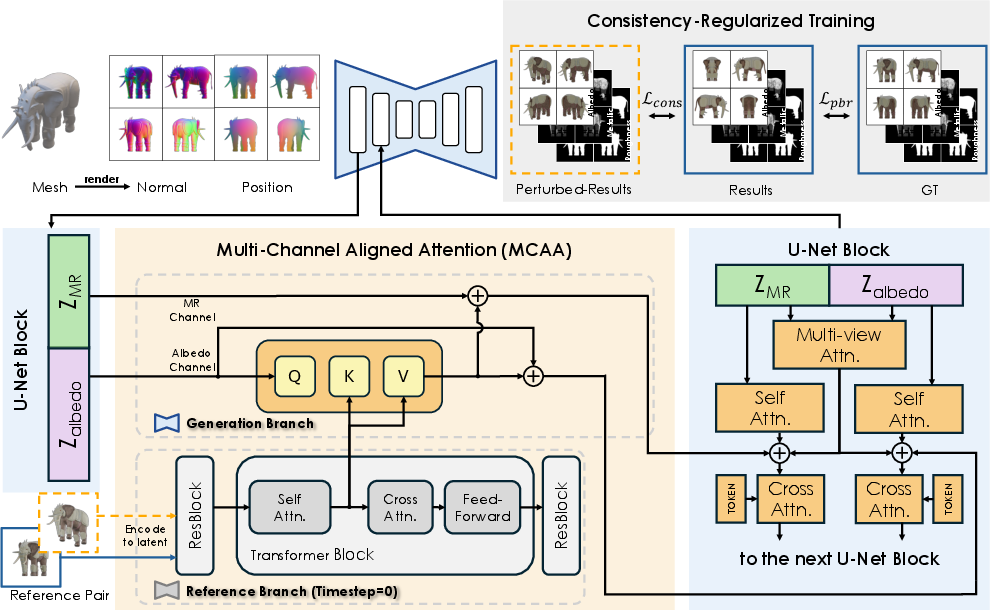

MaterialMVP employs an end-to-end diffusion model framework designed to generate high-quality multi-view PBR textures. The methodology hinges on several key components:

- Reference Attention and Dual-Channel Material Generation: Albedo and metallic-roughness (MR) textures are generated in separate channels, while Reference Attention encodes informative features from the input reference image so that the generated maps stay precisely aligned with it.

- Consistency-Regularized Training: This training strategy enforces stability across different viewpoints and illumination conditions. By training on pairs of reference images with variations in camera pose and lighting, the model learns to generate consistent PBR outputs across diverse scenarios.

- Multi-Channel Aligned Attention (MCAA): MCAA is introduced to synchronize the information between the albedo and MR channels, directly addressing the misalignment issues in PBR texture maps.
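To make the attention components more concrete, the following NumPy sketch shows one plausible reading of Reference Attention (view tokens attending to their own tokens concatenated with reference-image tokens) and of an MCAA-style alignment step (attention weights computed once from the albedo branch and reused for the MR branch). The function names, the single-head formulation, and the weight-sharing scheme are assumptions for illustration, not the paper's exact layers.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_weights(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    # Scaled dot-product attention weights, shape (num_queries, num_keys).
    return softmax(q @ k.T / np.sqrt(q.shape[-1]))


def reference_attention(h_view, h_ref, w_q, w_k, w_v):
    """Hypothetical Reference Attention: each view token attends to the view's
    own tokens concatenated with the reference-image tokens."""
    q = h_view @ w_q
    context = np.concatenate([h_view, h_ref], axis=0)
    weights = attention_weights(q, context @ w_k)
    return weights @ (context @ w_v)


def aligned_dual_channel_attention(h_albedo, h_mr, h_ref, w_q, w_k, w_v):
    """Hypothetical MCAA-style step: attention weights are computed once from
    the albedo branch and reused for the MR branch, so both channels gather
    reference features from the same spatial locations."""
    weights = attention_weights(h_albedo @ w_q, h_ref @ w_k)
    ref_values = h_ref @ w_v
    out_albedo = h_albedo + weights @ ref_values  # residual update, albedo
    out_mr = h_mr + weights @ ref_values          # same weights, MR channel
    return out_albedo, out_mr, weights
```

Sharing one weight matrix is what ties the two channels together in this sketch: any reference location the albedo branch attends to contributes in the same proportion to the MR branch, so the two maps cannot drift apart spatially through this step.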

Figure 1: Overview of MaterialMVP, which takes a 3D mesh and a reference image as input and generates high-quality PBR textures through multi-view PBR diffusion. The albedo and MR channels are aligned via MCAA, which reduces artifacts, while Consistency-Regularized Training removes baked-in illumination and resolves material inconsistencies across multi-view PBR textures.
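Consistency-Regularized Training can be illustrated with a toy objective. The sketch below assumes an epsilon-prediction diffusion loss evaluated under two reference conditions (for example, embeddings of two reference images of the same asset with different camera pose and lighting), plus a penalty on the disagreement between the two noise predictions. The linear `toy_denoiser`, the weight `lam`, and the exact form of the consistency term are hypothetical stand-ins; the paper's actual loss may differ.

```python
import numpy as np


def toy_denoiser(x_t, cond, weight):
    # Hypothetical stand-in for the diffusion U-Net: predicts noise from the
    # noisy latent and a reference-image embedding (broadcast over rows).
    return x_t @ weight + cond


def consistency_regularized_loss(x0, noise, alpha_bar, cond_a, cond_b, weight, lam=0.1):
    """Assumed form: epsilon-prediction loss under each of two reference
    conditions, plus lam * squared disagreement between the predictions."""
    # Forward diffusion: the same noisy latent is shared by both conditions.
    x_t = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise
    eps_a = toy_denoiser(x_t, cond_a, weight)
    eps_b = toy_denoiser(x_t, cond_b, weight)
    diffusion = np.mean((eps_a - noise) ** 2) + np.mean((eps_b - noise) ** 2)
    consistency = np.mean((eps_a - eps_b) ** 2)
    return diffusion + lam * consistency, consistency
```

Because both predictions share the same noisy latent and target noise, the consistency term can only be driven to zero if the model's output stops depending on the pose and lighting differences between the two references, which is the invariance the training strategy aims for.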

Results

MaterialMVP's performance is quantitatively superior to prior methods when evaluated on metrics such as FID and LPIPS, reflecting its capacity to generate textures that closely resemble true-to-life appearances. Furthermore, the model excels in semantic alignment with input references, as evidenced by strong CLIP-I metrics. Qualitative comparisons further underscore MaterialMVP's realism and cohesion across different lighting conditions and viewpoints.
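Two of the metrics cited above can be computed from precomputed feature vectors. In the sketch below, `clip_i` averages the cosine similarity between image embeddings of rendered views and the reference image, and `frechet_distance` implements the Fréchet distance between Gaussians fitted to two feature sets, which is the formula behind FID. The feature extractors (a CLIP image encoder for CLIP-I, Inception-v3 for FID) are not included, and the helper names are hypothetical. LPIPS is omitted because it requires a pretrained network.

```python
import numpy as np


def clip_i(render_emb, ref_emb):
    """CLIP-I-style score: mean cosine similarity between embeddings of
    rendered views (rows) and the reference-image embedding."""
    r = render_emb / np.linalg.norm(render_emb, axis=-1, keepdims=True)
    q = ref_emb / np.linalg.norm(ref_emb)
    return float(np.mean(r @ q))


def _psd_sqrt(s):
    # Square root of a symmetric PSD matrix via eigendecomposition.
    vals, vecs = np.linalg.eigh(s)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def frechet_distance(feat_a, feat_b):
    """||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 (S_a S_b)^{1/2}) for Gaussians
    fitted to two feature sets (rows are samples)."""
    mu_a, mu_b = feat_a.mean(axis=0), feat_b.mean(axis=0)
    s_a = np.cov(feat_a, rowvar=False)
    s_b = np.cov(feat_b, rowvar=False)
    root_a = _psd_sqrt(s_a)
    # Tr((S_a S_b)^{1/2}) equals Tr((S_a^{1/2} S_b S_a^{1/2})^{1/2}); the inner
    # matrix is symmetric PSD, so its eigenvalues are real and non-negative.
    middle = root_a @ s_b @ root_a
    tr_sqrt = np.sum(np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)))
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(s_a) + np.trace(s_b) - 2.0 * tr_sqrt)
```

Higher CLIP-I indicates closer semantic agreement with the reference, while lower FID indicates that the feature distribution of the generated renders is closer to that of the real images.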

- Texture Fidelity: The model generates textures with high coherence and realism, significantly outperforming other state-of-the-art (SOTA) techniques in terms of avoiding artifacts like misaligned patterns and inconsistent lighting (Figures 3 and 4).

- Illumination Invariance: Whereas other methods bake unwanted lighting effects into their textures, MaterialMVP produces illumination-invariant textures, so rendered assets respond correctly when the environment lighting changes (Figure 2).

- Global Consistency: By leveraging dual-reference conditioning and MCAA, MaterialMVP achieves superior global texture consistency, circumventing common pitfalls of chaotic patterns and texture seams often found in alternative approaches.

Figure 3: Qualitative comparison of text-conditioned 3D generation methods and our approach. Our method achieves superior fidelity in texture and pattern coherence, avoiding blur, inconsistent lighting, and misaligned patterns found in other approaches.
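Why baked-in lighting is a problem, as noted under Illumination Invariance, can be seen with a diffuse-only shading model. The sketch below assumes Lambertian shading and ignores the metallic and roughness channels; `render` and `lambert_shading` are hypothetical helpers. A texture that already contains the shading from one light keeps that shading when relit, whereas a true albedo relights correctly.

```python
import numpy as np


def lambert_shading(normals, light_dir):
    # Diffuse (Lambertian) term max(0, n . l) for each surface point.
    l = np.asarray(light_dir, dtype=float)
    l = l / np.linalg.norm(l)
    return np.clip(normals @ l, 0.0, None)


def render(texture, normals, light_dir):
    # Diffuse-only render: per-point RGB texture scaled by its shading term.
    # Metallic/roughness are deliberately ignored in this simplification.
    return texture * lambert_shading(normals, light_dir)[:, None]


# Three surface points facing +z, +x, and +y, all with the same albedo.
normals = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
albedo = np.full((3, 3), 0.8)
light_a, light_b = [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]

# A "baked" texture stores the appearance under light_a instead of the albedo.
baked = render(albedo, normals, light_a)

relit_true = render(albedo, normals, light_b)   # correct relighting
relit_baked = render(baked, normals, light_b)   # old shading is multiplied in again
print(np.allclose(relit_true, relit_baked))     # prints False
```

The point facing the new light should turn bright, but in the baked texture it stays dark because it was unlit under the original light; an illumination-invariant albedo avoids this.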

Ablation Studies

Ablation experiments confirm the contribution of each component of the MaterialMVP architecture. Adding the consistency loss and MCAA substantially improves the alignment accuracy and quality of the PBR textures, reducing misalignment and blurring artifacts.

- Consistency Loss Impact: When omitted, textures exhibit exaggerated metallic properties, demonstrating the necessity of consistency regularization for accurate material synthesis.

- MCAA Impact: The lack of MCAA leads to misaligned texture maps, highlighting its critical role in achieving artifact-free texture alignment across channels.

Conclusion

MaterialMVP sets a new benchmark for generating multi-view PBR textures with high fidelity and consistency. Its illumination-invariant approach substantially improves the generation of realistic textures under varying conditions, supporting the scalable creation of digital assets. Future work could adapt this framework for real-time applications and investigate additional modalities for reference conditioning. The techniques developed here also pave the way for further improvements in rendering and gaming technologies, where realistic material representation is crucial.