- The paper presents a hybrid approach that fuses generative single-view priors with a parametric material aggregation scheme for robust 3D material reconstruction.

- It performs multi-view probabilistic optimization, minimizing the KL-divergence between a Laplacian 3D material distribution (parameterized by per-object affine transforms) and the aggregated single-view Laplacian mixtures, which preserves fine details.

- Experiments show significant improvements in PSNR, SSIM, LPIPS, and L2 error on both synthetic and real datasets over SOTA baselines (NeILF++, FIPT, IRIS).

Intrinsic Image Fusion for Multi-View 3D Material Reconstruction

Introduction

"Intrinsic Image Fusion for Multi-View 3D Material Reconstruction" (2512.13157), abbreviated IIF below, addresses the reconstruction of high-fidelity physically-based rendering (PBR) materials at room scale from multi-view images and the corresponding 3D geometry. Traditional inverse rendering methods rely heavily on analysis-by-synthesis and path tracing, so they are limited both by computational cost and by the ill-posed nature of radiometric decomposition, especially at scale. Single-view material estimation has improved with recent diffusion-based probabilistic models, but it still suffers from prediction ambiguity and cross-view inconsistency. The paper proposes a hybrid strategy: it fuses robust 2D priors from generative single-view predictors through a parametric material aggregation scheme, then applies regularized inverse rendering optimization to obtain multi-view-consistent, physically faithful BRDF textures suitable for relighting and content creation.

Methodology

Parametric PBR Aggregation of Single-View Priors

The key contribution is the distillation of single-view decomposition priors—sampled via RGBX [RGBX], a material-aware diffusion model—into a parametric material space, imposing physical consistency and reducing solution ambiguity. For each view, multiple decomposition candidates (albedo, roughness, metallic) are generated to cover the span of plausible explanations given the underconstrained nature of reflectance-light separation.

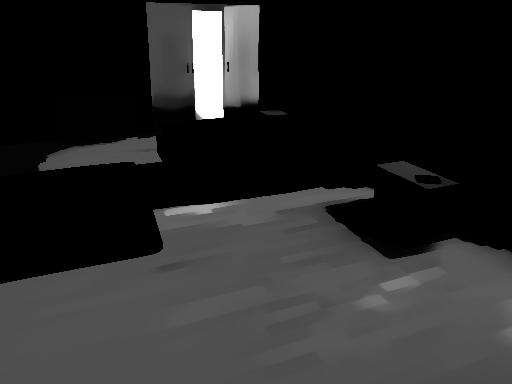

Figure 1: Parametric aggregation transitions from inconsistent single-view and multi-view predictions to physically grounded, sharp multi-view fused PBR maps.

Rather than naïve averaging, which introduces cross-view seams and blurring, the solution fits a low-dimensional affine transform per object, augmented by a Laplacian-distributed model for high-frequency pattern uncertainty. This enforces plausible cross-view agreement while preserving fine details.
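As a rough illustration of the per-object fit, the sketch below estimates a scalar gain and offset that map a base texture onto one single-view prediction under a Laplace noise model. Maximum likelihood under Laplace noise is least-absolute-deviation regression, which is solved here with iteratively reweighted least squares. The function name `fit_affine_laplace`, the scalar (per-channel) form of the transform, and the IRLS solver are assumptions made for illustration, not details taken from the paper.

```python
import numpy as np

def fit_affine_laplace(base, target, iters=50, eps=1e-6):
    """Fit target ~= a * base + b under Laplace noise (L1 regression via IRLS).

    Hypothetical helper: `base` and `target` are the texels of one object
    in one channel. Returns gain a, offset b, and the Laplace scale.
    """
    base = np.asarray(base, dtype=np.float64).ravel()
    target = np.asarray(target, dtype=np.float64).ravel()
    A = np.stack([base, np.ones_like(base)], axis=1)

    # Ordinary least squares as the starting point.
    params, *_ = np.linalg.lstsq(A, target, rcond=None)

    for _ in range(iters):
        resid = target - A @ params
        # IRLS weights 1/|r| turn the squared loss into an absolute loss.
        w = 1.0 / np.maximum(np.abs(resid), eps)
        sw = np.sqrt(w)
        params, *_ = np.linalg.lstsq(A * sw[:, None], target * sw, rcond=None)

    a, b = params
    # Maximum-likelihood Laplace scale is the mean absolute residual.
    scale = float(np.mean(np.abs(target - (a * base + b))))
    return float(a), float(b), scale
```

Because the absolute loss grows only linearly with the residual, a handful of inconsistent texels pulls the fit far less than it would under a squared loss, which is one reason a Laplace model suits high-frequency pattern uncertainty.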

Multi-View Probabilistic Optimization

The fusion proceeds via distribution matching: the scene-wide PBR texture is modeled as a Laplacian distribution parameterized by per-object affine transforms and neural base textures. Consistency across views is achieved by minimizing the KL-divergence between the predicted 3D material distributions and the aggregated single-view Laplacian mixtures. Assignment logits act as soft selectors to identify the most plausible single-view prediction per object per view, robustly rejecting outlier samples.

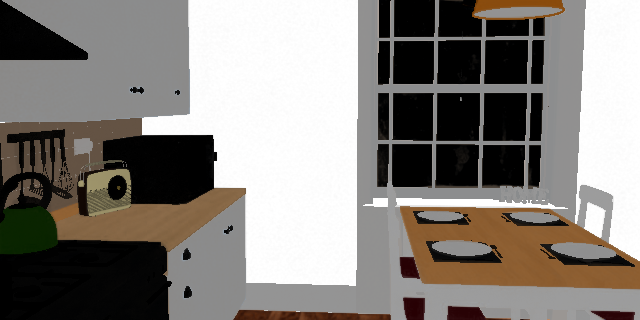

Figure 2: Cross-view aggregation maintains texture consistency and selectively preserves high spatial frequency content.

The parameter space to be optimized is dramatically reduced—only per-object transforms and assignment values rather than full textures—resulting in lower susceptibility to Monte Carlo noise during the inverse path tracing phase.
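The distribution-matching idea in this subsection can be sketched with the closed-form KL-divergence between two Laplace distributions, weighted by a softmax over assignment logits. This is a simplified surrogate under stated assumptions: the KL to a full mixture has no closed form, so the sketch sums per-candidate KL terms instead, and the direction of the divergence, the function names, and the per-texel averaging are all illustrative choices rather than the paper's exact loss.

```python
import numpy as np

def laplace_kl(mu_p, b_p, mu_q, b_q):
    """Closed-form KL( Laplace(mu_p, b_p) || Laplace(mu_q, b_q) ).

    KL = log(b_q / b_p) + (|d| + b_p * exp(-|d| / b_p)) / b_q - 1,
    with d = mu_p - mu_q.
    """
    d = np.abs(mu_p - mu_q)
    return np.log(b_q / b_p) + (d + b_p * np.exp(-d / b_p)) / b_q - 1.0

def softmax(logits):
    z = np.asarray(logits, dtype=np.float64)
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()

def soft_assignment_loss(mu_3d, b_3d, cand_mu, cand_b, logits):
    """Softmax-weighted sum of KL terms for one object in one view.

    Hypothetical surrogate: each single-view candidate k contributes
    KL(3D distribution || candidate k), averaged over the object's texels.
    """
    w = softmax(logits)
    kls = np.array([
        np.mean(laplace_kl(mu_3d, b_3d, m, b))
        for m, b in zip(cand_mu, cand_b)
    ])
    return float(w @ kls), w
```

In this simplified form, lowering the logit of a candidate that disagrees with the fused texture reduces the loss, which is the mechanism by which soft selectors can down-weight outlier samples.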

Regularized Inverse Rendering

The final refinement stage employs factorized inverse path tracing [FIPT], using photometric synthesis under the Cook-Torrance BRDF model [CookTorrance]. Scene lighting is represented by per-triangle emission, optimized in an alternating scheme for stable convergence. Notably, the optimization is regularized to converge on the parametric manifold defined by single-view priors, shielding the process from noise-induced artifacts such as baked-in lighting and incorrect specular attribution—a persistent flaw in previous works.
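For readers unfamiliar with the shading model, the following sketch evaluates a Cook-Torrance BRDF from albedo, roughness, and metallic values for a single light and view direction. The source names only the Cook-Torrance model, so the specific terms used here (GGX normal distribution, Schlick-GGX geometry term, Schlick Fresnel, a 0.04 dielectric base reflectance, and a Lambertian diffuse lobe) are common conventions assumed for illustration and may differ from the paper's implementation.

```python
import numpy as np

def normalize(v):
    v = np.asarray(v, dtype=np.float64)
    return v / np.linalg.norm(v)

def cook_torrance(n, v, l, albedo, roughness, metallic):
    """Evaluate an RGB Cook-Torrance BRDF value (assumed GGX/Schlick variant).

    n, v, l: surface normal, view direction, light direction (3-vectors).
    albedo: RGB base color; roughness, metallic: scalars in [0, 1].
    """
    n, v, l = normalize(n), normalize(v), normalize(l)
    h = normalize(v + l)  # half vector
    albedo = np.asarray(albedo, dtype=np.float64)

    n_l = max(float(n @ l), 0.0)
    n_v = max(float(n @ v), 1e-4)
    n_h = max(float(n @ h), 0.0)
    v_h = max(float(v @ h), 0.0)

    # Clamp roughness so the terms below stay finite.
    alpha = max(roughness, 0.03) ** 2

    # GGX normal distribution function.
    denom = n_h ** 2 * (alpha ** 2 - 1.0) + 1.0
    D = alpha ** 2 / (np.pi * denom ** 2)

    # Schlick-GGX geometry term with k = alpha / 2.
    k = alpha / 2.0
    G = (n_l / (n_l * (1.0 - k) + k)) * (n_v / (n_v * (1.0 - k) + k))

    # Schlick Fresnel; metals take their base reflectance from albedo.
    f0 = 0.04 * (1.0 - metallic) + albedo * metallic
    F = f0 + (1.0 - f0) * (1.0 - v_h) ** 5

    specular = D * G * F / (4.0 * n_l * n_v + 1e-8)
    diffuse = (1.0 - metallic) * albedo / np.pi
    return diffuse + specular
```

The coupling between the three parameters is visible in the last lines: raising `metallic` removes diffuse energy and tints the specular lobe with albedo, which is why photometric loss alone can trade one parameter against another and why a prior-based regularizer helps.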

Figure 3: IIF pipeline overview: multi-view images and reconstructed geometry flow through probabilistic single-view estimation, parametric aggregation, and inverse rendering optimization.

Experimental Analysis

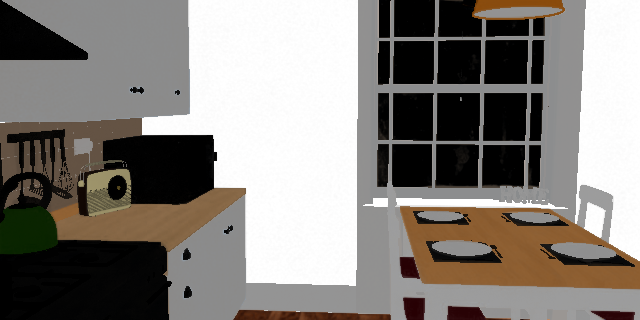

Quantitative and Qualitative Evaluation

IIF's efficacy is supported by comprehensive quantitative metrics: it outperforms SOTA baselines (NeILF++ [NeilfPP], FIPT [FIPT], IRIS [IRIS]) across the albedo, roughness, metallic, and emission channels on synthetic [BitterliScenes] and real-world ScanNet++ [ScanNet++] scenes. Significant improvements are observed in PSNR, SSIM, LPIPS, and L2 error, indicating gains in both numerical accuracy and perceptual quality.

Figure 4: Synthetic comparisons show reduced bias and fewer artifacts in IIF versus SOTA methods, with sharp reconstructions and minimal specular misattribution.

Figure 5: Real-world comparisons highlight robustness to incomplete/noisy geometry, with IIF delivering artifact-free contours and consistent material estimates.
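Of the reported metrics, PSNR and L2 error are simple enough to state directly. The sketch below assumes that "L2 error" means the mean squared error over all texels and channels and that material maps are stored in the range [0, 1]; both are assumptions, since the summary does not specify the exact definitions. SSIM and LPIPS need dedicated implementations and are omitted.

```python
import numpy as np

def l2_error(pred, gt):
    """Mean squared error between a predicted and a reference map."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    return float(np.mean((pred - gt) ** 2))

def psnr(pred, gt, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE)."""
    mse = l2_error(pred, gt)
    if mse == 0.0:
        return float("inf")  # identical maps
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

Higher PSNR and lower L2 error both indicate a closer match to the reference map.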

Ablation and Model Complexity

The per-image-per-object parametric model demonstrates superior expressivity over simpler per-image aggregation or per-texel approaches, with the latter suffering from spatial inconsistencies and pattern loss. Increasing the number of sampled priors per view further improves fused map quality, validating the probabilistic matching framework's robustness against over-smoothing.

Figure 6: Visualization of parametric model expressivity: object-level fitting corrects inter-object reflectance and preserves pattern fidelity.

Figure 7: Relighting and rendering applications validate the utility of IIF's clean, physically consistent decompositions for downstream photorealistic synthesis.

Implications and Future Directions

This work establishes a new paradigm for room-scale inverse rendering, emphasizing the utility of distilling priors from powerful generative models into explicit, low-dimensional parametric spaces for noise-resilient, physically sound multi-view material fusion. The framework enables high-quality material reconstruction for relighting, editing, and object insertion, with practical impact on augmented reality, digital twin creation, and advanced scene understanding.

Significant open directions remain: joint optimization of geometry and material priors, leveraging uncertainty measures for selective prior rejection, and tighter integration with pre-trained generative models for direct end-to-end optimization. As generative and probabilistic models mature, their fusion with physically-constrained inverse rendering architectures can be expected to drive further advances in scalable, faithful scene reconstruction for both academic and industrial applications.

Conclusion

Intrinsic Image Fusion (2512.13157) delivers a comprehensive, probabilistic multi-view 3D material reconstruction framework that leverages single-view generative diffusion priors, parametric distribution matching, and robust inverse rendering. The method overcomes longstanding challenges in consistency, computational efficiency, and physical fidelity, setting a strong baseline for practical, artifact-free scene decomposition at scale. Future progress will likely exploit deeper integration of generative modeling, uncertainty quantification, and joint scene-material optimization to extend reconstruction capacity beyond current geometric limits.