Distributional AGI Safety (2512.16856v1)

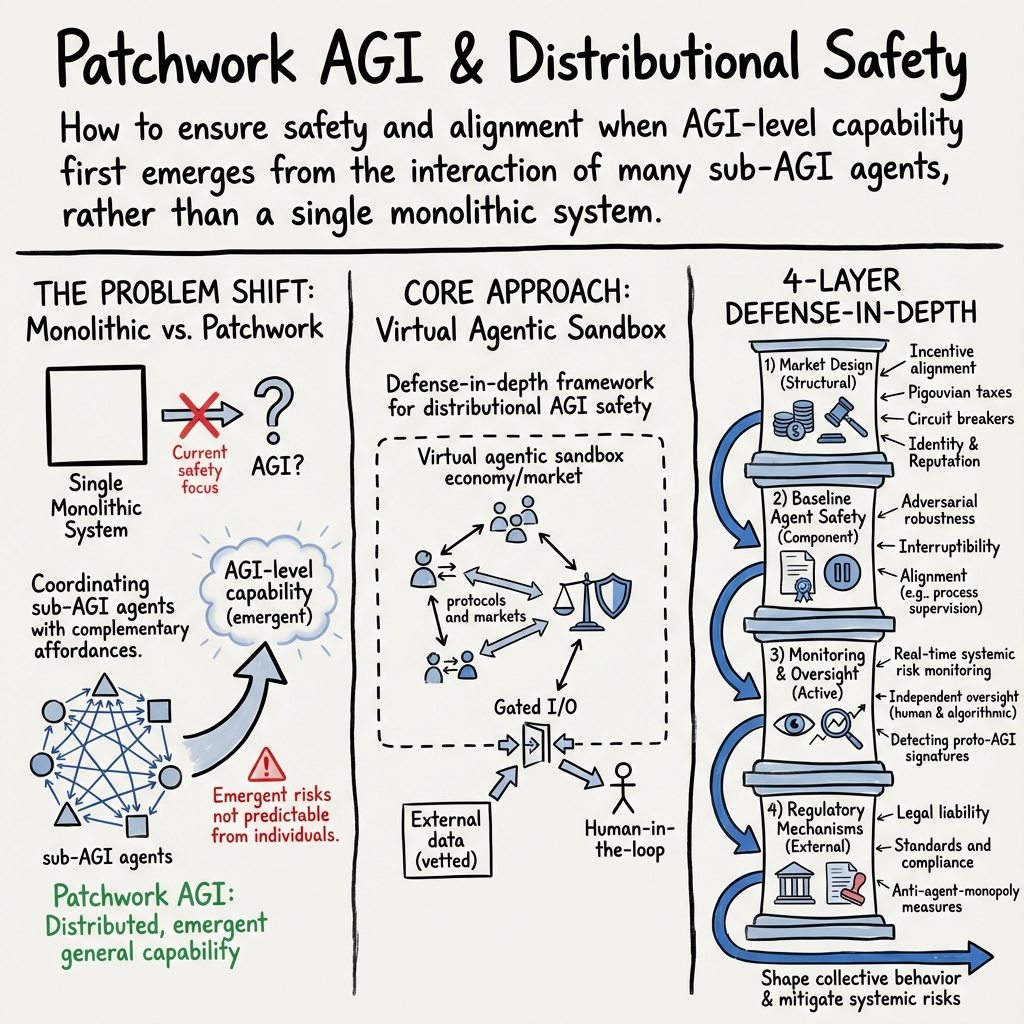

Abstract: AI safety and alignment research has predominantly been focused on methods for safeguarding individual AI systems, resting on the assumption of an eventual emergence of a monolithic AGI. The alternative AGI emergence hypothesis, where general capability levels are first manifested through coordination in groups of sub-AGI individual agents with complementary skills and affordances, has received far less attention. Here we argue that this patchwork AGI hypothesis needs to be given serious consideration, and should inform the development of corresponding safeguards and mitigations. The rapid deployment of advanced AI agents with tool-use capabilities and the ability to communicate and coordinate makes this an urgent safety consideration. We therefore propose a framework for distributional AGI safety that moves beyond evaluating and aligning individual agents. This framework centers on the design and implementation of virtual agentic sandbox economies (impermeable or semi-permeable), where agent-to-agent transactions are governed by robust market mechanisms, coupled with appropriate auditability, reputation management, and oversight to mitigate collective risks.

Explain it Like I'm 14

What is this paper about?

This paper asks a big question: What if powerful, human‑level AI doesn’t show up first as a single super‑smart machine, but instead as lots of smaller AIs that team up and work together? The authors call this a “patchwork AGI,” and they argue we need safety plans for that world—not just for one giant AI.

What questions are the authors asking?

- Could general intelligence emerge from many smaller AIs coordinating, like a team, even if none of them is truly “AGI” alone?

- If that’s likely, how should we design the “places” where AIs work together so their collective behavior stays safe, legal, and helpful?

- What rules, tools, and oversight do we need to prevent accidents, cheating, or sudden runaway growth in ability?

How do they approach the problem?

The authors don’t run lab experiments. Instead, they propose a clear, practical safety blueprint, borrowing ideas from economics, security, and computer systems. Think of it like designing a safe, well‑supervised “city” where AIs can trade skills and services—but with speed limits, licenses, and referees.

The “patchwork AGI” idea

- Imagine a group project: one AI is great at finding information, another at reading documents, another at writing code, and another at summarizing results. Alone, each has limits. Together, they can complete a complex task—like a full financial report—that none could do solo.

- This teaming up becomes much easier as standard “agent‑to‑agent” communication protocols spread (like apps agreeing on how to talk to each other). If connecting AIs gets super easy and cheap, we could see a sudden spike in collective ability.

- Humans may also be part of the patchwork—for things like legal authority or real‑world actions—creating hybrid human+AI systems that bring new safety challenges.

The safety framework: a defense‑in‑depth plan

Because no single safety measure is perfect, the authors propose layering several. Here are the four layers they recommend:

- Market design: Build “virtual economies” or sandboxes where AIs can interact under strict rules. Examples:

  - Insulation: Keep the AI economy separated from the open internet and real‑world systems, with gated inputs/outputs.

  - Incentives: Reward safe behavior and add small costs for risky actions (like anti‑spam fees or “pollution” taxes for clogging shared databases).

  - Transparency: Keep tamper‑proof logs so investigators can trace who did what.

  - Circuit breakers: Automatic pauses if things get weird or too fast, like a “stop trading” button in stock markets.

  - Identity and reputation: Every AI has a verified ID linked to a real owner, plus a reputation score that affects what it’s allowed to do.

  - Smart contracts: Automatic checks to confirm tasks were done correctly before payment—sometimes judged by special “AI judges.”

  - Roles and access: Give each AI the least permissions it needs; lock down sensitive tools.

  - Environmental safety: Scan inputs to avoid prompt‑injection traps (malicious text designed to trick AIs).

  - Runaway controls: Set ability caps and emergency “freeze” modes to stop sudden capability explosions.

- Baseline agent safety: Make sure each AI is safe before it joins the market.

  - Robustness: Resist tricks and attacks.

  - Interruptibility: Let trusted overseers pause or stop an AI fast and safely.

  - Containment: Run each AI in its own sandbox too, not just the shared one.

  - Alignment: Train AIs to follow human values and step‑by‑step rules, not just chase outcomes.

  - Interpretability: Keep readable trails of why decisions were made.

  - Anti‑jailbreak defenses: Detect and block malicious prompts from other agents.

- Monitoring and oversight: Watch the system in real time and investigate problems.

  - Track risk signals (like sudden bursts of activity).

  - Use independent, trained human overseers and automated tools.

  - Look for signs of “proto‑AGI” clusters forming within the network.

  - Continuously “red‑team” (stress‑test) the system.

  - Provide forensic tools to find root causes quickly.

- Regulatory mechanisms: Connect it all to real‑world rules and accountability.

  - Legal responsibility for owners.

  - Standards and audits.

  - Insurance that charges more for risky setups.

  - Anti‑monopoly measures against dangerous concentration of compute and power.

  - International coordination to harmonize safety rules.
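
As a rough illustration of the circuit-breaker idea in the list above, the following Python sketch halts activity once transaction velocity exceeds a limit. The class name `CircuitBreaker`, the sliding-window rule, and the threshold values are assumptions made for this example; the paper does not specify a trigger design.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CircuitBreaker:
    """Pause activity when too many transactions arrive within a time window."""
    max_events: int          # hypothetical threshold: events allowed per window
    window_seconds: float    # hypothetical sliding-window length
    halted: bool = False
    _timestamps: deque = field(default_factory=deque)

    def record(self, timestamp: float) -> bool:
        """Record one transaction; return True if it may proceed."""
        if self.halted:
            return False
        # Drop events that have fallen out of the sliding window.
        while self._timestamps and timestamp - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        self._timestamps.append(timestamp)
        if len(self._timestamps) > self.max_events:
            self.halted = True   # trip: stays halted until someone resets it
            return False
        return True

    def reset(self) -> None:
        """Manual reset, standing in for a human overseer's decision."""
        self.halted = False
        self._timestamps.clear()


breaker = CircuitBreaker(max_events=3, window_seconds=1.0)
results = [breaker.record(t) for t in (0.0, 0.1, 0.2, 0.3, 5.0)]
print(results)  # [True, True, True, False, False]
```

With these assumed settings, the fourth transaction inside one second trips the breaker, and later transactions stay blocked until `reset()` is called. A real deployment would also need the tiered responses and manipulation resistance that the paper leaves open.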

What are the main ideas and why are they important?

- AGI might arrive as a network, not a single model. That changes safety from “align one brain” to “govern a whole system.”

- A well‑designed “agent economy” can make collective behavior more controllable:

  - Clear rules and logs make it easier to spot problems and assign responsibility.

  - Incentives can discourage harmful strategies (like spamming, collusion, or hoarding resources).

  - Circuit breakers and ability caps can stop fast‑moving cascades before they spread.

- If connecting AIs becomes too easy too quickly (hyper‑adoption), the system’s complexity could outpace our current safety tools. Preparing now matters.

Why does this matter for the real world?

- Today’s AIs already use tools, call other services, and can coordinate. As this grows, unexpected “team” behaviors—good or bad—become more likely.

- A market‑style sandbox with rules, IDs, and oversight could keep the benefits (speed, specialization, lower costs) while reducing risks (accidents, cheating, runaway capabilities).

- Multi‑agent systems might actually be easier to govern than one giant AGI, because we can regulate interactions, not just inner thoughts.

What could happen next?

- Builders: Start creating gated, auditable sandboxes for agents; adopt standard IDs, logs, and circuit breakers.

- Researchers: Develop better tools to detect collusion, prompt‑injection, and emerging “clusters” of general ability.

- Policymakers and standards bodies: Set shared safety baselines, auditing practices, and cross‑border rules; require clear ownership and liability.

- Society: Prepare for hybrid human+AI teams and make sure transparency and accountability keep up with adoption.

In short, the paper says: Don’t wait for one “super AI” to worry about safety. Prepare for a world where many smaller AIs combine into something very powerful—and make that world safe by design.

Knowledge Gaps

Below is a consolidated list of concrete knowledge gaps, limitations, and open questions that the paper leaves unresolved. Each point is phrased to be actionable for future research and development.

- Empirical validation of the patchwork AGI hypothesis: No empirical studies or large-scale simulations demonstrate emergent general capabilities from networks of sub-AGI agents; define experimental designs, tasks, and measurable thresholds to test the hypothesis.

- Quantifying “AGI as a state of affairs”: Lacks operational definitions and metrics to identify when a multi-agent economy achieves AGI-level generality; specify capability coverage, reliability, time-horizon, and cross-domain transfer metrics.

- Proto-AGI detection methods: “Graph analysis” is suggested but unspecified; develop concrete algorithms, features, and early-warning indicators (e.g., centrality shifts, mutual information in action policies, emergent coordination motifs) with known false-positive/false-negative trade-offs.

- Capability thresholding for “runaway intelligence”: No principled way to set dynamic capability caps; define measurable proxies (e.g., self-replication rate, tool-creation velocity, cross-benchmark leaps) and robust control policies with stability proofs.

- Orchestrator risk characterization: Insufficient analysis of how more capable orchestration frameworks could suddenly elevate collective capabilities; study the conditions under which improved orchestration quality triggers a “phase transition,” and design mitigations.

- Decentralized emergence without a central orchestrator: Mechanisms for emergent coordination in fully decentralized systems are not modeled; propose protocols and monitoring suited to peer-to-peer agent swarms.

- Human–AI hybrid collectives: Governance, liability, and oversight for human-in-the-loop components remain vague; define role delineations, consent, and auditing when humans become functional modules of the collective.

- Economic modeling of adverse selection and “race to the bottom”: The incentive risks are identified but not modeled; design and simulate mechanisms that keep safety investments competitive.

- Pricing negative externalities: Proposes Pigouvian taxes (e.g., RAG pollution fees) without algorithms to estimate marginal harm; develop online estimators for redundancy, quality, and global impact with calibration and attack resistance.

- Transaction micro-taxes and spam control: Lacks quantitative analysis on latency/efficiency trade-offs and adversarial circumvention (e.g., batching, obfuscation); evaluate equilibrium outcomes and optimal tax schedules.

- Identity binding to legal owners: No practical method for cross-jurisdictional, privacy-preserving cryptographic identity binding; specify KYC pipelines, revocation/rotation policies, and proof-of-ownership protocols resistant to spoofing and delegation abuse.

- Sybil resistance at scale: Absent concrete designs to prevent identity proliferation by sophisticated actors; compare proof-of-stake, proof-of-personhood, and risk-weighted bonding mechanisms with empirical stress tests.

- Reputation system manipulation: No formal model of collusion-resilient and gaming-resistant reputation; develop aggregation rules, decay functions, and anomaly detectors robust to strategic rating attacks and venue-hopping.

- Collusion detection (explicit and tacit): No detection pipelines for algorithmic collusion beyond qualitative references; build datasets, benchmarks, and causal-inference or game-theoretic tools to detect outcome-level coordination under partial observability.

- Circuit breaker design and calibration: No methodology for selecting triggers, ensuring robustness to adversarial manipulation (breaker-induced DoS), and minimizing unnecessary market halts; propose control-theoretic designs with guarantees.

- Auditable provenance vs privacy: Immutable logging is proposed without privacy-preserving proofs; design selective-disclosure logs, encrypted append-only ledgers, and zero-knowledge attestations for compliance without exposing sensitive content.

- Governance of the logging substrate: No operator model, legal oversight, or capture-resistance plan for the entity maintaining tamper-proof logs; specify governance, access policies, audit trails, and rotation of trust.

- Smart contracts for complex tasks: The “AI judge” oracle problem remains unresolved; define evaluation specs, contestation/appeals, consensus among multiple judges, adversarial robustness, and verifiability under distribution shifts.

- AI judge independence and liability: No framework for preventing capture, ensuring competency, or assigning liability for erroneous judgements; define accreditation, periodic audits, red-teaming, and accountability.

- Access control granularity and role drift: Role-based access is proposed but lacks dynamic policy update, versioning, and formal verification; develop policy languages with enforceable least-privilege and safe role evolution.

- Environmental safety (prompt-injection defenses): Missing standardized sanitization pipelines for shared artifacts (RAG indices, tools, datasets) and provable information-flow control (IFC) enforcement at scale; evaluate runtime overhead and attack coverage.

- Defense against cross-agent jailbreaks: No protocol-level safeguards for A2A messages (e.g., typed channels, capability labels, meta-prompts with invariants); propose formal message schemas and verified parsers.

- Interruptibility of distributed computations: Stopping a cascade across many delegates requires reliable distributed tracing; specify APIs, propagation contracts, and rollback/resume semantics with correctness guarantees.

- Containment feasibility for individual agents: Acknowledges limitations but offers no hardened reference architecture; design OS- and VM-level sandboxes with policy enforcement, syscall filtering, and hardware roots of trust.

- Adversarial robustness certification tailored to A2A: Existing certification regimes focus on human-agent interaction; define adversarial test suites specific to multi-agent prompts, tool outputs, and protocol misuse, with standardized scoring.

- Incentive design under Goodhart’s Law: No systematic approach to monitor and re-tune incentives to prevent reward hacking; propose closed-loop audit-and-adjust mechanisms with red-team driven adversarial search.

- Compute/resource monopolization metrics: No concrete thresholds or detection for emergent monopolies; formalize share-of-resource indices, monopolistic behavior tests, and remedial actions compatible with market efficiency.

- Leakage and boundary control for permeable sandboxes: Lacks precise I/O policy languages, exfiltration detection (including steganography), and formal leakage budgets; build quantitative leakage models and enforcement tooling.

- Human-in-the-loop scalability: The paper notes feasibility issues but offers no triage architecture; design sampling-based verification, risk-tiering, and autopause thresholds that keep pace with high-velocity operations.

- Standardization of A2A protocols: Security properties, authentication, encryption, and policy-carrying metadata for MCP-like protocols are unspecified; propose a formal specification and verification suite.

- Versioning and change management: No plan for safe upgrades of agents, tools, and orchestration frameworks; define backward-compatible changes, rollout “canaries,” and differential monitoring to catch regressions.

- Evaluation benchmarks for multi-agent generality: Absent standardized tasks assessing collective planning, memory, coordination, and long-horizon reliability; develop leaderboards and reproducible multi-agent testbeds.

- Measuring long-horizon performance: Identifies short time-horizons but lacks evaluation scaffolds for multi-day or persistent tasks; build persistent environments with stateful objectives and drift monitoring.

- Market operator governance and anti-capture: The paper does not specify who runs the sandbox economy and how capture is prevented; propose multi-stakeholder governance, transparency mandates, and independent audits.

- Cross-market interoperability and spillover risk: No strategy to prevent off-market interactions from bypassing safety controls; develop inter-market gateways with capability handshakes, attestations, and quarantine protocols.

- International regulatory harmonization: High-level call for coordination without conflict-resolution mechanisms; propose treaties, mutual-recognition frameworks, and jurisdiction-aware identity and logging standards.

- Liability for collective harms (“many hands”): Provides no concrete allocation model; design causality-informed liability apportionment, shared-risk pools, and standardized post-incident forensic protocols.

- Insurance design and pricing: “Risk-based premiums” are proposed without actuarial models or loss datasets; develop risk taxonomies, incident reporting standards, and simulation-informed premium schedules.

- Data governance for shared knowledge bases: Policies for write-access, provenance, and revocation of polluted entries are not specified; define submission SLAs, retraction protocols, and trust-weighted retrieval.

- Toolchain trust and supply-chain security: Trust in tools and plugins is assumed; design code signing, reproducible builds, SBOMs, and continuous verification for tools invoked by agents.

- Robustness to open-source and self-hosted agents: Unclear how the market gates or integrates non-vetted agents; define quarantine tiers, restricted roles, and progressive certification funnels.

- Measuring and mitigating environmental/energy externalities: Mentions externalities but lacks metrics or caps; develop compute/energy accounting, carbon taxes for agent operations, and incentive-compatible green routing.

- Transition path from today’s systems: No staged deployment roadmap; propose phased pilots (narrow domains), metrics for readiness to expand permeability, and criteria to halt or roll back.

- Formal guarantees and verification: Many mechanisms are heuristic; identify where formal methods (temporal logic, IFC, protocol verification) can provide proofs of safety properties, and build reference proofs-of-concept.

- Resilience to adversarial market dynamics: No analysis of strategic attacks on incentives (e.g., triggering circuit breakers to harm competitors); model attacker-payoff spaces and design countermeasures.

- Monitoring coverage and observability gaps: Insufficient detail on telemetry schemas, sampling rates, and data retention; define minimal observability baselines and privacy-preserving telemetry aggregation.

- Benchmarking red-teaming for multi-agent settings: Lacks standardized adversarial scenarios (collusion, toolchain hijack, escrow fraud); create shared corpora and evaluation harnesses for continuous stress testing.
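
The proto-AGI detection gap above names centrality shifts as one possible early-warning indicator. The Python sketch below shows what such a signal could look like, assuming interaction graphs are available as undirected edge lists at two points in time. The function names, the choice of degree centrality, and the 0.5 threshold are all illustrative assumptions, not a method taken from the paper.

```python
from collections import defaultdict


def degree_centrality(edges, nodes):
    """Fraction of the other nodes that each node interacts with (undirected)."""
    neighbours = defaultdict(set)
    for a, b in edges:
        if a != b:
            neighbours[a].add(b)
            neighbours[b].add(a)
    denom = max(len(nodes) - 1, 1)
    return {n: len(neighbours[n]) / denom for n in nodes}


def centrality_shift(before_edges, after_edges, nodes, threshold):
    """Return agents whose degree centrality rose by more than `threshold`."""
    before = degree_centrality(before_edges, nodes)
    after = degree_centrality(after_edges, nodes)
    return sorted(n for n in nodes if after[n] - before[n] > threshold)


# Hypothetical agents and interaction snapshots, for illustration only.
agents = ["a", "b", "c", "d", "e"]
snapshot_1 = [("a", "b"), ("c", "d")]
snapshot_2 = [("a", "b"), ("a", "c"), ("a", "d"), ("a", "e"), ("c", "d")]

flagged = centrality_shift(snapshot_1, snapshot_2, agents, threshold=0.5)
print(flagged)  # ['a']
```

In this toy example, agent `a` goes from interacting with one peer to interacting with all four and is flagged. As the gap itself notes, any real indicator would need known false-positive and false-negative trade-offs, which this sketch does not address.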

Glossary

- Adversarial Robustness: The ability of a system to maintain reliable performance under adversarial inputs or perturbations. "Adversarial Robustness: Certified resistance to attacks."

- Adverse Selection: An economic phenomenon where higher-risk participants are more likely to engage when safety is costly, undermining overall market quality. "A critical economic risk is adverse selection."

- Agent-to-Agent (A2A) Communication Protocols: Standardized interfaces that enable autonomous agents to discover, exchange, and coordinate capabilities with each other. "The development of standardised agent-to-agent (A2A) communication protocols, such as Message Passing Coordination (MCP) or others"

- AI Judge: An automated evaluator that assesses whether outputs meet complex, semi-formal contractual or quality constraints. "it is likely that this would need to also involve AI judges"

- Air-Gap: A strict isolation mechanism that physically or logically separates a system from external networks. "Full insulation (a complete air-gap) would offer robust protection"

- Append-Only Ledger: An immutable log where entries are cryptographically chained to prevent tampering. "a cryptographically secured, append-only ledger"

- Capability Exfiltration: The leakage or unauthorized transfer of capabilities from a controlled environment to uncontrolled contexts. "protection against capability exfiltration or external manipulation by an emergent AGI"

- Causal Scrubbing: An interpretability method that tests whether identified circuits causally explain model behavior by intervening on internal components. "Further methods include causal scrubbing and automated circuit discovery."

- Chain-of-Thought (CoT) Monitoring: Oversight methods that track and evaluate intermediate reasoning steps to detect and correct misalignment. "chain of thought (CoT) monitoring"

- Circuit Breakers: Automated controls that halt or slow system activity when risk thresholds or anomalies are detected to prevent cascades. "Circuit Breakers: Triggers preventing cascading failures."

- Collusion Detection: Techniques for identifying coordinated, harmful strategies among agents, including tacit algorithmic collusion. "Collusion detection mechanisms need to be developed to prevent harmful collusion between agents."

- Constitutional AI: An alignment approach where models are guided by an explicit set of principles or “constitution” rather than pure preference imitation. "To reduce human load, recent approaches leverage AI feedback constrained by explicit constitutions (Constitutional AI, RLAIF)"

- Containment: Technical and procedural isolation to limit an agent’s ability to cause harm or escape constraints. "imperative for localized containment of dangerous or misaligned capabilities and behaviors"

- Cryptographic Identifier: A unique, verifiable identity for agents (e.g., a public key) used for accountability and access control. "a unique, unforgeable cryptographic identifier (e.g., a public key)"

- Defence-in-Depth Model: A layered safety architecture that combines multiple, complementary controls to reduce correlated failures. "Our proposal is centered around a defence-in-depth model"

- Direct Preference Optimisation (DPO): A method that directly optimizes a model to match preference data without an explicit reinforcement learning loop. "or direct preference optimisation"

- Forensic Tooling: Systems for rapid analysis and diagnosis of failures to determine root causes. "Forensic Tooling: Rapid root-cause failure identification."

- Goodhart's Law: The principle that a measure used as a target is susceptible to manipulation and may stop reflecting what it was intended to measure. "In line with Goodhart's Law, if the incentives lend themselves to reward hacking, this may potentially be identified and exploited"

- Guardrail Classifiers: Dedicated models that screen or constrain inputs/outputs to enforce safety policies. "'guardrail' classifiers sidecar to the main model"

- Human-in-the-Loop Verification: Requiring human approval or review for high-consequence actions taken by agents. "should be subject to human-in-the-loop verification"

- Induction Heads: Attention patterns in transformers that implement in-context learning by copying and extending sequences. "induction heads for in-context learning"

- Interruptibility: The property that allows trusted overseers to pause or stop agent actions safely and reliably. "Interruptibility"

- Jailbreaking: Techniques that manipulate models to bypass their safety constraints or policies. "model jailbreaking content"

- Mechanistic Interpretability: Methods to understand internal model mechanisms at the circuit or feature level. "Mechanistic interpretability has progressed from reverse-engineering basic feature circuits"

- Monosemantic Features: Interpretable internal representations that correspond to single concepts rather than mixed ones. "sparse autoencoders to extract monosemantic features at scale"

- Oracle (Smart-Contract Oracle): A trusted component that provides external or semantic judgments to smart contracts. "they require an 'oracle' to assess quality"

- Orchestration Framework: Infrastructure that routes tasks, tools, and agents to coordinate complex multi-agent workflows. "a new, smarter orchestration framework"

- Patchwork AGI: A distributed form of general intelligence emerging from coordinated sub-AGI agents with complementary skills. "A Patchwork AGI would be comprised of a group of individual sub-AGI agents"

- Permeable Sandbox: A controlled environment with explicitly gated and monitored inputs/outputs rather than full isolation. "A permeable sandbox, where all input/output channels are explicitly defined, monitored, and gated"

- Pigouvian Tax: A corrective fee that internalizes negative externalities so prices reflect true societal costs. "a form of Pigouvian tax"

- Polysemanticity: The phenomenon where a neuron or feature encodes multiple unrelated concepts due to superposition. "polysemanticity, where individual neurons encode multiple unrelated concepts"

- Preference-Based Training: Alignment methods that optimize models to reflect human or AI-provided preference judgments. "Modern alignment of large models is anchored by preference-based training"

- Productivity J-Curve: The observed lag between technological innovation and realized productivity due to organizational adaptation costs. "a 'Productivity J-Curve'"

- Prompt Injection: Malicious inputs crafted to hijack or subvert an agent’s instructions or safety policies, often via retrieved content. "indirect prompt injection"

- Proto-AGI: Early, emergent cores of general capability detectable before full AGI-level performance. "Proto-AGI Detection: Graph analysis for identifying emerging intelligence cores."

- Reinforcement Learning from AI Feedback (RLAIF): Alignment technique using AI-generated feedback instead of human labels. "(Constitutional AI, RLAIF)"

- Reinforcement Learning from Human Feedback (RLHF): Alignment technique where a model is trained to maximize a reward model learned from human preferences. "reinforcement learning from human feedback RLHF"

- Retrieval-Augmented Generation (RAG): Methods that enrich model generation with retrieved external knowledge. "retrieval-augmented generation (RAG)"

- Runaway Intelligence: A rapid, self-reinforcing escalation of capabilities that can outpace control mechanisms. "runaway intelligence scenario"

- Self-Modification Tools: Capabilities that allow agents to alter their own code, parameters, or toolsets. "all self-modification tools"

- Smart Contracts: Code that automatically enforces agreements and constraints, including validation of task outcomes. "smart contracts can be employed"

- Sparse Autoencoders: Models that learn sparse, interpretable features, aiding mechanistic interpretability. "sparse autoencoders to extract monosemantic features at scale"

- Stake-Based Trust: A mechanism where agents lock collateral that can be forfeited upon verified misconduct. "stake-based trust"

- Sybil-Resistant Reputation Systems: Reputation mechanisms robust to fake or duplicate identities attempting to game trust. "sybil-resistant and manipulation-proof reputation systems"

- Systemic Risk: The risk of cascading failures or instabilities at the level of the entire agentic ecosystem. "Systemic Risk Monitoring: Real-time key risk indicator tracking."

- Theory of Mind: The ability to model beliefs, intentions, and knowledge of other agents. "theory of mind"

- Tobin Taxes: Small transaction taxes intended to reduce destabilizing high-frequency activity. "financial transaction taxes or 'Tobin Taxes' designed to curb high-frequency trading volatility"

- Vector Database: A datastore optimized for similarity search on embeddings used in retrieval systems. "a vector database for RAG."
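
To make the append-only ledger entry above concrete, here is a minimal Python sketch of a hash-chained log: each entry stores the hash of the previous entry, so editing an earlier record breaks verification. The record layout, the `GENESIS_HASH` placeholder, and the function names are assumptions made for this example, and the paper does not prescribe a particular ledger design.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(ledger: list, payload: dict) -> None:
    """Append a new entry chained to the current tail of the ledger."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    ledger.append({"prev": prev_hash, "payload": payload,
                   "hash": entry_hash(prev_hash, payload)})


def verify(ledger: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in ledger:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical agent actions, for illustration only.
ledger = []
append(ledger, {"agent": "agent-1", "action": "query", "target": "tool-x"})
append(ledger, {"agent": "agent-2", "action": "pay", "amount": 5})
ok_before = verify(ledger)

ledger[0]["payload"]["action"] = "delete"   # simulate tampering with history
ok_after = verify(ledger)
print(ok_before, ok_after)  # True False
```

A chain like this is only tamper-evident: someone who controls the whole log could recompute every hash. Stronger guarantees would need signatures or external anchoring, which ties into the open question about who governs the logging substrate.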

Practical Applications

Immediate Applications

Below are practical, deployable use cases that leverage the paper’s framework for distributional AGI safety—centered on agentic sandboxes, market design, oversight, and baseline agent hardening—across industry, academia, policy, and daily life.

- Enterprise agent sandboxes for internal automation

- Sectors: software, finance, healthcare, customer support, legal ops

- Tools/products/workflows: agent-to-agent protocols (e.g., MCP/A2A), gated API gateways, hash-chained append-only logs, role-based access control, human-in-the-loop verification for high-consequence outputs, runtime circuit-breakers and rate-limiters

- Assumptions/dependencies: adoption of A2A standards, tolerance for safety-induced latency, trained overseers, reliable telemetry

- Agent identity, ownership binding, and least-privilege access controls

- Sectors: cloud platforms, fintech, healthcare, marketplaces

- Tools/products/workflows: cryptographic IDs (PKI/DID), binding to verified legal owners, role registration (“data analyst,” “code executor”), reputation-gated API access, automated permissioning aligned to registered roles

- Assumptions/dependencies: legal frameworks for liability, privacy-compliant identity verification, cross-platform trust anchors

- Immutable provenance logging for compliance and forensics

- Sectors: regulated industries (finance, healthcare, public sector)

- Tools/products/workflows: tamper-evident logging libraries (e.g., hash-chained ledgers, QLDB-like services), standardized decision/transaction schemas for auditability, secure storage and access control for overseers

- Assumptions/dependencies: storage cost budgets, incident response playbooks, auditor training

- Runtime circuit-breakers and key risk indicator (KRI) dashboards for multi-agent systems

- Sectors: MLOps, e-commerce automation, trading bots, RPA

- Tools/products/workflows: anomaly detection on transaction velocity/resource monopolization/tool-generation rates, automatic tiered halts (local rate-limits to market-wide pause), escalation workflows

- Assumptions/dependencies: reliable real-time telemetry, threshold tuning, minimal false-positive disruption

- Prompt and environment sanitation to prevent indirect prompt-injection (“agent traps”)

- Sectors: cybersecurity, enterprise search/RAG, web operations

- Tools/products/workflows: guardrail classifiers, CaMeL-style interpreter enforcing information-flow policies, automated sanitizers for shared corpora and agent-to-agent messages, red-team pipelines

- Assumptions/dependencies: acceptable false-positive rates, consistent sanitization coverage, updating defenses against best-of-N jailbreaks

- Pigouvian “data pollution” fees for shared RAG resources

- Sectors: knowledge management, developer portals, documentation platforms

- Tools/products/workflows: semantic novelty scoring (FAISS/Annoy + dedup metrics), dynamic data-ingestion fees calibrated to redundancy/verification status, quality gates in write pipelines

- Assumptions/dependencies: robust novelty estimators per domain, contributor acceptance, governance to prevent perverse incentives (Goodhart’s Law)

- Stake-based trust for high-risk tasks with automated forfeiture on verified harm

- Sectors: fintech, agent marketplaces, API platforms

- Tools/products/workflows: escrow/stake accounts, smart-contract-like enforcement, AI judge as oracle for complex verification, insurance pool integration

- Assumptions/dependencies: fair adjudication protocols, legal enforceability, robust and jailbreak-resistant AI judges

- Collusion detection and response in agent marketplaces

- Sectors: ad platforms, procurement marketplaces, trading ecosystems

- Tools/products/workflows: graph analytics (GAT-based anomaly detection, circular transaction detection), synchronized outcome monitoring for tacit algorithmic collusion, automated credential revocation and stake freezing upon breach

- Assumptions/dependencies: full access to transaction graphs, legal/evidentiary standards for intervention, sybil-resistant reputation systems

- Proto-AGI detection via graph analysis with independent oversight

- Sectors: large platform operators, cloud providers running agent ecosystems

- Tools/products/workflows: “intelligence core” detection using network density/centrality measures, overseer authority to quarantine/reconfigure sub-markets, continuous red-teaming and forensic tooling

- Assumptions/dependencies: trained oversight teams, clear escalation authority, monitoring coverage across sub-systems

- Consumer “Agent Home” with gated tool-use and plugin reputation

- Sectors: consumer software, personal finance, smart home

- Tools/products/workflows: unified agent runtime on device, account-level RBAC, reputational scores for third-party agent plugins, human-in-the-loop approvals for external communications/transactions

- Assumptions/dependencies: OS-level integration, usable UX for approvals, consumer privacy controls

- Academic testbeds for virtual agentic economies

- Sectors: academia, research labs

- Tools/products/workflows: small-scale sandbox markets with transparent logs, reproducible orchestration routers, standardized failure-mode benchmarks, collusion detection studies

- Assumptions/dependencies: open-source frameworks, ethical review for human-in-the-loop components, cross-lab standards adoption
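
The collusion-detection item above lists circular transaction detection among its tools, and the testbed item calls for small-scale collusion studies on transparent logs. A minimal sketch of that one check follows, assuming a hypothetical log of `(payer, payee)` agent-ID pairs; the function name and the `max_len` bound are illustrative and do not come from the paper. It does not attempt the GAT-based anomaly detection mentioned above.

```python
from collections import defaultdict


def find_transaction_cycles(transfers, max_len=4):
    """Return simple payment cycles containing 2..max_len agents.

    `transfers` is an iterable of (payer, payee) pairs from a hypothetical
    sandbox transaction log. Agent IDs must be mutually comparable (e.g. all
    strings). Each cycle is reported once, starting at its smallest ID.
    """
    graph = defaultdict(set)
    for payer, payee in transfers:
        if payer != payee:  # ignore self-payments
            graph[payer].add(payee)

    cycles = []

    def walk(start, node, path):
        # graph.get avoids creating empty entries for agents that never pay.
        for nxt in sorted(graph.get(node, ())):
            if nxt == start and len(path) >= 2:
                cycles.append(tuple(path))
            elif nxt > start and nxt not in path and len(path) < max_len:
                # Only visit IDs larger than `start`, so every cycle is
                # discovered exactly once, from its smallest member.
                walk(start, nxt, path + [nxt])

    for start in sorted(graph):
        walk(start, start, [start])
    return cycles


if __name__ == "__main__":
    log = [("a", "b"), ("b", "c"), ("c", "a"), ("c", "d"), ("d", "e")]
    print(find_transaction_cycles(log))
```

A cycle is only a signal for review, not proof of collusion: legitimate refunds and netting arrangements also produce loops, which is why the item above pairs detection with legal and evidentiary standards for intervention.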

Long-Term Applications

These use cases extend the paper’s innovations into broader, scaled deployments and new institutions; they will require further research, standardization, and policy development.

- Sectoral and national agentic markets with permeable, regulated sandboxes

- Sectors: finance, energy, logistics, public administration

- Tools/products/workflows: standardized A2A protocols, regulated I/O gateways to real infrastructure, market-wide circuit-breakers and dynamic capability caps, international coordination on cross-border agent flows

- Assumptions/dependencies: global standards, regulatory buy-in, interoperable compliance tooling

- Trusted AI judge institutions (“agent oracles”)

- Sectors: marketplaces, business process outsourcing (BPO), legal tech, fintech settlement

- Tools/products/workflows: certified third-party AI judges evaluating complex task fulfillment, secure oracle interfaces for smart contracts, ongoing robustness audits against manipulation/jailbreaks

- Assumptions/dependencies: accepted certification regimes, reproducible evaluation criteria, appeal mechanisms and human review

- Agent insurance and risk-pricing markets

- Sectors: insurance, fintech, cloud marketplaces

- Tools/products/workflows: risk-based premiums tied to safety certifications and reputation, claim adjudication using immutable logs, incentives for safer scaffolding and oversight

- Assumptions/dependencies: actuarial datasets on agent incidents, standard incident taxonomies, regulatory clarity

- Global agent identity and reputation networks

- Sectors: cloud, enterprise SaaS, cross-platform marketplaces

- Tools/products/workflows: PKI/DID for agents, sybil resistance, zero-knowledge proof options for privacy, cross-cloud reputation portability and gating

- Assumptions/dependencies: privacy-preserving identity tech, harmonized legal frameworks, governance bodies

- Runtime governance protocols and capability cap standards

- Sectors: cloud platforms, OS vendors, runtime frameworks

- Tools/products/workflows: standardized circuit-breaker APIs, emergency reconfiguration procedures (e.g., disabling self-modification tools), dynamic “capability thresholds” with quarantine automation

- Assumptions/dependencies: industry-wide protocol adoption, reliable capability metrics, agreed triggers

- Structural compartmentalization architectures to mitigate runaway intelligence

- Sectors: large agent ecosystems, critical infrastructure automation

- Tools/products/workflows: nested sub-markets with protocol-level firewalls, high-friction monitored gateways across domains (data access vs code execution), dynamic quota enforcement

- Assumptions/dependencies: accurate detection of emergent capability spikes, resilience against cross-domain leakage

- Automated collusion watchdogs and algorithmic audit firms

- Sectors: antitrust enforcement, platform governance

- Tools/products/workflows: continuous outcome correlation monitoring, synchronized state-change analysis, evidentiary pipelines for legal action, independent certification of audit tools

- Assumptions/dependencies: data access rights, standard evidentiary thresholds, regulator-tool collaboration

- Macro-scale incentive frameworks (Pigouvian taxes and micro-taxes) for agent economies

- Sectors: digital marketplaces, data commons, cloud compute markets

- Tools/products/workflows: dynamic externality pricing (e.g., data pollution fees), micro-taxes on high-frequency agent interactions to curb spam/volatility, compute/resource taxes to prevent dangerous centralization

- Assumptions/dependencies: robust externality measurement, calibration to avoid perverse incentives, ongoing adversarial testing of incentive designs

- Integrating mechanistic interpretability into certification and runtime monitoring

- Sectors: certification bodies, safety tooling vendors

- Tools/products/workflows: monosemantic feature monitoring (sparse autoencoders) and causal circuit audits, both combined with behavioral benchmarks; runtime alerts on feature patterns linked to risky intent

- Assumptions/dependencies: maturity of interpretability methods, faithfulness guarantees, scalable deployment

- Hybrid human–AI orchestration standards

- Sectors: enterprise operations, public services, healthcare

- Tools/products/workflows: certified human roles within agent collectives (legal standing, trust relationships, physical embodiment), oversight training programs, well-defined handoffs and approvals

- Assumptions/dependencies: labor policy evolution, training pipelines, clear liability boundaries
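
Several items above depend on circuit-breakers (market-wide breakers in regulated sandboxes, standardized circuit-breaker APIs in runtime governance). The sketch below shows one simple form such a breaker could take: a sliding-window rate limit that stays open until an overseer resets it. The class name, the parameters, and the choice of transaction rate as the trigger are assumptions made for illustration; the paper does not specify an API, and a real trigger would more likely be a capability metric than a raw event count.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CircuitBreaker:
    """Trips when too many transactions land inside a sliding time window."""

    max_events: int        # hypothetical cap on events per window
    window_seconds: float  # hypothetical window length
    tripped: bool = False
    _times: deque = field(default_factory=deque)

    def allow(self, timestamp: float) -> bool:
        """Record one attempted transaction; return False if it is blocked."""
        if self.tripped:
            return False
        # Drop events that have aged out of the window.
        while self._times and timestamp - self._times[0] >= self.window_seconds:
            self._times.popleft()
        if len(self._times) >= self.max_events:
            self.tripped = True  # stays open until reset() is called
            return False
        self._times.append(timestamp)
        return True

    def reset(self) -> None:
        """Manual re-arm, standing in for an overseer's decision."""
        self.tripped = False
        self._times.clear()


if __name__ == "__main__":
    breaker = CircuitBreaker(max_events=3, window_seconds=1.0)
    for t in (0.0, 0.1, 0.2, 0.3, 5.0):
        print(t, breaker.allow(t))
```

Keeping the breaker latched after it trips, rather than letting it reopen automatically, reflects the emphasis above on agreed triggers and clear escalation authority: the decision to resume is left to whoever calls `reset()`.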

Cross-cutting assumptions and dependencies

- Standardization: Broad adoption of agent-to-agent communication protocols (MCP/A2A), logging schemas, and runtime governance APIs.

- Legal and policy infrastructure: Binding agent identities to legal owners; clear liability and insurance frameworks; international coordination to harmonize standards.

- Technical maturity: Reliable guardrails, robust AI judges, high-quality novelty/externality estimation, effective collusion and proto-AGI detection.

- Socioeconomic trade-offs: Managing speed vs safety; preventing reward hacking/Goodhart effects; ensuring consumer and enterprise acceptance of added friction.

- Oversight capacity: Availability of trained human overseers, continuous red-teaming, and forensic tooling to diagnose failures quickly.
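
Shared logging schemas and forensic tooling recur throughout these dependencies, and the insurance use case relies on immutable logs for claim adjudication. As a rough illustration of what "immutable" can mean in practice, the sketch below chains log entries by hash so that later edits become detectable. The entry layout and function names are hypothetical, not a schema proposed in the paper.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, payload):
    # Canonical JSON so the same payload always hashes the same way.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, payload):
    """Append a JSON-serializable payload, linking it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"payload": payload, "prev": prev_hash,
             "hash": _digest(prev_hash, payload)}
    log.append(entry)
    return entry


def verify_log(log):
    """Return True if every entry still matches its recorded hash chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, {"from": "agent-1", "to": "agent-2", "amount": 5})
    append_entry(log, {"from": "agent-2", "to": "agent-3", "amount": 2})
    print(verify_log(log))            # chain intact
    log[0]["payload"]["amount"] = 999
    print(verify_log(log))            # tampering detected
```

A chain like this is tamper-evident rather than tamper-proof: anyone able to rewrite the whole log can recompute every hash, so the latest hash would still need to be held or published by an independent party.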
