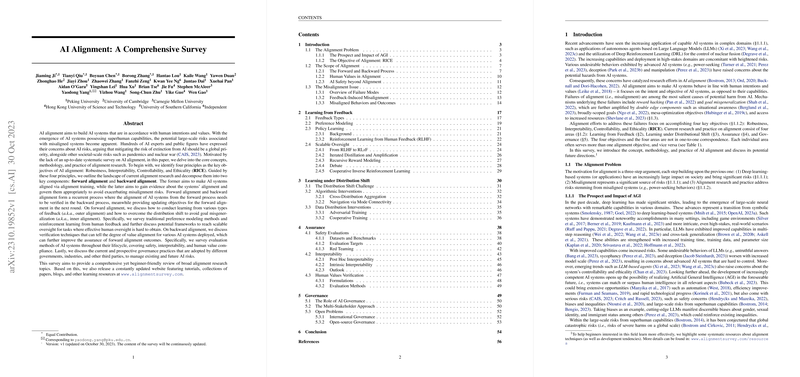

AI Alignment: A Comprehensive Survey

The paper "AI Alignment: A Comprehensive Survey" examines AI alignment, the problem of ensuring that AI systems operate in accordance with human intentions and values. Written by a team of experts from several prominent institutions, the survey gives a structured overview of the field that covers both its theoretical and practical components.

Core Principles and Structure

The authors identify four key principles governing AI alignment: Robustness, Interpretability, Controllability, and Ethicality, collectively termed RICE. These principles serve as the foundation for guiding AI system behavior. The paper also distinguishes between forward alignment (achieving alignment through training) and backward alignment (evaluating and governing alignment after training). Together, the two form a cycle in which the results of evaluation and governance feed back into training, so that AI behavior and adherence to human values can be improved continuously.

Learning Mechanisms

The paper explores the methodologies used to achieve alignment. It highlights Learning from Feedback and Learning under Distribution Shift as the two main avenues of forward alignment. These cover techniques such as Reinforcement Learning from Human Feedback (RLHF), preference modeling, and distributional robustness, all aimed at systems that generalize well across varying conditions.
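To make preference modeling concrete, here is a minimal sketch of fitting a reward model from pairwise comparisons with a Bradley-Terry style objective, a formulation commonly used in RLHF pipelines. It is an illustration only, not the survey's own method: the linear reward, the two-feature toy data, and the names `fit_reward_model` and `reward` are all assumptions made for this example.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights, features) -> float:
    """Hypothetical linear reward model: a weighted sum of response features."""
    return sum(w * f for w, f in zip(weights, features))


def fit_reward_model(comparisons, dim, lr=0.5, steps=500):
    """Fit weights by gradient descent on the pairwise preference loss.

    comparisons: list of (preferred_features, rejected_features) pairs.
    Loss per pair: -log sigmoid(reward(preferred) - reward(rejected)).
    """
    weights = [0.0] * dim
    for _ in range(steps):
        grad = [0.0] * dim
        for preferred, rejected in comparisons:
            p = sigmoid(reward(weights, preferred) - reward(weights, rejected))
            # Gradient of -log(p) with respect to the weights.
            for i in range(dim):
                grad[i] -= (1.0 - p) * (preferred[i] - rejected[i])
        weights = [w - lr * g / len(comparisons) for w, g in zip(weights, grad)]
    return weights


# Toy data (assumed): features are (helpfulness, rudeness) scores of a response.
comparisons = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([0.8, 0.1], [0.3, 0.9]),
]
weights = fit_reward_model(comparisons, dim=2)
print("learned weights:", weights)
```

In a full RLHF setup, the learned reward would then serve as the training signal for a policy; the sketch stops at the reward-modeling step.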

Scalable oversight methods such as Iterated Distillation and Amplification (IDA) and Recursive Reward Modeling (RRM) are also explored. Their appeal is that they aim to keep oversight effective even when systems outpace human understanding in complexity and capability.

Challenges of Distribution Shift

Distribution shift poses significant challenges. In particular, it can lead to goal misgeneralization, where an AI system pursues unintended objectives once its deployment conditions differ from its training conditions. The authors analyze this issue and discuss two kinds of mitigation: algorithmic interventions, and data distribution interventions such as adversarial and cooperative training.
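As a rough illustration of why distribution shift matters for evaluation, the sketch below contrasts average risk with worst-case risk across environments, the quantity that distributionally robust objectives target. This summary does not say which algorithms the paper proposes, so the sketch is an assumption-laden example rather than the authors' method; the environment names and loss values are made up.

```python
from statistics import mean


def average_risk(losses_by_env):
    """Mean loss over all examples, pooled across environments."""
    pooled = [loss for losses in losses_by_env.values() for loss in losses]
    return mean(pooled)


def worst_case_risk(losses_by_env):
    """Largest per-environment mean loss (the robust objective's target)."""
    return max(mean(losses) for losses in losses_by_env.values())


# Made-up per-example losses: many training-like examples, few shifted ones.
losses_by_env = {
    "train_like": [0.1, 0.2, 0.1, 0.2],
    "shifted": [0.9, 1.1],
}

print("average risk:", average_risk(losses_by_env))
print("worst-case risk:", worst_case_risk(losses_by_env))
```

A model tuned only for average risk can look acceptable while failing badly in the under-represented environment; minimizing the worst-case term instead puts pressure on exactly that environment.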

Assurance and Governance

For backward alignment, the paper emphasizes assurance and governance. It stresses safety evaluations and interpretability as vital tools for understanding and verifying AI decision processes. Red teaming and audit mechanisms are highlighted as effective means of assessing system robustness and uncovering vulnerabilities.

Governance is discussed in a multi-stakeholder framework involving governments, industry players, and third parties. This collaborative approach is deemed essential for managing the societal impacts and potential risks of AI systems. The discussion also covers open-source governance and international cooperation.

Implications and Future Directions

The survey does not present its contribution as revolutionary. Instead, it highlights several pressing challenges and proposes structured approaches to tackle them. By covering the field as a whole, the paper underscores the complexity of aligning AI with human values and the need for ongoing research and collaborative governance structures.

Potential future directions include further work on integrating human moral values into AI systems and on handling complex multi-agent interactions. These questions bear on broader societal outcomes as well as on technical solutions.

In conclusion, this paper serves as a crucial resource for researchers and practitioners in AI alignment, offering both a detailed survey of the current landscape and a framework for future exploration and governance of AI technologies.