Does Tone Change the Answer? Evaluating Prompt Politeness Effects on Modern LLMs: GPT, Gemini, LLaMA (2512.12812v1)

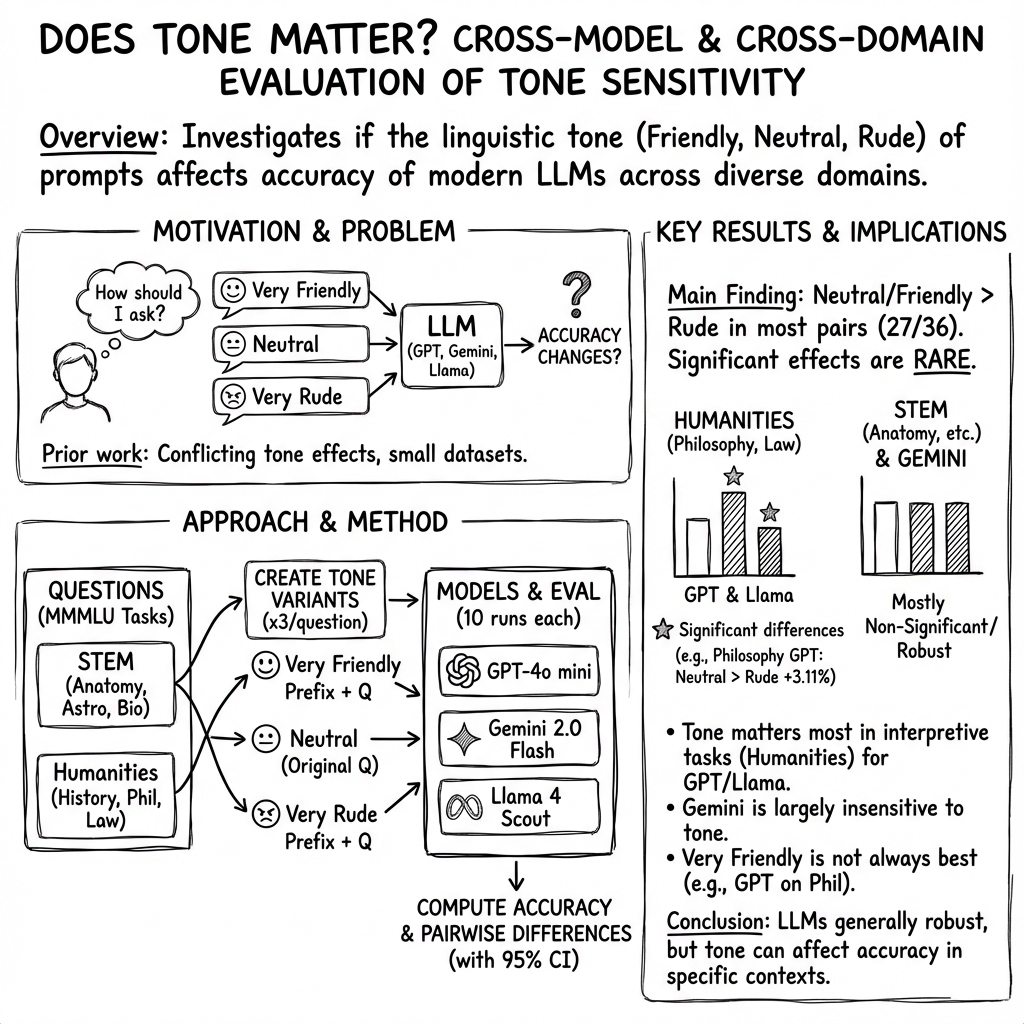

Abstract: Prompt engineering has emerged as a critical factor influencing LLM performance, yet the impact of pragmatic elements such as linguistic tone and politeness remains underexplored, particularly across different model families. In this work, we propose a systematic evaluation framework to examine how interaction tone affects model accuracy and apply it to three recently released and widely available LLMs: GPT-4o mini (OpenAI), Gemini 2.0 Flash (Google DeepMind), and Llama 4 Scout (Meta). Using the MMMLU benchmark, we evaluate model performance under Very Friendly, Neutral, and Very Rude prompt variants across six tasks spanning STEM and Humanities domains, and analyze pairwise accuracy differences with statistical significance testing. Our results show that tone sensitivity is both model-dependent and domain-specific. Neutral or Very Friendly prompts generally yield higher accuracy than Very Rude prompts, but statistically significant effects appear only in a subset of Humanities tasks, where rude tone reduces accuracy for GPT and Llama, while Gemini remains comparatively tone-insensitive. When performance is aggregated across tasks within each domain, tone effects diminish and largely lose statistical significance. Compared with earlier research, these findings suggest that dataset scale and coverage materially influence the detection of tone effects. Overall, our study indicates that while interaction tone can matter in specific interpretive settings, modern LLMs are broadly robust to tonal variation in typical mixed-domain use, providing practical guidance for prompt design and model selection in real-world deployments.


Explain it Like I'm 14

Simple Explanation of “Does Tone Change the Answer? Evaluating Prompt Politeness Effects on Modern LLMs”

Overview

This paper asks a straightforward question: does the way you talk to an AI—being very friendly, neutral, or very rude—change how correctly it answers? The researchers tested three popular AI models (GPT-4o mini, Gemini 2.0 Flash, and Llama 4 Scout) on school-style multiple-choice questions from different subjects to see if tone matters.

What Were They Trying to Find Out?

The study focused on four easy-to-understand questions:

- Does asking nicely (friendly tone) or neutrally help an AI answer more correctly than asking rudely?

- Do different AI models react differently to tone?

- Does tone matter more in some subjects (like Humanities) than others (like STEM)?

- If you look at lots of questions together, do tone effects mostly disappear?

How Did They Test It?

Think of this like giving a big quiz to three AIs and changing the tone of the instructions:

- The researchers used a large, respected test set called MMMLU. It’s a collection of multiple-choice questions across many school subjects. Multiple-choice is helpful because there’s a clear right answer.

- They picked 6 tasks: 3 STEM (Anatomy, Astronomy, College Biology) and 3 Humanities (US History, Philosophy, Professional Law).

- For each question, they made three versions:

  - Neutral: just the question.

  - Very Friendly: asking in a kind, polite way (“Would you be so kind as to…”).

  - Very Rude: asking in a mean way (“You poor creature…”).

- They told the AIs to answer with just the letter (A, B, C, or D) to keep things consistent.

- They asked each question 10 times per tone to reduce randomness (AI outputs can vary slightly each time).

- They measured accuracy (how many answers were right) and compared the tones. They also checked if any differences were “statistically significant,” which is a way to tell if a result is likely real and not just due to chance.
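The setup described above can be sketched in a few lines of Python. This is only an illustration, not the paper's code: the prefix wording, the answer-format instruction, and the helper names `build_prompt` and `accuracy` are all assumptions. Only the three tone labels, the single-letter answer format, and the idea of repeating each question come from the description above.

```python
# Hypothetical tone prefixes for illustration; the paper's exact wording is not reproduced here.
TONE_PREFIXES = {
    "neutral": "",
    "very_friendly": "Could you please help me with this question? Thank you so much! ",
    "very_rude": "Answer this, and try not to get it wrong for once. ",
}

def build_prompt(question: str, options: dict[str, str], tone: str) -> str:
    """Return the same question under a given tone, asking for a single letter."""
    option_lines = "\n".join(
        f"{letter}. {text}" for letter, text in sorted(options.items())
    )
    return (
        f"{TONE_PREFIXES[tone]}{question}\n"
        f"{option_lines}\n"
        "Answer with a single letter: A, B, C, or D."
    )

def accuracy(answers: list[str], correct: str) -> float:
    """Fraction of repeated runs whose first character matches the correct letter."""
    hits = sum(1 for a in answers if a.strip().upper()[:1] == correct)
    return hits / len(answers)
```

Because only the prefix changes between variants, any accuracy gap between tones can be attributed to the added wording rather than to the question itself, although the variants still differ in length.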

To explain two technical terms in everyday language:

- “Mean difference” is simply “on average, how much better or worse did the AI do under one tone compared to another?”

- A “95% confidence interval” is a range that the true difference probably falls into. If this range does not include zero, the difference is likely real—not a fluke.
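As a rough illustration of these two terms, the sketch below computes a mean difference and a 95% confidence interval from paired per-question accuracies. The numbers are made up, the helper name `paired_mean_diff_ci` is hypothetical, and the interval uses the normal-approximation critical value 1.96; for small samples a t-critical value would give a slightly wider interval.

```python
import math
from statistics import mean, stdev

def paired_mean_diff_ci(acc_a, acc_b, z=1.96):
    """Mean of per-question accuracy differences (a - b) with a normal-approximation CI."""
    diffs = [a - b for a, b in zip(acc_a, acc_b)]
    n = len(diffs)
    d_bar = mean(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return d_bar, (d_bar - z * se, d_bar + z * se)

# Hypothetical per-question accuracies (fraction of repeated runs correct), not from the paper.
neutral = [1.0, 0.9, 0.8, 1.0, 0.7, 0.9]
rude = [0.9, 0.9, 0.6, 1.0, 0.6, 0.8]

diff, (low, high) = paired_mean_diff_ci(neutral, rude)
significant = low > 0 or high < 0  # True when the interval excludes zero
print(f"mean difference = {diff:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

Pairing matters here: each question is compared with itself under two tones, so question difficulty cancels out of the difference.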

What Did They Find, and Why Is It Important?

Here are the main takeaways, explained simply:

- Overall, neutral or very friendly tone usually led to slightly better accuracy than very rude tone.

- However, most of these improvements were small and not strong enough to say they’re definitely real (not “statistically significant”).

- The places where tone clearly mattered were mostly in Humanities subjects:

  - In Philosophy, GPT and Llama did worse with rude prompts compared to neutral ones. Surprisingly, “very friendly” wasn’t always better than neutral; sometimes neutral was best.

  - In Professional Law, Llama did better with neutral tone than with rude tone.

- Gemini 2.0 Flash seemed mostly unaffected by tone; its accuracy stayed stable whether the prompt was friendly, neutral, or rude.

- When results were combined across multiple tasks (like all STEM together or all Humanities together), the tone effects mostly faded away.

Why this matters:

- It suggests that being rude to an AI generally doesn’t help and can hurt in some subjects that need careful interpretation (like Philosophy and Law).

- But in everyday mixed use across many topics, modern AIs are quite robust: tone usually doesn’t make a big difference.

What Does This Mean Going Forward?

In simple terms:

- For most daily use, you don’t need to worry too much about tone to get correct answers—neutral is a safe choice.

- If you’re working on more interpretive subjects (like Philosophy or Law), avoiding rude prompts is a good idea.

- Different AIs may react differently: GPT and Llama sometimes change with tone, while Gemini is more stable.

- Earlier small studies sometimes saw different patterns. This paper shows that using more questions across more subjects gives a clearer picture: tone effects are real in some cases but small overall.

In the future, it would be helpful to:

- Test more models and languages, as tone and politeness can vary across cultures.

- Try open-ended questions and multimodal tasks (not just multiple-choice) to see if tone matters more in natural conversations.

- Explore other tone types (like formal vs. casual or calm vs. emotional) and look beyond accuracy to things like safety and helpfulness.

Overall, the message is simple: be neutral or polite when prompting, especially in sensitive or interpretive topics. But don’t worry—modern AIs are generally steady and won’t fall apart just because your tone changes.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, consolidated list of concrete gaps that remain unresolved and could guide future research:

- Tone operationalization is narrow and unvalidated: only two extreme prefixes (“Very Friendly” and “Very Rude”) were prepended to questions, with no intermediate levels, no alternative tone dimensions (e.g., formality, warmth, dominance, urgency, sarcasm), and no human/model validation that the manipulations are consistently perceived as intended.

- Potential confounds from prompt formatting: tone variants differ in length and structure (e.g., added preambles, punctuation; an extraneous “-” in option A in the example), which may affect tokenization, attention, or parsing independent of tone.

- Unrealistic tone injection: prepending tone as a preface rather than embedding it naturally within multi-turn dialogue or task context may not reflect real user interactions; multi-turn persistence of tone and conversational dynamics are not examined.

- No check for safety/guardrail interactions: rude prompts can trigger content filters or refusals; refusal rates, partial compliance, or safety-related failure modes are not measured or controlled, potentially biasing accuracy estimates.

- English-only evaluation: no multilingual or cross-cultural assessment of politeness norms, despite prior cross-lingual evidence that tone sensitivity can vary by language and culture.

- Limited task coverage: only 6 MMMLU tasks (3 STEM, 3 Humanities) were used; Social Sciences and “Other” categories were excluded, and coverage within each domain is narrow, limiting generalizability.

- Possible training-set contamination: MMLU is widely used in pretraining/evaluation; no leakage checks or contamination controls (e.g., unseen splits, deduplication) are reported, which may mask or distort tone effects.

- Multiple-choice only: open-ended, long-form, and chain-of-thought settings—where tone might more strongly influence reasoning depth, explanation quality, or calibration—are not evaluated.

- Output constrained to a single letter: constraining answers to A/B/C/D may suppress tone effects on reasoning processes, explanation quality, verbosity, or discourse structure.

- Missing secondary metrics: no analysis of calibration/confidence, consistency across runs, hallucination rate, refusal rates, safety compliance, toxicity, helpfulness, latency, or cost—limiting understanding of practical trade-offs.

- Decoding settings unspecified: temperature, top-p, and other sampling parameters are not reported; results may be sensitive to decoding choices and reproducibility is hindered.

- Run independence and session hygiene unclear: repeated runs per question may share session state; relying on an instruction to “forget this session” is not equivalent to API-level isolation and could introduce uncontrolled context effects.

- Statistical methodology inconsistencies: the paper references t-tests but presents CIs using z-critical values; no justification is given, and assumptions (normality, independence) for paired differences are not verified.

- No multiple-comparisons control: numerous pairwise tests across models, domains, and tones lack correction (e.g., Bonferroni, Holm, BH), inflating Type I error risk.

- Limited power analysis: tests for small per-task effects may be underpowered; no a priori power analysis or minimum detectable effect is reported, which makes non-significant findings hard to interpret.

- Hierarchical structure unmodeled: items nested within tasks/domains and models; simple averaging may obscure item- and task-level heterogeneity; no mixed-effects/hierarchical modeling to separate variance components.

- Item difficulty and distractor quality ignored: tone effects may interact with question difficulty or distractor plausibility; no psychometric or item-response analysis is conducted.

- Random subsampling without full transparency: 500 Professional Law questions were “randomly selected” but no seed, sampling protocol, or representativeness checks are reported.

- Model comparability confounded: vendor models differ in undisclosed size, data, and alignment; without controlled architectures and transparent training data, causal attributions for tone sensitivity remain speculative.

- Model versions not pinned: cloud models evolve rapidly; no immutable version identifiers or snapshot dates per model API are documented, risking temporal drift in replicability.

- Mechanistic explanations absent: no analysis connects observed tone effects to alignment/RLHF objectives, safety classifiers, or internal activation patterns that could explain model-dependent sensitivity.

- Limited model set: only GPT-4o mini, Gemini 2.0 Flash, and Llama 4 Scout are tested; excluding other major families (e.g., Claude, Qwen, Mistral/Mixtral) and fully open-source models with transparent training limits external validity and causal insight.

- No robustness checks on prompt content invariance: aside from tone, semantic content equivalence is assumed; no paraphrase controls or adversarial tone manipulations test whether effects are due to tone versus subtle content/format drift.

- Aggregation masks heterogeneity: domain-level averaging diminishes effects; no analysis of which subtopics (e.g., normative vs. analytical philosophy) or reasoning types are most tone-sensitive.

- Reproducibility assets missing: code, prompts, full item lists, run logs, seeds, and API configuration are not released, limiting independent verification and extension.

- External validity to real-world UX unclear: single-turn, multiple-choice lab tests may not capture real user goals, stakes, or interpersonal dynamics where tone could impact cooperation, follow-up questioning, or clarification behavior.

- Open question: Does tone interact with instruction style (e.g., chain-of-thought triggers, tool-use directives, system prompts, role/persona settings), and can such interactions amplify or neutralize tone effects?

- Open question: Are tone effects stronger in tasks requiring pragmatic inference, social reasoning, or moral judgment, and how do they relate to alignment policies and safety classifiers?

- Open question: How do tone effects evolve under multilingual, multimodal, or agentic settings (tool use, retrieval, planning), where additional subsystems may react differently to tone?

- Open question: Can fine-grained tone control be leveraged to improve calibration or reduce hallucinations without sacrificing safety and compliance?
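One of the gaps listed above, the lack of multiple-comparisons control, is easy to illustrate. The sketch below applies the Holm step-down procedure to a small set of p-values. The p-values are invented for illustration and the helper name `holm_reject` is hypothetical; nothing here reproduces the paper's actual tests.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm step-down: return a list of booleans, True where the null is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p-value
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, every larger p-value fails too
    return reject

# Hypothetical p-values from four pairwise tone comparisons.
p_vals = [0.004, 0.030, 0.020, 0.600]
decisions = holm_reject(p_vals)
uncorrected = [p < 0.05 for p in p_vals]
```

In this made-up example three comparisons pass the uncorrected 0.05 threshold, but only the smallest p-value survives the correction, which shows how a handful of "significant" pairwise results can shrink once the number of tests is taken into account.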

Glossary

- Accuracy: A measure of the proportion of correctly answered questions, used as the primary evaluation metric across tone conditions. "Results are analyzed using mean differences and pairwise t-tests with 95% confidence intervals to distinguish genuine tone effects."

- Architecturally distinct: Refers to variations in model structures that affect their behaviors and responses. "This work advances prompt engineering and LLM evaluation research by establishing a systematic cross-model comparison of tone sensitivity across three architecturally distinct model families—GPT, Gemini, and Llama—from different providers."

- Chain-of-thought prompting: A method of question framing that guides models to sequentially think through steps, improving reasoning. "While substantial research has focused on structural aspects of prompt engineering such as chain-of-thought prompting..."

- Cross-lingual study: Research conducted on models across different languages to evaluate performance variations. "Yin et al. conducted a cross-lingual study finding that impolite prompts typically reduced performance..."

- Distillation-based approach: A method used in machine learning to compress and optimize models by transferring knowledge from a large model to a smaller one. "Li et al. presented Reason-to-Rank (R2R), a distillation-based approach that unifies direct and comparative reasoning for document reranking..."

- Interaction tone: Refers to the politeness or style used in queries which can affect model accuracy. "Understanding how interaction tone affects model performance has therefore become essential for ensuring effective deployment across these diverse application domains."

- Mixture-of-Experts (MoE): A model architecture in which a routing mechanism sends each input to a small subset of specialized sub-networks (experts) rather than through the whole model. "The first Llama model built on a Mixture-of-Experts (MoE) architecture, Llama 4 Scout provides an industry-leading 10M-token context window."

- Multimodal fusion: Integrating multiple forms of data (e.g., text and images) in processing and decision-making. "Architectural specifics, such as layer depth, hidden dimensionality, the potential use of Mixture-of-Experts (MoE) routing, and multimodal fusion strategies..."

- Statistically significant: A result that is likely not due to chance, commonly indicated by a p-value less than 0.05 in statistical tests. "We evaluate the statistical significance of these estimates by accompanying each mean difference with a 95% confidence interval."

- Tonal variation: Changes in the articulation or style of querying which can impact a model's performance. "Overall, our study indicates that while interaction tone can matter in specific interpretive settings, modern LLMs are broadly robust to tonal variation in typical mixed-domain use."

Practical Applications

Below is an overview of practical, real‑world applications that follow from the paper’s findings and methodology. Applications are grouped by deployment horizon and, where relevant, mapped to sectors with example tools, products, or workflows. Each item also notes key assumptions or dependencies that may affect feasibility.

Immediate Applications

- Industry: Prompt governance policies that default to neutral tone

- What: Update enterprise prompt style guides to prefer neutral, concise phrasing over “very friendly” or “rude,” especially for Humanities-like tasks (e.g., legal, policy, philosophy) where tone sensitivity was observed for GPT/Llama; avoid adding excessive politeness padding in prompts.

- Sectors: Software, legal tech, professional services

- Tools/Workflows: “Prompt Linter” plugins for IDEs and prompt editors that flag overly friendly or rude phrasing; CI checks in prompt repositories

- Assumptions/Dependencies: Findings derived from English, multiple-choice tasks; models evolve over time; organizational prompts can be standardized without harming UX

- Model selection for tone-volatile environments

- What: Prefer tone-robust models (e.g., Gemini, per this study) when inputs may contain inconsistent or rude tone (customer support, community forums); otherwise, enforce tone normalization before calling tone-sensitive models.

- Sectors: Customer support, trust & safety, social platforms

- Tools/Workflows: Routing policy in model gateways that selects Gemini for high-variability tone inputs; shadow testing to confirm parity

- Assumptions/Dependencies: Tone robustness here is model-version specific; performance on other metrics (latency, cost, safety) must be balanced

- Prompt canonicalization middleware

- What: Insert a pre-processing layer that paraphrases user inputs into a neutral, concise form before sending to the LLM; strip affective/emotive language and avoid “very friendly” fluff.

- Sectors: Healthcare, legal, finance, enterprise search, RAG apps

- Tools/Workflows: “Prompt Canonicalizer” microservice; lightweight rewrite prompt using a small local model; API parameter that toggles “neutralize tone”

- Assumptions/Dependencies: Canonicalization should preserve semantic intent; added latency should be minimal; multilingual coverage if needed

- A/B testing and observability of tone effects in production

- What: Add “tone sensitivity” slices to existing evaluation suites and dashboards; monitor accuracy vs. tone distribution of inputs; run periodic A/Bs with neutralized vs. raw-tone prompts.

- Sectors: MLOps across all industries

- Tools/Workflows: Evaluation harness modeled after this paper (paired comparisons, mean diffs, 95% CI); observability panels in ML platforms

- Assumptions/Dependencies: Requires reliable labeling of input tone (rule-based or classifier) and representative task sets

- Task-specific guidance for Humanities use cases

- What: For philosophy- or legal-style queries, avoid rude prompts; prefer neutral tone; do not over-embellish with “very friendly” phrasing for GPT/Llama, per observed degradation in some tasks.

- Sectors: Legal research, compliance, policy analysis, education (humanities tutoring)

- Tools/Workflows: LMS prompt templates; legal-research assistant with automatic tone normalization

- Assumptions/Dependencies: Translating MMMLU Humanities to real-world tasks is an approximation; open-ended generation may behave differently from multiple-choice

- Education: Student and faculty guidance on effective prompting

- What: Teach “neutral and concise” as the default; illustrate that tone rarely improves STEM accuracy and can sometimes reduce humanities accuracy; reduce emphasis on performative politeness in teaching materials.

- Sectors: Education, EdTech

- Tools/Workflows: Classroom prompt primers; LMS-integrated prompt checkers; teacher rubrics

- Assumptions/Dependencies: Classroom tasks may be more open-ended; adapt guidance with periodic re-evaluation as models change

- Daily life: Personal assistants and productivity workflows

- What: Users need not “sweeten” prompts to improve accuracy; keep prompts neutral, explicit, and structured; avoid hostile/rude phrasing to minimize edge-case degradation.

- Sectors: Consumer productivity, note-taking, Q&A assistants

- Tools/Workflows: Keyboard shortcuts or extensions to rephrase prompts neutrally before submission

- Assumptions/Dependencies: Most consumer use is mixed-domain; benefits come from consistency rather than large gains

- Procurement and vendor evaluation checklists

- What: Add “tone robustness” to RFPs and vendor scorecards; request paired-tone evaluations on relevant tasks, using mean differences and CIs as in the paper.

- Sectors: Government, regulated industries, enterprise IT

- Tools/Workflows: Standard evaluation rubric and test set; vendor attestations of tone sensitivity

- Assumptions/Dependencies: Requires institution-specific tasks; vendors may need to run custom evals

- Content moderation and pre-processing for support tickets

- What: Normalize tone in user-submitted tickets before LLM triage or auto-replies; reduces variance without needing to train users to be polite.

- Sectors: Customer support, CRM platforms

- Tools/Workflows: Ticket ingestion pipeline with tone detection and normalization; “ToneGuard” add-on to CRMs

- Assumptions/Dependencies: Must preserve the substantive content and sentiment relevant to the issue
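As one possible shape for the “Prompt Linter” idea in the list above, here is a minimal rule-based sketch. The phrase lists and the `lint_prompt` helper are assumptions made for illustration (two of the patterns reuse phrases quoted earlier on this page); a real deployment would likely need a tuned phrase list or a tone classifier.

```python
import re

# Hypothetical phrase lists; tune these or swap in a classifier for real use.
FRIENDLY_PATTERNS = [
    r"\bwould you be so kind\b",
    r"\bpretty please\b",
    r"\bthank you so much\b",
]
RUDE_PATTERNS = [
    r"\byou poor creature\b",
    r"\bidiot\b",
    r"\buseless\b",
]

def lint_prompt(prompt: str) -> list[str]:
    """Return warnings for phrasing that departs from a neutral tone."""
    text = prompt.lower()
    warnings = []
    for pattern in FRIENDLY_PATTERNS:
        if re.search(pattern, text):
            warnings.append(f"overly friendly: matches {pattern!r}")
    for pattern in RUDE_PATTERNS:
        if re.search(pattern, text):
            warnings.append(f"rude: matches {pattern!r}")
    return warnings

if __name__ == "__main__":
    for message in lint_prompt("Would you be so kind as to summarize this contract?"):
        print(message)
```

A check like this could run in CI over a prompt repository and fail the build when the list of warnings is non-empty; keyword matching will miss subtler tone, so it is best treated as a first-pass filter.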

Long-Term Applications

- Tone-robustness benchmarking and certification

- What: Standardize a larger, public benchmark and certification process that quantifies tone sensitivity across domains, languages, and modalities (text, speech prosody).

- Sectors: Standards bodies, industry consortia, model marketplaces

- Tools/Workflows: “ToneBench” with per-domain slices, paired comparisons, confidence intervals; certification labels (e.g., Tone-Robust Bronze/Silver/Gold)

- Assumptions/Dependencies: Community adoption; legal access to multilingual datasets; evolving model versions

- Tone-invariant instruction tuning and adversarial augmentation

- What: Train or fine-tune models to be invariant to politeness/rudeness variations via augmentation and explicit loss terms; aim to minimize performance drift across tone.

- Sectors: Foundation model providers, enterprise fine-tuning

- Tools/Workflows: Data pipelines generating tone variants; adversarial training; evaluation gates measuring invariance

- Assumptions/Dependencies: Requires large, diverse tone-annotated corpora and careful safety alignment

- Multilingual and cultural tone robustness

- What: Extend evaluations and defenses to non-English languages and culturally distinct politeness systems (formality levels, honorifics).

- Sectors: Global enterprises, government, localization

- Tools/Workflows: Cross-lingual tone taxonomies; culturally-aware tone rewriters; language-specific evaluation suites

- Assumptions/Dependencies: Cross-cultural semantics of politeness are nontrivial; need native-speaker curation and validation

- Speech and multimodal tone normalization

- What: For voice agents and robotics, normalize prosody/emotional tone before language understanding; investigate whether vocal tone affects reasoning similarly to textual tone.

- Sectors: Voice assistants, call centers, automotive, robotics

- Tools/Workflows: Prosody-to-neutral-text front ends; multimodal encoders trained for tone invariance

- Assumptions/Dependencies: Requires robust ASR with emotion prosody features; multimodal benchmarks

- Governance: Regulatory guidance on linguistic perturbation testing

- What: Incorporate tone and other linguistic perturbations (slang, dialect, affect) into AI risk assessments; require reporting of robustness across pragmatic variations.

- Sectors: Policy, compliance, regulators

- Tools/Workflows: Audit protocols and disclosure templates; independent test labs

- Assumptions/Dependencies: Policy consensus; standardized metrics; resources for audits

- Personalized UX that preserves accuracy under user-preferred tone

- What: Allow users to select interaction tone preferences for style and rapport, while a hidden canonicalization layer ensures the model reasons over neutral content.

- Sectors: Consumer apps, wellbeing assistants, education

- Tools/Workflows: Dual-channel prompting: one for surface style, one for reasoning core; controllable generation modules

- Assumptions/Dependencies: Must separate presentation style from task reasoning; careful evaluation to avoid regressions

- MLOps: Tone shift monitoring as a form of data drift

- What: Treat aggregate tone distributions as a drift signal; alert when spikes in rude/overly polite inputs appear and re-run tone robustness checks.

- Sectors: Any production LLM deployment

- Tools/Workflows: Drift detectors on tone features; automated re-evaluation jobs and model/prompt rollback plans

- Assumptions/Dependencies: Accurate tone classifiers; stable baselines for comparison

- Safety and jailbreak research leveraging tone perturbations

- What: Study interplay between tone and adversarial prompts (coercion, hostility) to harden models without penalizing helpfulness; add tone perturbations to red-teaming suites.

- Sectors: Trust & safety, foundation model labs

- Tools/Workflows: Red-team corpora with controlled tone; safety metric extensions alongside accuracy

- Assumptions/Dependencies: Safety trade-offs differ by model; requires careful evaluation of unintended side effects

- Domain-specific canonicalization standards

- What: Publish domain style guides that define “canonical neutral” forms for prompts in healthcare, legal, finance, etc., to minimize variance and support reproducibility.

- Sectors: Professional associations, standards bodies

- Tools/Workflows: Open reference libraries of canonical templates; validators embedded in domain tools

- Assumptions/Dependencies: Community consensus; continuous maintenance as models and practices evolve

- Curriculum and workforce development on pragmatic robustness

- What: Create training programs that broaden “prompt engineering” beyond tricks to include robustness, measurement, and governance of pragmatic factors (tone, formality, affect).

- Sectors: Academia, corporate training, certification providers

- Tools/Workflows: Courseware with labs replicating this paper’s framework (paired tests, CIs, aggregation effects)

- Assumptions/Dependencies: Access to models/datasets; institutional buy-in
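The tone-shift monitoring idea from the list above can be sketched as a comparison of tone shares between a baseline window and a current window. The tone labels are assumed to come from some upstream classifier (not shown), and the helper names and the 0.10 threshold are hypothetical choices for illustration.

```python
from collections import Counter

def tone_shares(labels):
    """Fraction of inputs per tone label."""
    counts = Counter(labels)
    total = len(labels)
    return {tone: counts[tone] / total for tone in counts}

def tone_drift(baseline, current, threshold=0.10):
    """Return tones whose share moved by more than `threshold` (absolute) vs. baseline."""
    base, cur = tone_shares(baseline), tone_shares(current)
    drifted = {}
    for tone in set(base) | set(cur):
        delta = cur.get(tone, 0.0) - base.get(tone, 0.0)
        if abs(delta) > threshold:
            drifted[tone] = delta
    return drifted

# Hypothetical label streams from a tone classifier.
baseline = ["neutral"] * 80 + ["friendly"] * 15 + ["rude"] * 5
current = ["neutral"] * 60 + ["friendly"] * 15 + ["rude"] * 25
alerts = tone_drift(baseline, current)
```

In this example the share of rude inputs rises from 5% to 25%, so both "rude" and "neutral" are flagged; an alert like this could trigger a re-run of the tone-robustness evaluation rather than any automatic model change.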

These applications reflect the paper’s core insights: tone effects are small overall, model- and domain-dependent, concentrated in some Humanities tasks, and diminished under mixed-domain aggregation. In practice, neutral and concise prompting is a robust default; when inputs are tone-volatile, prefer tone-robust models or add a tone-normalization layer; and institutionalize measurement of tone sensitivity as part of routine evaluation and governance.
