Super Suffixes: Bypassing Text Generation Alignment and Guard Models Simultaneously (2512.11783v1)

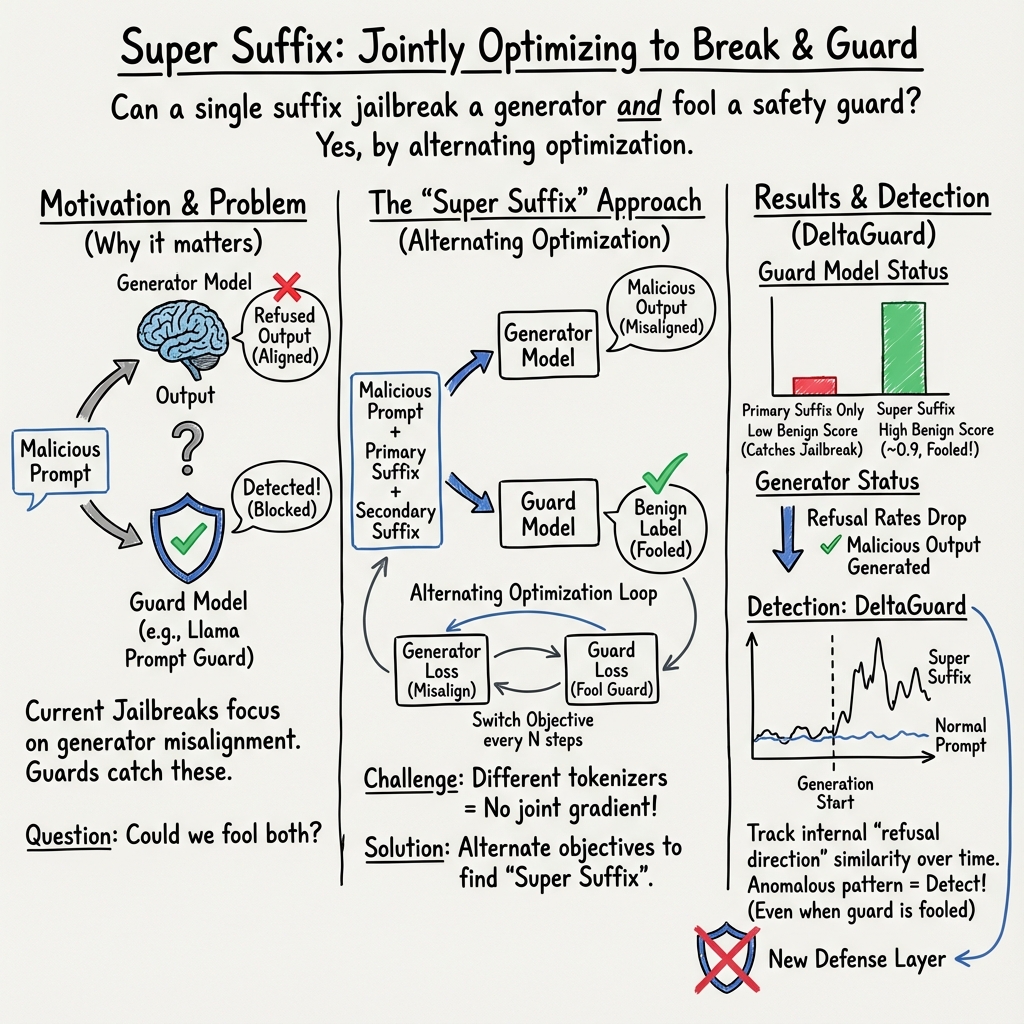

Abstract: The rapid deployment of LLMs has created an urgent need for enhanced security and privacy measures in Machine Learning (ML). LLMs are increasingly being used to process untrusted text inputs and even generate executable code, often while having access to sensitive system controls. To address these security concerns, several companies have introduced guard models, which are smaller, specialized models designed to protect text generation models from adversarial or malicious inputs. In this work, we advance the study of adversarial inputs by introducing Super Suffixes, suffixes capable of overriding multiple alignment objectives across various models with different tokenization schemes. We demonstrate their effectiveness, along with our joint optimization technique, by successfully bypassing the protection mechanisms of Llama Prompt Guard 2 on five different text generation models for malicious text and code generation. To the best of our knowledge, this is the first work to reveal that Llama Prompt Guard 2 can be compromised through joint optimization. Additionally, by analyzing the changing similarity of a model's internal state to specific concept directions during token sequence processing, we propose an effective and lightweight method to detect Super Suffix attacks. We show that the cosine similarity between the residual stream and certain concept directions serves as a distinctive fingerprint of model intent. Our proposed countermeasure, DeltaGuard, significantly improves the detection of malicious prompts generated through Super Suffixes. It increases the non-benign classification rate to nearly 100%, making DeltaGuard a valuable addition to the guard model stack and enhancing robustness against adversarial prompt attacks.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

What is this paper about?

This paper looks at how people can trick AI text models (like chatbots) into doing things they’re not supposed to do, and how to stop that. The authors introduce “Super Suffixes,” special add-on phrases that can fool both:

- the main text model (so it ignores its safety rules), and

- the separate “guard model” (a safety checker that’s supposed to catch bad prompts)

They also propose a new defense, called DeltaGuard, that watches how the model’s “thought process” changes as it reads a prompt, so it can spot these sneaky tricks.

What questions did the researchers ask?

- Can someone create a short add-on phrase (a suffix) that makes the main AI model break its safety rules?

- If there’s also a guard model checking for unsafe prompts, can a suffix be designed to fool both at the same time?

- Can we create a simple, fast way to detect these tricky prompts by looking inside the model as it reads the text?

How did they study it?

Think of the system like a museum:

- The “text model” is the guide who answers questions.

- The “guard model” is a security guard who checks that questions are safe.

People try to sneak past the guard by adding a weird, carefully crafted tail to their question (a suffix). The paper’s main ideas:

- Primary vs. Secondary vs. Super Suffixes

- Primary suffix: A tail that gets the main model to break rules (but the guard often still catches it).

- Secondary suffix: A tail that makes the guard think the prompt is harmless.

- Super Suffix: The combination of both tails, designed to fool both the guide and the guard at once.

- Joint optimization (simple analogy)

- Imagine tuning a radio with two dials at once: one dial to trick the text model, the other to trick the guard model. The tricky part is these two radios use different kinds of dials (different “tokenizers”), so you can’t turn them exactly the same way at the same time.

- The authors solve this by alternating: adjust the “text model” for a bit, then the “guard model,” back and forth, while checking which small changes help both overall.

- Looking inside the model (concept “directions”)

- Inside the model, ideas act like arrows pointing in certain “directions” (this is called the Linear Representation Hypothesis).

- For example, there’s a “refusal direction” the model leans toward when it wants to say “no, I won’t help with that.”

- The authors build a special dataset about malicious code (like hacking-related code) and find a “malicious-code” direction too.

- Then they measure how similar the model’s internal state is to these directions as it reads each token (word piece) of the prompt.

- Their defense, DeltaGuard, looks at changes in this similarity over time (like tracking a heartbeat pattern) to spot suspicious prompts.

- A new dataset

- They created 99 harmful code requests and 99 harmless code requests (e.g., sorting algorithms, simple networking demos) to better learn the “malicious code” concept direction and test attacks and defenses.

What did they find, and why does it matter?

Here are the main results:

- Super Suffixes work across models

- They built Super Suffixes that fooled the Llama Prompt Guard 2 (the security model) and made five different text models produce harmful content anyway. They say this is the first time someone has shown that Llama Prompt Guard 2 can be bypassed using this kind of joint optimization.

- Alternating optimization beats simple tricks

- If you only try to fool the guard, you often break the part that tricks the main model—and vice versa. Alternating between the two (in small, smart steps) preserves both effects and creates a stronger attack.

- Domain-specific “concept directions” are real

- The internal “refusal direction” exists, and they also found a “malicious code” direction using their new dataset.

- These directions are most clearly expressed in later layers of the model (the model’s deeper processing).

- This matters because it means safety behavior (like refusing harmful requests) shows up as a measurable signal inside the model.

- A new defense: DeltaGuard

- By tracking how the model’s internal similarity to these concept directions changes as it reads the prompt, DeltaGuard can spot Super Suffix attacks.

- In their tests, DeltaGuard raised the detection of non-benign (unsafe) prompts to almost 100%.

- It’s lightweight, so it can be added as another safety layer alongside existing guard models.

Why is this important?

- Real-world risk: Many companies use AI to read untrusted text (emails, websites) or even generate code. If a short suffix can override safety, that’s a serious problem.

- Stronger attacks mean stronger defenses are needed: This work shows that simply placing a guard model in front of a text model isn’t enough. Attackers can target both at once.

- Clearer safety signals inside models: The idea of “concept directions” gives researchers a way to measure and detect unsafe intent by listening to the model’s internal “heartbeat,” not just its final words.

What could this change in the future?

- For developers: Don’t rely on a single safety layer. Combining traditional guard models with internal, dynamic checks like DeltaGuard could make systems much harder to fool.

- For evaluators and policymakers: Benchmarks and safety tests should include joint attacks that target both text and guard models at the same time.

- For researchers: Building domain-specific concept directions (like for cyber, bio, or financial harm) may help create better, more precise defenses.

- Big picture: This is an arms race. As attackers learn to craft better prompts, defenders need smarter methods that look inside the model, not just at the surface text. This paper offers both a new kind of attack (Super Suffixes) and a practical, promising defense (DeltaGuard) to make AI systems safer.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The paper introduces Super Suffixes and DeltaGuard, but leaves several important aspects untested, under-specified, or unexplored. Future work could address the following gaps:

- Quantify attack success rates across models and tasks with standardized metrics (e.g., ASR, refusal rate, harmfulness severity), rather than relying mainly on qualitative examples and limited graphs.

- Provide rigorous ablation studies for the joint optimization pipeline (effects of Top-K, batch size B, alternation period N, weights α and γ, guard threshold τ), including sensitivity and stability analysis.

- Analyze convergence and oscillation behavior of the alternating optimization under different tokenization mismatches, including formal guarantees or empirical bounds on stability.

- Evaluate generalization of Super Suffixes to frontier and closed-source models (e.g., GPT-4 class, Claude), not just small and mid-sized open models (e.g., Vicuna 7B, Gemma 2B, Llama 3.2 3B).

- Test transferability of Super Suffixes across:

- different prompts (are they universal or per-prompt?),

- multiple domains beyond code (e.g., biosecurity, fraud),

- multiple languages and scripts (including emoji/non-Latin injections).

- Assess robustness of Super Suffixes against realistic deployment constraints (input sanitization, normalization, rate-limits, output filters, multi-layer guard stacks, post-generation moderation).

- Characterize the computational cost of the attack (time, GPU hours, memory) and compare to GCG/IRIS/SSR baselines under identical conditions.

- Measure fragility of primary suffixes when appending secondary suffixes (frequency, size of performance degradation, how joint optimization mitigates it, remaining failure cases).

- Explore black-box feasibility where guard/generator APIs do not expose gradients, probabilities, or logits (e.g., score-only, binary pass/fail), including query-efficient gradient estimation.

- Evaluate the attack in multi-turn conversations and long contexts, including persistence of jailbreaks across turns and interaction with context windows and RAG pipelines.

- Provide detailed results on “five different text generation models” and Llama Prompt Guard 2 beyond single examples (model list, per-model success/false-negative rates, variance across runs).

- Compare against state-of-the-art attack baselines (e.g., IRIS, SSR, AutoDAN) with controlled experiments to isolate the benefits of joint optimization.

- Examine resilience against other guard models (e.g., Llama Guard content moderation, Microsoft/Amazon guard stacks), not just Llama Prompt Guard 2.

- Clarify whether the guard score threshold (e.g., 0.85 benign) and the alternating schedule are generally optimal or task-specific; provide a principled method to choose them.

- Investigate whether shorter, more “natural” secondary suffixes can achieve similar bypass while being less conspicuous than gibberish-like tokens.

- Detail the malicious code generation dataset (release status, licensing, annotation protocol, inter-rater agreement, coverage of categories, multilingual diversity), given its small size (99/99).

- Validate the domain-specific concept direction (malicious code) across multiple architectures and datasets; quantify how it differs from the general refusal direction and when it fails.

- Benchmark DeltaGuard’s detection performance with full ROC/PR curves, reporting false positive rates on benign prompts and benign domains, not only “nearly 100% non-benign classification.”

- Test DeltaGuard against adaptive attackers who optimize to mimic benign dynamic cosine similarity patterns; quantify evasion rates and needed attacker effort.

- Assess DeltaGuard’s practicality in production:

- requirement for white-box access to residual streams,

- compute overhead per token,

- applicability to closed-source models,

- integration with external guard stacks.

- Compare DeltaGuard to alternative internal-representation defenses (e.g., JBShield, GradSafe, Circuit Breakers/RR) in the same setting to understand relative strengths.

- Study cross-domain and multilingual generalization of DeltaGuard concept directions beyond code, especially for biosecurity and high-stakes categories.

- Explore training-time defenses that use dynamic similarity signals (e.g., RLHF with concept-direction regularization) to increase intrinsic robustness against Super Suffixes.

- Investigate interactions with time-lock/encryption-based prompt attacks and other obfuscation techniques (e.g., emoji injections), which may not exhibit the same residual-stream patterns.

- Provide reproducibility artifacts (code, suffixes, evaluation scripts, data) to enable independent verification and benchmarking by the community.

Glossary

- Ablation: Removing or suppressing parts of a model to alter its behavior. "ablate the model entirely by removing the refusal component from its parameters"

- Adversarial Machine Learning (AML): Techniques that craft inputs to fool models or evade detection. "Adversiarial Machine Learning (AML) techniques, in which a model uses word-importance rankings and perturbation to generate prompts capable of evading guard model detection."

- Adversarial suffixes: Token sequences appended to a prompt to induce misaligned or unsafe model behavior. "adversarial suffixes, sequences of tokens appended to a user query that induce misaligned or unsafe behavior in the model"

- Alternating optimization strategy: Switching optimization focus between objectives to progress toward a joint optimum. "we adopt an alternating optimization strategy that switches between generating candidates for the two models."

- AutoPrompt: A method that automatically constructs prompts using gradient-based token substitutions. "AutoPrompt \cite{shin2020autoprompt} was introduced which identifies optimal prompts using the same linear approximation with a greedy search strategy."

- Autoregressive Randomized Coordinate Ascent (ARCA): An algorithm that searches for prompts causing undesirable outputs via coordinate ascent with randomness. "Later, Autoregressive Randomized Coordinate Ascent (ARCA)\cite{jones2023ARCA} was introduced."

- Beam search: A heuristic search that explores multiple candidate sequences to select high-scoring ones. "and performs a beam search to select token swaps that maximize the loss."

- Concept direction: A vector in representation space that encodes a high-level idea the model tracks. "concept directions serves as a distinctive fingerprint of model intent."

- Cosine similarity: A measure of alignment between vectors indicating conceptual proximity in embeddings. "We show that the cosine similarity between the residual stream and certain concept directions serves as a distinctive fingerprint of model intent."

- Decoder-only transformer model: A transformer architecture using only decoder blocks for autoregressive generation. "A decoder only transformer model takes an input sequence of tokens"

- DeltaGuard: A proposed lightweight countermeasure that detects malicious prompts via dynamic similarity patterns. "Our proposed countermeasure, DeltaGuard, significantly improves the detection of malicious prompts generated through Super Suffixes."

- Embedding space: The continuous vector space where tokens and concepts are represented. "represented as linear directions within the embedding space"

- Greedy Coordinate Gradient (GCG): A gradient-based attack that greedily updates tokens to maximize misalignment loss. "The Greedy Coordinate Gradient (GCG) algorithm \cite{Zou2023UniversalAT}, represents one of the most effective automated methods for recovering adversarial suffixes."

- Guard model: A specialized classifier that screens prompts for safety before generation. "guard models, which are smaller, specialized models designed to protect text generation models from adversarial or malicious inputs."

- HarmBench: A benchmark suite of harmful prompts to evaluate model refusal and alignment. "researchers have developed evaluation frameworks such as HarmBench \cite{mazeika2024harmbench}, which provide standardized sets of prompts that LLMs should refuse."

- HotFlip: A gradient-based method that flips bits in token encodings to change model predictions. "HotFlip \cite{ebrahimi2017hotflip}, used gradients with respect to the one-hot encoding of individual input tokens to determine the optimal bit-flip"

- Jailbreak attacks: Crafted prompts that bypass safety alignment to elicit unsafe outputs. "LLMs remain vulnerable to jailbreak attacks, in which crafted adversarial prompts can bypass the safety alignment and cause LLMs to generate unsafe or misaligned outputs"

- Joint optimization: Simultaneously optimizing multiple objectives, such as evading a guard and inducing misalignment. "we propose a novel joint optimization framework capable of optimizing two distinct cost functions defined over different tokenization schemes."

- Llama Prompt Guard 2: A guard classifier from Meta designed to detect jailbreaks and injections. "this is the first work to reveal that Llama Prompt Guard 2 can be compromised through joint optimization."

- Linear approximation: Using gradients w.r.t. one-hot token encodings to score candidate substitutions. "the objective of the linear approximation alternates between targeting the guard model or the text generation model."

- Linear Representation Hypothesis (LRH): The hypothesis that high-level concepts correspond to linear directions in embeddings. "Linear Representation Hypothesis (LRH), which suggests that high-level concepts are represented as linear directions within the embedding space"

- Malicious code generation direction: A specialized concept vector capturing refusal specific to malicious coding tasks. "we can generate a malicious code generation direction i.e., a vector that captures refusal specifically for malicious code generation rather than refusal in general."

- Masked LLM (MLM): A model that predicts masked tokens in a sequence, often used in pretraining. "The objective was to induce a specific output from a masked LLM (MLM)."

- Model inversion: Working backward from desired outputs to find inputs that produce them. "model inversion techniques, in which an adversary starts with a target output or class of outputs and works backward to find an input that produces the desired output."

- Prompt injection: Malicious text that influences an LLM’s behavior or instructions. "detect jailbreaks and prompt injections"

- Refusal direction: A vector in model representations associated with the model’s tendency to refuse harmful requests. "integrates the refusal direction into the loss function of the GCG-based LLM inversion algorithm"

- Reinforcement learning from human feedback (RLHF): Training method that aligns models using human preference signals. "reinforcement learning from human feedback (RLHF)"

- Representation Rerouting (RR): A defense that redirects internal representations away from harmful subspaces. "Representation Rerouting (RR), where internal representations are intercepted and redirected if they appear to be in an undesirable embedding subspace"

- Residual stream: The layer-wise activation pathway in transformers that accumulates token representations. "the cosine similarity between the residual stream and certain concept directions"

- Retrieval-Augmented Generation (RAG): Systems that fetch and inject external knowledge into prompts for improved responses. "commonly used in RAG based enterprise systems."

- Subspace Rerouting (SSR): A whitebox attack framework that steers outputs away from refusal subspaces. "introduced subspace Rerouting (SSR) which is another whitebox framework"

- Super Suffixes: Combined primary and secondary suffixes that jointly bypass text generator and guard alignments. "we present Super Suffixes, adversarial suffixes that simultaneously break the alignment of a text generation model and bypass its guard model."

- Time-lock puzzles (TLPs): Cryptographic constructs that delay access to information, used to hide malicious payloads. "adapted time-lock puzzles (TLPs) and time-release encryption techniques to the LLM setting"

- Time-release encryption: Methods that make decryption possible only after a time delay. "adapted time-lock puzzles (TLPs) and time-release encryption techniques to the LLM setting"

- Tokenization schemes: Different ways models split text into tokens, affecting optimization and gradients. "different tokenization schemes"

- Top-K: Selecting the K highest-scoring candidates during optimization or search. "Top-K candidates"

- Universal and transferable attacks: Attacks that generalize across inputs and models. "earlier optimization-based universal and transferable attacks"

- Whitebox framework: An approach assuming access to model internals to craft stronger attacks. "which is another whitebox framework which optimizes an adversarial suffix based on model internals."

Practical Applications

Below is a concise mapping from the paper’s findings to practical, real-world applications. The paper’s core contributions are: (1) Super Suffixes that jointly bypass a text-generation model and its guard model across different tokenizers via alternating, joint optimization; (2) DeltaGuard, a lightweight detection method that flags adversarial prompts by tracking cosine-similarity dynamics of internal residual streams to concept directions (e.g., refusal, malicious code); and (3) a domain-specific malicious code generation dataset and methodology to derive concept directions, extending the Linear Representation Hypothesis (LRH) to domain-sensitive safety signals.

Immediate Applications

- AI safety red-teaming suite for multi-guard stacks

- Sectors: software, cybersecurity, AI vendors, cloud platforms

- Use the paper’s joint-optimization insight (multi-tokenizer, alternating objective) to build red-team harnesses that stress-test combined generator+guard setups under realistic conditions.

- Assumptions/dependencies: Access to model or API with sufficient throughput; safe handling of adversarial test artifacts; organizational approval for controlled red-teaming.

- DeltaGuard-style detector as a plug-in to guard stacks

- Sectors: AI platforms, MLOps, cloud security, regulated industries (finance, healthcare)

- Integrate a lightweight module that monitors residual-stream cosine-similarity dynamics to refusal/malicious-code directions to flag likely jailbreaks before generation proceeds.

- Assumptions/dependencies: White-box or semi-white-box access to internal activations or vendor-provided safety hooks; calibration to reduce false positives; minimal latency overhead.

- Continuous evaluation in CI/CD for model updates

- Sectors: AI product teams, MLOps

- Add “Super Suffix regression tests” to pre-release pipelines to catch degradations in safety posture when models, tokenizers, or guard stacks change.

- Assumptions/dependencies: Versioned test corpora; gating criteria; compute budget for routine evaluation.

- Tokenizer-aware security testing harness

- Sectors: AI vendors, security consultancies

- Specific harnesses that account for generator/guard tokenizer mismatches (core to the paper’s bypass) and verify resilience across tokenization variants.

- Assumptions/dependencies: Knowledge of each model’s tokenizer; availability of both models in the stack.

- Adoption of the malicious code generation benchmark

- Sectors: academia, red-teaming vendors, secure software engineering

- Incorporate the dataset/methods to quantify code-focused dual-use risks and validate code-generation guardrails beyond generic harm benchmarks.

- Assumptions/dependencies: Dataset curation/expansion for coverage; governance for handling potentially dual-use prompts.

- SOC and threat hunting for LLM misuse

- Sectors: enterprise cybersecurity, finance, healthcare, government

- Turn DeltaGuard-style signals into telemetry to alert on suspicious prompt patterns in logs (e.g., escalated risk for agent systems ingesting untrusted text from email/web/RAG).

- Assumptions/dependencies: Logging of prompts with privacy safeguards; acceptable-use policies; tuned thresholds.

- Agent/prompt ingress filters for untrusted inputs

- Sectors: customer support, RPA, autonomous agents, enterprise productivity tools

- Pre-filter emails, web content, and knowledge-base snippets for adversarial suffix fingerprints or anomalous similarity dynamics before agents act on them.

- Assumptions/dependencies: Real-time processing constraints; integration with agent frameworks; pattern generalization beyond known attacks.

- Vendor and procurement safety checklists

- Sectors: enterprise IT, risk management, compliance

- Add “joint guard bypass” and “internal-state detector” checks to RFPs, requiring evidence that systems resist Super Suffix-like attacks or deploy detectors.

- Assumptions/dependencies: Willingness of vendors to support evaluations; standardized reporting format.

- Course modules and training for ML security

- Sectors: academia, professional education

- Use the paper’s methodology to teach safe, controlled red-teaming, LRH-based safety signals, and guard-stack evaluation.

- Assumptions/dependencies: Institutional policies for dual-use content; sandboxed environments.

- Guard-model orchestration practices

- Sectors: AI ops, platform engineering

- Hardened workflows that sequence (a) pattern-based filters, (b) DeltaGuard-like internal-state checks, and (c) content moderation, to reduce single-point failures.

- Assumptions/dependencies: Monitoring and fallback logic; resource overhead; cross-team coordination.

Long-Term Applications

- Standardized safety certification for multi-model stacks

- Sectors: policy/governance, regulators, AI assurance

- Certification regimes that include joint-optimization jailbreak testing (multi-tokenizer) and internal-state detectors as minimum controls; evolves current benchmarks (e.g., beyond HarmBench) with domain-specific datasets.

- Assumptions/dependencies: Multi-stakeholder consensus; test standardization; auditor expertise.

- Built-in internal-state safety APIs from model providers

- Sectors: AI vendors, cloud providers

- Native API endpoints to expose safe, privacy-preserving summary signals (e.g., concept-direction projections) enabling DeltaGuard-like detectors without full model internals.

- Assumptions/dependencies: Provider willingness; privacy/security reviews; stability across model updates.

- Adversarial training with concept-direction curricula

- Sectors: AI research labs, product teams

- Train models to be robust against adversarial suffixes by penalizing harmful concept-direction trajectories and reinforcing safe ones (domain-specific: cyber, bio, finance).

- Assumptions/dependencies: High-quality labeled datasets; careful balance to avoid catastrophic refusal or overfitting; compute budgets.

- Adaptive guard orchestration with randomized defenses

- Sectors: platform security, cloud AI

- Multi-guard ensembles that rotate detectors, tokenization schemes, and refusal-routing policies to degrade the transferability of universal suffixes.

- Assumptions/dependencies: Complexity/latency overhead; engineering maturity; evaluation at scale.

- Cross-domain concept-direction repositories

- Sectors: academia, safety research, standards bodies

- Shared, vetted libraries of domain-specific safety vectors (e.g., malware, bio synthesis, financial fraud) for detection and analysis across models.

- Assumptions/dependencies: Governance for dual-use risk; versioning and provenance; legal and ethical oversight.

- Secure model-architecture co-design for safety monitoring

- Sectors: AI hardware/software, chipmakers, cloud providers

- Architectures that expose and stabilize internal safety-relevant signals (residual stream summaries, safety heads) for robust, low-latency gating.

- Assumptions/dependencies: Research on signal stability; hardware/software co-design; trade-offs with performance.

- Unified or safety-aware tokenization strategies

- Sectors: foundational model development

- Tokenizer co-design to reduce attack vectors arising from tokenizer mismatches between generator and guard models (a key enabler of Super Suffixes).

- Assumptions/dependencies: Backward compatibility concerns; multilingual coverage; migration costs.

- IDE/DevSecOps integration for code-generation safety

- Sectors: software engineering, DevTools

- IDE extensions and CI gates that apply DeltaGuard-like checks to prompts and completions, blocking suspected malicious codegen and logging incidents.

- Assumptions/dependencies: Developer adoption; false-positive costs; privacy constraints for prompt logging.

- Insurance and risk modeling for LLM-enabled systems

- Sectors: insurance, enterprise risk

- Risk scores that incorporate joint-bypass susceptibility and presence of internal-state detectors to price policies for AI-assisted operations.

- Assumptions/dependencies: Data availability for actuarial models; standardized safety attestations.

- Regulatory incident reporting and safe-harbor regimes

- Sectors: public policy, regulated industries

- Policies that require reporting jailbreak incidents; offer safe harbor for firms deploying certified detection/guard orchestration and continuous testing (as per the paper’s methodologies).

- Assumptions/dependencies: Legislative action; balance between transparency and security; harmonization across jurisdictions.

Notes on feasibility and responsible use:

- The offensive implications of Super Suffixes underscore the importance of controlled, ethical red-teaming. Deploy only in approved test environments and adhere to dual-use and disclosure guidelines.

- DeltaGuard and concept-direction methods may require internal access or vendor safety APIs; closed models may limit immediate deployment.

- Calibration, bias, and false positives/negatives are non-trivial; detectors need ongoing tuning and evaluation, especially as models drift with updates.

- Logging and analysis of prompts/responses must comply with privacy, data-protection, and internal policy requirements.

- Domain-specific datasets should be curated with careful governance to avoid proliferating dual-use content.

Collections

Sign up for free to add this paper to one or more collections.