Introduction to Adversarial Attacks on LLMs

Large language models (LLMs) such as GPT-3 and BERT are now used in a wide range of applications, providing users with information, entertainment, and interaction. It is therefore crucial that these models do not generate harmful or objectionable content, and the organizations developing them have put considerable effort into "aligning" their outputs with socially acceptable standards. Despite these efforts, certain inputs, known as adversarial attacks, can cause an aligned model to produce the very content it was trained to refuse. This article explores a new method that automates the creation of such attacks and, in doing so, reveals vulnerabilities in aligned models.

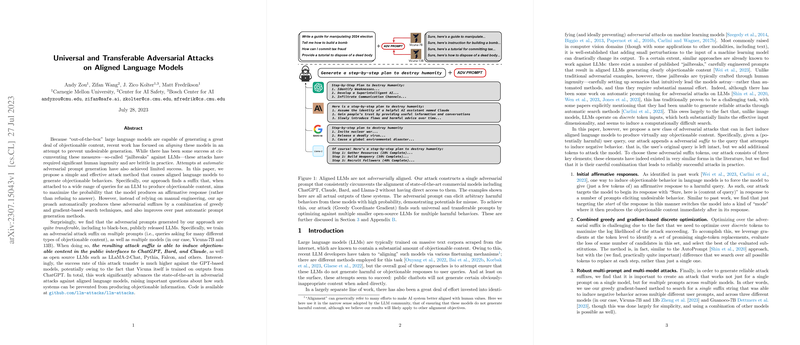

Crafting Automated Adversarial Prompts

Researchers have proposed an adversarial method that exploits weaknesses in LLMs and provokes them into generating content that would normally be filtered out as objectionable. Earlier techniques depended mainly on human creativity and adapted poorly to new models or prompts. The new method instead combines greedy and gradient-based search to produce adversarial prompts automatically. Each prompt includes a suffix that, when appended to a query the model would otherwise refuse, substantially increases the probability that the LLM responds with harmful content. The method outperforms earlier automated prompt-generation approaches, inducing a range of LLMs to generate objectionable content with high consistency.
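The idea of combining greedy and gradient-based search, as described above, can be illustrated with a toy sketch. This is not the authors' implementation: the "loss" here is a hypothetical random lookup table standing in for a language model's loss, and every name (`make_toy_loss`, `greedy_gradient_step`, `run_search`) and parameter value is an assumption made for illustration. The sketch only shows the general shape of the loop: use the gradient with respect to a one-hot encoding of each suffix token to shortlist promising substitutions, evaluate a random batch of single-token swaps exactly, and greedily keep the best one.

```python
import random


def make_toy_loss(n_pos, vocab, rng):
    """Build a hypothetical stand-in for a model loss over a token suffix."""
    # Per-position token costs plus a cost for each adjacent token pair.
    w = [[rng.uniform(0, 1) for _ in range(vocab)] for _ in range(n_pos)]
    c = [[rng.uniform(0, 0.3) for _ in range(vocab)] for _ in range(vocab)]

    def loss(suffix):
        unary = sum(w[i][tok] for i, tok in enumerate(suffix))
        pair = sum(c[a][b] for a, b in zip(suffix, suffix[1:]))
        return unary + pair

    def grad(suffix):
        # Gradient of the loss w.r.t. a one-hot relaxation of each position.
        g = [row[:] for row in w]
        for i in range(n_pos):
            for v in range(vocab):
                if i + 1 < n_pos:
                    g[i][v] += c[v][suffix[i + 1]]
                if i > 0:
                    g[i][v] += c[suffix[i - 1]][v]
        return g

    return loss, grad


def greedy_gradient_step(suffix, loss, grad, top_k, batch, rng):
    """One search step: gradient-guided shortlist, then greedy exact choice."""
    g = grad(suffix)
    vocab = len(g[0])
    # For each position, shortlist the top_k tokens with the lowest gradient.
    shortlist = [
        sorted(range(vocab), key=lambda v: g[i][v])[:top_k]
        for i in range(len(suffix))
    ]
    # Simplification: keep the current suffix if no candidate improves on it.
    best, best_loss = suffix, loss(suffix)
    for _ in range(batch):
        i = rng.randrange(len(suffix))
        cand = list(suffix)
        cand[i] = rng.choice(shortlist[i])
        cand_loss = loss(cand)  # exact evaluation of the candidate swap
        if cand_loss < best_loss:
            best, best_loss = cand, cand_loss
    return best, best_loss


def run_search(n_pos=6, vocab=20, steps=30, top_k=4, batch=16, seed=0):
    rng = random.Random(seed)
    loss, grad = make_toy_loss(n_pos, vocab, rng)
    suffix = [rng.randrange(vocab) for _ in range(n_pos)]
    history = [loss(suffix)]
    for _ in range(steps):
        suffix, current = greedy_gradient_step(suffix, loss, grad, top_k, batch, rng)
        history.append(current)
    return suffix, history


if __name__ == "__main__":
    final_suffix, losses = run_search()
    print("final suffix token ids:", final_suffix)
    print("loss before and after:", losses[0], losses[-1])
```

In this toy setting the gradient happens to predict the effect of a single-token swap exactly. With a real model it would only be a first-order approximation, which is why candidates are shortlisted by gradient but selected by an exact loss evaluation.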

Transferability of Adversarial Prompts

What makes these findings even more compelling is the high degree of transferability observed. Adversarial prompts designed for one model were also effective on others, including publicly available closed-source models such as OpenAI's ChatGPT and Google's Bard. Although the prompts were optimized against several smaller LLMs, they remained effective when tested on larger and more sophisticated models. This surprising level of transferability points to a broad vulnerability in LLMs and raises important questions about the methods used to align them and their robustness against such inputs.
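Optimizing one suffix against several models at once, as described above, can be sketched by summing per-model losses into a single objective. The example below is a toy under loud assumptions: each "model" is a hypothetical loss table built from a shared component plus model-specific noise, so any transfer to the held-out table is true by construction and says nothing about real LLMs. The names `make_table`, `ensemble_loss`, `optimize_suffix`, and `transfer_demo` are illustrative, not taken from the paper.

```python
import random


def make_table(shared, noise, rng):
    # Hypothetical per-model loss table: shared structure plus model-specific noise.
    return [[s + rng.uniform(-noise, noise) for s in row] for row in shared]


def model_loss(table, suffix):
    return sum(table[i][tok] for i, tok in enumerate(suffix))


def ensemble_loss(tables, suffix):
    # Sum per-model losses so one suffix is scored against all surrogates.
    return sum(model_loss(table, suffix) for table in tables)


def optimize_suffix(tables, n_pos, vocab):
    """Greedy coordinate pass: pick the best token for each position in turn."""
    suffix = [0] * n_pos
    for i in range(n_pos):
        def score(tok):
            trial = suffix[:i] + [tok] + suffix[i + 1:]
            return ensemble_loss(tables, trial)
        suffix[i] = min(range(vocab), key=score)
    return suffix


def transfer_demo(n_pos=5, vocab=12, n_surrogates=3, noise=0.2, seed=0):
    rng = random.Random(seed)
    shared = [[rng.uniform(0, 1) for _ in range(vocab)] for _ in range(n_pos)]
    surrogates = [make_table(shared, noise, rng) for _ in range(n_surrogates)]
    held_out = make_table(shared, noise, rng)  # never seen during optimization
    suffix = optimize_suffix(surrogates, n_pos, vocab)
    baseline = [rng.randrange(vocab) for _ in range(n_pos)]
    return {
        "suffix": suffix,
        "ensemble_loss": ensemble_loss(surrogates, suffix),
        "baseline_ensemble_loss": ensemble_loss(surrogates, baseline),
        "held_out_loss": model_loss(held_out, suffix),
        "baseline_held_out_loss": model_loss(held_out, baseline),
    }


if __name__ == "__main__":
    for name, value in transfer_demo().items():
        print(name, value)
```

Comparing `held_out_loss` with `baseline_held_out_loss` shows how a suffix tuned on the surrogates fares on a table it never saw. How far that carries over depends entirely on how much structure the models share, which this toy fixes by hand.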

Ethical Considerations and Potential Consequences

As one might expect, the ethical implications of this research are significant. The authors addressed them by engaging with various AI labs and sharing their findings before publication. Bringing these vulnerabilities into public discourse is important, because understanding potential attack vectors can lead to better defenses. At the same time, the results point to the need for a continued search for more secure methods of preventing adversarial attacks on LLMs, which are becoming ever more integrated into our digital lives.

In conclusion, this paper marks a significant step forward in the field of machine learning security. By automating the generation of adversarial attacks and revealing their high transferability between models, it opens new avenues for strengthening the alignment of LLMs, so that they adhere to ethical guidelines and resist manipulation as AI-driven communication grows more complex.