Reasoning Models Ace the CFA Exams (2512.08270v1)

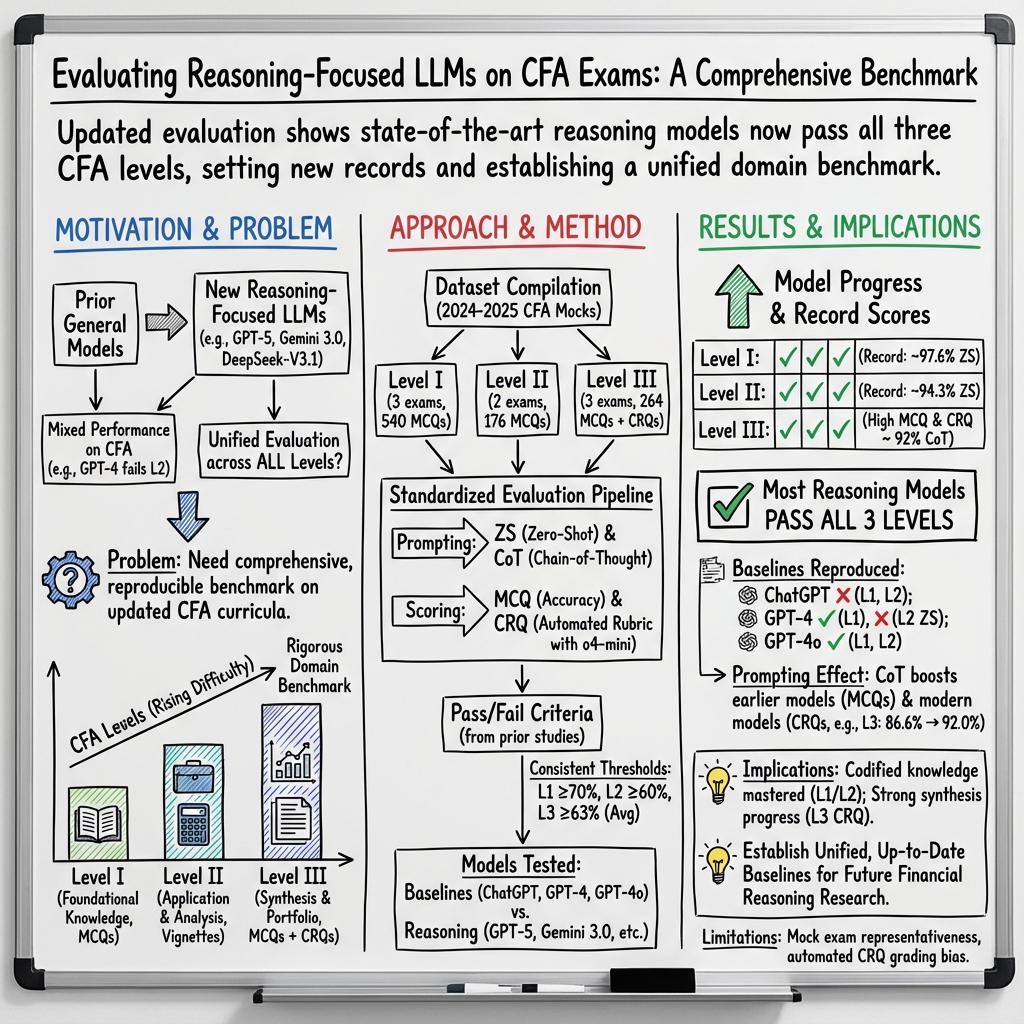

Abstract: Previous research has reported that LLMs demonstrate poor performance on the Chartered Financial Analyst (CFA) exams. However, recent reasoning models have achieved strong results on graduate-level academic and professional examinations across various disciplines. In this paper, we evaluate state-of-the-art reasoning models on a set of mock CFA exams consisting of 980 questions across three Level I exams, two Level II exams, and three Level III exams. Using the same pass/fail criteria from prior studies, we find that most models clear all three levels. The models that pass, ordered by overall performance, are Gemini 3.0 Pro, Gemini 2.5 Pro, GPT-5, Grok 4, Claude Opus 4.1, and DeepSeek-V3.1. Specifically, Gemini 3.0 Pro achieves a record score of 97.6% on Level I. Performance is also strong on Level II, led by GPT-5 at 94.3%. On Level III, Gemini 2.5 Pro attains the highest score with 86.4% on multiple-choice questions while Gemini 3.0 Pro achieves 92.0% on constructed-response questions.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Overview

This paper looks at how well modern AI “reasoning models” can handle the CFA exams. The CFA (Chartered Financial Analyst) certification is a tough, three-level program for people who work in investing and finance. The authors test several AI models on mock CFA exams to see if these AIs can pass—and even excel—at different types of finance questions, from basic multiple-choice to complex “show your work” problems.

What were they trying to find out?

The researchers wanted to answer simple, practical questions:

- Can today’s best AI models pass all three CFA levels?

- Do they do better on basic questions or more complex, real-world cases?

- Does asking the AI to “show its work” help it get better scores?

How did they test the models?

Think of the three CFA levels like a video game with harder stages:

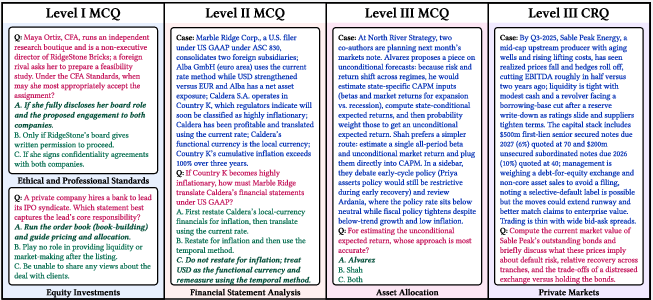

- Level I: Lots of single multiple-choice questions about foundations (like definitions and simple calculations).

- Level II: Case-based multiple-choice questions (you read a mini-story or “vignette” about a company or situation, then answer several related questions).

- Level III: The hardest mix—both case-based multiple-choice and constructed-response questions (these are open-ended, where you explain your reasoning and write out answers, like a short homework problem).

Here’s how they ran the tests:

- They used 980 questions from recent mock exams across Levels I, II, and III.

- They tested older AIs (like ChatGPT and GPT-4) and newer reasoning models (like GPT-5, Gemini 2.5 Pro, Gemini 3.0 Pro, Grok 4, Claude Opus 4.1, and DeepSeek-V3.1).

- They tried two styles of prompting:

- Zero-Shot (ZS): “Just give the answer.”

- Chain-of-Thought (CoT): “Show your work step-by-step and then give the answer.”

- For open-ended Level III answers, they used another AI as an automated “robot grader” with a strict rubric, assigning points like a teacher.

- They used pass/fail rules similar to real CFA standards (for example, getting above certain percentage thresholds overall and in each topic).

Main findings and why they matter

The results are impressive and show clear progress:

- Older models struggled. ChatGPT often failed. GPT-4 improved with “show your work” but still had trouble, especially on Level II.

- Newer reasoning models did much better—most passed all three levels.

- Standout scores:

- Level I: Gemini 3.0 Pro hit a record 97.6% (extremely high).

- Level II: GPT-5 led with 94.3%.

- Level III: Gemini 2.5 Pro was best on multiple-choice (86.4%), while Gemini 3.0 Pro was best on written answers (constructed-response) with 92.0%.

- “Show your work” (Chain-of-Thought) helped a lot on open-ended Level III tasks, but sometimes slightly lowered multiple-choice scores for some models. This suggests modern AIs already do very well on straightforward questions, and detailed reasoning is especially helpful for complex, write-out problems.

- Topic strengths and weaknesses:

- The newest AIs are strong in math-heavy areas (Quantitative Methods), Economics, and Investments.

- Ethics and Professional Standards are still harder for many models, showing that judgment and ethical reasoning remain challenging.

These findings matter because they show AI is now capable of handling tough finance exams, not just memorizing facts. That opens doors for powerful study tools, workplace assistants, and better support for analysts.

Implications and impact

In simple terms:

- AI is getting really good at finance test questions—especially in Levels I and II—and is catching up fast on the hardest parts of Level III.

- This could help students and professionals study smarter, check their work, and practice real-world finance decisions.

- But there are important cautions:

- The Level III grading used an automated AI “grader,” which can sometimes favor longer answers (a “verbosity bias”). Human expert graders would be more precise.

- There’s a chance some questions or similar versions exist in AI training data (called “data contamination”), which could make scores look better than pure reasoning.

- The Level III mock exams came from a third-party source, not the official institute, so future work should use official materials for maximum accuracy.

Overall, the paper shows that today’s reasoning models don’t just know finance—they can apply it. If we keep improving fair testing and human-checked grading, AI could become a trusted helper for learning, working, and making careful financial decisions.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise, actionable list of what remains missing, uncertain, or unexplored in the paper’s evaluation of reasoning models on CFA exams:

- Use of non-official Level III materials: Results rely on AnalystPrep mock exams for Level III; it remains unknown how performance transfers to official CFA Institute Level III mock exams and the full range of official constructed-response (CRQ) complexity and distractors.

- Human grading vs. LLM-based CRQ scoring: Level III CRQs are graded by an LLM (o4-mini); the extent of verbosity bias, rubric adherence, and detection of subtle logical errors is unquantified. A human-verified ground truth by CFA charterholders and inter-rater reliability analysis are needed.

- Alignment with official CFA scoring and pass standards: The pass/fail thresholds (e.g., topic-level minima; Level III averaging rules; 63% heuristic) are not official. The impact of using CFA’s actual Minimum Passing Score (MPS), topic weightings, partial credit rules, and official CRQ point structures is untested.

- Psychometric validity and difficulty calibration: Item difficulty, discrimination, and reliability (e.g., via IRT, Cronbach’s alpha) are not reported. Without psychometrics or human candidate baselines on the same items, claims of “mastery” are weakly grounded.

- Generalization across Level III Pathways: Coverage of all 2025 Pathways (Portfolio Management, Private Markets, Private Wealth) is not explicitly tested; performance differences across pathways and case types remain unknown.

- Data contamination assessment: The study notes possible indirect leakage but provides no concrete contamination checks (e.g., de-duplication against public corpora, novelty auditing, overlap scans). The degree to which scores reflect memorization versus reasoning is unresolved.

- Exam realism and constraints: Time limits, fatigue, and calculator policies (and whether tool augmentation is allowed or used) are not simulated. Latency, throughput, and time-to-answer per question are unreported, leaving real exam feasibility unanswered.

- Snapshot drift and reproducibility: The sensitivity of results to model snapshot updates and provider-side changes is unknown. Number of runs, seeds, and strict reproducibility protocols (beyond temperature settings) are insufficiently documented.

- Prompt sensitivity and inference strategies: Only Zero-Shot and Zero-Shot CoT are tested. The impact of self-consistency, majority voting, reflection, verification, or tool-augmented reasoning (e.g., Python/calculator) on both MCQs and CRQs remains unexplored.

- CoT regressions on MCQs: The observed performance drops under CoT for some models (e.g., Gemini 3.0 Pro, GPT-5) are not investigated. Mechanisms behind CoT’s negative/positive effects and whether structured reasoning templates mitigate regressions are open questions.

- Robustness to paraphrase and distractors: Model stability under paraphrased vignettes, adversarial distractors, minor numerical perturbations, and variable table/chart formats is not assessed.

- Ethics domain error characterization: Ethics shows relatively higher error rates, but the paper lacks a qualitative taxonomy of failure modes (e.g., misapplication of the Code and Standards, stakeholder conflicts, disclosure nuances) to guide targeted model improvements.

- Rounding and financial convention adherence: The impact of CFA-specific calculation details (e.g., day-count conventions, rounding rules, tax treatments) on model accuracy and consistency is not measured.

- Output parsing and evaluation pipeline transparency: The robustness of answer extraction (e.g., enforcing “A/B/C only”), handling of off-format outputs, and end-to-end evaluation code transparency are insufficiently described for external replication.

- Topic and format representativeness: The paper reports topic weight comparisons, but potential mismatches in item-set sizes, option structures, and vignette length versus official exams could affect comparability; these differences are not quantified.

- Context-length and truncation effects: With longer vignettes, the role of context window limits, token truncation, and memory spillover on accuracy is unexamined.

- Calibration and confidence: Models’ probability calibration, overconfidence/underconfidence (especially in Ethics and CRQs), and the relationship between confidence and correctness are not analyzed.

- Cost and practicality: Inference cost, token consumption, and throughput at scale (e.g., for training or practice use) are not reported, limiting practical deployment considerations.

- External validity beyond exams: Claims of approaching “senior-level proficiency” are not supported by evaluations on real-world finance tasks (live data analysis, portfolio simulation, compliance memos, client communication), leaving workplace relevance uncertain.

- Multilingual generalization: Performance on non-English CFA content and localization effects are not explored.

- LLM grader validation protocol: Agreement between multiple LLM graders, bias toward longer answers, and blind scoring vs. rubric-only scoring are not benchmarked, leaving CRQ scoring reliability uncertain.

- Dataset and code availability: Proprietary/paywalled items prevent open replication; a plan for releasing a contamination-controlled, rights-cleared benchmark (or synthetic-but-validated proxies) is absent.

Glossary

- Asset Allocation: The process of dividing investments among different asset categories to balance risk and return. "Asset Allocation (15â20\%)"

- Automated evaluator: An AI system used to assess and score responses based on predefined criteria. "we employ o4-mini as an automated evaluator."

- Benchmark contamination: When evaluation datasets overlap with model training data, inflating performance by memorization rather than reasoning. "benchmark contamination, where model performance reflects training data contamination rather than reasoning capability."

- Chain-of-Thought (CoT): A prompting technique that asks models to articulate step-by-step reasoning before giving an answer. "Chain-of-Thought (CoT): We use a Zero-Shot Chain-of-Thought approach, instructing the model to "think step-by-step" and "explain your reasoning" before generating the final answer."

- Constructed-response case studies: Open-ended, scenario-based questions requiring written analysis and solutions. "11 constructed-response case studies (totaling 44 CRQs)"

- Constructed-response questions (CRQs): Open-ended questions where candidates must produce and justify answers rather than select from options. "a combination of item sets and constructed-response questions (CRQs)"

- Corporate Issuers: A CFA curriculum topic focusing on corporate finance decisions, capital structure, and governance. "substantially revising topics such as Corporate Issuers and Fixed Income"

- Derivatives and Risk Management: The use of financial derivatives to hedge, speculate, or manage portfolio risk. "Performance Measurement and Derivatives and Risk Management are difficult for even the most advanced models."

- Distractor: A plausible but incorrect answer choice designed to test understanding and reduce guessing. "distractor subtlety."

- Ethical Standards: Principles and rules governing professional conduct and integrity in finance. "Ethical Standards (10â15\%)"

- Equity Investments: A CFA topic covering valuation, markets, and securities related to ownership stakes in companies. "Equity Investments"

- Financial Reporting: The analysis and interpretation of financial statements and accounting standards. "Financial Reporting"

- Fixed Income: Securities that pay fixed periodic income, such as bonds; includes valuation and risk analysis. "Fixed Income"

- Grading rubric: A structured set of criteria used to evaluate and score answers consistently. "the AnalystPrep grading rubric."

- Item set: A case-based group of multiple-choice questions sharing a common vignette/context. "case-based multiple-choice item sets (vignettes)"

- Model snapshot: A date-stamped version of a model used to ensure reproducible evaluation. "snapshots (date-stamped versions of models)"

- Pass/Fail criteria: Explicit thresholds that determine whether a candidate (or model) passes an exam. "Pass/Fail criteria."

- Pathways: Specialized tracks within Level III focusing on particular practice areas. "the specialized Pathways (30â35\%) in either Portfolio Management, Private Markets, or Private Wealth."

- Performance Measurement: Methods to evaluate returns and risk-adjusted performance of portfolios. "Performance Measurement (5â10\%)"

- Portfolio Construction: The process of selecting assets and weights to build portfolios aligned with objectives and constraints. "Portfolio Construction (15â20\%)"

- Portfolio Management: The overarching discipline of setting objectives, asset allocation, implementation, and monitoring of portfolios. "either Portfolio Management, Private Markets, or Private Wealth."

- Private Markets: Investments in non-publicly traded assets, such as private equity or private debt. "either Portfolio Management, Private Markets, or Private Wealth."

- Private Wealth: Financial planning and investment management for high-net-worth individuals and families. "either Portfolio Management, Private Markets, or Private Wealth."

- Temperature: A generation parameter controlling randomness in model outputs; lower values reduce variability. "the temperature set to 0 to minimize generation randomness."

- Verbosity bias: A grading tendency to favor longer, more comprehensive-sounding answers regardless of precision. "a verbosity bias, where judges favor longer, comprehensive-sounding responses even if they lack specific technical precision."

- Vignette: A narrative case providing context for a set of related questions. "case-based multiple-choice item sets (vignettes)"

- Zero-Shot (ZS): A prompting setting where the model answers without examples or prior task-specific training. "Zero-Shot (ZS): The model is presented with the question context and instructed to output the final answer directly."

Practical Applications

Overview

This paper shows that current reasoning-focused LLMs (e.g., Gemini 3.0 Pro, GPT‑5, Grok 4, Claude Opus 4.1, DeepSeek‑V3.1) can pass all three CFA levels on recent mock exams, achieving near-perfect scores on Levels I–II and strong performance on Level III, including constructed-response (CRQ) tasks. The evaluation pipeline (topic-balanced dataset, zero-shot vs. chain-of-thought prompting, rubric-based automated grading, pass/fail criteria) offers a replicable blueprint for domain competence testing. Below are practical applications stemming from these findings and methods.

Immediate Applications

- AI CFA study copilot for candidates (Sector: Education/EdTech)

- Adaptive practice with item sets mirroring 2024–2025 topic weights; targeted remediation on weak topics; CRQ practice with rubric-aligned feedback; CoT for open-ended explanations; ZS for quick checks.

- Tools/products: Personalized CFA prep platform; “mock exam generator” that refreshes vignettes and distractors; CRQ feedback assistant.

- Assumptions/Dependencies: Licensing for practice content; mitigation of LLM grader verbosity bias; periodic updates to match curriculum changes; human review for high-stakes feedback.

- Analyst copilot for finance teams (Sector: Finance/Software)

- Automates Level I–II tasks: ratio calculations, statement analysis, valuation walkthroughs, and investment tools; drafts Level III-style memos (asset allocation rationale, portfolio tradeoffs).

- Tools/products: “Analyst CoPilot” embedded in research and PM workflows (e.g., FactSet/Bloomberg plugins), with ZS for crisp computation and CoT for narrative synthesis.

- Assumptions/Dependencies: Data access and governance; human oversight for Ethical Standards judgments; guardrails to prevent hallucinated figures; audit logs.

- Investment memo and client letter drafting (Sector: Finance/Wealth/IR)

- Generates structured CRQ‑style memos: market outlooks, portfolio rationales, rebalancing rationales, and client-friendly summaries.

- Tools/products: Investment committee memo generator; client-reporting assistant with template libraries; red-team module to stress-test arguments.

- Assumptions/Dependencies: Compliance pre-check; approval workflows; fact retrieval from trusted data.

- Ethics and compliance pre-checker (Sector: Finance/Compliance)

- Pre-screens research notes and marketing materials for CFA Ethical Standards issues; flags conflicts, disclosures, suitability, and misrepresentation risks.

- Tools/products: “Ethics risk radar” integrated into document pipelines; explainer highlighting policy references and required edits.

- Assumptions/Dependencies: Recognized limitation—Ethical Standards remain a higher-error area for top models; must be advisory with human compliance sign-off.

- HR and L&D assessment for finance roles (Sector: Enterprise/HR)

- Internal knowledge checks mirroring topic weights across levels; identifies skill gaps; routes staff to targeted micro-lessons.

- Tools/products: Finance capability assessments; CFA-topic-aligned quizzes; role-based badges.

- Assumptions/Dependencies: Psychometric validation; anti-cheat measures; clear separation between formative and summative uses.

- Domain-readiness evaluation harness for AI procurement (Sector: Software/Enterprise)

- Reuses the paper’s pass/fail criteria, topic coverage mapping, and ZS/CoT protocols to certify model readiness for financial tasks.

- Tools/products: “Finance LLM Benchmark Suite”; dashboard tracking model scores, topic-level errors, and prompting effects.

- Assumptions/Dependencies: Access to current, low-contamination datasets; cost control for model evaluations; version pinning and snapshot logging.

- Automated grading triage for finance courses (Sector: Education)

- First-pass scoring of CRQs with rubric-based LLM evaluator; provides structured feedback before human grading.

- Tools/products: Grading assistant for business school/fintech courses; feedback explainer tied to rubric criteria.

- Assumptions/Dependencies: Human-in-the-loop for final grades; mitigation of verbosity bias; fairness audits and calibration.

- Prompting policy/orchestration in enterprise (Sector: Software/Platform)

- Applies empirical insight: ZS for closed-ended/MCQ-like tasks; CoT for open-ended/CRQ synthesis; dynamic routing by task type.

- Tools/products: Prompt router and governance layer that auto-selects reasoning mode per task and model.

- Assumptions/Dependencies: Telemetry on outcome quality; continuous A/B testing; model-specific tuning.

- Benchmark rotation and contamination mitigation (Sector: Research/Platform)

- Uses paywalled, newly updated materials to reduce contamination; rotates item sets periodically; tracks drift.

- Tools/products: Rotating benchmark curator; data lineage tracker; contamination checks via embedding and overlap detection.

- Assumptions/Dependencies: Content licensing; reproducibility vs. secrecy trade-offs; sustainable refresh cadence.

- Retail investor education assistant (Sector: Consumer Finance/Education)

- Teaches investing concepts via exam-like Q&A and vignettes; explains risk/return, diversification, and basic valuation.

- Tools/products: “CFA-lite” learning app for retail; scenario simulators for asset allocation education.

- Assumptions/Dependencies: Clear disclaimers (“not investment advice”); suitability and risk-warning templates; no individualized recommendations without regulation-compliant guardrails.

- Policy and procurement criteria for public institutions (Sector: Policy/Government)

- Adopt domain-exam standards (thresholds, topic coverage) when procuring AI for finance-related tasks in agencies.

- Tools/products: “AI competence badges” tied to pass/fail thresholds; agency playbooks for model selection.

- Assumptions/Dependencies: Transparent test sets; conflict-of-interest controls; periodic re-certification.

Long-Term Applications

- Regulated AI investment advisor with explainability (Sector: Finance/Wealth/FinTech)

- An AI RIA/robo-advisor that meets competency thresholds, produces CRQ-grade rationales, and maintains auditable reasoning logs; starts with human co-sign, progresses to more autonomy.

- Tools/products: Advisory engine with suitability checks, compliance logging, and post-hoc explanation; supervisory control dashboards.

- Assumptions/Dependencies: Regulatory approval and liability frameworks; robust out-of-sample performance; stress tests under market shifts.

- AI junior-to-senior analyst progression (Sector: Finance/Asset Management)

- Progressive delegation of research, modeling, and portfolio construction tasks as models reach Level III-grade synthesis; AI participates in investment committee prep.

- Tools/products: Role-based capability gates; multi-model consensus; risk/ethics “second reader” agents.

- Assumptions/Dependencies: Proven reliability on ethical judgments; incident response playbooks; continuous model revalidation.

- Official or high-stakes CRQ grading with AI (Sector: Education/Certification)

- LLM-driven scoring for constructed responses with human calibration; reduces grading backlog; enables richer formative assessment.

- Tools/products: Secure grading platform with rubric alignment, calibration sets, fairness audits, and bias controls (e.g., verbosity penalties/normalization).

- Assumptions/Dependencies: Stakeholder acceptance; rigorous validity studies; adversarial robustness and privacy guarantees.

- Standardized licensing/certification of AI systems via professional exams (Sector: Policy/Standards)

- Cross-institution frameworks using exams like CFA to license AI systems for specific regulated tasks; continuous monitoring and re-licensing.

- Tools/products: Certification bodies, audit trails, and revocation procedures; standardized reporting of topic-level competencies and blind evals.

- Assumptions/Dependencies: Multi-stakeholder governance; anti-gaming controls; legal clarity on accountability.

- Cross-domain professional exam suite (Sector: Research/Platform)

- Unified benchmark portfolio (Finance/Law/Medicine/Accounting) to measure model readiness for enterprise deployment across regulated domains.

- Tools/products: Exam orchestration platform; domain-specific pass/fail profiles; drift and degradation monitors.

- Assumptions/Dependencies: Current, contamination-resistant exams; licensing; consistent scoring protocols.

- Ethics-centric model improvement pipeline (Sector: AI/ML)

- Targeted data/programmatic RL to close gaps in Ethical Standards; scenario-rich counterfactuals and adversarial cases to improve normative reasoning.

- Tools/products: Ethics case generator; red-team datasets; reinforcement learning with rule conformance rewards.

- Assumptions/Dependencies: Access to high-quality, diverse ethics cases; consensus rubrics; avoiding overfitting to narrow policy sets.

- Data-contamination–resistant evaluation ecosystems (Sector: Research/Standards)

- Ephemeral, paywalled, and randomized content with secure enclaves; provenance tracking and watermarking of evaluation artifacts.

- Tools/products: Secure benchmark vaults; forensic contamination detectors; reproducible yet rotating testbeds.

- Assumptions/Dependencies: Publisher partnerships; infrastructure funding; standardized disclosure norms.

- Assurance and safety for financial LLMs (Sector: Risk/Assurance)

- Stress testing across market regimes; counterfactual explanations; uncertainty and calibration tooling; runtime monitors for ethics/compliance.

- Tools/products: Reliability dashboards; reasonableness checks vs. priors; ensemble and abstention mechanisms.

- Assumptions/Dependencies: Access to historical and synthetic regime data; thresholds for auto-escalation; governance alignment.

- AI-delivered finance curricula with credible assessments (Sector: Education)

- End-to-end AI course delivery: personalized paths, authentic assessments, and explainable grading; bridges to professional certifications.

- Tools/products: Courseware copilot; exam simulators with rubric-grounded feedback; progression analytics.

- Assumptions/Dependencies: Accreditation acceptance; robust proctoring; fairness and accessibility requirements.

- Autonomous portfolio construction agent participating in IC (Sector: Asset Management)

- Long-horizon agent that drafts proposals, runs scenario/derivative hedging analyses, and debates tradeoffs; integrates with risk engines and OMS.

- Tools/products: IC copilot with hypothesis tracking and post-decision analysis; integration to risk systems (VaR, stress tests).

- Assumptions/Dependencies: Proven generalization beyond exam-style tasks; strong data integration; human veto power and controls.

Notes on feasibility across all applications:

- Dependencies common to many use cases: access to high-performing reasoning models; cost/latency management; secure data integration; licensing for exam/learning content; human oversight—especially for ethics; mitigation of verbosity bias in LLM grading; ongoing evaluation to detect degradation, contamination, and distribution shift.

Collections

Sign up for free to add this paper to one or more collections.