Symmetries at the origin of hierarchical emergence (2512.00984v1)

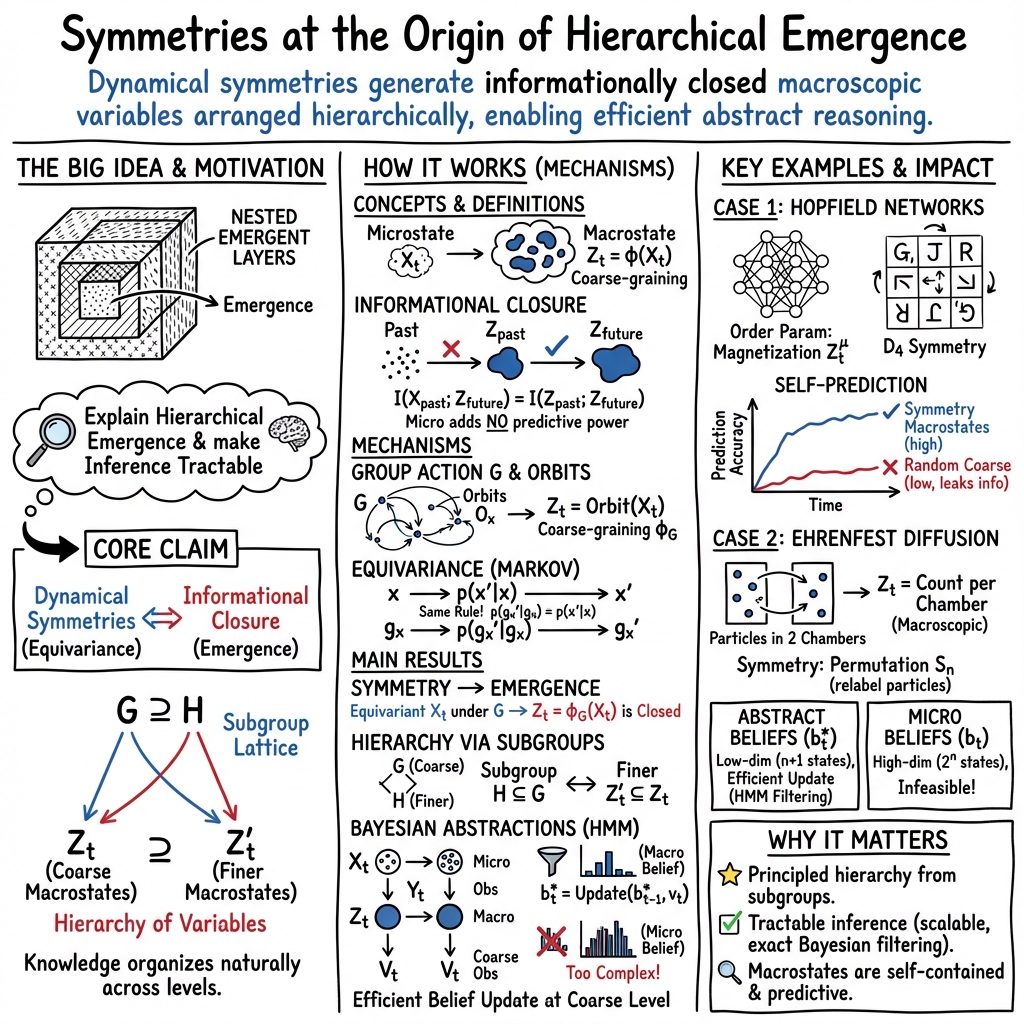

Abstract: Many systems of interest exhibit nested emergent layers with their own rules and regularities, and our knowledge about them seems naturally organised around these levels. This paper proposes that this type of hierarchical emergence arises as a result of underlying symmetries. By combining principles from information theory, group theory, and statistical mechanics, one finds that dynamical processes that are equivariant with respect to a symmetry group give rise to emergent macroscopic levels organised into a hierarchy determined by the subgroups of the symmetry. The same symmetries happen to also shape Bayesian beliefs, yielding hierarchies of abstract belief states that can be updated autonomously at different levels of resolution. These results are illustrated in Hopfield networks and Ehrenfest diffusion, showing that familiar macroscopic quantities emerge naturally from their symmetries. Together, these results suggest that symmetries provide a fundamental mechanism for emergence and support a structural correspondence between objective and epistemic processes, making feasible inferential problems that would otherwise be computationally intractable.

Explain it Like I'm 14

Overview

This paper asks a simple question with big consequences: why do many complex things in the world seem to organize themselves into layers (like physics → chemistry → biology), where each layer has its own rules? The author argues that a key reason is symmetry. When a system’s rules don’t change under certain transformations (like rotating a shape or swapping identical parts), those symmetries naturally create “higher-level” variables that evolve on their own. Even better, the same symmetries tell an observer how to form simpler beliefs about the system, making it much easier to predict and learn about without getting lost in tiny details.

Key questions

The paper explores three main questions:

- When do “zoomed-out” variables (macroscopic variables) evolve by themselves, so we don’t need to track every tiny detail (microscopic variables)?

- How do different symmetries create a whole hierarchy of such zoomed-out levels?

- How can an observer use the same symmetries to update beliefs efficiently at the right level, instead of tracking everything?

Approach and key ideas (explained simply)

To answer these, the paper combines three tools:

- Group theory (symmetry): A symmetry means you can transform the system (like rotate, reflect, or swap identical parts) and the rules remain the same. Think of all the ways to rotate a square—it still looks like a square.

- Information theory (what info matters): A “good” higher-level description is one where the past of the zoomed-out variable contains all the information needed to predict its future. That means micro-details don’t help—this is called informational closure.

- Markov processes and Bayesian beliefs (how things evolve and how we track them): A Markov process means “the next step depends only on where you are now.” Bayesian beliefs are your best-guess probabilities about hidden things, updated as you get new evidence (like a weather forecast updating as new data arrives).
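For reference, the belief update mentioned in the last bullet is the standard Bayesian filtering recursion for a hidden Markov model. The symbols below ($b_t$ for the belief, $x$ for hidden states, $y$ for observations) are chosen here for illustration and may not match the paper's notation.

```latex
b_{t+1}(x') \;=\;
\frac{p(y_{t+1}\mid x')\,\sum_{x} p(x'\mid x)\, b_t(x)}
     {\sum_{x''} p(y_{t+1}\mid x'')\,\sum_{x} p(x''\mid x)\, b_t(x)}
```

The inner sum is the prediction step (push the current belief through the transition kernel), and the multiplication by $p(y_{t+1}\mid x')$ followed by normalisation is the correction step.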

Two central ideas explained with analogies:

- Coarse-graining (zooming out): Instead of tracking each bird in a flock, you track the overall direction and shape. A coarse-graining groups many microstates into one macrostate.

- Equivariance (symmetry-friendly dynamics): Doing a symmetry first (e.g., rotate the whole system) and then running the dynamics gives the same result as running the dynamics first and then applying the symmetry. If that holds, the symmetry is built into the rules.

What the paper shows:

- If the dynamics respect a symmetry (are equivariant), then grouping microstates that are the same under that symmetry creates a macro-variable that predicts itself—micro-details don’t add predictive power. This is informational closure.

- Symmetries come in families (groups and subgroups). Each subgroup gives a different “zoom level.” Together, these levels form a hierarchy that mirrors the “family tree” of the symmetry’s subgroups.

- If both the system and your measurements respect the same symmetry, you can build a simpler, lower-dimensional belief (an abstract belief) about the macro-variables and update it directly—no need to track the full microscopic state.
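The first claim above can be checked numerically on a toy system. The sketch below is not taken from the paper: it assumes a small two-chamber model in which one particle is picked uniformly and moved with a hypothetical probability `q`, verifies that relabelling particles leaves the transition probabilities unchanged (equivariance), and then verifies strong lumpability of the orbit partition, a standard Markov-chain condition closely related to the closure property described here.

```python
import itertools
import numpy as np

n, q = 3, 0.5  # number of particles; move probability (hypothetical value)
states = list(itertools.product([0, 1], repeat=n))  # 1 = particle in left chamber
index = {s: i for i, s in enumerate(states)}

# Micro kernel: pick one particle uniformly, move it with probability q.
P = np.zeros((len(states), len(states)))
for s in states:
    P[index[s], index[s]] += 1 - q
    for i in range(n):
        t = list(s)
        t[i] = 1 - t[i]
        P[index[s], index[tuple(t)]] += q / n

def permute(s, perm):
    """Relabel particles according to the permutation `perm`."""
    return tuple(s[j] for j in perm)

# Equivariance: P(g x' | g x) == P(x' | x) for every permutation g.
equivariant = all(
    np.isclose(P[index[permute(s, g)], index[permute(t, g)]], P[index[s], index[t]])
    for g in itertools.permutations(range(n))
    for s in states
    for t in states
)

# Orbits of the permutation group are labelled by the number of particles on the left.
macro = np.array([sum(s) for s in states])
C = np.zeros((len(states), n + 1))
C[np.arange(len(states)), macro] = 1.0  # microstate -> macrostate indicator

# Strong lumpability: all microstates in one orbit have the same
# probability of jumping into each macrostate.
PC = P @ C
lumpable = all(np.allclose(PC[macro == k], PC[macro == k][0]) for k in range(n + 1))

# The induced macro-level transition matrix over counts 0..n.
macro_kernel = np.array([PC[macro == k][0] for k in range(n + 1)])
print(equivariant, lumpable)
print(macro_kernel)
```

When both checks pass, the count evolves as a Markov chain in its own right, so knowing which particular particles sit on the left adds nothing to predicting the next count.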

Main findings and why they matter

- Symmetry creates emergent macroscales: When the rules are invariant under a symmetry (like swapping identical particles), you can group microstates into orbits (sets of states that are the same under the symmetry). Those orbits form a macro-variable whose future can be predicted from its own past—no micro peeking required.

- A whole hierarchy appears: Every subgroup (a smaller symmetry inside the bigger symmetry) yields its own macro-variable. These levels nest into a tidy hierarchy, from very detailed to very coarse.

- Symmetry-guided beliefs are efficient: In hidden systems with noisy observations, you can form abstract beliefs about the macro-variables (like counts or averages) and update them efficiently with standard Bayesian filtering. This can cut computation by an enormous amount while still answering the right questions.

- Tested on two classic models:

- Hopfield networks (memory in neural nets): The “magnetization” (how similar the current activity is to each stored pattern) forms a macro-variable. When you train on all rotations/reflections of letters, the symmetries cleanly separate “which letter” from “which rotation/reflection,” giving a hierarchy of macrostates that predict themselves better than random groupings.

- Ehrenfest diffusion (gas in two boxes): Tracking only “how many particles are in the left box” is the natural macro-variable created by the symmetry of swapping identical particles. Beliefs about this count can be updated in time that grows linearly with particle number—compared to the impossible exponential cost of tracking every particle’s position.
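The "whole hierarchy" finding can be illustrated with orbits alone, without any dynamics. The following is a minimal sketch under assumptions of my own: 2×2 binary images, and only the rotation subgroups (trivial, 180-degree rotations, all four rotations) rather than the full dihedral group used in the paper's Hopfield example. It shows that a larger symmetry group gives a coarser partition, and that the partitions nest.

```python
import itertools
import numpy as np

def rot(k):
    """Rotation by k quarter-turns, as a function on 2D arrays."""
    return lambda img: np.rot90(img, k)

# A chain of subgroups: trivial < C2 < C4 (illustrative choice).
subgroups = {
    "trivial": [rot(0)],
    "C2": [rot(0), rot(2)],
    "C4": [rot(0), rot(1), rot(2), rot(3)],
}

# All 16 binary 2x2 images play the role of microstates.
images = [np.array(bits).reshape(2, 2) for bits in itertools.product([0, 1], repeat=4)]

def orbit_label(img, group):
    """Canonical orbit representative: the smallest transformed image."""
    return min(tuple(g(img).ravel().tolist()) for g in group)

# One macrostate label per image, for each subgroup.
partitions = {
    name: [orbit_label(img, group) for img in images]
    for name, group in subgroups.items()
}
orbit_counts = {name: len(set(labels)) for name, labels in partitions.items()}

def refines(fine, coarse):
    """True if every fine-level macrostate sits inside a single coarse-level one."""
    mapping = {}
    return all(mapping.setdefault(f, c) == c for f, c in zip(fine, coarse))

print(orbit_counts)
print(refines(partitions["trivial"], partitions["C2"]),
      refines(partitions["C2"], partitions["C4"]))
```

Each subgroup yields its own set of macrostates, and moving up the chain of subgroups merges macrostates rather than reshuffling them, which is the nesting the text describes.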

Why this is important:

- It explains how layered organization can arise from a deep and general property—symmetry.

- It shows a practical path for making hard inference tasks tractable by choosing the right level to think at—guided by symmetry.

- It links the “objective world” (physical dynamics) and the “subjective world” (our beliefs) through the same structure.

Two short case studies

Hopfield networks (a model of memory)

- What it is: A network of simple “neurons” that stores patterns (like letters) and settles into the closest stored pattern when you start from a noisy version.

- Symmetry insight: If you store patterns plus all their rotations/reflections, the network’s rules are symmetric to those operations. The “magnetization” (one similarity score per stored pattern) is the right macro-variable: it predicts its own evolution and separates “which letter” from “how it’s rotated/reflected.”

- Result: These symmetry-based macrostates predict the future much better than random groupings with the same size, confirming they’re the right abstractions.
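To make the "one similarity score per stored pattern" idea concrete, here is a minimal sketch of the magnetisation in a tiny Hopfield network. It is a simplification and not the paper's setup: the patterns are hand-picked orthogonal ±1 vectors, the update is noise-free and synchronous (the paper's model is stochastic), and the diagonal of the Hebbian weight matrix is kept for simplicity.

```python
import numpy as np

# Three mutually orthogonal +/-1 patterns of length 8 (rows of a Hadamard matrix).
H2 = np.array([[1, 1], [1, -1]])
H8 = np.kron(H2, np.kron(H2, H2))
patterns = H8[1:4]
n = patterns.shape[1]

# Hebbian weights: W = (1/n) * sum over patterns of outer(pattern, pattern).
W = patterns.T @ patterns / n

def magnetisation(state):
    """Overlap of the current state with each stored pattern, in [-1, 1]."""
    return patterns @ state / n

# Start from the first pattern with one neuron flipped.
state = patterns[0].copy()
state[0] *= -1
m_before = magnetisation(state)

# One synchronous, noise-free update step.
state = np.where(W @ state >= 0, 1, -1)
m_after = magnetisation(state)

print(m_before, m_after)
```

With these weights the input to every neuron is a sum of the stored patterns weighted by the current magnetisations, so in this toy case the next state depends on the current one only through the magnetisation vector.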

Ehrenfest diffusion (gas in two chambers)

- What it is: n particles move between two chambers. At each step, at most one particle switches sides. Sensors sometimes misread a particle’s side.

- Symmetry insight: Since particles are identical, swapping any two doesn’t change the rules. The natural macro-variable is the number of particles on one side (a simple count).

- Beliefs: Instead of tracking 2^n microscopic configurations, you track just n+1 possibilities (0 to n particles on the left). Updating these beliefs is lightning-fast and still answers the main question (“how full is the chamber?”).
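A count-level belief filter of this kind can be sketched as follows. The modelling details are assumptions made for illustration, not the paper's exact specification: one uniformly chosen particle moves with probability `q` per step, each particle's side is read through a binary symmetric channel with crossover probability `eps`, and the observation fed to the filter is the number of sensors reporting "left". The function names are hypothetical.

```python
from math import comb
import numpy as np

def binom_pmf(m, p):
    """Probability mass function of Binomial(m, p) as an array of length m + 1."""
    return np.array([comb(m, j) * p**j * (1 - p) ** (m - j) for j in range(m + 1)])

def count_transition(n, q):
    """Macro kernel T[k, k']: count of particles on the left changes by at most one."""
    T = np.zeros((n + 1, n + 1))
    for k in range(n + 1):
        T[k, k] = 1 - q
        if k > 0:
            T[k, k - 1] = q * k / n          # a left particle moves right
        if k < n:
            T[k, k + 1] = q * (n - k) / n    # a right particle moves left
    return T

def count_emission(n, eps):
    """E[k, y] = P(y sensors report 'left' | k particles are truly on the left)."""
    # Correct readings of left particles plus misreadings of right particles.
    return np.array(
        [np.convolve(binom_pmf(k, 1 - eps), binom_pmf(n - k, eps)) for k in range(n + 1)]
    )

def filter_step(belief, y, T, E):
    """One predict-and-correct update of the belief over counts 0..n."""
    predicted = belief @ T
    posterior = predicted * E[:, y]
    return posterior / posterior.sum()

n, q, eps = 20, 0.5, 0.1                 # illustrative values, not from the paper
T, E = count_transition(n, q), count_emission(n, eps)
belief = np.full(n + 1, 1 / (n + 1))     # uniform prior over counts
for y in [12, 11, 13, 12]:               # made-up sensor counts
    belief = filter_step(belief, y, T, E)
print(belief.argmax())
```

The belief vector has n+1 entries regardless of how many microscopic configurations exist. The dense matrix product used here costs more than necessary; since the transition matrix is tridiagonal, the prediction step could be written to touch only three entries per count.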

Implications and potential impact

- A general mechanism for emergence: Symmetry isn’t just a nice-to-have—it actively builds the higher-level rules we observe.

- A bridge between physics and learning: The same symmetries that shape the world also shape the right way to think about it (your beliefs), letting you update at the right level and save massive computation.

- Practical gains for AI and science: In messy, high-dimensional, partially observed systems, symmetry-guided abstract beliefs can make impossible tasks feasible—especially for control, prediction, and scientific modeling.

- A roadmap for abstraction: Instead of handcrafting what to track, look for symmetries. Their subgroup structure gives you a principled “menu” of zoom levels.

In short: If the rules don’t change under certain transformations, you can safely “factor out” those transformations. That creates clean, self-contained higher-level variables and a matching way to think about them—letting you predict and learn more, with less.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The paper presents a symmetry-based mechanism for hierarchical emergence and a parallel hierarchy for Bayesian beliefs. The following points identify what remains missing, uncertain, or left unexplored, framed to guide concrete future research:

- Formal extension beyond finite groups and discrete state spaces:

- Generalize Theorem 1 and Corollaries to continuous state spaces and continuous-time dynamics (e.g., stochastic differential equations), replacing the Reynolds average with integration over Haar measure and handling measure-theoretic subtleties.

- Clarify whether informational closure via group orbits persists with Lie groups, non-compact groups, and continuous symmetries.

- Approximate or broken symmetries:

- Develop a quantitative theory of “near-equivalence” that measures how close dynamics/emissions are to equivariance and how that affects information closure (e.g., bounds on leakage using KL divergence or mutual information).

- Establish robustness: when small symmetry-breaking perturbations preserve macroscopic closure approximately; define stability conditions and error bounds.

- Necessity vs sufficiency:

- Provide full necessary and sufficient conditions for informational closure beyond the sufficiency of equivariance, connecting rigorously to Markov lumpability and equitable partitions.

- Characterize when group-orbit coarse-grainings are maximal or minimal among all informationally closed coarse-grainings.

- Subgroup–level correspondence:

- Formalize the claimed homomorphism between the subgroup lattice and the hierarchy of informationally closed levels: under what conditions is it injective/surjective; when do distinct subgroups induce identical macrostates?

- Identify cases where emergent levels exist that are not induced by subgroups of a given symmetry (e.g., due to non-group-based lumpability), and classify these structures.

- Non-Markovian and non-stationary processes:

- Extend results to non-Markovian dynamics (memoryful processes) and time-inhomogeneous Markov chains; define closure criteria that allow multi-step memory at the macro level.

- Handle time-varying or context-dependent symmetries (e.g., symmetry changes across regimes or phases).

- Verification and computation of the Reynolds invariance condition:

- Provide algorithms to test invariance of the averaged kernel in high-dimensional systems (including sampling-based estimators for large groups).

- Address computational feasibility for continuous or very large groups, including randomized approximations and convergence guarantees.

- Measurement symmetry assumptions:

- Relax the strict emission symmetry condition; characterize how mismatch between process symmetries and sensor symmetries degrades belief closure and what partial results still hold.

- Derive sufficient conditions under which coarse-grained measurements can still support efficient filtering despite asymmetric or noisy sensing.

- Strengthening the belief-level closure condition:

- Characterize when can be achieved; provide constructive design principles for to meet this condition (e.g., sensor aggregation architectures).

- Quantify the trade-off between tractability and informativeness when the condition fails; derive bounds on the gap between and .

- Practical belief-updating and algorithmic issues:

- Provide explicit scalable algorithms for updating abstract beliefs across intermediate levels in the subgroup lattice; analyze time and memory complexity beyond big-O claims.

- Develop online level selection policies that choose the appropriate abstraction level given computational budget and accuracy requirements (e.g., based on predictive information or value of information).

- Address how to compute or approximate the mixing coefficients in the mapping Ψ (Eq. 31) from data when is not known or is costly to estimate.

- Learning symmetries and subgroup lattices from data:

- Propose statistically consistent methods to infer latent group actions, identify subgroup structure, and estimate equivariance from trajectories under noise and partial observability.

- Analyze sample complexity, identifiability, and robustness of learned symmetries in realistic regimes; incorporate geometric deep learning tools for equivariant model discovery.

- Scope of case studies and empirical validation:

- Validate the framework on diverse real-world systems (beyond Hopfield and Ehrenfest), including physical, biological, and social networks with plausible approximate symmetries.

- In Hopfield networks:

- Test sensitivity of results to non-Hebbian training, spurious attractors, capacity constraints (m/n), asynchronous updates, and varying β; quantify performance across phases.

- Compare symmetry-induced macrostates with alternative order parameters; provide statistical significance and reproducibility of self-prediction advantages over random coarse-grainings.

- In Ehrenfest:

- Provide concrete, implementable algorithms for belief updates at scale; validate complexity claims empirically.

- Explore intermediate subgroup-induced macroscales (block permutations), show explicit filtering constructions for these levels, and quantify gains versus micro-level filtering.

- Integration with control and intervention:

- Extend the framework to decision-making (POMDPs, control), showing how symmetry-induced macrostates support near-optimal policies; analyze controllability and causal interpretability at macro levels.

- Study how actions can be designed to respect or exploit symmetries to simplify planning and learning.

- Relation to renormalization and effective field theories:

- Provide concrete bridges between the proposed symmetry-induced emergence and renormalization group methods; demonstrate on models where both apply and compare predictions and macrostates.

- Clarify conditions under which symmetry-derived macroscales coincide with RG coarse-grainings, and when they diverge.

- Category-theoretic and structural correspondences:

- Formalize the “structural correspondence” between objective and epistemic hierarchies using categorical constructs (functors, adjunctions); prove commutativity properties across levels more generally.

- Handling local and weak symmetries:

- Extend beyond global symmetries to local/fibration/gauge-like symmetries; connect to graph-coloring/equitable partitioning, provide algorithms and complexity guarantees.

- Initial-condition dependencies:

- Analyze the impact of non-symmetric priors on emergent levels and abstract beliefs; specify transient versus asymptotic effects and provide correction methods.

- Quantifying emergence strength:

- Define operational measures of “degree of emergence” associated with symmetry (e.g., mutual information closure gap across levels), and use them to rank macroscales or guide level selection.

- Limits and failure modes:

- Characterize scenarios where subgroup-induced coarse-grainings produce misleading macrostates (e.g., symmetry that is dynamically irrelevant, metastability, non-ergodicity); provide diagnostics to detect such cases.
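Several of the items above (approximate symmetries, testing invariance of the averaged kernel) call for a way to measure how far a transition kernel is from being equivariant. One simple candidate, sketched here as an assumption rather than as the paper's method, is to average the kernel over the group and report the largest entrywise deviation. The averaging formula is the usual group average; the paper's exact definition of the Reynolds operator is not reproduced in this summary, and the function names are hypothetical.

```python
import numpy as np

def reynolds_average(P, group):
    """Average the kernel over the group: mean over g of P[g(x), g(x')]."""
    # Each group element is a permutation of state indices, given as an index array.
    return sum(P[np.ix_(g, g)] for g in group) / len(group)

def equivariance_defect(P, group):
    """Largest entrywise gap between P and its group average (0 if equivariant)."""
    return np.abs(P - reynolds_average(P, group)).max()

n = 4
shifts = [np.roll(np.arange(n), k) for k in range(n)]  # cyclic group acting on a ring

# Random walk on a ring with the same rule at every site: shift-equivariant.
P_sym = np.zeros((n, n))
for x in range(n):
    P_sym[x, x] = 0.5
    P_sym[x, (x + 1) % n] = 0.3
    P_sym[x, (x - 1) % n] = 0.2

# Break the symmetry at one site while keeping a valid stochastic matrix.
P_broken = P_sym.copy()
P_broken[0] = [0.7, 0.2, 0.0, 0.1]

print(equivariance_defect(P_sym, shifts))
print(equivariance_defect(P_broken, shifts))
```

A defect of zero means the kernel already equals its group average. For large groups the full sum is infeasible, and averaging over a random sample of group elements is one possible approximation, though its accuracy would need separate analysis.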

Glossary

- Abstract beliefs: Coarse-grained Bayesian beliefs over macroscopic variables induced by symmetries, which can be updated autonomously at reduced dimensionality. "These abstract beliefs are built via \autoref{eq:cool_beliefs}, which mixes micro-level beliefs in the equivalence class , and then marginalises the mixture via ."

- Activation function: Nonlinear function mapping neuron inputs to outputs in a neural network. "F(x) = (1+\tanh{x})/2 is the activation function,"

- Bayesian beliefs: The posterior distribution over latent states given observations, forming a belief-state Markov process. "The optimal estimation of based on a sequence of measurements is given by Bayesian beliefs, a Markov process $B_t\coloneq\mathbb{E}\{\mathds{1}_{X_t}|{Y}{t}\}$"

- Bayesian filtering: Recursive update of beliefs over latent states using transition and observation models. "which can be efficiently updated via standard Bayesian filtering as"

- Binary symmetric channel: A noise model where each bit is flipped independently with a fixed crossover probability. "obtained via a binary symmetric channel with crossover probability "

- Coarse-graining: Mapping from a microscopic state space to a reduced macroscopic description. "A coarse-graining mapping gives rise to another process ."

- Data processing inequality: Information-theoretic principle stating that post-processing cannot increase mutual information. "Then, the data processing inequality implies that"

- Death-birth process: A Markov process where the state increments or decrements by one with rates dependent on current counts. "Given the dynamics of the Ehrenfest model, can be seen to follow a Markovian death-birth process"

- Dihedral group: The symmetry group of a regular polygon, including rotations and reflections. "additional symmetries corresponding to the dihedral group --- which is generated by the rotation () and the horizontal reflection ()."

- Dynamical equivariance: Property that applying a group action to states preserves transition dynamics. "One says that a Markov process is dynamically equivariant if there exists a group action such that"

- Ehrenfest diffusion: A classical stochastic model of gas molecules moving between two chambers. "These results are illustrated in Hopfield networks and Ehrenfest diffusion,"

- Emission kernel: The conditional distribution that generates observations from latent states in an HMM. "while observations are generated through an emission kernel ."

- Equivalence class: A set of elements identified as equivalent under a given relation, here induced by a symmetry action. "the equivalence class $y_{t}'\in\psi^{-1}(y_{t})$"

- Equivariant HMMs: Hidden Markov models whose latent dynamics and emissions respect the same symmetry group actions. "Systems satisfying these properties will be called equivariant HMMs."

- Fibration symmetries: A formalism to describe local symmetries relating fine and coarse structures via fibered mappings. "which can be seen as a local symmetry using the formalism of fibration symmetries."

- Hamming distance: The number of positions at which two equal-length binary vectors differ. "where $\|x-x'\|$ [is the Hamming distance between] $x$ [and] $x'$."

- Hebbian learning: A synaptic update rule strengthening connections between co-activating neurons. "Hopfield networks typically use Hebbian learning"

- Hidden Markov model (HMM): A model with Markovian latent states and noisy observations generated from them. "Let us investigate the relationship between the hierarchical organisation of a process and measurements of it by focusing on hidden Markov models (HMM)."

- Information closure: Condition where coarse-grained past contains all information needed to predict coarse-grained future, rendering micro-details irrelevant. "This idea can be operationalised via the condition of information closure"

- Klein group: The abelian group isomorphic to $\mathbb{Z}_2\times\mathbb{Z}_2$, representing two independent reflections. "isomorphic to Klein's group $\mathbb{Z}_2\times\mathbb{Z}_2$."

- Kullback-Leibler divergence: A measure of discrepancy between two probability distributions. "As the above expression corresponds to a Kullback-Leibler divergence,"

- Lattice (subgroup lattice): The partially ordered structure of subgroups under inclusion. "whose organisation reflects the lattice structure of the subgroups of $G$"

- Markov lumpability: A property enabling aggregation of states into macro-states that preserve Markovian dynamics. "A necessary condition is given by Markov lumpability,"

- Mattis magnetisation: The overlap between the current network state and stored patterns, serving as an order parameter in Hopfield networks. "making the so-called Mattis magnetisation"

- Mutual information: A measure quantifying the shared information between random variables. "where $I$ is Shannon's mutual information."

- Orbit (group theory): The set of states reachable by applying group actions to a given state, forming equivalence classes. "A group action induces a partition on $\mathcal{X}$ via ‘orbits’ of the form"

- Orbit-stabiliser theorem: A group-theoretic result relating orbit sizes to stabiliser subgroup sizes. "where (a) is a consequence of the orbit-stabiliser theorem."

- Order parameter: A macroscopic quantity summarising system state and indicating phases or collective behaviour. "a natural order parameter of the dynamics"

- Reynolds operator: The averaging operator over a group action, used to symmetrise functions or kernels. "let us introduce the Reynolds operator"

- Renormalisation group theory: A framework describing how physical laws change with scale and explaining universality near criticality. "Emergence has been studied in statistical physics via phase transitions and renormalisation group theory,"

- Supervenience: Asymmetric dependence where macro-properties require micro-changes but not vice versa. "an asymmetry known as supervenience"

- Symmetry group: A group of transformations leaving certain properties or dynamics invariant. "dynamical processes that are equivariant with respect to a symmetry group give rise to emergent macroscopic levels"

- Tower property (of conditional expectations): Law stating nested conditioning reduces to conditioning on the coarser sigma-algebra. "due to the tower property of conditional expectations,"

- Transition kernel: The conditional probability law governing state evolution in a stochastic process. "transition kernel $p(x_{t+1}| x_t)$"

Practical Applications

Immediate Applications

The following items describe practical use cases that can be deployed now, leveraging symmetry-induced emergent variables and abstract belief updates to reduce complexity and improve prediction in partially observable, high-dimensional systems.

- Rotation/reflection-invariant pattern recognition for imaging and OCR

Sectors: software/AI, healthcare imaging

Use the paper’s dihedral-group factorization (Hopfield case study) to build orientation/reflection-invariant recognition pipelines (e.g., letters, icons, medical shapes), replacing pixel-level dependence with symmetry-derived macrostates (Mattis magnetisations).

Potential tools/products/workflows: OrbitCoarseGrainer for images, group-equivariant CNNs combined with a symmetry-aware content-addressable memory, MattisMagnetisationTracker

Assumptions/Dependencies: underlying patterns admit approximate dihedral symmetries; emissions (sensor outputs) preserve those symmetries; training data covers symmetry orbits.

- Fast occupancy/count tracking from noisy sensors (smart buildings, retail, transport)

Sectors: energy (HVAC), IoT, retail analytics, transportation

Apply the Ehrenfest abstraction (particle counts as macrostates) to estimate people or device counts per zone from binary/noisy sensors, enabling real-time control and analytics without micro-level tracking.

Potential tools/products/workflows: AbstractBeliefFilter for counts, Count-HMM with permutation symmetry, a workflow: identify exchangeability → build count-level HMM → filter abstract beliefs

Assumptions/Dependencies: approximate permutation symmetry (exchangeable entities), emissions respect symmetry (similar noise across sensors), Markov-like transitions between zones.

- Swarm robotics macro-state control and monitoring

Sectors: robotics, defense, agriculture

Use permutation symmetry of identical robots to track formation-level variables (e.g., cluster size, coverage) and control at the macroscale; update beliefs over macro formation states from noisy local sensing.

Potential tools/products/workflows: SwarmOrbitController, MacroFormationFilter

Assumptions/Dependencies: homogeneous agents; symmetric interaction rules; measurements preserve exchangeability; POMDP transitions close to Markov.

- POMDP solvers with symmetry-based abstract beliefs

Sectors: software/AI (reinforcement learning), operations research

Reduce belief-state dimensionality by coarse-graining to macrostates when environments have rotation, reflection, or permutation symmetries (grid worlds, symmetric tasks).

Potential tools/products/workflows: SymmetryPOMDP solver, ReynoldsOperator test for closure; workflow: detect symmetry → build orbit partitions → verify informational closure → plan on abstract belief MDP

Assumptions/Dependencies: identified group actions; emissions and transitions equivariant; rewards compatible with macrostates.

- Symmetry-aware anomaly detection in manufacturing and process industries

Sectors: industrial automation, energy

Monitor symmetry-derived macrostates (throughput per identical stations, balanced loads). Detect symmetry-breaking as anomalies (machine drift, faults) when macro predictions degrade.

Potential tools/products/workflows: SymmetryAnomalyDetector, ClosureLeakMonitor

Assumptions/Dependencies: near-exchangeable lines/stations; stable subgroup lattice; emissions maintain symmetry under normal operations.

- Crowd and traffic modeling via macro counts

Sectors: urban planning, public safety, transport policy

Track flows using count-level HMMs (birth-death style at aggregates) from noisy sensors (cams, Wi-Fi, gates), enabling real-time interventions without individual tracking.

Potential tools/products/workflows: AggregateFlowFilter, MacroInterventionPlanner

Assumptions/Dependencies: adequate aggregation preserving approximate symmetry; measurement noise homogeneous across units.

- Market regime tracking using exchangeability within sectors

Sectors: finance

Treat similar assets as exchangeable to infer macro regimes (sector bull/bear, volatility clusters) from noisy micro signals; reduce model size while preserving macro predictive power.

Potential tools/products/workflows: RegimeOrbitFilter, SectorExchangeabilityToolkit

Assumptions/Dependencies: asset clustering yields practical exchangeability; regime transitions approximately Markov; emissions invariant to constituent permutations.

- Cybersecurity: symmetry-break monitoring for complex systems

Sectors: cybersecurity, IT operations

Baseline macro regularities (derived from system symmetries) provide a reference; significant deviations in abstract self-prediction imply attacks, misconfigurations, or drift.

Potential tools/products/workflows: SymmetryBreakMonitor, MacroHealthScore

Assumptions/Dependencies: established symmetry-respecting baselines; sensor signals not adversarially manipulated to mimic symmetry.

- Symmetry-respecting sensor design guidelines

Sectors: hardware/IoT, standards/policy

Design measurement functions to preserve system symmetries so abstract belief filtering remains valid (e.g., consistent sensor noise, identical sensor placement for exchangeable units).

Potential tools/products/workflows: SymmetryPreservingMeasurementChecklist, Orbit-Consistent Sensor Layout Planner

Assumptions/Dependencies: known or hypothesized group structure; ability to standardize measurement channels.

- Scientific time-series analysis: emergent variable discovery

Sectors: academia (physics, neuroscience, complex systems)

Apply Reynolds operator averaging and information-closure tests to identify macrostates and subgroup lattices in experimental/observational data, enabling principled coarse-graining.

Potential tools/products/workflows: Python/R library implementing ReynoldsOperator, InfoClosureTest, SubgroupLatticeExplorer

Assumptions/Dependencies: finite/discrete approximation or discretization; detectable approximate equivariance.

- Model compression by factoring symmetries

Sectors: AI/software engineering

Reduce memory models (e.g., Hopfield-like content-addressable memories) by storing orbit representatives or symmetry-normalized patterns; operate in magnetisation space at inference.

Potential tools/products/workflows: SymmetryModelCompressor, MagnetisationSpaceInference

Assumptions/Dependencies: patterns lie in known symmetry classes; task tolerates macro-level decision making.

- Education and outreach modules on symmetry-driven emergence

Sectors: education (STEM)

Interactive demos using Hopfield letters (dihedral symmetries) and Ehrenfest counts to teach emergence, coarse-graining, and Bayesian filtering.

Potential tools/products/workflows: Jupyter notebooks, EmergenceLab interactive app

Assumptions/Dependencies: none beyond standard computing resources.

Long-Term Applications

The following items require further research, scaling, or development before widespread deployment. They extend the paper’s principles to broader settings, continuous groups, approximate symmetries, and institutional adoption.

- Automated symmetry discovery in real-world systems (including weak/approximate symmetries)

Sectors: AI, engineering analytics

General-purpose pipelines that infer group actions, subgroup lattices, and emergent variables from high-dimensional time-series via graph-coloring and approximate equivariance tests.

Potential tools/products/workflows: AutoSymDetect, HierEmergenceAnalyzer

Assumptions/Dependencies: robust algorithms for noisy, non-stationary data; guarantees for approximate closure.

- Abstract belief frameworks for continuous groups and non-Markovian processes

Sectors: academia, scientific computing

Generalize symmetry-induced filtering to Lie groups and memoryful dynamics; integrate with renormalisation and variational inference to maintain tractability.

Potential tools/products/workflows: LieGroupBeliefFilter, Variational-RG Hybrid

Assumptions/Dependencies: new theory for continuous symmetries; efficient numerical implementations.

- Symmetry-aware cognitive architectures for safe, scalable AI

Sectors: AI, robotics

Agents maintain and update beliefs at multiple abstraction levels aligned to environmental symmetries; act using macro policies, refine at micro when needed.

Potential tools/products/workflows: HierBeliefAgent, Macro-to-Micro Policy Bridge

Assumptions/Dependencies: tasks exhibit exploitable symmetries; online symmetry learning; stability under symmetry breaking.

- Policy and standards for symmetry-preserving measurement and reporting

Sectors: governance/policy, public infrastructure

Mandate symmetry-consistent data collection for macro monitoring (epidemiology, supply chains, platforms), enabling tractable yet accurate inference at scale.

Potential tools/products/workflows: MacroMonitor dashboards, data standards for orbit-consistent reporting

Assumptions/Dependencies: institutional adoption; measurement harmonization; privacy-preserving aggregation.

- Pharmaceutical and structural biology modeling via symmetry-guided coarse-graining

Sectors: healthcare/biotech

Use molecular symmetries to define emergent macrostates (e.g., symmetric binding sites, motif counts) for tractable inference in drug discovery and protein simulations.

Potential tools/products/workflows: SymmetryCoarseGrainMD, MotifBeliefFilter

Assumptions/Dependencies: validated approximate symmetries; specialized measurement models.

- Climate and energy systems: symmetry-guided macro inference

Sectors: environment, energy

Identify approximate symmetries (e.g., zonal/seasonal invariances) to track macro climate states or grid regimes via abstract beliefs, reducing computational burden.

Potential tools/products/workflows: ClimateOrbitFilter, GridMacroRegimeTracker

Assumptions/Dependencies: discovery of useful symmetries; robustness to symmetry-breaking events.

- Market and mechanism design exploiting exchangeability

Sectors: finance/economics

Design auctions and resource allocation protocols that leverage symmetry to infer macro allocations and equilibria efficiently, facilitating scalable market operations.

Potential tools/products/workflows: ExchangeableMechanismLab, MacroEquilibriumEstimator

Assumptions/Dependencies: agent clustering and homogeneous rules; approximate Markovian regime dynamics.

- Privacy-preserving national statistics via symmetric aggregation

Sectors: policy, privacy tech

Publish symmetry-preserving aggregates that enable accurate macro inference (abstract beliefs) while protecting micro data (compatible with differential privacy).

Potential tools/products/workflows: SymmetricAggregation with differential privacy, MacroInference APIs

Assumptions/Dependencies: measurability of group actions in datasets; balancing privacy and utility.

- Real-time macro control of large cyber-physical systems

Sectors: transportation, energy, logistics

Operate fleets, grids, and factories at macro levels (counts, regimes) with abstract belief filters; fall back to micro operations when symmetry breaks.

Potential tools/products/workflows: MacroControl Platform, Symmetry-Break Fallback

Assumptions/Dependencies: reliable macro measurements; detection and handling of symmetry violations.

- Scientific theory-building using subgroup lattices as scaffolds

Sectors: academia (across disciplines)

Use the subgroup lattice to map emergent levels and corresponding belief hierarchies, aligning objective processes and epistemic models to structure disciplines and curricula.

Potential tools/products/workflows: Lattice-to-Theory Mapper, Hierarchical Abstraction Curriculum

Assumptions/Dependencies: community consensus; extension to continuous/approximate symmetries.
