- The paper demonstrates that architectural decisions, not LLM reasoning, primarily determine the success of browser agents.

- It details mitigation strategies such as intelligent trimming and caching that reduce token consumption and cost, and reports approximately 85% success on the WebGames benchmark.

- The study underscores that enforcing domain allowlisting and programmatic safety checks is essential for secure, scalable web automation.

Building Browser Agents: Architecture, Security, and Practical Solutions

Introduction

The paper "Building Browser Agents: Architecture, Security, and Practical Solutions" (2511.19477) analyzes the architectural considerations and security challenges involved in developing and deploying browser agents. These LLM-powered agents automate web interactions and support tasks such as e-commerce operations, trip planning, and data extraction. The paper argues that the primary limits on deploying such agents are not the capabilities of LLMs themselves but the architectural decisions and security constraints that underpin their operation.

Architectural Challenges

A key insight from the paper is that architectural decisions, rather than LLM reasoning capabilities, dictate the success of browser agents. The architecture covers context management, tool integration, and interaction with page elements. Failure modes often arise from mismatches between an agent's operational paradigm and the complexities of human-designed web interfaces, which involve dynamic content, visual hierarchies, and time-dependent information.

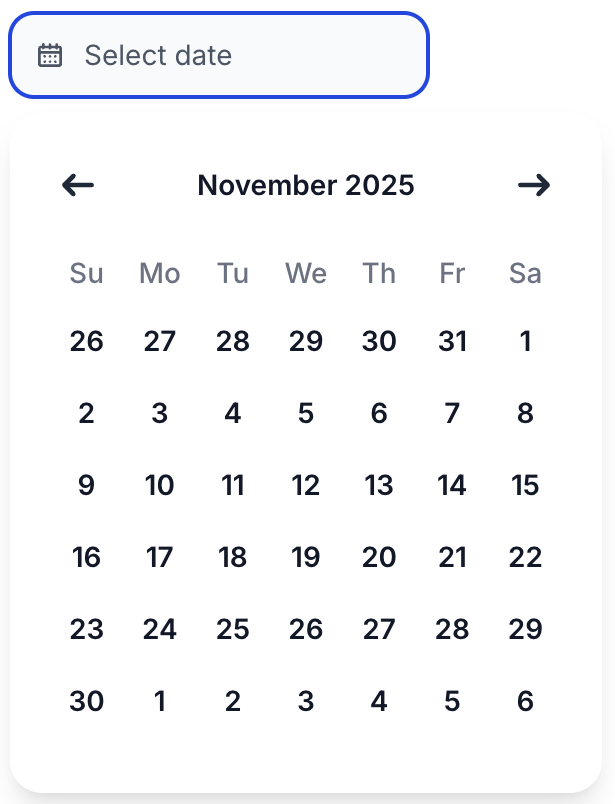

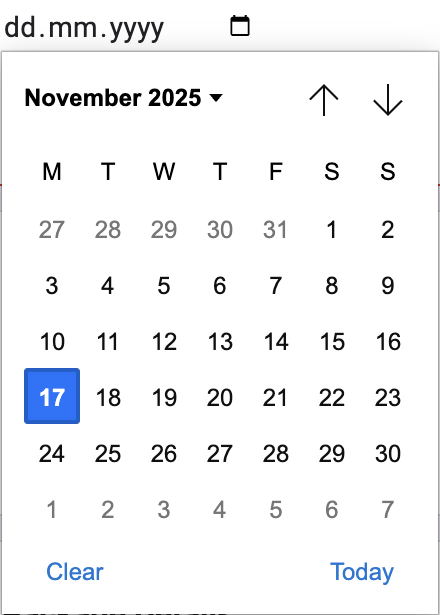

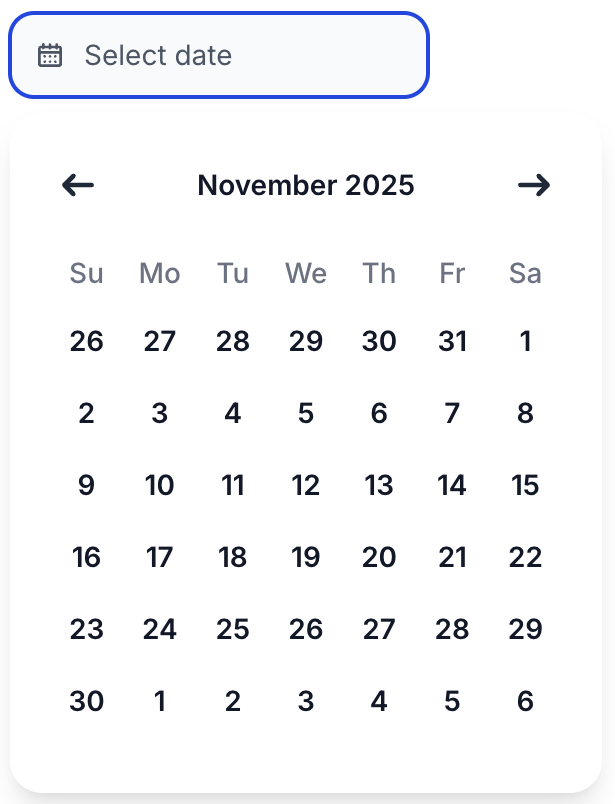

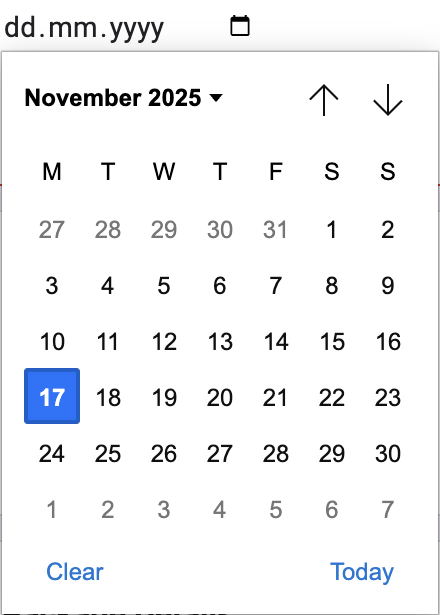

Figure 1 illustrates why annotation approaches struggle in densely packed interfaces, reinforcing the need for an architecture that supports both accessibility-driven text representations and vision-based interactions.

Figure 1: Annotation approach fails with dense interfaces. When attempting to annotate a date picker with many small clickable elements (24px each), the overlay becomes cluttered and confusing, making it difficult for the LLM to understand and reference specific elements.

Security Implications

Security challenges are a critical barrier to the deployment of browser agents in real-world settings. The paper provides a detailed exploration of prompt injection attacks, which exploit the interpretive nature of LLMs to execute unintended commands. The authors argue that these attacks undermine the feasibility of deploying general-purpose browsing intelligence, advocating instead for specialized tools that enforce programmatic constraints on actions.

Production observations underscore that AI-native browsers remain vulnerable to these attacks despite various mitigation strategies. Prompt injection can exploit input fields, which calls for architectural countermeasures that rely on deterministic safety boundaries rather than probabilistic LLM judgments.

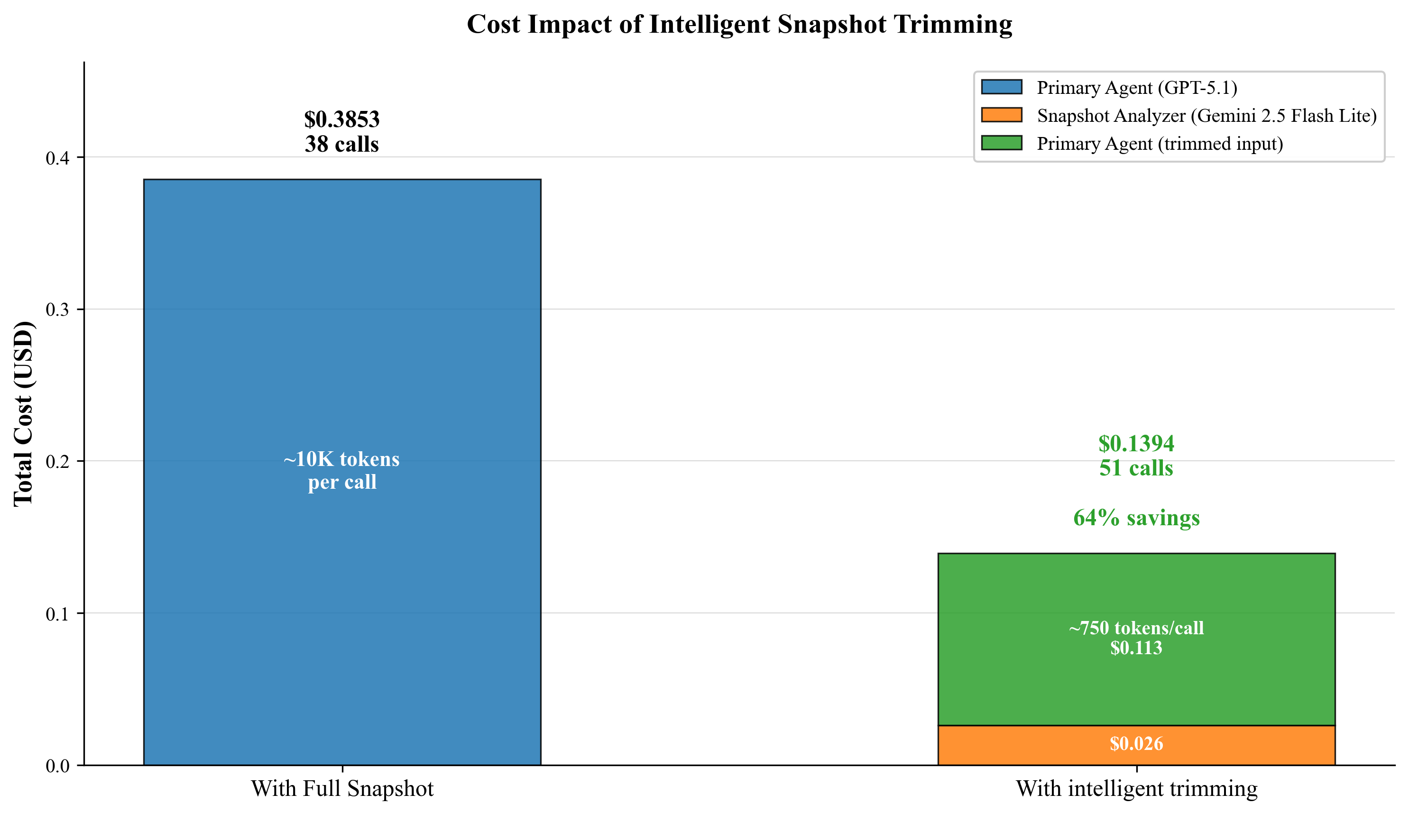

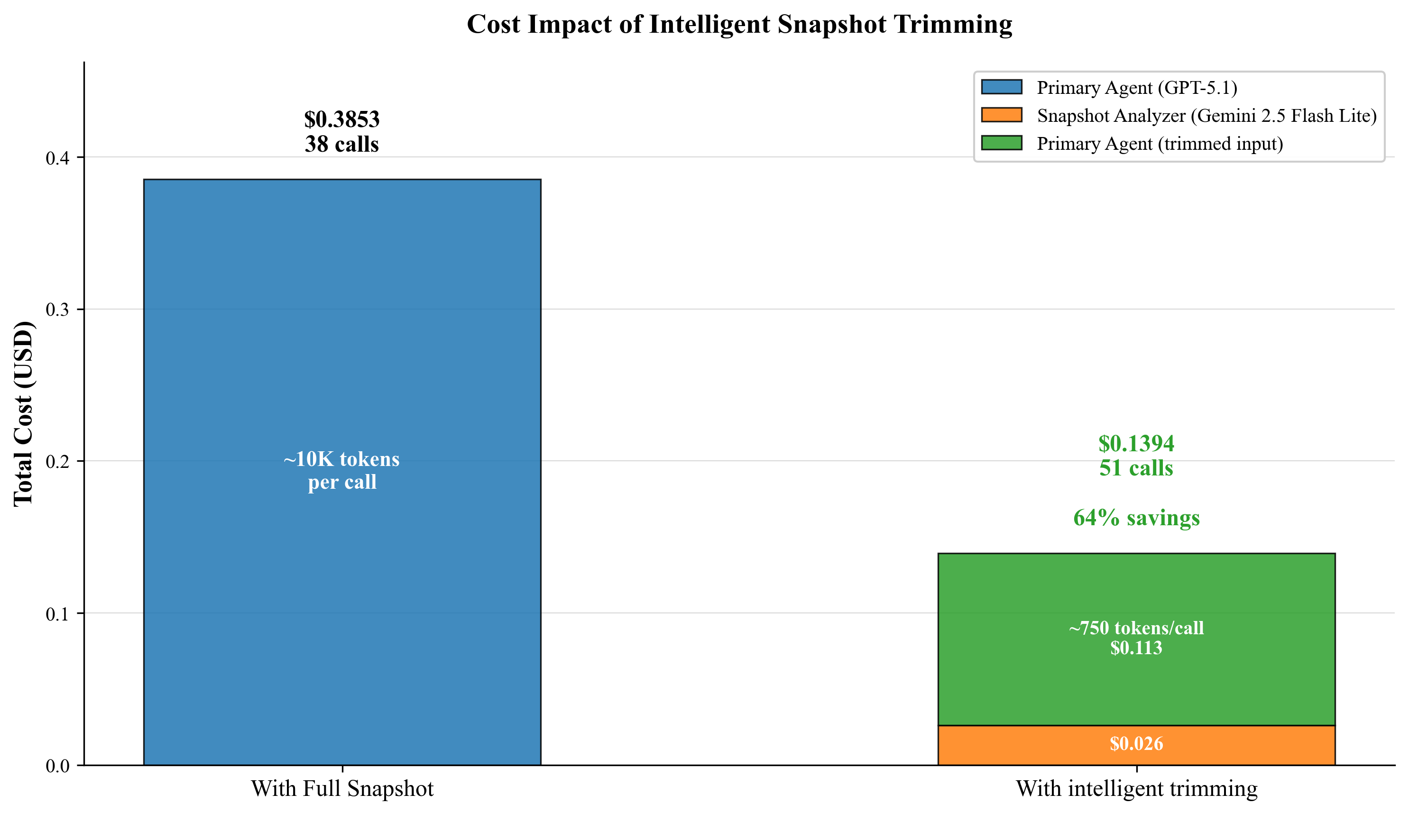

Figure 2: Intelligent Trimming Cost Analysis. Comparison of two approaches over a 38-51 action task. Without trimming, the primary LLM receives full snapshots on every call. With intelligent trimming, a lightweight model filters the snapshot, reducing the input size for the primary LLM from ~10,000 tokens to 500-1,000 tokens per step. Despite a 34% increase in tool calls due to occasional re-requests, the total cost decreases by 57%.
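The trimming step described in the caption can be illustrated with a small sketch. This is not the paper's implementation: the paper uses a lightweight model as the filter, whereas the code below swaps in a simple keyword-overlap score so that it runs without any model. The `Element` type, the `trim_snapshot` function, and the `max_elements` parameter are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """One entry of a page snapshot (hypothetical shape, for illustration)."""
    ref: str   # identifier the agent uses to address the element
    role: str  # e.g. "button", "link", "textbox"
    text: str  # visible or accessible label


def trim_snapshot(elements, task, max_elements=20):
    """Keep only elements whose text overlaps with the task description.

    Stand-in for the lightweight filtering model: a keyword-overlap score
    replaces the model call so the sketch stays self-contained.
    """
    # Ignore very short words such as "to" or "a".
    keywords = {w.lower() for w in task.split() if len(w) > 2}

    def score(el):
        return len(set(el.text.lower().split()) & keywords)

    relevant = [el for el in elements if score(el) > 0]
    relevant.sort(key=score, reverse=True)
    return relevant[:max_elements]


snapshot = [
    Element("e1", "button", "Add to cart"),
    Element("e2", "link", "Privacy policy"),
    Element("e3", "textbox", "Search products"),
]
trimmed = trim_snapshot(snapshot, "add item to cart")
print([el.ref for el in trimmed])
```

A filter like this can drop an element the primary LLM later needs, which is one plausible reason for the re-requests mentioned in the caption.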

The paper evaluates browser agent performance on the WebGames benchmark and reports approximately 85% success, compared with only 50% for prior browser agents. Key areas of improvement include enhanced context management and bulk action capabilities, which together shorten execution times and reduce token consumption. The detailed cost analysis shows that strategic use of caching and intelligent trimming yields significant cost savings, allowing the architecture to operate within sustainable budget constraints.
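To see why trimming can lower total cost even when it adds tool calls, a rough cost model helps. The sketch below is an assumption-laden simplification: the function name, the relative prices, and the step count are hypothetical, and it ignores conversation history, output tokens, and caching, so it is not expected to reproduce the paper's reported 57% reduction.

```python
def trimming_savings(steps, full_tokens, trimmed_tokens,
                     primary_price, light_price, extra_call_ratio):
    """Fraction of input-token cost saved by trimming, under a toy model.

    Baseline: the primary LLM reads the full snapshot on every step.
    Trimmed: a cheaper model reads the full snapshot, the primary LLM reads
    only the trimmed one, and the number of steps grows by extra_call_ratio.
    Prices are relative per-token costs (hypothetical values).
    """
    baseline = steps * full_tokens * primary_price
    trimmed_steps = steps * (1 + extra_call_ratio)
    trimmed = trimmed_steps * (
        full_tokens * light_price + trimmed_tokens * primary_price
    )
    return 1 - trimmed / baseline


# Snapshot sizes and the 34% call overhead follow the Figure 2 caption;
# the 10:1 price ratio and the 40-step task are assumptions.
saving = trimming_savings(
    steps=40, full_tokens=10_000, trimmed_tokens=750,
    primary_price=1.0, light_price=0.1, extra_call_ratio=0.34,
)
print(f"estimated saving: {saving:.1%}")
```

In this model the saving depends mainly on the price ratio between the two models and on how much the snapshot shrinks, while the step count cancels out.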

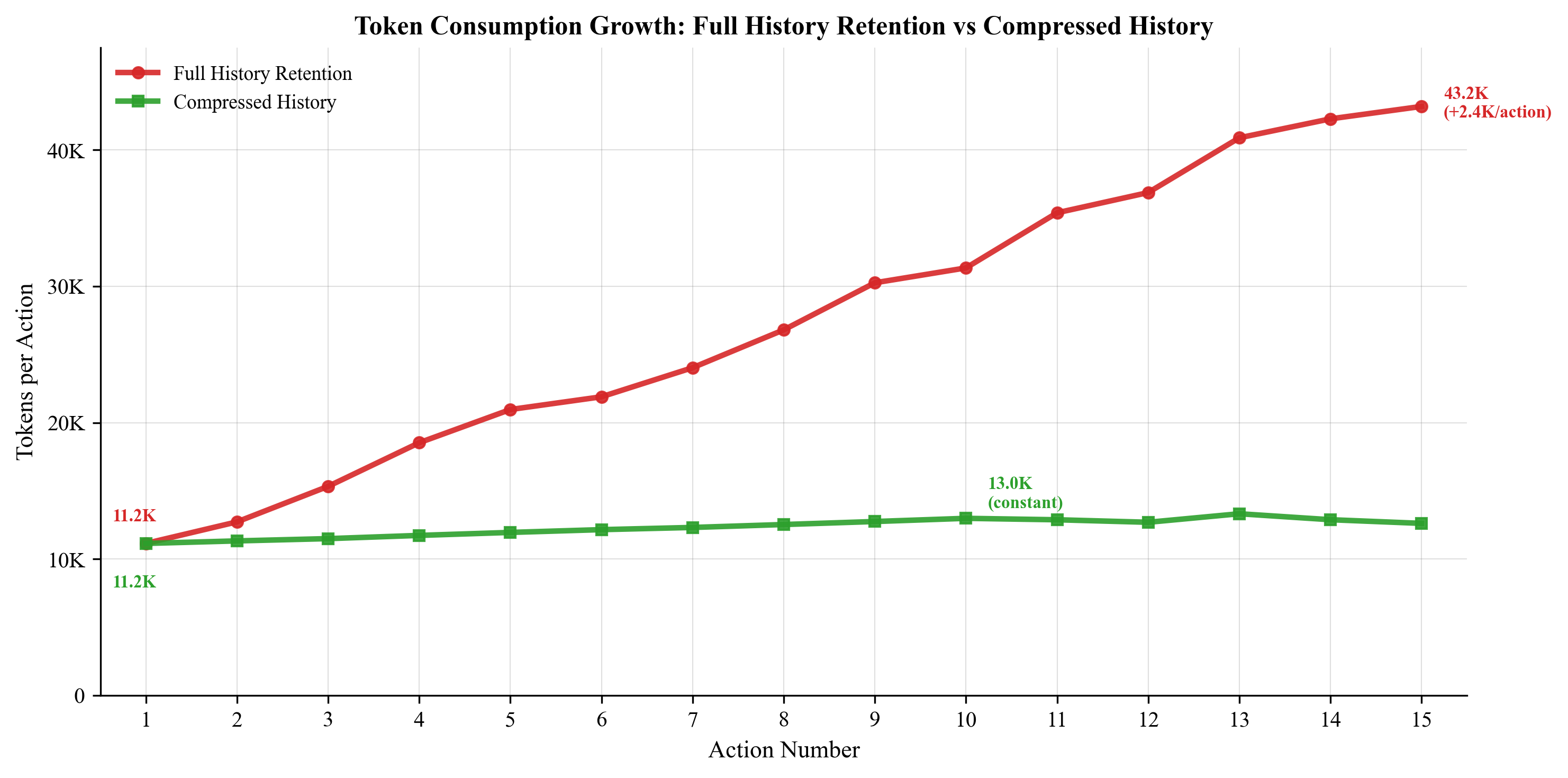

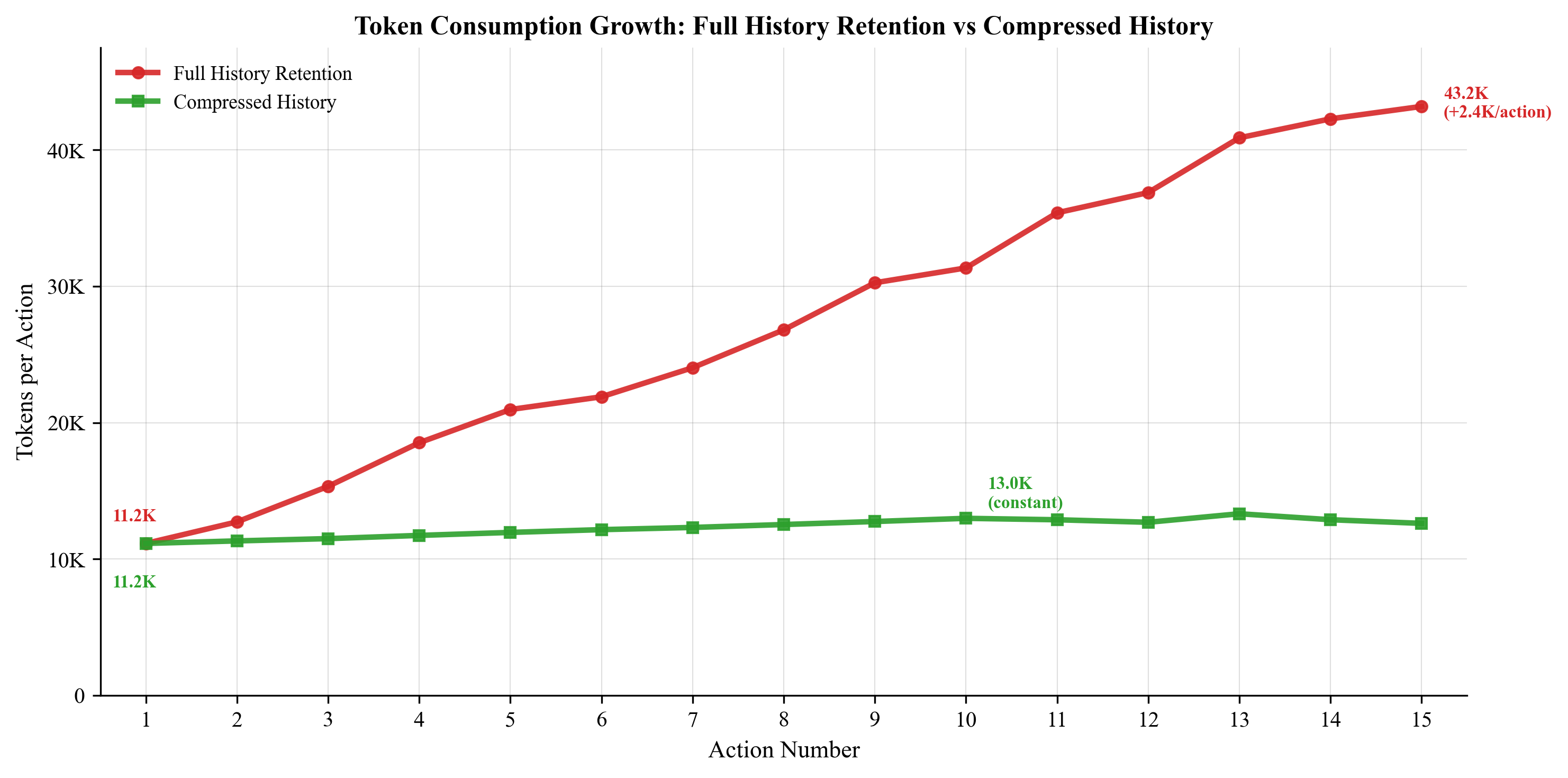

Figure 4 highlights how token consumption stabilizes through selective history compression and intelligent page trimming, providing an economic framework for efficient browser agent operations.

Figure 4: Token consumption: Full History vs. Compressed History. Full history retention leads to linear growth, reaching over 43,000 tokens after 15 actions. The compressed history approach stabilizes around 12,600 tokens, maintaining constant performance and cost regardless of task length.
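One way to picture history compression is to keep the most recent steps in full and reduce older steps to short summaries without their page snapshots. The sketch below assumes that scheme. The summary does not spell out the paper's exact compression rules, so the step format (`action`, `result`, `snapshot` keys), the function name, and the `keep_recent` default are hypothetical.

```python
def compress_history(history, keep_recent=3, summary_chars=80):
    """Return a history where only the last keep_recent steps stay verbatim.

    Older steps keep their action and a truncated result but lose the page
    snapshot, which is typically the largest part of each step.
    """
    split = max(len(history) - keep_recent, 0)
    compressed = [
        {"action": step["action"], "result": step["result"][:summary_chars]}
        for step in history[:split]
    ]
    compressed.extend(history[split:])
    return compressed


history = [
    {"action": f"click e{i}", "result": "ok", "snapshot": "x" * 1000}
    for i in range(10)
]
compact = compress_history(history)
print(sum(len(str(step)) for step in history),
      sum(len(str(step)) for step in compact))
```

With a fixed `keep_recent`, the number of full snapshots stays bounded, and only the short per-step summaries accumulate as the task gets longer.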

Implications and Future Directions

This research suggests a shift towards specialized browser agents tailored to specific tasks or domains, which improves both reliability and security. By enforcing strict domain allowlisting and implementing programmatic safety checks within the execution layer, browser agents can operate more securely. The paper emphasizes the importance of balancing innovation in LLM capabilities with robust architectural foundations, paving the way for safer, scalable deployment in production environments.
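A domain allowlist enforced in the execution layer might look like the following sketch. It is a minimal illustration rather than the paper's code: the domain names, the HTTPS-only rule, and the function names are assumptions. The point is that the check is ordinary deterministic code that runs before any navigation, regardless of what the LLM proposes.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for a shopping-focused agent.
ALLOWED_DOMAINS = {"example.com", "shop.example.org"}


def is_allowed(url, allowed=ALLOWED_DOMAINS):
    """Return True only for HTTPS URLs on an allowed domain or its subdomains."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").lower()
    # Exact match, or a subdomain boundary (".example.com"), so that
    # look-alikes such as "evilexample.com" are rejected.
    return any(host == d or host.endswith("." + d) for d in allowed)


def guard_navigation(url):
    """Raise before the browser navigates anywhere outside the allowlist."""
    if not is_allowed(url):
        raise PermissionError(f"navigation blocked: {url}")
    return url


print(is_allowed("https://example.com/cart"))
print(is_allowed("https://example.com.evil.net/"))
```

Because the decision never passes through the model, injected page text cannot talk the agent into visiting a domain outside the list.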

Future developments in AI-driven web automation may focus on further refining these architectural elements, boosting resilience against ever-evolving security threats, and optimizing computational resource allocation. This trajectory would not only enhance agent robustness but also broaden the scope of autonomous web interactions across diverse application areas.

Conclusion

Building browser agents that are both capable and secure requires an architecture that prioritizes specialized, constraint-enforced tools over generalized browsing intelligence. While LLM advances improve reasoning capabilities, the paper locates the true determinant of browser agent success in the architectural and security framework. As the field progresses, adhering to these foundational principles will be crucial for the safe and efficient automation of web interactions.