The paper provides a comprehensive and systematic survey on LLM-brained GUI agents, tracing the multidisciplinary evolution from conventional GUI automation techniques to the integration of LLMs that endow agents with advanced planning, reasoning, and multimodal perception capabilities.

The survey is organized into several interrelated sections:

- Historical Evolution and Context

The paper begins by discussing the limitations of early automation strategies (random-based, rule-based, and script-based approaches) and illustrates how these methods, while foundational for GUI automation, lack the flexibility required for dynamic, heterogeneous interfaces. It then reviews the transition toward machine learning and reinforcement learning-based techniques, which paved the way for more adaptive GUI agents. The introduction of LLMs, particularly multimodal models, is underscored as a pivotal milestone that transforms GUI automation by enabling agents to interpret natural language and process GUI inputs such as screenshots and widget trees.

- Foundations and System Architecture

The core of the survey dissects the architectural design of LLM-brained GUI agents. Key components are detailed as follows:

- Operating Environment:

The work discusses how agents interact with various platforms (mobile, web, and desktop), emphasizing the extraction of structured data (e.g., DOM trees, accessibility trees) and unstructured visual data (screenshots). The survey illustrates the challenges of state perception in diverse operating environments and the methodologies for capturing comprehensive UI context.

- Prompt Engineering and Model Inference:

Central to the paradigm is the conversion of multimodal environmental states and user requests into detailed prompts. These prompts encapsulate user instructions, UI element properties, and example action sequences to harness in-context learning capabilities of LLMs. The paper further explains how the model inference stage involves planning (often using chain-of-thought reasoning) and subsequent action inference, where the LLM generates discrete commands (e.g., UI operations or native API calls) that translate into tangible interactions.

- Actions Execution:

The agents execute actions via three broad modalities: direct UI operations (mouse, keyboard, touch), native API calls for efficient task execution, and integration of AI tools (such as summarizers or image generators) to enhance performance. The survey highlights the trade-offs between these modalities, such as the robustness of UI operations versus the efficiency gains from direct API manipulations.

- Memory Mechanisms:

To address multi-step tasks, the survey outlines the implementation of both short-term and long-term memory. Short-term memory (STM) is used to maintain the immediate context within a constrained context window of the LLM, while long-term memory (LTM) stores historical trajectories and task outcomes externally. This dual-memory approach enables agents to dynamically adapt their behavior based on accumulated experience.
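The components above (state perception, prompt construction, action inference, execution, and memory) can be tied together in a single agent step. The following is a minimal sketch, not the survey's or any framework's implementation. All names (`UIElement`, `build_prompt`, `stub_llm`, `Memory`, `agent_step`), the prompt layout, and the JSON action schema are assumptions made for illustration, and the model call is replaced by a stub that returns a fixed answer.

```python
import json
from collections import deque
from dataclasses import dataclass, field


@dataclass
class UIElement:
    """One control from a widget or accessibility tree (hypothetical schema)."""
    element_id: int
    role: str
    name: str


def build_prompt(request, elements, recent_steps, example=""):
    """Serialize the user request, UI state, and recent history into one prompt."""
    lines = [f"Task: {request}", "UI elements:"]
    lines += [f"  [{e.element_id}] {e.role}: {e.name}" for e in elements]
    if recent_steps:
        lines.append("Previous steps:")
        lines += [f"  {step}" for step in recent_steps]
    if example:
        lines.append(f"Example: {example}")
    lines.append('Reply with JSON: {"thought": ..., "action": ..., "target": ...}')
    return "\n".join(lines)


def stub_llm(prompt):
    """Stand-in for a real model call (assumption); always clicks element 2."""
    return json.dumps({"thought": "The Save button completes the task.",
                       "action": "click", "target": 2})


@dataclass
class Memory:
    """Short-term memory is a bounded window; long-term memory is an append-only log."""
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    long_term: list = field(default_factory=list)

    def record(self, request, action, outcome):
        self.short_term.append(f"{action['action']}({action['target']}) -> {outcome}")
        self.long_term.append({"task": request, "action": action, "outcome": outcome})


def execute(action, handlers):
    """Dispatch the inferred action to a UI-operation or API-style handler."""
    handler = handlers.get(action["action"])
    if handler is None:
        return "unsupported action"
    return handler(action["target"])


def agent_step(request, elements, memory, llm, handlers):
    """Run one perceive -> prompt -> infer -> act -> remember cycle."""
    prompt = build_prompt(request, elements, list(memory.short_term))
    action = json.loads(llm(prompt))  # a real agent would validate this output
    outcome = execute(action, handlers)
    memory.record(request, action, outcome)
    return action, outcome


elements = [UIElement(1, "textbox", "File name"), UIElement(2, "button", "Save")]
handlers = {"click": lambda target: f"clicked element {target}"}  # hypothetical handler
memory = Memory()
action, outcome = agent_step("Save the document", elements, memory, stub_llm, handlers)
print(outcome)  # clicked element 2
```

In this sketch the bounded `deque` plays the role of short-term memory that must fit in the context window, while the plain list stands in for an external long-term store. A practical system would likely persist the latter to a database or file.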

- Advanced Enhancements and Multi-Agent Systems

The survey addresses a suite of advanced techniques that further push the boundaries of GUI agent capabilities:

- Computer Vision-Based GUI Parsing:

When system-level APIs fail to extract complete UI information, computer vision (CV) models are employed to parse screenshots, detect non-standard widgets, and even infer functional properties of UI elements. Combining CV-based parsing with API-based extraction in this way makes GUI parsing more robust.

- Multi-Agent Frameworks:

The work surveys systems that incorporate multiple, specialized agents operating in a collaborative framework. By decomposing complex tasks into subtasks handled by distinct agents (e.g., a "Document Extractor" or a "Web Retriever"), these architectures demonstrate improved scalability and adaptability.

- Self-Reflection and Self-Evolution:

Techniques such as ReAct and Reflexion are detailed as methods by which agents introspect and adjust their decision-making processes. Self-reflection allows an agent to analyze the outcomes of previous actions and refine future steps, while self-evolution mechanisms let agents update their strategies based on historical successes and failures. The survey relates these mechanisms to reinforcement learning approaches, in which GUI automation tasks are cast as Markov Decision Processes (MDPs) with clearly defined states, actions, and reward structures.
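To make the MDP framing and the idea of reflecting on failed attempts concrete, the sketch below runs a toy GUI task over several trials. It is an illustration under strong assumptions, not the ReAct or Reflexion method as published. Those approaches rely on an LLM to produce natural-language reasoning or reflections, whereas here reflection is replaced by a simple rule that blames the last action of a failed trial. The screens, actions, and names (`TRANSITIONS`, `ReflectiveAgent`, `run_trial`) are hypothetical.

```python
from dataclasses import dataclass, field

# Toy MDP: states are screens, actions are clicks, reward is 1.0 only at the goal.
TRANSITIONS = {
    ("home", "open_settings"): "settings",
    ("home", "open_help"): "help",
    ("settings", "toggle_dark_mode"): "done",
}
ACTIONS = {
    "home": ["open_help", "open_settings"],
    "settings": ["toggle_dark_mode"],
    "help": [],  # dead end: no actions available
}
GOAL = "done"


def step(state, action):
    """Return (next_state, reward) for one transition of the toy MDP."""
    next_state = TRANSITIONS[(state, action)]
    return next_state, (1.0 if next_state == GOAL else 0.0)


@dataclass
class ReflectiveAgent:
    """Remembers (state, action) pairs that led to failure in earlier trials."""
    avoid: set = field(default_factory=set)

    def choose(self, state):
        options = [a for a in ACTIONS.get(state, []) if (state, a) not in self.avoid]
        return options[0] if options else None

    def reflect(self, trajectory):
        """After a failed trial, blame the last action taken and avoid it next time."""
        if trajectory:
            self.avoid.add(trajectory[-1])


def run_trial(agent, max_steps=5):
    """Run one episode from the home screen and return (total_reward, trajectory)."""
    state, trajectory, total = "home", [], 0.0
    for _ in range(max_steps):
        action = agent.choose(state)
        if action is None:
            break
        trajectory.append((state, action))
        state, reward = step(state, action)
        total += reward
        if state == GOAL:
            break
    return total, trajectory


agent = ReflectiveAgent()
rewards = []
for trial in range(3):
    total, trajectory = run_trial(agent)
    rewards.append(total)
    if total > 0:
        break
    agent.reflect(trajectory)
print(rewards)  # [0.0, 1.0]
```

The first trial ends in the dead-end help screen with zero reward. The reflection step records that choice, so the second trial takes the other branch and reaches the goal.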

- Taxonomy and Roadmap for Future Research

In addition to breaking down individual components, the survey introduces an extensive taxonomy covering frameworks, datasets, model architectures, evaluation metrics, and real-world applications. Tables and figures (e.g., detailed visualizations of framework architectures and a taxonomy diagram) serve as blueprints for navigating the state of the art, highlighting both the diversity of current approaches and the interconnections between various research elements. The roadmap section points out critical research gaps, such as the need for more sophisticated environment state perception, more efficient memory management strategies, and improved inter-agent communication protocols.

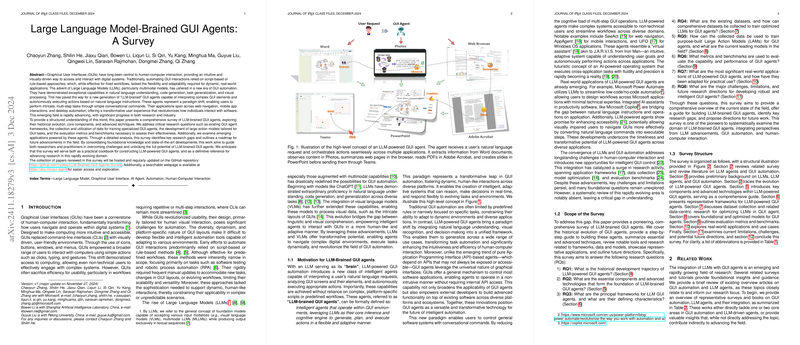

- Applications and Practical Implications

The survey concludes by presenting a broad range of applications, from web navigation and mobile app automation to complex desktop operations, illustrating how LLM-brained GUI agents can transform human-computer interactions by enabling natural language control over software systems. The discussion emphasizes that while these agents have demonstrated strong performance in controlled experiments and industrial prototypes, challenges remain regarding scalability, latency, and safety.

Overall, the paper offers a detailed and balanced synthesis of the current research landscape in LLM-powered GUI automation. It situates recent trends within the broader evolution of GUI automation while cataloging technical advances such as multimodal perception, prompt engineering, action inference, and memory integration. Researchers in the field will find this survey a valuable reference both for understanding the state of the art and for identifying fertile directions for future investigation.