- The paper finds that AI Overviews appear in 84% of queries and that their answers are inconsistent with Featured Snippets in 33% of the cases where both appear.

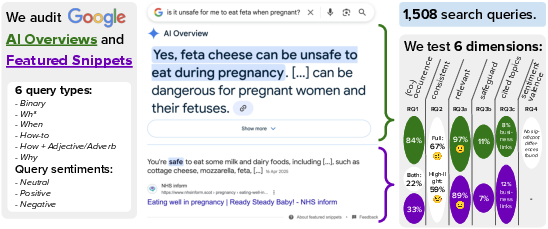

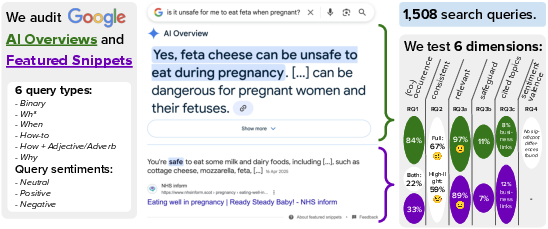

- It employs an algorithmic audit of 1,508 baby care and pregnancy queries, evaluating quality, relevance, and the presence of medical safeguards.

- It reveals that limited safeguard cues and commercial bias in Featured Snippets raise potential risks of misinformation in sensitive health contexts.

Analysis of the Audit Study on Google’s AI Overviews and Featured Snippets

Introduction

AI-generated content is increasingly integrated into web search results through features such as Google's AI Overviews (AIO) and Featured Snippets (FS). This paper audits both features for baby care and pregnancy-related queries. The findings highlight substantial inconsistencies between them and the risks that unreliable health information poses when it is surfaced through these features.

Methodology

The study ran an algorithmic audit of 1,508 queries on baby care and pregnancy topics. The evaluation framework covered five quality dimensions: answer consistency, relevance, presence of medical safeguards, source credibility, and sentiment alignment. Labels for these categories were assigned manually to compare quality and information consistency between AIO and FS across query types and query sentiments.
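As a rough illustration of how the labels described above could be stored per query, here is a minimal sketch. The class name `AuditRecord` and every field name are hypothetical; the paper's actual annotation schema is not given in this summary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AuditRecord:
    """One audited query. All field names are hypothetical."""

    query: str
    aio_answer: Optional[str] = None      # None when no AI Overview appeared
    fs_answer: Optional[str] = None       # None when no Featured Snippet appeared
    consistent: Optional[bool] = None     # manual label; only meaningful when both appear
    aio_relevant: Optional[bool] = None   # manual relevance label for the AIO answer
    fs_relevant: Optional[bool] = None    # manual relevance label for the FS answer
    aio_safeguard: Optional[bool] = None  # does the AIO answer carry a medical safeguard cue?
    fs_safeguard: Optional[bool] = None   # does the FS answer carry a medical safeguard cue?

    @property
    def co_occurring(self) -> bool:
        """True when both an AIO and an FS were shown for this query."""
        return self.aio_answer is not None and self.fs_answer is not None
```

Keeping the labels as `Optional[bool]` separates "labeled negative" from "not applicable", which matters because consistency can only be judged when both features appear.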

Figure 1: Overview of the audit's methods and results for Google's AI Overviews (AIO) and Featured Snippets (FS).

Prevalence and Inconsistency

AIOs appear far more often than FS: in 84% of queries, compared with 32.5% for FS. When both features appear for the same query, their answers are inconsistent in 33% of cases. Among these inconsistencies, binary contradictions pose a serious risk, particularly in medical contexts where conflicting advice about substance safety or health risks can lead to harmful decisions.
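The prevalence and inconsistency figures above are simple proportions, but they use different denominators: prevalence is taken over all queries, inconsistency only over queries where both features appear. The sketch below makes that explicit. The `toy` labels are invented for illustration and are not data from the paper; the approximate counts at the end are back-of-envelope values derived from the reported percentages.

```python
def share(flags):
    """Fraction of True values in an iterable of booleans (0.0 if empty)."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


# Toy labels, invented purely for illustration.
# Each tuple: (has_aio, has_fs, consistent), where consistent is None
# when the two features do not co-occur.
toy = [
    (True, True, True),
    (True, True, False),
    (True, False, None),
    (False, True, None),
    (True, True, True),
]

# Prevalence: denominator is every audited query.
aio_prevalence = share(has_aio for has_aio, _, _ in toy)
fs_prevalence = share(has_fs for _, has_fs, _ in toy)

# Inconsistency: denominator is only the co-occurring queries.
co_occurring = [consistent for has_aio, has_fs, consistent in toy if has_aio and has_fs]
inconsistency_rate = share(not consistent for consistent in co_occurring)

# Approximate query counts implied by the reported percentages.
TOTAL_QUERIES = 1508
approx_aio_queries = round(0.84 * TOTAL_QUERIES)   # about 1267
approx_fs_queries = round(0.325 * TOTAL_QUERIES)   # about 490
```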

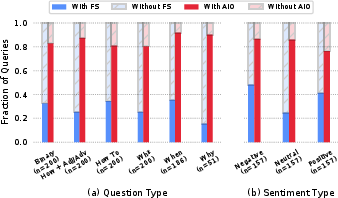

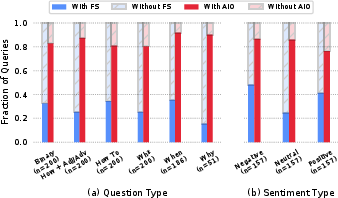

Figure 2: Fractional Appearance Distribution of AIO answer and FS answer by question type and question sentiment.

Relevance ratings are generally high (96.6% for AIOs and 88.7% for FS), but both features show a critical lack of safeguard cues: only 11% of AIO and 7% of FS responses contain medical safety warnings. This shortfall is particularly concerning given the health risks of misinformation in a domain as sensitive as pregnancy.
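To make the idea of a safeguard cue concrete, the sketch below flags answers that contain a phrase directing the reader to professional medical advice. This keyword heuristic is an assumption for illustration only: the cue phrases are hypothetical, and this summary does not say that the paper detected safeguards this way.

```python
import re

# Hypothetical cue phrases; the paper's own labeling criteria are not given here.
SAFEGUARD_PATTERNS = [
    r"\bconsult (?:your|a) (?:doctor|physician|pediatrician|healthcare provider)\b",
    r"\btalk to your (?:doctor|midwife|pediatrician)\b",
    r"\bseek medical (?:advice|attention|care)\b",
    r"\bnot a substitute for\b",
]
_SAFEGUARD_RE = re.compile("|".join(SAFEGUARD_PATTERNS), re.IGNORECASE)


def has_safeguard_cue(answer: str) -> bool:
    """Return True if the answer text contains any of the cue phrases."""
    return _SAFEGUARD_RE.search(answer) is not None


def safeguard_rate(answers):
    """Fraction of answers that contain at least one cue (0.0 if empty)."""
    answers = list(answers)
    if not answers:
        return 0.0
    return sum(has_safeguard_cue(a) for a in answers) / len(answers)
```

A heuristic like this would miss paraphrased warnings and could be used at most as a first pass before manual review.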

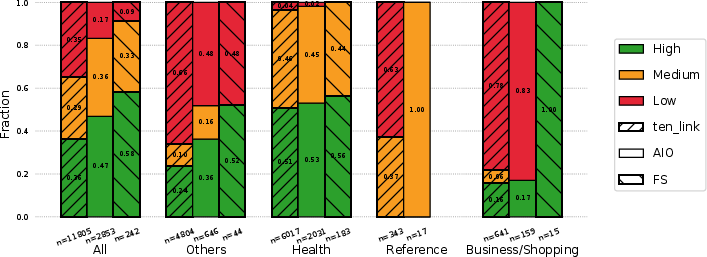

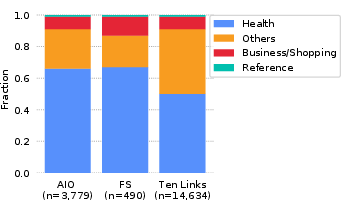

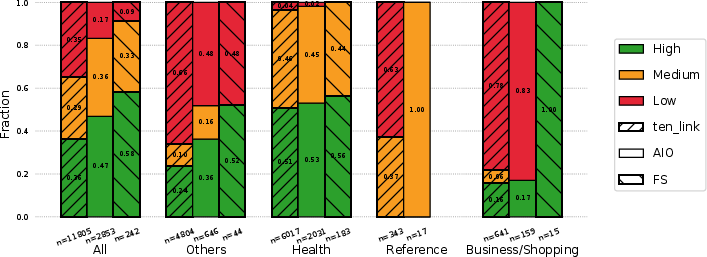

Source Credibility and Query Sentiment

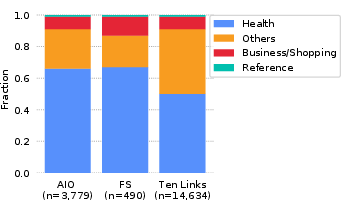

Health and wellness websites dominate the source categories for both features, yet FS frequently cites commercial sites, which may compromise objectivity. FS also appears more responsive to emotionally negative query formulations, suggesting that the sentiment of a query can bias how information is presented.
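A fractional distribution of source categories, like the one discussed above, can be computed by mapping each cited URL to its domain and each domain to a category. The sketch below assumes a hand-built lookup table; the domains and category names in `DOMAIN_CATEGORY` are placeholders, not the paper's taxonomy.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical domain-to-category lookup; entries are placeholders.
DOMAIN_CATEGORY = {
    "example-health.org": "health_wellness",
    "example-shop.com": "commercial",
    "example-agency.gov": "government",
}


def domain_of(url: str) -> str:
    """Extract the host from a URL and drop a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def category_distribution(urls):
    """Return {category: fraction of cited URLs}; unknown domains count as 'other'."""
    counts = Counter(DOMAIN_CATEGORY.get(domain_of(u), "other") for u in urls)
    total = sum(counts.values())
    return {cat: n / total for cat, n in counts.items()} if total else {}
```

Running this separately on the sources cited by AIO answers and by FS answers would yield two distributions that can be compared directly.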

Figure 3: Fractional distribution of source credibility for top 10% domains in AIO/FS answers and the ten blue links.

Implications and Conclusion

The paper underscores the urgency of stronger quality controls for AI-mediated health information. Its empirical findings carry significant implications for public health and point to the need for better audit methodologies that improve information accuracy, particularly in high-stakes domains. Future work should extend these audits to other critical domains and examine how evolving AI technologies affect the reliability and quality of information available to end users.

Figure 4: Fractional distribution of major categories of domains sourcing AIO/FS answers and the ten blue links.

In conclusion, as AI-generated search features become more prominent in delivering information on critical health topics, ensuring their consistency, reliability, and safety remains imperative. The methodology and insights from this paper offer a scalable framework for ongoing evaluation and improvement of AI search components.