Analyzing the Sociotechnical Dynamics of Answer Engines in AI-Based Search

The paper "Search Engines in an AI Era: The False Promise of Factual and Verifiable Source-Cited Responses" presents a comprehensive study of the limitations and societal implications of answer engines. As LLMs become increasingly integrated into daily information retrieval tasks, they are shifting from research instruments into influential everyday technologies. This transformation demands a clear understanding of their utility and impact beyond the surface level, especially within the sociotechnical framing that the paper adopts.

Key Findings from the Usability Study

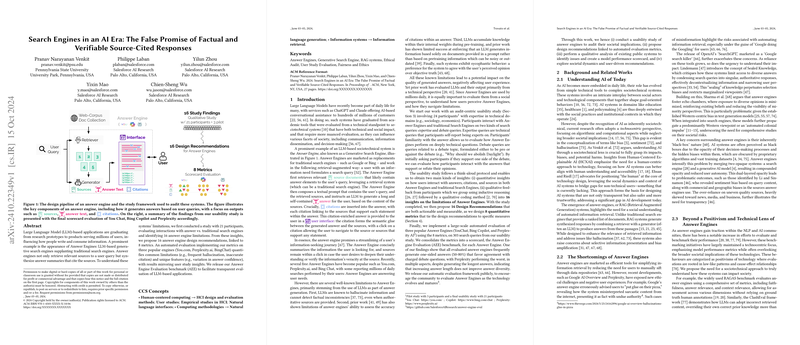

The authors conducted an audit-centric usability study involving 21 participants, comparing answer engines with traditional search engines. The study identified 16 core limitations of answer engines, which can be grouped by the four main components of an answer engine: the generated answer text, citations, sources, and user interface. Three notable limitations are:

- The Lack of Objective Detail and Balance: Participants noted that answers were often devoid of necessary depth and presented one-sided perspectives. This propensity limits the exploration of diverse views, particularly in answering opinionated or debate-based queries.

- Confidence and Improper Source Attribution: The study revealed that answer engines often exhibited unjustified confidence in their responses and frequently misattributed citations. This raises concerns about the trustworthiness and factuality of the information these engines present.

- User Autonomy and Source Transparency: Participants reported a lack of control over source selection and verification, which they attributed to a largely opaque system design. This opacity undermines both user trust and the user's ability to verify the accuracy of information independently.

Quantitative Evaluation Metrics and Results

Building on insights from the usability study, the authors propose eight evaluation metrics for a systematic assessment of answer engines. These metrics examine aspects such as citation accuracy, statement relevance, and source necessity. Applying this framework to three popular answer engines (You.com, Perplexity.ai, and BingChat) revealed substantial room for improvement. The engines frequently generate one-sided and overconfident answers, with Perplexity notably underperforming because it expresses high confidence regardless of the nature of the question.
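To make the idea of a citation-level metric concrete, the following is a minimal Python sketch of how two such scores could be computed from human or automated support judgments. It is an illustration under stated assumptions, not the authors' implementation: the `Statement` structure, the `supported_by` annotation, and the exact formulas are hypothetical, and `supported_statement_rate` is an invented companion score rather than one of the paper's eight metrics.

```python
from dataclasses import dataclass, field


@dataclass
class Statement:
    """One sentence of a generated answer (hypothetical structure)."""
    text: str
    # IDs of the sources the engine cited for this statement.
    cited: list[int] = field(default_factory=list)
    # IDs of the sources an annotator judged to actually support it (assumed input).
    supported_by: set[int] = field(default_factory=set)


def citation_accuracy(statements: list[Statement]) -> float:
    """Fraction of emitted citations that point to a supporting source."""
    total = sum(len(s.cited) for s in statements)
    if total == 0:
        return 0.0
    correct = sum(1 for s in statements for c in s.cited if c in s.supported_by)
    return correct / total


def supported_statement_rate(statements: list[Statement]) -> float:
    """Fraction of statements with at least one citation that supports them."""
    if not statements:
        return 0.0
    ok = sum(1 for s in statements if any(c in s.supported_by for c in s.cited))
    return ok / len(statements)


# Toy answer: three statements, three citations, only one of them correct.
answer = [
    Statement("Claim A", cited=[1, 2], supported_by={1}),
    Statement("Claim B", cited=[3], supported_by=set()),
    Statement("Claim C", cited=[], supported_by={2}),
]

print(f"citation accuracy: {citation_accuracy(answer):.2f}")
print(f"supported statement rate: {supported_statement_rate(answer):.2f}")
```

In this toy example, "Claim C" has a supporting source available but no citation at all, which is the kind of gap a citation-level score alone would miss and a statement-level score surfaces.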

Broader Implications

From a practical perspective, the findings underscore the need for continuous evaluation and transparency as these systems spread further into domains such as healthcare and education. On the theoretical side, they raise the question of how autonomous search engines may evolve into more comprehensive decision-making tools. As these technologies mature, their influence on users' critical thinking and information verification practices demands scrutiny.

Future Developments

Looking forward, this field may witness advancements through improved interaction models that involve human feedback and better contextual understanding. Establishing robust governance structures and policies around AI applications remains crucial to mitigate bias and maintain ethical standards in information dissemination.

Conclusion

In conclusion, this paper emphasizes the importance of developing answer engines that are not only powerful in generating useful information but also aligned with ethical and transparent practices that support user empowerment. By conducting a meticulous audit, the authors contribute substantially to the discourse on AI-driven information retrieval systems, setting a precedent for future AI technologies and their integration into societal frameworks.