- The paper introduces AlphaResearch, a research agent that combines idea generation with a dual research environment (execution-based verification plus simulated peer review) to accelerate algorithm discovery.

- It employs a reward model trained on historical peer reviews that reaches 72% classification accuracy on unseen ideas, outperforming baseline models.

- AlphaResearch surpasses the best human result on the packing circles problem, showing its potential on open-ended research tasks.

AlphaResearch: Accelerating New Algorithm Discovery with LLMs

The paper introduces AlphaResearch, an autonomous agent that uses LLMs to accelerate the discovery of new algorithms for open-ended problems. The agent pairs idea generation with a dual research environment that combines execution-based verification and simulated peer-review rewards.

Motivation and Background

AlphaResearch is motivated by the limitations of previous approaches to algorithm discovery with LLMs. Existing methods often suffer from biased verification and misalignment when evaluating machine-generated ideas, which restricts how far novel concepts can be explored. Prior systems such as AlphaEvolve rely on execution-based verification, which can confirm that code is technically correct but may reward results of little scientific significance. Idea-generation systems evaluated by LLM judges have the opposite problem: they may propose innovative concepts that are computationally infeasible.

AlphaResearch Approach

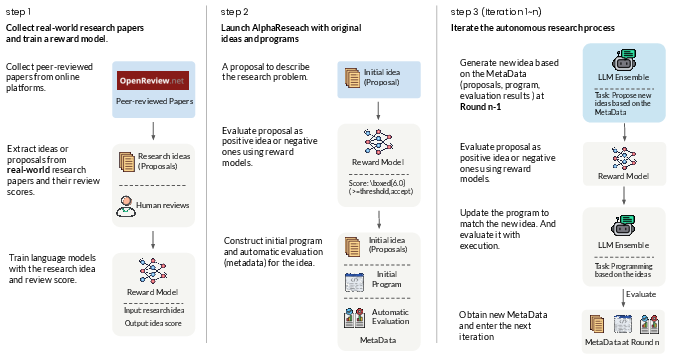

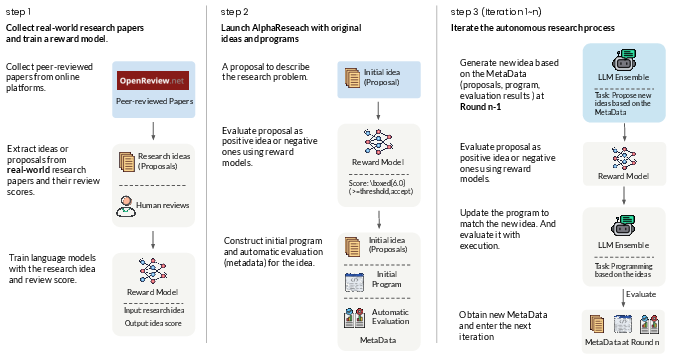

AlphaResearch aims to combine feasibility and innovation by integrating a dual research environment. The agent iteratively performs three steps: proposing new ideas, verifying these ideas within this dual environment, and optimizing the research proposals for enhanced performance.

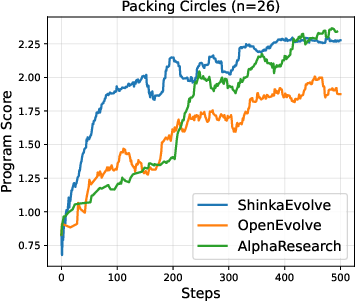

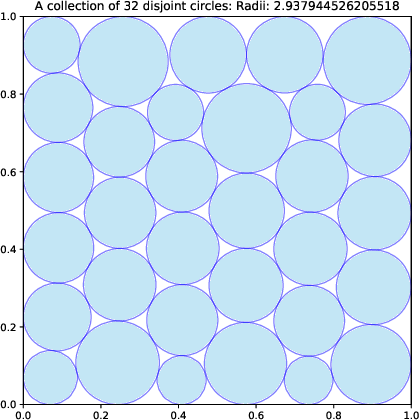

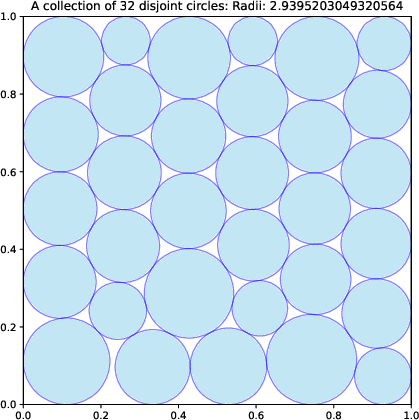

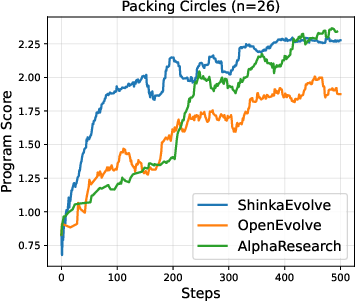

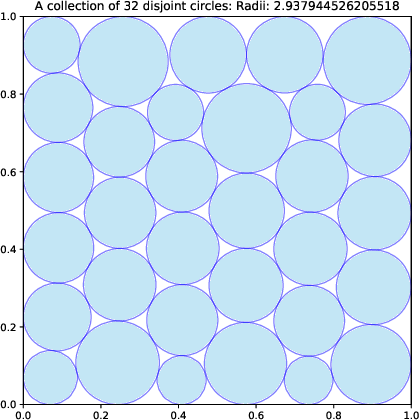

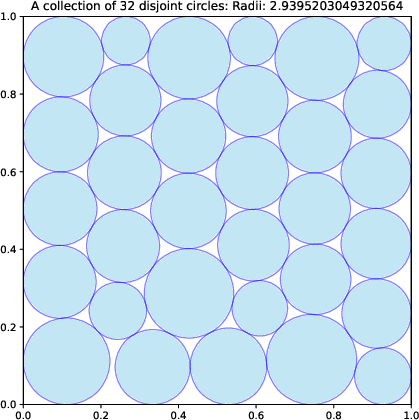

Figure 1: Comparison of OpenEvolve, ShinkaEvolve, and AlphaResearch on the Packing Circles (n=26) problem. AlphaResearch outperforms the other two.

Dual Research Environment

This environment combines rewards from simulated peer review with execution-based rewards. The simulated peer-review side uses a reward model (AlphaResearch-RM-7B) trained on historical, real-world peer-review data, so idea candidates are scored on more than whether their code runs well.

Figure 2: The launch of AlphaResearch involves training reward models using peer-reviewed records and preparing initial proposals.

Execution-Based Verification

Execution-based rewards come from an automatic verification environment that focuses on the program-execution side of algorithm discovery. Generated programs are run and evaluated, and promising candidates are stored for optimization in subsequent rounds.
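To make the idea of an execution-based reward concrete, here is a minimal sketch of a verifier for a circle-packing candidate. It assumes the circles must lie inside the unit square and must not overlap, and that the reward is the sum of radii; the unit-square container, the function name `packing_score`, and the tolerance `tol` are illustrative assumptions, not details taken from the paper.

```python
import math
from typing import List, Tuple

# A circle is (x, y, r): centre coordinates and radius.
Circle = Tuple[float, float, float]


def packing_score(circles: List[Circle], tol: float = 1e-9) -> float:
    """Return the sum of radii for a valid packing, or -inf if invalid.

    Assumption (hypothetical setup): circles must fit inside the unit
    square [0, 1] x [0, 1] and may touch but not overlap.
    """
    invalid = float("-inf")

    # Every circle needs a positive radius and must stay inside the square.
    for x, y, r in circles:
        if r <= 0:
            return invalid
        if x - r < -tol or x + r > 1 + tol:
            return invalid
        if y - r < -tol or y + r > 1 + tol:
            return invalid

    # No pair of circles may overlap (centre distance >= sum of radii).
    for i in range(len(circles)):
        xi, yi, ri = circles[i]
        for j in range(i + 1, len(circles)):
            xj, yj, rj = circles[j]
            if math.hypot(xi - xj, yi - yj) < ri + rj - tol:
                return invalid

    return sum(r for _, _, r in circles)


if __name__ == "__main__":
    # Four equal circles in a 2 x 2 grid: valid, sum of radii is 1.0.
    grid = [(0.25, 0.25, 0.25), (0.75, 0.25, 0.25),
            (0.25, 0.75, 0.25), (0.75, 0.75, 0.25)]
    print(packing_score(grid))
```

Returning negative infinity for an invalid packing means a candidate that violates a constraint can never be ranked above a valid one, whatever its raw sum of radii.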

AlphaResearchComp Benchmark

The AlphaResearchComp benchmark was constructed to compare AlphaResearch with human researchers across 8 open-ended research tasks. This benchmark tests the agent's discovery capabilities against human-best results in fields such as geometry, combinatorial optimization, and harmonic analysis.

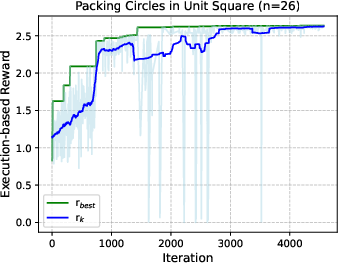

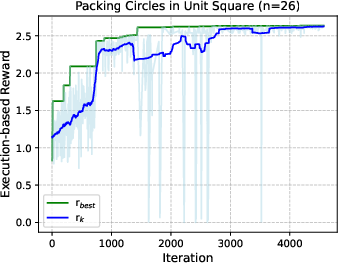

AlphaResearch achieves a 2/8 win rate against expert humans. Most notably, on the "Packing Circles" problem it finds solutions whose sum of radii exceeds the best human result.

Figure 3: Execution-based reward trends for AlphaResearch on packing circles (n=26) and third autocorrelation inequality problems.

Implementation Details

AlphaResearch's iterative algorithm proposes ideas guided by a reward model trained on peer reviews, then generates programs for those ideas and optimizes them against execution-based rewards. Over successive rounds, both the ideas and the execution results improve, moving toward novel algorithmic solutions.
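The loop described here can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: the callables `propose`, `review_score`, and `generate_and_run` are hypothetical stand-ins for the LLM proposer, the peer-review reward model, and the execution environment, and the fixed `review_threshold` with greedy best-so-far selection is an assumed policy.

```python
from typing import Callable, Tuple


def research_loop(
    propose: Callable[[str, float], str],     # hypothetical: LLM idea proposer
    review_score: Callable[[str], float],     # hypothetical: peer-review reward model
    generate_and_run: Callable[[str], float],  # hypothetical: program generation + execution
    seed_idea: str,
    seed_score: float,
    rounds: int,
    review_threshold: float,
) -> Tuple[str, float]:
    """Greedy propose / review / execute loop (illustrative only)."""
    best_idea, best_score = seed_idea, seed_score
    for _ in range(rounds):
        # 1. Propose a new idea conditioned on the best result so far.
        idea = propose(best_idea, best_score)

        # 2. Simulated peer review: skip ideas the reward model scores too low.
        if review_score(idea) < review_threshold:
            continue

        # 3. Execution-based verification: run the program and read its score.
        score = generate_and_run(idea)

        # 4. Keep the candidate only if it improves the execution reward.
        if score > best_score:
            best_idea, best_score = idea, score
    return best_idea, best_score


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs end to end.
    result = research_loop(
        propose=lambda idea, score: idea + "x",
        review_score=lambda idea: 1.0 if len(idea) % 2 == 0 else 0.0,
        generate_and_run=lambda idea: float(len(idea)),
        seed_idea="a",
        seed_score=0.0,
        rounds=3,
        review_threshold=0.5,
    )
    print(result)
```

Placing the review check before execution reflects the filtering role the summary gives the reward model: ideas rejected by simulated peer review never consume execution budget.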

Reward Model

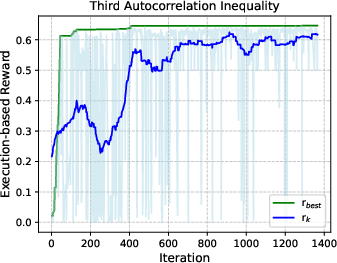

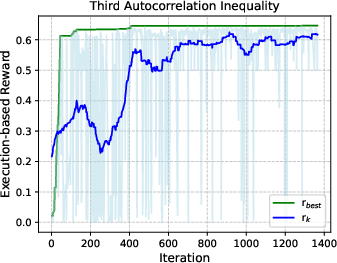

AlphaResearch-RM-7B was trained on peer reviews from past ICLR conferences and is used to filter newly proposed ideas by their predicted novelty and impact. The reward model reaches a classification accuracy of 72% on unseen ICLR ideas, outperforming baseline models.
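As a reminder of what a classification-accuracy figure like this measures, the sketch below computes accuracy for a scorer whose outputs are thresholded into binary decisions. The binary accept/reject framing, the function name `classification_accuracy`, the 0.5 threshold, and the toy data are all assumptions made for illustration; the summary does not specify how labels or thresholds were defined.

```python
from typing import Sequence


def classification_accuracy(
    scores: Sequence[float],
    labels: Sequence[int],
    threshold: float = 0.5,
) -> float:
    """Fraction of items where (score >= threshold) matches the 0/1 label.

    Assumption: labels are binary (1 = positive, 0 = negative) and the
    reward model emits one scalar score per idea.
    """
    if len(scores) != len(labels) or len(scores) == 0:
        raise ValueError("scores and labels must be non-empty and equal length")
    correct = sum((s >= threshold) == bool(y) for s, y in zip(scores, labels))
    return correct / len(scores)


if __name__ == "__main__":
    # Toy data, not from the paper: two of four predictions are correct.
    print(classification_accuracy([0.9, 0.2, 0.6, 0.4], [1, 0, 0, 1]))
```

Accuracy alone does not show whether errors are mostly false accepts or false rejects, which matters when the model is used as a filter in front of an expensive execution step.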

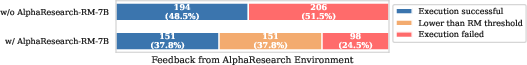

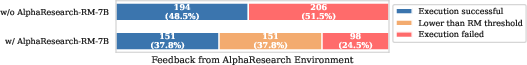

Figure 4: Impact of peer review environment on execution results, highlighting AlphaResearch-RM-7B's filtering effectiveness.

Analysis and Ablations

Compared with OpenEvolve and with execution-only research agents, AlphaResearch performs better, which the analysis attributes to the dual research setup. Proposing ideas before generating programs makes both idea verification and subsequent optimization more effective.

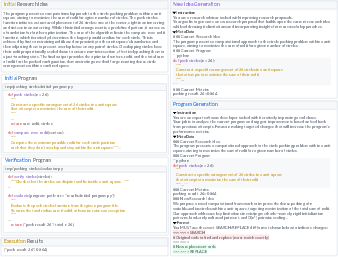

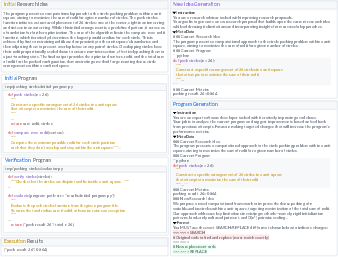

Figure 5: Example of a task formatted for AlphaResearch.

Case Study

A "Packing Circles" case study illustrates AlphaResearch's process: initial proposals evolve through systematic idea verification and program execution toward better solutions.

Conclusion

AlphaResearch establishes a pathway for using LLMs to push the boundaries of human research and offers insights for the development of future research agents. Remaining limitations include applicability to a broader range of real-world problems and the scale and sophistication of the reward model.

Figure 6: New construction found by AlphaResearch that surpasses the AlphaEvolve bound on packing circles, achieving a larger sum of radii.