- The paper introduces a novel semantics-guided fuzzy control framework that integrates LLM semantic abstraction with fuzzy logic for underwater multi-robot coverage.

- The methodology employs LLM-driven semantic encoding and expert-based fuzzy inference to translate sensor data into continuous control signals for coordinated navigation.

- Experimental results in simulated environments demonstrate high coverage efficiency, robust performance, and scalable coordination under uncertain conditions.

LLM-Driven Fuzzy Control in Multi-Robot Underwater Coverage

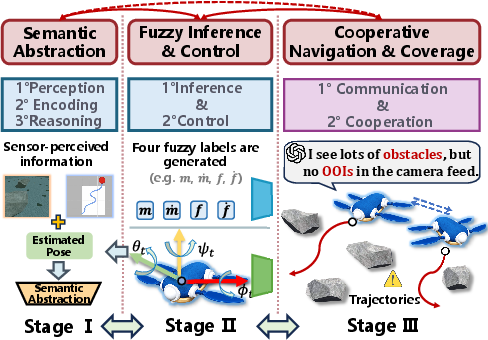

The paper "When Semantics Connect the Swarm: LLM-Driven Fuzzy Control for Cooperative Multi-Robot Underwater Coverage" addresses cooperative coverage by multiple underwater robots. It introduces a semantics-guided fuzzy control framework that combines LLMs with fuzzy logic to improve perception, decision-making, and coordination among robots in environments where global localization is unavailable, communication is unreliable, and environmental conditions are uncertain.

Introduction to Semantics-Guided Fuzzy Control

Underwater environments pose significant challenges: visibility is limited, communication is unreliable, and dynamic obstacles are uncertain. Traditional methods such as geometry-based planning, optimization-based consensus control, and learning-based approaches are often inefficient underwater because they rely on stable mapping, frequent information exchange, or large amounts of data (2511.00783). The paper addresses these issues by using LLMs for semantic abstraction, fuzzy inference for controllable navigation, and semantic communication for multi-robot coordination.

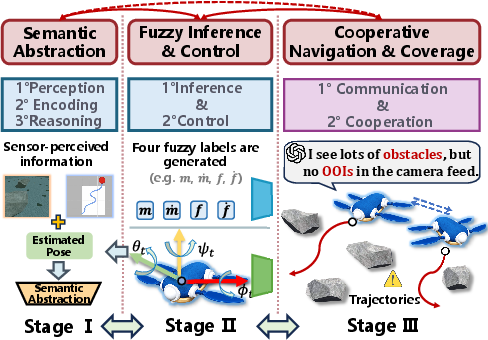

Figure 1: Schematic illustration of the proposed semantics-guided fuzzy control framework, comprising Semantic Abstraction, Fuzzy Inference and Control, and Cooperative Navigation and Coverage, which together enable intelligent perception, decision-making, and coordination among multiple robots in the underwater coverage task.

LLM-Guided Semantic Abstraction

Multimodal Perception and Semantic Encoding

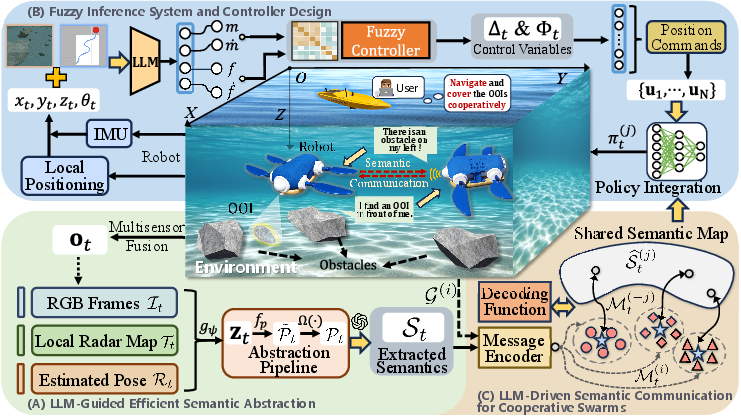

In this framework, raw sensory inputs from RGB cameras, IMU, and radar are processed into high-level semantic tokens via LLMs. This process reduces redundancy and enhances interpretability by abstracting environmental data into concise descriptors of obstacles and exploration potential. For instance, descriptions like "Front area partially explored; dense obstacles on the left" enable more sophisticated reasoning without significant computational overhead.

Structured Prompting for Context-Aware Reasoning

Structured reasoning prompts are generated to query LLMs for semantic inference, ensuring consistency in environmental grounding, temporal behavior continuity, and alignment with mission objectives. These refined semantic abstractions guide downstream decision-making and control processes, providing adaptability in complex underwater environments.
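The structured prompt described above can be illustrated with a minimal sketch. The section names, the `PromptContext` fields, and the answer format are all hypothetical and chosen only to mirror the three consistency goals (environmental grounding, temporal continuity, mission alignment); the paper's actual prompt template is not given in this summary.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptContext:
    """Hypothetical container for the information a structured prompt carries."""
    observation: str        # current semantic description (environmental grounding)
    previous_action: str    # last navigational intent (temporal continuity)
    mission_goal: str       # coverage objective (mission alignment)
    peer_tokens: List[str] = field(default_factory=list)  # messages from teammates


def build_prompt(ctx: PromptContext) -> str:
    """Assemble a fixed-layout prompt so every query has the same sections."""
    peers = "\n".join(f"- {t}" for t in ctx.peer_tokens) or "- none"
    sections = [
        ("Observation", ctx.observation),
        ("Previous action", ctx.previous_action),
        ("Mission goal", ctx.mission_goal),
        ("Peer messages", peers),
        # Assumed answer format; it keeps the reply easy to parse downstream.
        ("Answer format", "One line: <turning bias>; <propulsion strength>"),
    ]
    return "\n\n".join(f"[{title}]\n{body}" for title, body in sections)


if __name__ == "__main__":
    ctx = PromptContext(
        observation="Front area partially explored; dense obstacles on the left",
        previous_action="advance",
        mission_goal="cover all objects of interest",
    )
    print(build_prompt(ctx))
```

Keeping the section order fixed is one simple way to make successive queries comparable, which is the kind of consistency the structured prompts are meant to provide.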

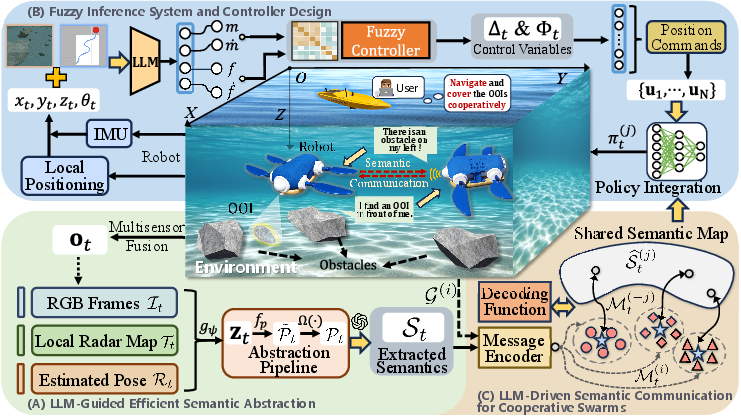

Figure 2: Overview of the semantics-guided fuzzy control framework, which consists of three modules: (A) LLM-Guided Efficient Semantic Abstraction; (B) Fuzzy Inference System and Controller Design; (C) LLM-Driven Semantic Communication for Cooperative Swarms. The framework integrates LLM-guided semantic abstraction, fuzzy inference control, and semantic communication to form a closed-loop perception–reasoning–action cycle. Together, these modules enable interpretable, adaptive, and cooperative navigation for the multi-robot system under uncertain underwater conditions.

Fuzzy Inference for Control and Coordination

Fuzzy Inference System

The semantic descriptors are converted into fuzzy linguistic variables that define navigational intents, such as turning bias and propulsion strength. A fuzzy inference system translates these descriptors into continuous control signals employing expert-informed rules and membership functions.
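As an illustration of the fuzzification step, the sketch below maps a crisp turning-bias score to membership degrees in three linguistic terms. The score range of [-1, 1], the term names, and the triangular membership shapes are assumptions made for the example; the summary does not specify the membership functions the authors use.

```python
def tri(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership function: 0 at a and c, peak of 1 at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical linguistic terms for a turning bias in [-1, 1].
# The outer triangles peak at the range limits, so -1 is fully "left"
# and +1 is fully "right".
TURNING_TERMS = {
    "left": (-2.0, -1.0, 0.0),
    "straight": (-1.0, 0.0, 1.0),
    "right": (0.0, 1.0, 2.0),
}


def fuzzify_turning_bias(bias: float) -> dict:
    """Return the membership degree of `bias` in each linguistic term."""
    bias = max(-1.0, min(1.0, bias))  # clamp to the assumed range
    return {term: tri(bias, *abc) for term, abc in TURNING_TERMS.items()}


if __name__ == "__main__":
    print(fuzzify_turning_bias(0.5))
    # {'left': 0.0, 'straight': 0.5, 'right': 0.5}
```

A bias of 0.5 is therefore treated as half "straight" and half "right", which is what lets the later inference stage blend rules instead of switching abruptly between them.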

Fuzzy Controller Design

A fuzzy controller accepts inputs related to moment and force variables, using expert-defined fuzzy rules to generate steering and gait frequency outputs. This design supports stable and interpretable control actions, which respond adaptively to situational changes in perception.
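A compact sketch of such a controller is shown below. It uses zero-order Sugeno inference (a weighted average of singleton rule outputs) as a simple stand-in, because the summary does not state which inference or defuzzification method the paper uses. The input ranges, term names, and every rule value are hypothetical.

```python
def tri(x, a, b, c):
    """Triangular membership function: 0 at a and c, peak of 1 at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


# Assumed input ranges: moment in [-1, 1], force in [0, 1].
MOMENT_SETS = {"left": (-2, -1, 0), "zero": (-1, 0, 1), "right": (0, 1, 2)}
FORCE_SETS = {"weak": (-1, 0, 1), "strong": (0, 1, 2)}

# Illustrative rule base (not from the paper):
# (moment term, force term) -> (steering in [-1, 1], gait frequency in Hz)
RULES = {
    ("left", "weak"): (-1.0, 0.5),
    ("left", "strong"): (-0.5, 1.0),
    ("zero", "weak"): (0.0, 0.5),
    ("zero", "strong"): (0.0, 1.5),
    ("right", "weak"): (1.0, 0.5),
    ("right", "strong"): (0.5, 1.0),
}


def fuzzy_control(moment: float, force: float):
    """Blend all rules by firing strength and return (steering, frequency)."""
    moment = max(-1.0, min(1.0, moment))
    force = max(0.0, min(1.0, force))
    steer_sum = freq_sum = weight_sum = 0.0
    for (m_term, f_term), (steer, freq) in RULES.items():
        # Firing strength: AND of the two antecedents, taken as the minimum.
        w = min(tri(moment, *MOMENT_SETS[m_term]), tri(force, *FORCE_SETS[f_term]))
        steer_sum += w * steer
        freq_sum += w * freq
        weight_sum += w
    if weight_sum == 0.0:
        return 0.0, 0.0
    return steer_sum / weight_sum, freq_sum / weight_sum


if __name__ == "__main__":
    print(fuzzy_control(0.5, 0.5))  # (0.375, 0.875)
```

Because every rule is a readable (condition, action) pair, the table itself documents the controller's behavior, which is the interpretability benefit the design aims for.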

Closed-Loop Control Execution

The control outputs dictate biologically inspired gait dynamics, allowing robots to navigate complex terrains with smooth transitions between turns and propulsion changes. This mechanism integrates hierarchical transformation from perception to motion, facilitating real-time adaptability.
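One way to picture how steering and gait-frequency commands become smooth motion is a phase-integrating sinusoidal oscillator with first-order smoothing of its inputs. The sketch below is only that: the single-joint model, the `GaitGenerator` name, and all parameter values are assumptions, and the paper's actual gait model is not described in this summary.

```python
import math


class GaitGenerator:
    """Hypothetical single-joint oscillator driven by (steering, frequency)."""

    def __init__(self, amplitude=0.5, max_offset=0.3, smoothing=0.1):
        self.amplitude = amplitude    # oscillation amplitude in radians
        self.max_offset = max_offset  # joint bias at full steering, in radians
        self.smoothing = smoothing    # low-pass gain in (0, 1]
        self.phase = 0.0
        self.steer = 0.0
        self.freq = 0.0

    def step(self, target_steer: float, target_freq: float, dt: float) -> float:
        """Advance the oscillator by dt seconds and return the joint angle."""
        # Low-pass the commands so a sudden change in the controller output
        # becomes a gradual change in the gait.
        self.steer += self.smoothing * (target_steer - self.steer)
        self.freq += self.smoothing * (target_freq - self.freq)
        # Integrate phase instead of computing sin(2*pi*f*t) directly, so a
        # frequency change never causes a jump in the joint angle.
        self.phase = (self.phase + 2.0 * math.pi * self.freq * dt) % (2.0 * math.pi)
        return self.amplitude * math.sin(self.phase) + self.max_offset * self.steer


if __name__ == "__main__":
    gait = GaitGenerator()
    angles = [gait.step(target_steer=1.0, target_freq=1.0, dt=0.01) for _ in range(5)]
    print(angles)
```

The steering command shifts the center of the oscillation while the frequency command changes its speed, so turning and propulsion can be adjusted independently.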

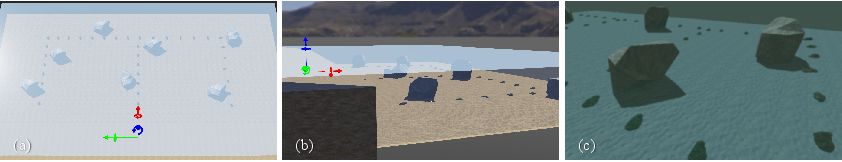

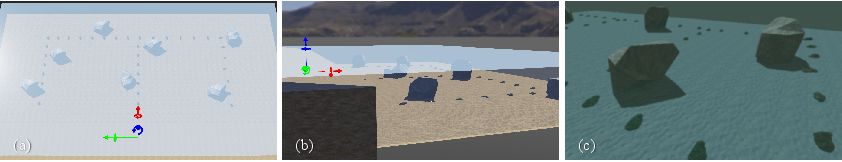

Figure 3: Visualization of the underwater coverage task simulated in the Webots platform. (a) Top-down view. (b) Side view. (c) Robot's real-time camera feed.

Semantic Communication for Cooperative Swarm

Semantic Encoding and Token Communication

Robots communicate intent using semantic tokens derived from local perceptions and task goals, enabling efficient transmission of information over acoustic channels. Because only compact tokens are exchanged rather than raw sensor data, the communication load drops while cooperative situational awareness improves.
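To give a sense of why token-level messages suit a low-bandwidth acoustic link, the sketch below packs one semantic token into four bytes. The vocabularies, the `SemanticToken` fields, and the byte layout are hypothetical; the paper's token format is not given in this summary.

```python
import struct
from dataclasses import dataclass

# Hypothetical closed vocabularies shared by every robot in the team.
REGIONS = ["front", "left", "right", "rear"]
STATES = ["unexplored", "partially_explored", "explored", "blocked"]
INTENTS = ["advance", "turn_left", "turn_right", "hold"]


@dataclass(frozen=True)
class SemanticToken:
    robot_id: int  # 0..255
    region: str    # which area the message is about
    state: str     # what the sender perceived there
    intent: str    # what the sender plans to do next


def encode(token: SemanticToken) -> bytes:
    """Pack a token into 4 bytes: one unsigned byte per field."""
    return struct.pack(
        "!BBBB",
        token.robot_id,
        REGIONS.index(token.region),
        STATES.index(token.state),
        INTENTS.index(token.intent),
    )


def decode(payload: bytes) -> SemanticToken:
    """Inverse of encode(); raises if the payload is malformed."""
    robot_id, region, state, intent = struct.unpack("!BBBB", payload)
    return SemanticToken(robot_id, REGIONS[region], STATES[state], INTENTS[intent])


if __name__ == "__main__":
    msg = SemanticToken(3, "front", "partially_explored", "turn_right")
    payload = encode(msg)
    print(len(payload), decode(payload) == msg)  # 4 True
```

A fixed vocabulary trades expressiveness for size. A free-text token would carry more nuance but would need more bytes per message.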

Collaborative Semantic Reasoning

Received tokens are decoded into a shared semantic understanding, allowing robots to integrate local awareness with collective intent. This shared cognition facilitates adaptive task coordination and minimizes redundant navigational effort when exploring objects of interest (OOI).
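The effect of combining local awareness with peers' declared intent can be sketched with a simple rule-based stand-in. In the paper this reasoning is performed by the LLM; the function below only illustrates the outcome of avoiding redundant exploration, and its name, inputs, and tie-breaking rule are assumptions.

```python
from collections import Counter
from typing import Dict, List, Optional


def choose_region(local_unexplored: List[str],
                  peer_claims: Dict[int, str]) -> Optional[str]:
    """Pick the locally unexplored region that the fewest peers are heading to.

    local_unexplored: candidate regions from this robot's own perception,
                      ordered by local preference.
    peer_claims:      robot id -> region that robot announced it will explore.
    """
    if not local_unexplored:
        return None
    claims = Counter(peer_claims.values())
    # Sort key: fewest peer claims first, then the robot's own preference order.
    return min(local_unexplored,
               key=lambda region: (claims[region], local_unexplored.index(region)))


if __name__ == "__main__":
    # Robot 2 has already announced it is heading to the front area,
    # so this robot picks the left area instead.
    print(choose_region(["front", "left"], {2: "front"}))  # left
```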

Experimental Results

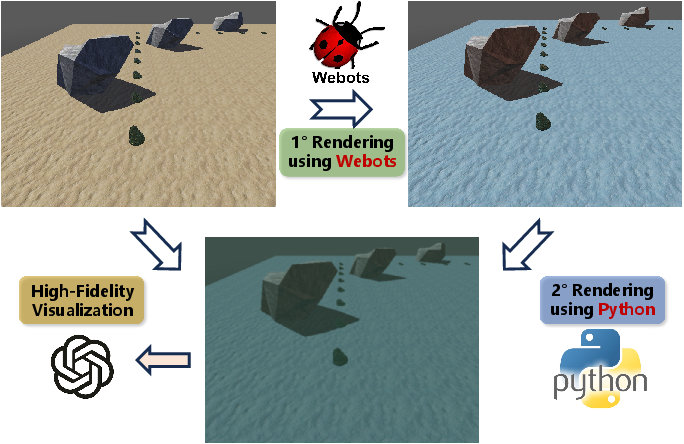

The framework was evaluated in Webots simulations across three environments: Grid World, E-Shape, and Disconnected Paths. Compared with the baseline methods BCD and BB, it performed better in terms of trajectory length, OOI coverage, and coverage efficiency.
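The three reported metrics can be made concrete with a short sketch. The definitions here are assumptions for illustration: an OOI counts as covered when any trajectory point comes within a sensing radius of it, and coverage efficiency is taken as coverage per unit of trajectory length. The paper's exact definitions are not given in this summary and may differ.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def trajectory_length(path: Sequence[Point]) -> float:
    """Sum of straight-line distances between consecutive waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def ooi_coverage(path: Sequence[Point], oois: List[Point], sensing_radius: float) -> float:
    """Fraction of OOIs that lie within sensing_radius of some waypoint (assumed definition)."""
    if not oois:
        return 0.0
    covered = sum(
        1 for ooi in oois
        if any(math.dist(p, ooi) <= sensing_radius for p in path)
    )
    return covered / len(oois)


def coverage_efficiency(path: Sequence[Point], oois: List[Point], sensing_radius: float) -> float:
    """Coverage per unit of distance travelled (assumed definition)."""
    length = trajectory_length(path)
    return ooi_coverage(path, oois, sensing_radius) / length if length > 0 else 0.0


if __name__ == "__main__":
    path = [(0.0, 0.0), (3.0, 4.0), (3.0, 8.0)]
    oois = [(3.0, 5.0), (10.0, 10.0)]
    print(trajectory_length(path))       # 9.0
    print(ooi_coverage(path, oois, 1.0)) # 0.5
```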

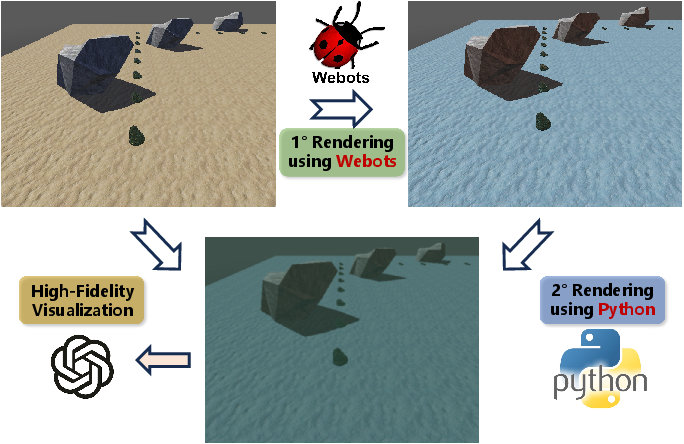

Figure 4: Top-view visualization of the three simulated underwater environments (Grid World, E-Shape, and Disconnected Paths), which are used to evaluate coverage performance under different spatial structures. (a) Grid World. (b) E-Shape. (c) Disconnected Paths.

Across these environments, the proposed framework consistently achieved high coverage ratios and efficient exploration paths, remaining robust to environmental uncertainty and scaling across team sizes.

Conclusion

The semantics-guided fuzzy control framework offers a significant advancement in underwater robotics by integrating LLM-driven semantic abstraction with fuzzy logic to achieve interpretable, adaptable, and efficient multi-robot coverage. Future work will focus on real-world deployment, optimizing semantic communication under acoustic constraints, and further enhancing coordination strategies to fully exploit the framework’s capabilities in diverse underwater challenges.