- The paper introduces OneTrans, merging sequence modeling and feature interaction into a unified Transformer for enhanced recommender performance.

- It employs a unified tokenizer and mixed parameterization to efficiently process both sequential and static features, reducing computational overhead.

- Experimental results reveal significant improvements in offline (AUC/UAUC) and online metrics (order/u, GMV/u) compared to conventional methods.

Introduction

The paper "OneTrans: Unified Feature Interaction and Sequence Modeling with One Transformer in Industrial Recommender" proposes a unified Transformer architecture for recommender systems. Traditional recommender systems use separate modules for sequence modeling and feature interaction, which limits bidirectional information exchange between the two and prevents unified optimization. The paper proposes a Transformer backbone called OneTrans that consolidates both tasks, and reports improved performance and efficiency as a result.

Motivation and Background

Industrial recommender systems typically follow a multi-stage pipeline consisting of recall and ranking stages. Conventional ranking models handle sequence modeling and feature interaction in separate modules. The paper identifies key limitations of this design, including restricted information flow between the modules and added latency from their separate execution paths. Inspired by the scaling behavior of LLMs described by Kaplan et al. [kaplan2020scaling], the paper explores whether similar scaling principles apply to recommender systems.

Methodology

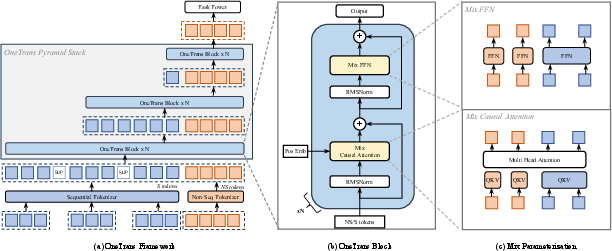

OneTrans integrates sequence modeling and feature interaction into a single Transformer. A unified tokenizer maps both sequential attributes (user behaviors) and non-sequential attributes (static features such as the user profile) into one token sequence that the Transformer can process.
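The idea of a unified tokenizer can be illustrated with a minimal sketch. Everything here is a hypothetical stand-in rather than the paper's implementation: the `embed` function replaces a learned embedding table with a seeded random vector, `D_MODEL` is kept tiny for readability, and placing static tokens after the behavior tokens is an assumption.

```python
import random

D_MODEL = 4  # hypothetical embedding width, tiny for readability


def embed(key: str, dim: int = D_MODEL) -> list[float]:
    """Deterministic stand-in for a learned embedding lookup."""
    rng = random.Random(key)  # seeding by key maps the same id to the same vector
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def tokenize(behaviors: list[str], static_features: dict[str, str]) -> list[dict]:
    """Map sequential and non-sequential features into one token list."""
    tokens = []
    # Sequential tokens: one per user behavior, in chronological order.
    for pos, item_id in enumerate(behaviors):
        tokens.append(
            {"kind": "seq", "name": f"behavior[{pos}]", "vec": embed(f"item:{item_id}")}
        )
    # Non-sequential tokens: one per static feature (assumed to follow the sequence).
    for name, value in sorted(static_features.items()):
        tokens.append({"kind": "static", "name": name, "vec": embed(f"{name}:{value}")})
    return tokens


tokens = tokenize(
    ["item_17", "item_4", "item_17"],
    {"age_bucket": "25-34", "country": "US"},
)
for token in tokens:
    print(token["kind"], token["name"])
```

Because both kinds of features end up in the same list of equally sized vectors, a single Transformer stack can attend across all of them without a separate interaction module.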

Architecture

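The core architectural components of OneTrans named in this summary are the unified tokenizer, which builds the token sequence, and mixed parameterization, which lets one Transformer stack process both sequential and static features.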
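As a rough illustration of mixed parameterization, the sketch below assumes the term means that all sequential tokens share one projection matrix while each static token slot has its own. This summary does not spell out the scheme, so that reading, the `MixedLinear` class, and the random weights are all assumptions made for illustration.

```python
import random


def make_matrix(rows: int, cols: int, seed: int) -> list[list[float]]:
    """Random stand-in for a learned weight matrix."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a matrix by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


class MixedLinear:
    """One shared matrix for sequential tokens, one matrix per static token slot."""

    def __init__(self, dim: int, num_static: int, seed: int = 0):
        self.shared = make_matrix(dim, dim, seed)
        self.per_static = [make_matrix(dim, dim, seed + 1 + i) for i in range(num_static)]

    def __call__(self, seq_tokens, static_tokens):
        if len(static_tokens) != len(self.per_static):
            raise ValueError("expected one static token per token-specific matrix")
        # Every sequential token goes through the same shared weights.
        out_seq = [matvec(self.shared, x) for x in seq_tokens]
        # Each static token goes through its own weights.
        out_static = [matvec(w, x) for w, x in zip(self.per_static, static_tokens)]
        return out_seq + out_static


layer = MixedLinear(dim=3, num_static=2)
x = [1.0, -2.0, 0.5]
outputs = layer(seq_tokens=[x, x], static_tokens=[x, x])
print(outputs[0] == outputs[1])  # identical input, shared weights
print(outputs[2] == outputs[3])  # identical input, token-specific weights
```

Feeding the same vector into every position makes the difference visible: the two sequential outputs coincide, while the two static outputs differ because their weights differ.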

Experimental Evaluation

The paper conducts comprehensive experiments using industrial-scale data to evaluate OneTrans against traditional baselines, showing substantial improvements in both offline metrics like AUC and UAUC, and online business metrics such as order/u and GMV/u.
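To make the offline metrics concrete, here is a small sketch of AUC and a user-level AUC. It assumes UAUC is the impression-weighted mean of per-user AUC, skipping users whose impressions contain only one class; the exact weighting and filtering used in the paper are not stated in this summary, and the data below is invented for illustration.

```python
from collections import defaultdict


def auc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None  # undefined without both classes
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def uauc(user_ids, labels, scores):
    """Impression-weighted mean of per-user AUC (weighting is an assumption)."""
    by_user = defaultdict(lambda: ([], []))
    for user, y, s in zip(user_ids, labels, scores):
        by_user[user][0].append(y)
        by_user[user][1].append(s)
    total, weight = 0.0, 0
    for user_labels, user_scores in by_user.values():
        user_auc = auc(user_labels, user_scores)
        if user_auc is None:
            continue  # skip users with only positives or only negatives
        total += user_auc * len(user_labels)
        weight += len(user_labels)
    return total / weight if weight else None


# Invented toy data: three users, seven impressions.
user_ids = ["u1", "u1", "u1", "u2", "u2", "u3", "u3"]
labels = [1, 0, 0, 1, 0, 1, 1]
scores = [0.9, 0.2, 0.4, 0.3, 0.6, 0.5, 0.7]

print("AUC :", auc(labels, scores))
print("UAUC:", uauc(user_ids, labels, scores))
```

The two numbers can diverge: global AUC rewards ranking across users, while UAUC only measures how well items are ordered within each user's own impressions, which is closer to what a ranking stage actually controls.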

Key Findings

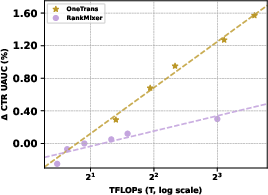

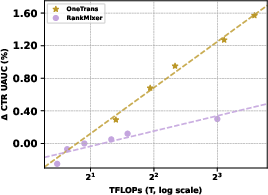

- Performance Gains: OneTrans_L, the larger configuration of the model, outperformed conventional models such as RankMixer+Transformer on AUC and UAUC, consistent with better modeling of complex interactions and sequences.

- Efficiency: LLM optimizations such as FlashAttention and mixed-precision training significantly reduced memory usage and runtime, enabling practical deployment in high-throughput environments.
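The memory saving behind FlashAttention comes from processing keys and values in blocks while maintaining a running softmax, so the full score matrix is never stored. The sketch below shows that idea for a single query in plain Python. It is not the fused GPU kernel and not OneTrans code; the function names and toy inputs are hypothetical.

```python
import math


def scaled_score(q, k):
    """Dot product scaled by 1/sqrt(d), as in standard attention."""
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))


def attention_full(q, keys, values):
    """Reference: compute all scores, then softmax, then the weighted sum."""
    scores = [scaled_score(q, k) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    norm = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / norm for j in range(dim)]


def attention_blockwise(q, keys, values, block_size=2):
    """Same result, but only one block of scores exists at any time."""
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        block_scores = [scaled_score(q, k) for k in keys[start:start + block_size]]
        new_max = max(running_max, max(block_scores))
        # Rescale what was accumulated under the old maximum (factor is 0 on the first block).
        rescale = math.exp(running_max - new_max)
        running_sum *= rescale
        acc = [a * rescale for a in acc]
        for s, v in zip(block_scores, values[start:start + block_size]):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


q = [0.2, -0.1]
keys = [[0.1, 0.3], [0.5, -0.2], [-0.4, 0.9], [1.2, 0.0], [0.3, 0.3]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, -1.0], [-1.0, 1.0]]
full = attention_full(q, keys, values)
blockwise = attention_blockwise(q, keys, values, block_size=2)
print(full)
print(blockwise)
```

Both functions compute the same attention output up to floating-point rounding; the blockwise version trades a few extra rescaling multiplications for a working set that no longer grows with sequence length.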

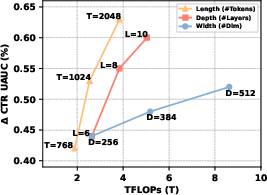

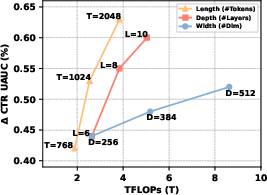

Figure 2: Trade-off: FLOPs vs. ΔUAUC
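For readers who want a feel for the FLOPs axis, the following sketch applies a common back-of-the-envelope estimate for a standard Transformer layer's forward pass. It assumes 2 FLOPs per multiply-add, a feed-forward expansion factor of 4, and ignores softmax, normalization, and biases. This is not the paper's accounting, and the example sizes are hypothetical.

```python
def transformer_layer_flops(seq_len: int, d_model: int, ffn_mult: int = 4) -> int:
    """Approximate forward FLOPs for one standard Transformer layer."""
    # Four d_model x d_model projections: Q, K, V, and output.
    proj = 4 * 2 * seq_len * d_model * d_model
    # Two seq_len x seq_len products: Q K^T and the attention-weighted sum of V.
    attn = 2 * 2 * seq_len * seq_len * d_model
    # Two feed-forward matmuls: d_model -> ffn_mult * d_model -> d_model.
    ffn = 2 * 2 * seq_len * d_model * (ffn_mult * d_model)
    return proj + attn + ffn


def model_flops(num_layers: int, seq_len: int, d_model: int, ffn_mult: int = 4) -> int:
    """Total forward FLOPs for a stack of identical layers."""
    return num_layers * transformer_layer_flops(seq_len, d_model, ffn_mult)


# Hypothetical sizes, chosen only to show how cost grows with sequence length.
for seq_len in (256, 512, 1024):
    gflops = model_flops(num_layers=6, seq_len=seq_len, d_model=256) / 1e9
    print(f"seq_len={seq_len:5d}  ~{gflops:.2f} GFLOPs per forward pass")
```

The projection and feed-forward terms grow linearly with sequence length while the attention term grows quadratically, which is why long behavior sequences dominate cost as they lengthen.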

Implications

The integration of sequence modeling and feature interaction within a single Transformer architecture not only simplifies the modeling pipeline but also enhances performance and scalability. The findings indicate a strong potential for adopting similar architectures in other domains where large-scale data and complex feature interactions are prevalent.

Conclusion

OneTrans presents a robust and scalable solution for industrial recommender systems by unifying sequence modeling and feature interaction into a single Transformer framework. This design not only achieves improved prediction accuracy but also aligns with contemporary computational optimizations, making it suitable for real-world deployment. Future work will likely explore adaptive mechanisms and further scalability improvements to augment OneTrans' capabilities across diverse application scenarios.