- The paper presents an innovative unified generative framework that replaces multi-stage architectures with a single end-to-end model for e-commerce search.

- It details a novel methodology combining keyword-enhanced hierarchical quantization encoding and multi-view behavior sequence injection to effectively capture user intent.

- Empirical results demonstrate significant improvements in recall, CTR, and resource efficiency, underscoring the framework's industrial viability and potential to streamline search systems.

OneSearch: A Unified End-to-End Generative Framework for E-commerce Search

Introduction and Motivation

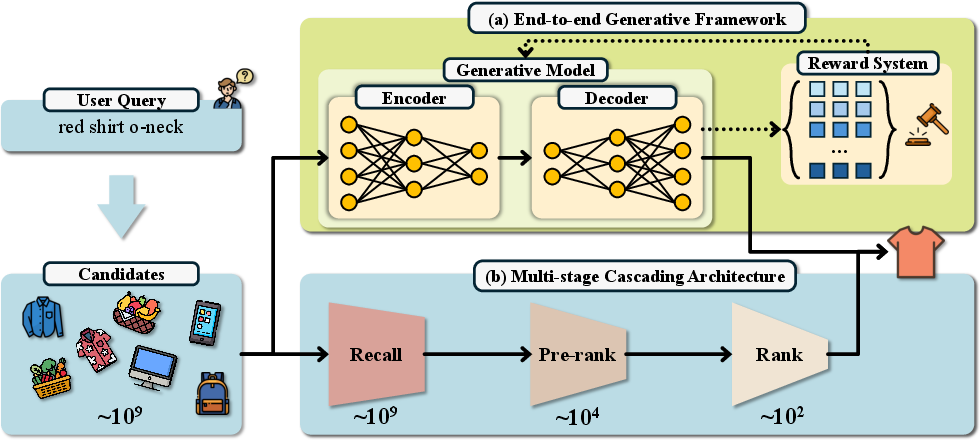

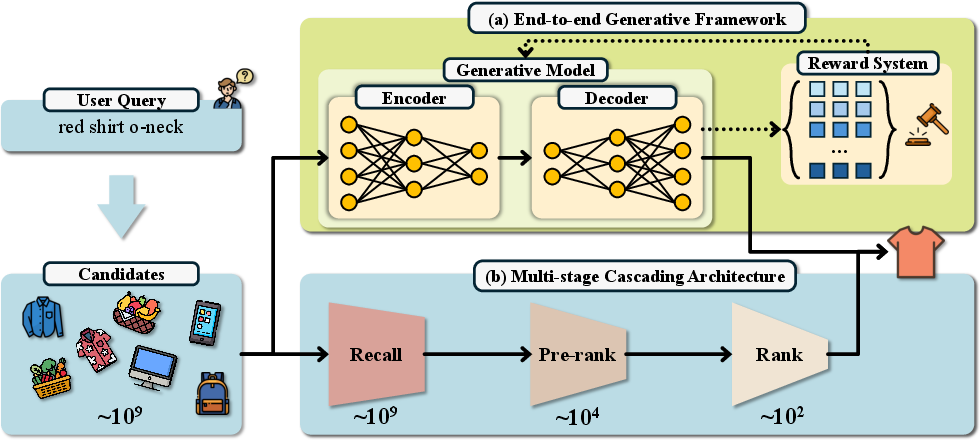

The paper introduces OneSearch, an industrial-scale, end-to-end generative retrieval framework for e-commerce search, designed to address the inherent limitations of traditional multi-stage cascading architectures (MCA). In conventional e-commerce search systems, the MCA paradigm segments retrieval into recall, pre-ranking, and ranking stages, each optimized for different objectives and computational constraints. This fragmentation leads to suboptimal global performance due to objective collisions, inefficient resource utilization, and limited ability to model user intent holistically.

OneSearch proposes a unified generative approach that directly maps user queries and behavioral context to item candidates, eliminating the need for multi-stage filtering and enabling joint optimization of relevance and personalization. The framework is deployed at scale on the Kuaishou platform, serving millions of users and demonstrating significant improvements in both offline and online metrics.

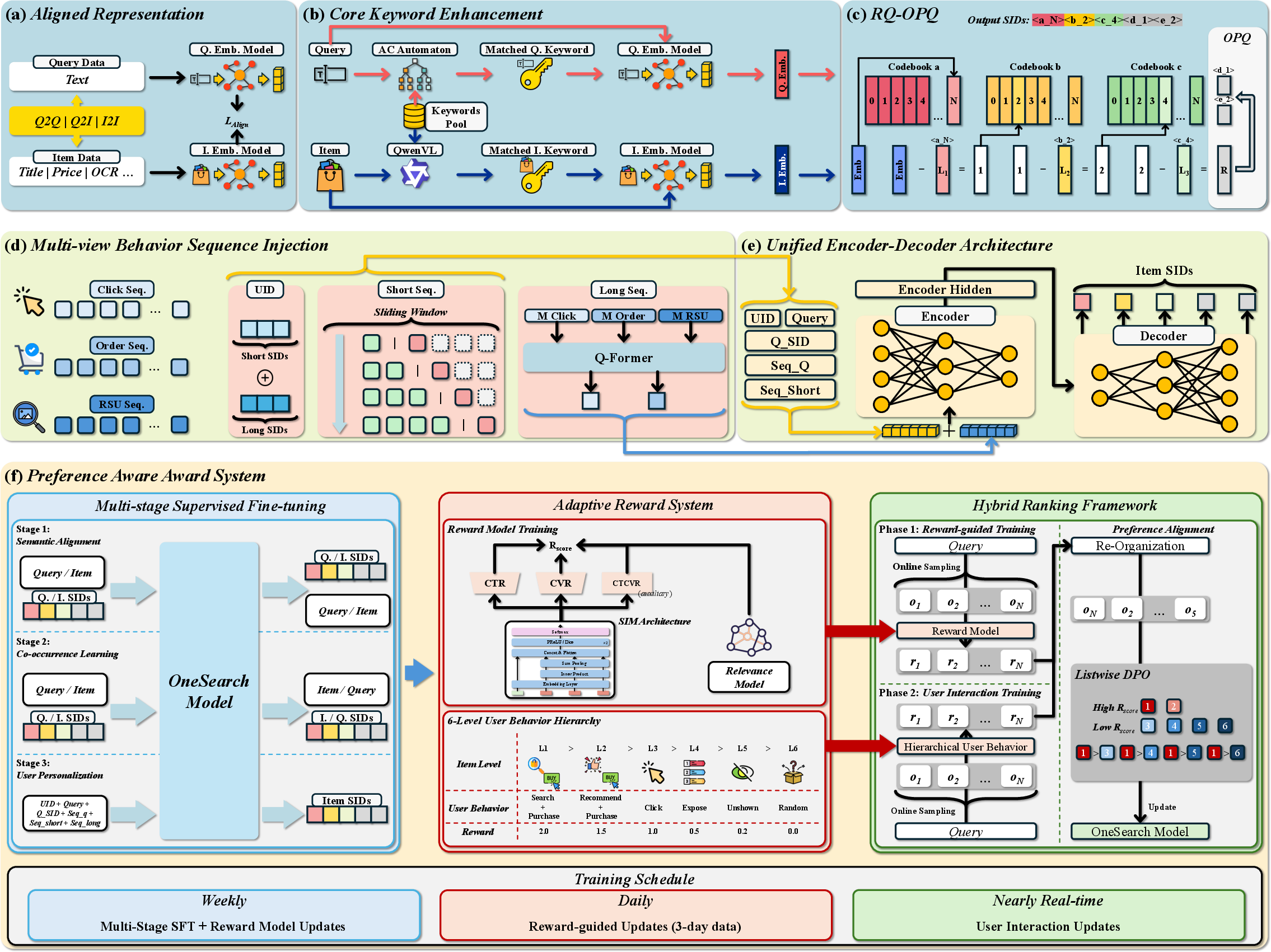

Figure 1: (a) The proposed End-to-End generative retrieval framework (OneSearch), (b) the traditional multi-stage cascading architecture in e-commerce search.

System Architecture and Key Innovations

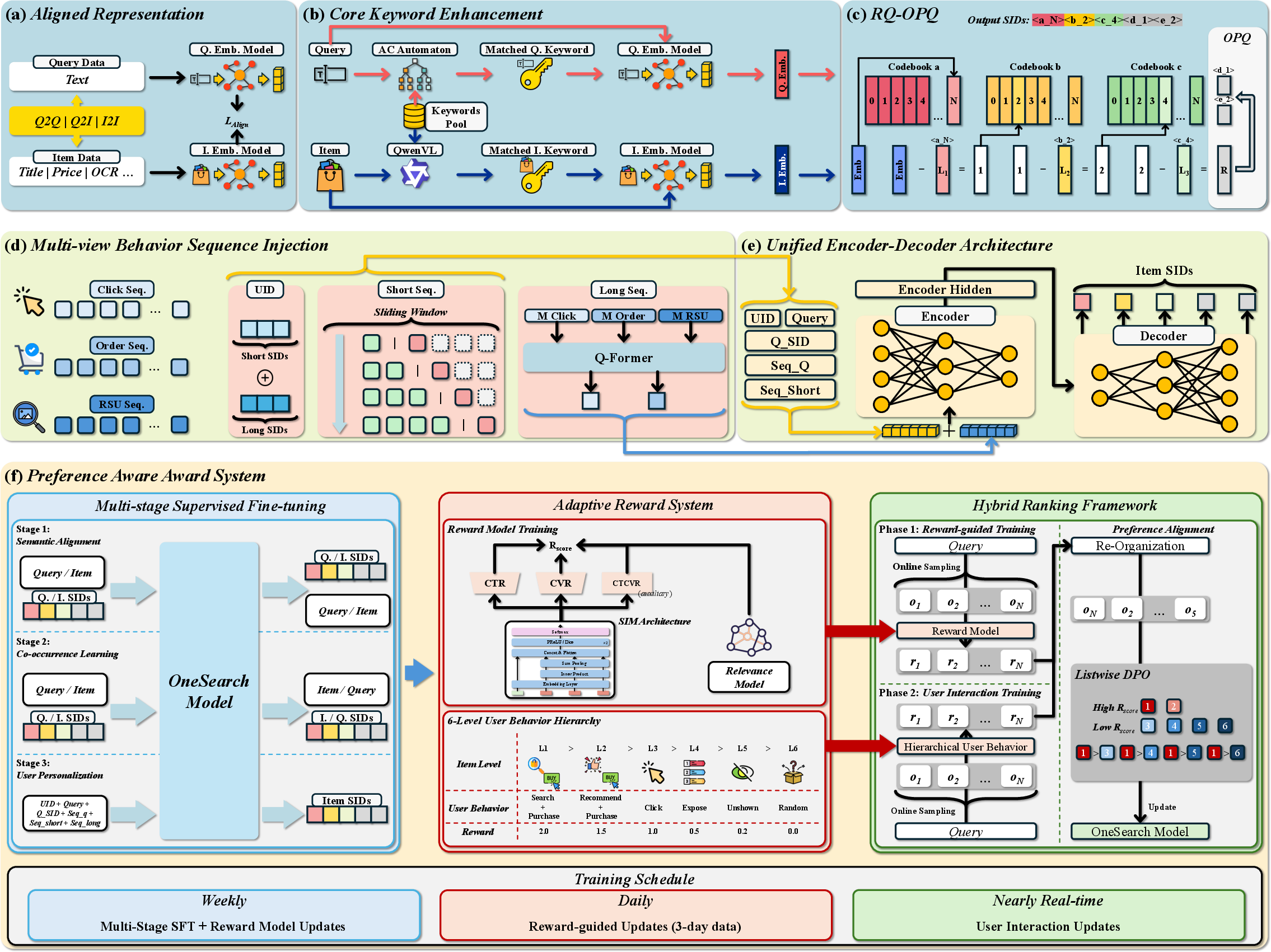

OneSearch is architected around four principal components:

- Keyword-Enhanced Hierarchical Quantization Encoding (KHQE): This module encodes items and queries into semantic IDs (SIDs) using a hierarchical quantization schema, augmented with core keyword extraction to preserve essential attributes and suppress irrelevant noise. The encoding pipeline combines RQ-Kmeans for hierarchical clustering with OPQ for fine-grained residual quantization, yielding high codebook utilization and a high independent coding rate (ICR).

- Multi-view Behavior Sequence Injection: User modeling is achieved by integrating explicit short-term and implicit long-term behavioral sequences. User IDs are constructed from weighted aggregations of recent and historical clicked items, and both short and long behavior sequences are injected into the model via prompt engineering and embedding aggregation, respectively. This multi-view approach enables comprehensive personalization.

- Unified Encoder-Decoder Generative Model: The system employs a transformer-based encoder-decoder architecture (e.g., BART or mT5; the decoder-only Qwen3 is also cited as a backbone option) to jointly model user, query, and behavioral context, generating item SIDs as output. The model is trained with a combination of supervised fine-tuning and preference-aware reinforcement learning.

- Preference-Aware Reward System (PARS): A multi-stage supervised fine-tuning process aligns semantic and collaborative representations, followed by an adaptive reward system that leverages hierarchical user behavior signals and list-wise preference optimization. The reward model is trained on real user interactions, incorporating CTR, CVR, and relevance signals.

Figure 2: The OneSearch framework: (1) KHQE for semantic encoding, (2) multi-view behavior sequence injection, (3) unified encoder-decoder generative retrieval, (4) preference-aware reward system.

Hierarchical Quantization Encoding and Tokenization

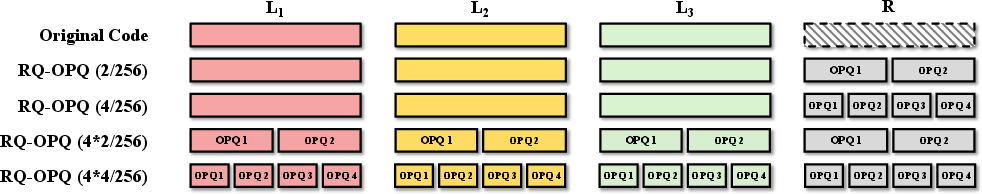

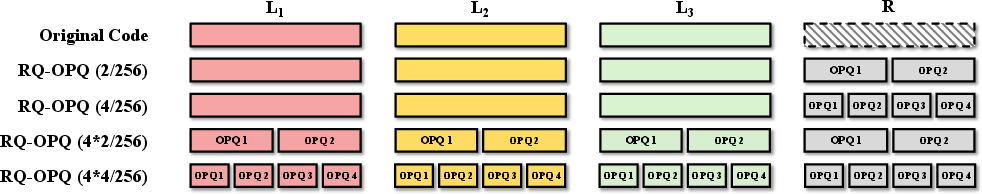

The KHQE module addresses the challenge of representing items with long, noisy, and weakly ordered textual descriptions. By extracting core keywords using NER and domain-specific heuristics, the encoding process emphasizes essential attributes (e.g., brand, category) and suppresses irrelevant tokens. The hierarchical quantization pipeline operates as follows:

- RQ-Kmeans: Hierarchically clusters item embeddings by running k-means on the residuals left by each previous level, maximizing codebook utilization and the independent coding rate.

- OPQ: Quantizes residual embeddings to capture fine-grained, item-specific features.

- Core Keyword Enhancement: Core keywords are embedded and averaged with item representations, further improving the discriminative power of SIDs.
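A minimal pure-Python sketch of the KHQE pipeline follows, under loudly stated assumptions: the keyword-averaging rule, the toy random embeddings, and all function names are hypothetical, and plain product quantization (PQ) stands in for OPQ, which additionally learns a rotation of the residual space before splitting it into sub-vectors. Each RQ-Kmeans level runs k-means on what the previous levels left unexplained, so the coarse SID tokens capture shared semantics while the PQ tokens encode item-specific leftovers.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(vectors):
    """Element-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns (centroids, assignment per point)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid for every point.
        assign = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        # Update step: move each non-empty cluster's centroid to its members' mean.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:
                centroids[c] = mean(members)
    return centroids, assign


def keyword_enhance(item_emb, keyword_embs):
    """Hypothetical rule: average the item embedding with its mean core-keyword embedding."""
    if not keyword_embs:
        return list(item_emb)
    return [(a + b) / 2 for a, b in zip(item_emb, mean(keyword_embs))]


def rq_kmeans(embs, levels, k):
    """Residual k-means: each level clusters the residuals of the previous levels."""
    residuals = [list(e) for e in embs]
    codes = [[] for _ in embs]
    codebooks = []
    for level in range(levels):
        centroids, assign = kmeans(residuals, k, seed=level)
        codebooks.append(centroids)
        for i, c in enumerate(assign):
            codes[i].append(c)
            residuals[i] = [r - m for r, m in zip(residuals[i], centroids[c])]
    return codes, codebooks, residuals


def pq_encode(residuals, n_sub, k):
    """Product quantization of the final residuals (OPQ would first learn a rotation)."""
    step = len(residuals[0]) // n_sub
    codes = [[] for _ in residuals]
    for s in range(n_sub):
        chunks = [r[s * step:(s + 1) * step] for r in residuals]
        _, assign = kmeans(chunks, k, seed=100 + s)
        for i, c in enumerate(assign):
            codes[i].append(c)
    return codes


# Toy usage: 40 random 8-d "items", each with one random core-keyword embedding.
rng = random.Random(42)
items = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(40)]
keywords = [[[rng.gauss(0, 1) for _ in range(8)]] for _ in range(40)]
enhanced = [keyword_enhance(e, kw) for e, kw in zip(items, keywords)]
coarse, codebooks, residuals = rq_kmeans(enhanced, levels=2, k=4)
fine = pq_encode(residuals, n_sub=2, k=4)
sids = [tuple(c + f) for c, f in zip(coarse, fine)]  # 2 RQ tokens + 2 PQ tokens per item
```

Because every level subtracts its chosen centroid, an item's embedding equals the sum of its selected centroids plus the final residual, which is exactly the part the PQ stage refines.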

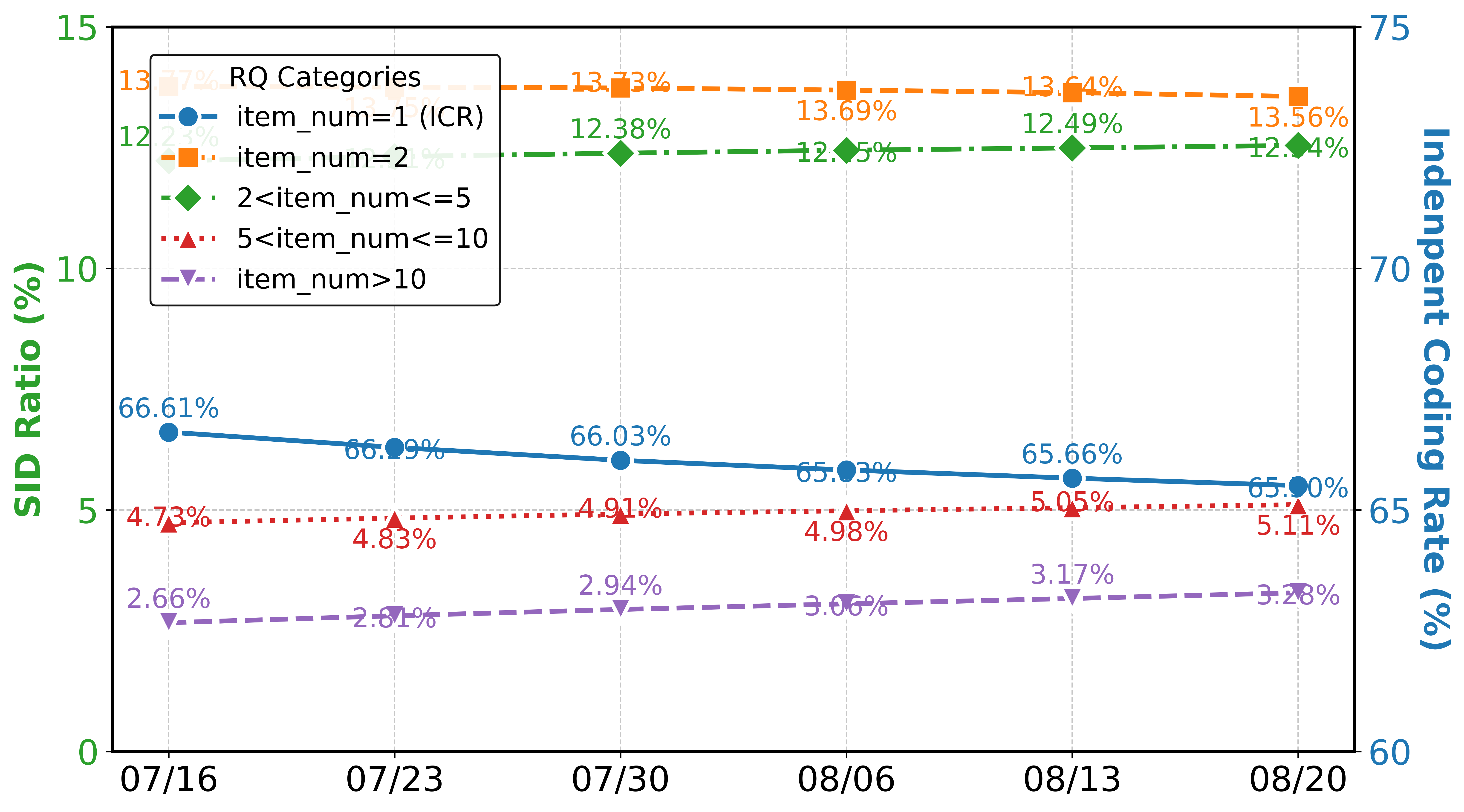

Empirical results show that this approach yields higher recall and ranking performance than standard RQ-VAE or balanced k-means tokenization, together with markedly higher codebook utilization and independent coding rate.

Figure 3: Different hierarchical quantization encodings of items, illustrating the impact of KHQE and OPQ on SID assignment.
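The two tokenizer health indicators can be computed directly from assigned SIDs. The sketch below assumes that codebook utilization is the fraction of one level's codebook entries used by at least one item, and that the independent coding rate is the share of items whose full SID collides with no other item; the paper's exact definitions (and its "SID ratio" indicator) may differ.

```python
from collections import Counter


def codebook_utilization(sids, level, codebook_size):
    """Fraction of the codebook entries at `level` used by at least one item."""
    return len({sid[level] for sid in sids}) / codebook_size


def independent_coding_rate(sids):
    """Assumed definition: share of items whose complete SID is unique."""
    counts = Counter(sids)
    return sum(1 for sid in sids if counts[sid] == 1) / len(sids)


sids = [(0, 1), (0, 2), (1, 1), (1, 1)]
print(codebook_utilization(sids, level=0, codebook_size=4))  # 0.5
print(independent_coding_rate(sids))  # 0.5: the two (1, 1) items collide
```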

Multi-view User Behavior Modeling

OneSearch's user modeling strategy integrates three perspectives:

- Behavior Sequence-Constructed User IDs: User IDs are computed as weighted sums of SIDs from recent and long-term clicked items, providing a semantically meaningful and behaviorally grounded identifier.

- Explicit Short Behavior Sequences: Recent queries and clicked items are explicitly included in the model prompt, enabling the model to capture short-term intent shifts.

- Implicit Long Behavior Sequences: Long-term behavioral patterns are aggregated via centroid embeddings at multiple quantization levels, efficiently encoding user profiles without excessive prompt length.

Ablation studies confirm that sequence-constructed user IDs and explicit/implicit behavior sequence injection yield substantial gains in both recall and ranking metrics, outperforming random or hashed user ID baselines.
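The three user views can be sketched as follows. Everything here is an assumption for illustration: the 0.6/0.4 short/long blend, the prompt template, the toy codebooks, and all helper names are hypothetical. The user vector is built from item embeddings and then residual-quantized against fixed codebooks, which is one way to realize a weighted aggregation of clicked-item SIDs.

```python
def mean(vectors):
    """Element-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def nearest(vec, codebook):
    """Index of the codebook entry closest to `vec` (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda c: sum((a - b) ** 2 for a, b in zip(vec, codebook[c])))


def quantize(vec, codebooks):
    """Residual-quantize one vector against fixed per-level codebooks."""
    codes, residual = [], list(vec)
    for book in codebooks:
        c = nearest(residual, book)
        codes.append(c)
        residual = [r - m for r, m in zip(residual, book[c])]
    return tuple(codes)


def user_sid(short_clicks, long_clicks, codebooks, w_short=0.6):
    """View 1: user ID from a weighted blend of short- and long-term click embeddings."""
    blended = [w_short * s_val + (1 - w_short) * l_val
               for s_val, l_val in zip(mean(short_clicks), mean(long_clicks))]
    return quantize(blended, codebooks)


def build_prompt(query, user_code, recent_queries, recent_click_sids):
    """View 2: explicit short sequence written into the text prompt (hypothetical format)."""
    def fmt(sid):
        return "<" + "_".join(map(str, sid)) + ">"
    return (f"user: {fmt(user_code)} | query: {query} | "
            f"recent queries: {'; '.join(recent_queries)} | "
            f"recent clicks: {' '.join(fmt(s) for s in recent_click_sids)}")


def long_sequence_features(long_clicks, codebooks):
    """View 3: per quantization level, average the centroids the long-term clicks fall into."""
    features, residuals = [], [list(v) for v in long_clicks]
    for book in codebooks:
        chosen = []
        for i, r in enumerate(residuals):
            c = nearest(r, book)
            chosen.append(book[c])
            residuals[i] = [x - m for x, m in zip(r, book[c])]
        features.append(mean(chosen))  # one compact embedding per level
    return features


codebooks = [[[0, 0], [10, 10]], [[1, 0], [0, 1]]]  # toy 2-level, 2-entry codebooks
uid = user_sid([[10, 11]], [[10, 11]], codebooks)
print(build_prompt("red shoes", uid, ["shoes"], [(1, 1)]))
print(long_sequence_features([[10, 11], [0, 1]], codebooks))  # [[5.0, 5.0], [0.0, 1.0]]
```

The design point is that the long sequence costs one embedding per quantization level regardless of its length, whereas the short sequence is spelled out token by token in the prompt.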

Unified Generative Retrieval and Training Paradigm

The encoder-decoder model ingests the full user context and outputs item SIDs via constrained or unconstrained beam search. Training proceeds in three supervised fine-tuning stages:

- Semantic Content Alignment: Aligns SIDs with textual descriptions and category information.

- Co-occurrence Synchronization: Models collaborative relationships between queries and items.

- User Personalization Modeling: Incorporates user IDs and behavior sequences for personalized generation.
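Constrained beam search, mentioned above as one decoding option, restricts generation to SIDs that correspond to real items. A common way to do this, sketched below as an assumption rather than the paper's code, is to store all valid SIDs in a prefix trie and expand each beam only along the trie's children. The `toy_step_logprobs` callback is a hypothetical stand-in for the decoder's next-token distribution.

```python
import math


def build_trie(valid_sids):
    """Nested-dict prefix trie over all SIDs that map to real items."""
    trie = {}
    for sid in valid_sids:
        node = trie
        for token in sid:
            node = node.setdefault(token, {})
    return trie


def constrained_beam_search(step_logprobs, trie, beam_size, length):
    """Beam search that only follows continuations present in the trie."""
    beams = [((), 0.0, trie)]  # (prefix, cumulative log-prob, trie node)
    for _ in range(length):
        candidates = []
        for prefix, score, node in beams:
            logprobs = step_logprobs(prefix)
            for token, child in node.items():  # tokens absent from the trie are never expanded
                new_score = score + logprobs.get(token, -math.inf)
                candidates.append((prefix + (token,), new_score, child))
        candidates.sort(key=lambda cand: cand[1], reverse=True)
        beams = candidates[:beam_size]
    return [(prefix, score) for prefix, score, _ in beams]


def toy_step_logprobs(_prefix):
    """Toy decoder that always prefers token 2, whatever the prefix."""
    return {0: math.log(0.1), 1: math.log(0.2), 2: math.log(0.6), 3: math.log(0.1)}


valid = [(0, 1), (2, 3), (2, 0), (3, 3)]
results = constrained_beam_search(toy_step_logprobs, build_trie(valid), beam_size=2, length=2)
print(results)  # every returned SID is in `valid`; unconstrained search would emit (2, 2)
```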

A sliding window data augmentation strategy is applied to short behavior sequences, enhancing the model's ability to generalize to users with limited history.
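One simple reading of sliding-window augmentation, assumed here for illustration because the window size and stride are not specified in this summary, turns a single chronological click sequence into several (history, next item) training pairs, so the model also sees short histories.

```python
def sliding_window_samples(clicks, window, min_history=1):
    """Split one chronological click sequence into (history, target) pairs.

    Each target is paired with at most `window` preceding clicks, so the model
    also trains on short histories like those of low-activity users.
    """
    samples = []
    for t in range(min_history, len(clicks)):
        history = clicks[max(0, t - window):t]
        samples.append((history, clicks[t]))
    return samples


print(sliding_window_samples(["a", "b", "c", "d"], window=2))
# [(['a'], 'b'), (['a', 'b'], 'c'), (['b', 'c'], 'd')]
```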

Preference-Aware Reward System and Hybrid Ranking

The reward system is designed to optimize both relevance and conversion objectives:

- Reward Model: User interactions are categorized into six levels, with adaptive weights derived from calibrated CTR and CVR metrics. The reward model is a three-tower architecture predicting CTR, CVR, and CTCVR, with an additional relevance score.

- Hybrid Ranking Framework: List-wise DPO training is used to align the generative model's output with the reward model's ranking, followed by further fine-tuning on pure user interaction data to overcome the limitations of reward model distillation.

This hybrid approach enables OneSearch to achieve a Pareto-optimal balance between relevance and personalization, surpassing the performance ceiling of traditional MCA systems.
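The list-wise preference step can be illustrated with the Plackett-Luce generalization of DPO, which reduces to the standard pairwise DPO loss when there are only two candidates. This is a sketch under assumptions: the paper's exact list-wise objective, its six behavior levels, and its calibrated weights are not reproduced, and the `reward` blend (with CTCVR approximated as CTR times CVR) and its weights are hypothetical. The subsequent fine-tuning on pure user interaction data is not shown.

```python
import math


def reward(p_ctr, p_cvr, relevance, w_click=1.0, w_buy=2.0, w_rel=0.5):
    """Hypothetical reward blending predicted CTR, CTCVR (= CTR * CVR) and relevance."""
    return w_click * p_ctr + w_buy * p_ctr * p_cvr + w_rel * relevance


def listwise_dpo_loss(policy_logps, ref_logps, rewards, beta=0.1):
    """Plackett-Luce DPO loss: -log P(reward-induced ranking) under implicit rewards."""
    order = sorted(range(len(rewards)), key=lambda i: rewards[i], reverse=True)
    # Implicit reward of each candidate: beta * log(pi / pi_ref), listed in reward order.
    margins = [beta * (policy_logps[i] - ref_logps[i]) for i in order]
    loss = 0.0
    for k in range(len(margins)):
        tail = margins[k:]
        top = max(tail)  # log-sum-exp with max subtraction for numerical stability
        log_denominator = top + math.log(sum(math.exp(x - top) for x in tail))
        loss -= margins[k] - log_denominator
    return loss


rewards = [reward(0.30, 0.10, 0.9), reward(0.10, 0.05, 0.4), reward(0.20, 0.20, 0.7)]
policy = [-1.0, -2.0, -1.5]     # candidate log-probs under the model being trained
reference = [-1.5, -1.5, -1.5]  # log-probs under the frozen reference model
print(listwise_dpo_loss(policy, reference, rewards, beta=1.0))
```

The loss shrinks as the policy raises the relative likelihood of candidates the reward model ranks higher, which is how the reward model's ranking is distilled into the generator.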

Experimental Results

Offline Evaluation

OneSearch is evaluated on a large-scale industrial dataset from Kuaishou's mall search platform. Key findings include:

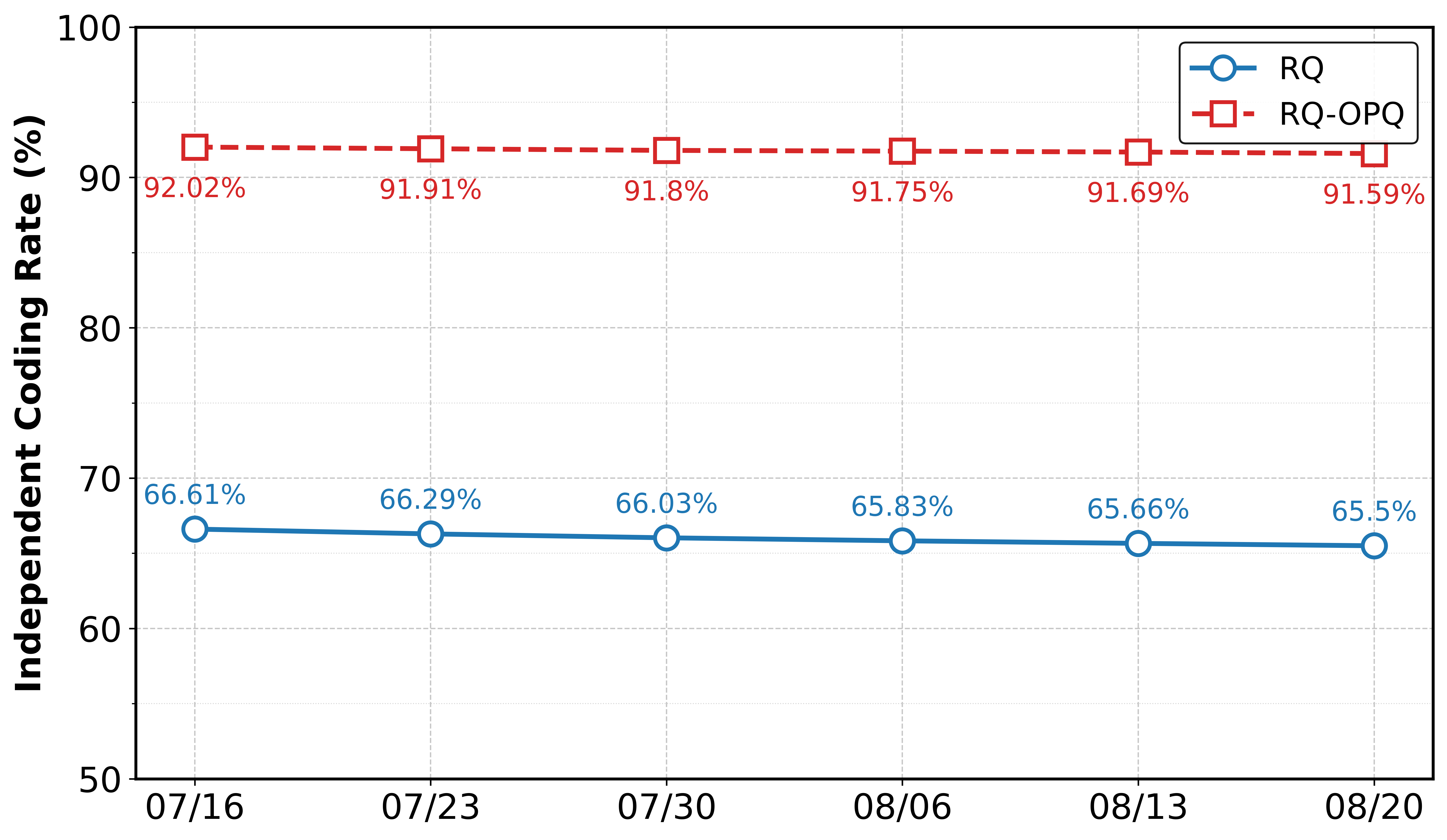

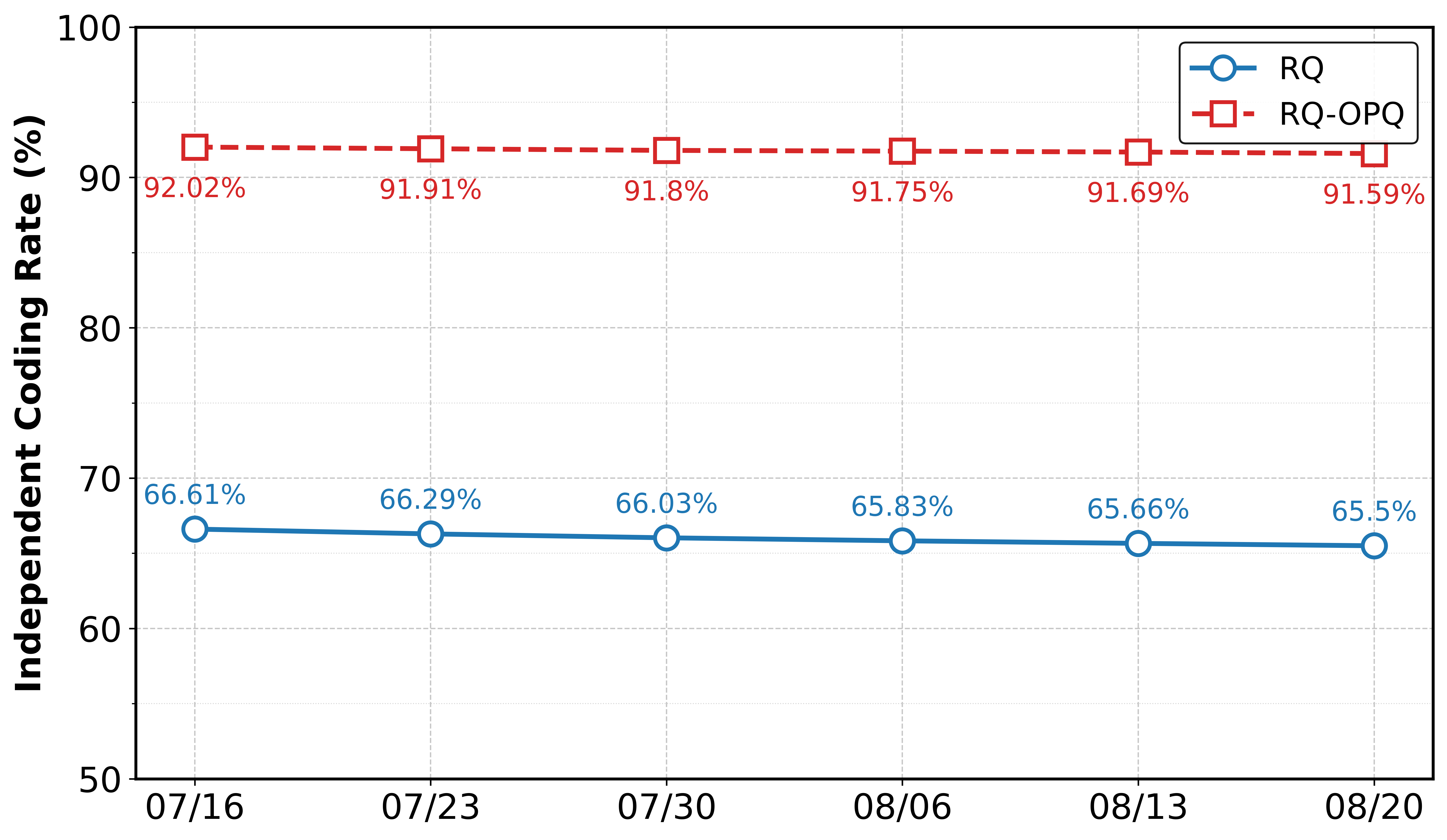

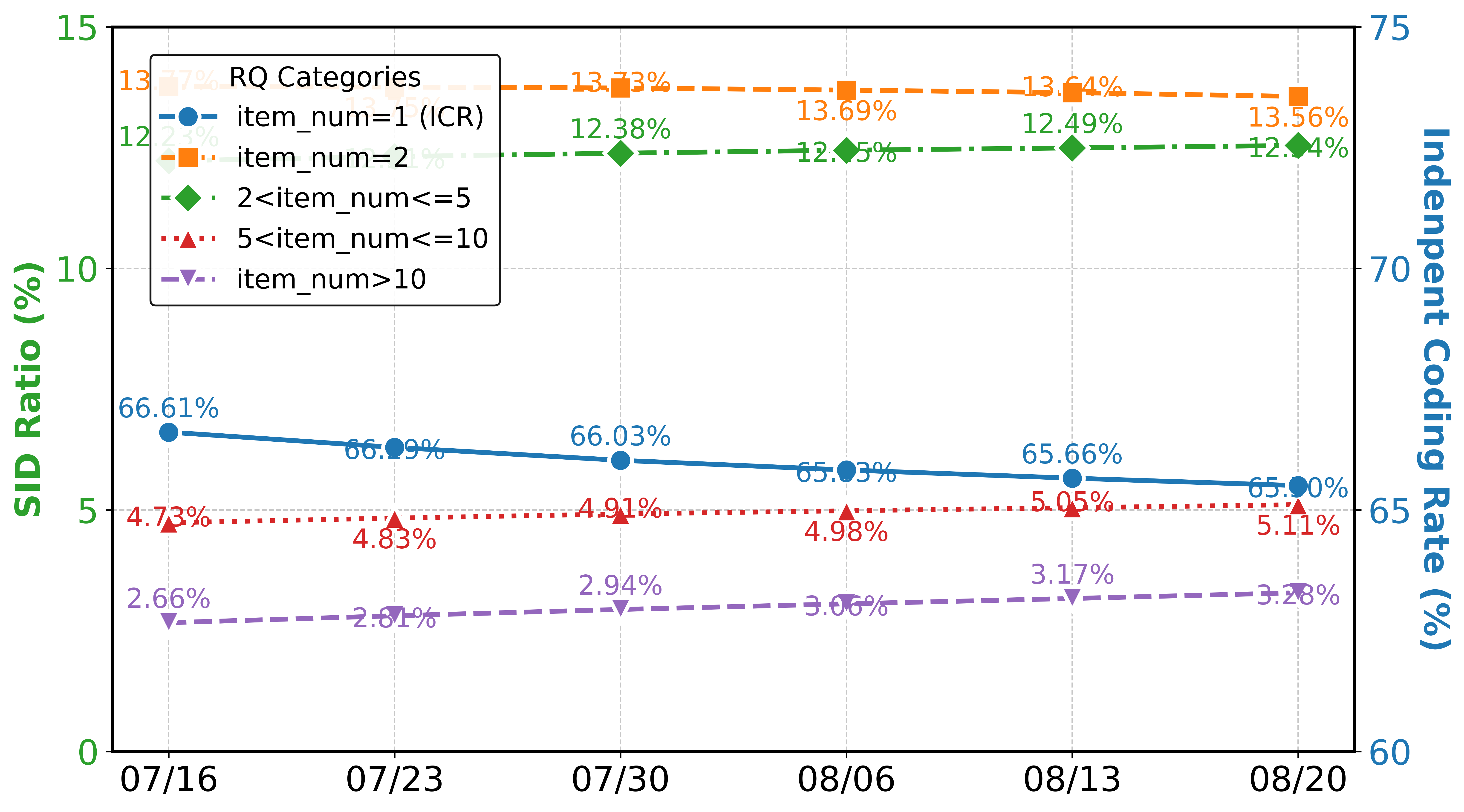

- OneSearch achieves higher recall (HR@350) and comparable or superior ranking quality (MRR@350) relative to the online MCA baseline.

- KHQE, OPQ, and multi-view behavior sequence injection each contribute significant performance gains. The system is also robust to item pool changes, maintaining high codebook utilization and a high independent coding rate over time.

Figure 4: ICR and SID ratio indicators of RQ-Kmeans over time, demonstrating stability under dynamic item pool conditions.
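For reference, HR@K and MRR@K can be computed as below. This sketch assumes one target item per request and gives zero reciprocal-rank credit when the target falls outside the top K; the paper's evaluation may differ (for example, by counting several clicked items per request), and the toy K=2 stands in for K=350.

```python
def hit_rate_at_k(ranked_lists, targets, k):
    """Share of requests whose target item appears in the top-k results."""
    hits = sum(1 for ranked, target in zip(ranked_lists, targets) if target in ranked[:k])
    return hits / len(targets)


def mrr_at_k(ranked_lists, targets, k):
    """Mean reciprocal rank of the target, counting 0 when it is outside the top k."""
    total = 0.0
    for ranked, target in zip(ranked_lists, targets):
        top = ranked[:k]
        if target in top:
            total += 1.0 / (top.index(target) + 1)
    return total / len(targets)


ranked = [["a", "b", "c"], ["x", "y", "z"]]
targets = ["b", "q"]
print(hit_rate_at_k(ranked, targets, k=2))  # 0.5
print(mrr_at_k(ranked, targets, k=2))       # 0.25
```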

Online A/B Testing

Deployed on the Kuaishou platform, OneSearch demonstrates:

- CTR and Conversion Gains: Statistically significant improvements of +1.67% in item CTR, +2.40% in buyers, and +3.22% in order volume.

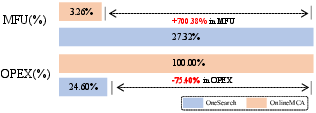

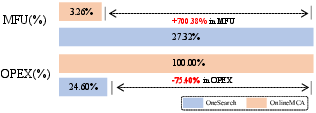

- Resource Efficiency: Model FLOPs Utilization (MFU) increases from 3.26% (MCA) to 27.32% (OneSearch), and operational expenditure (OPEX) is reduced by 75.40%.

Figure 5: Comparisons of MFU and OPEX for online MCA and OneSearch, highlighting substantial resource efficiency improvements.

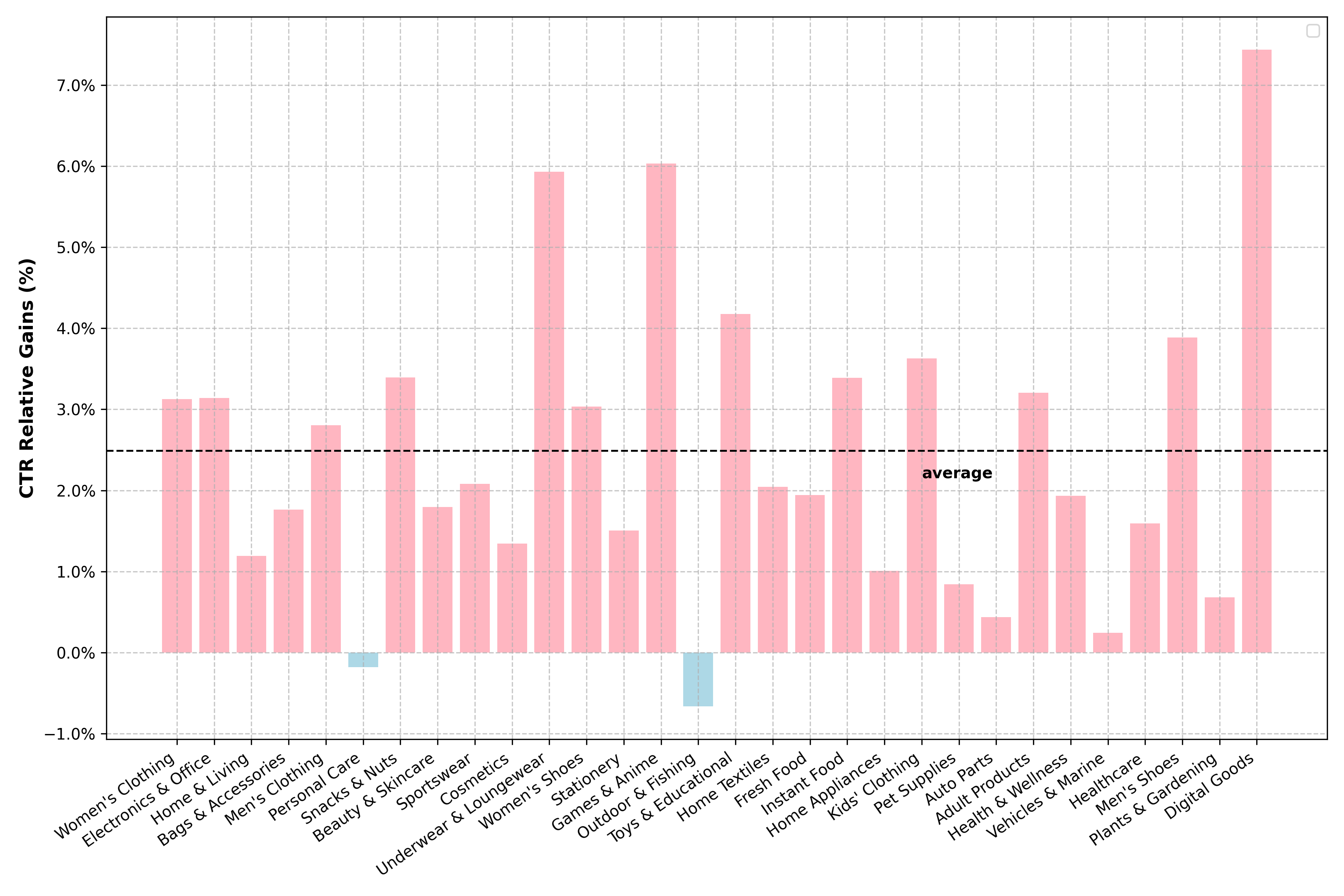

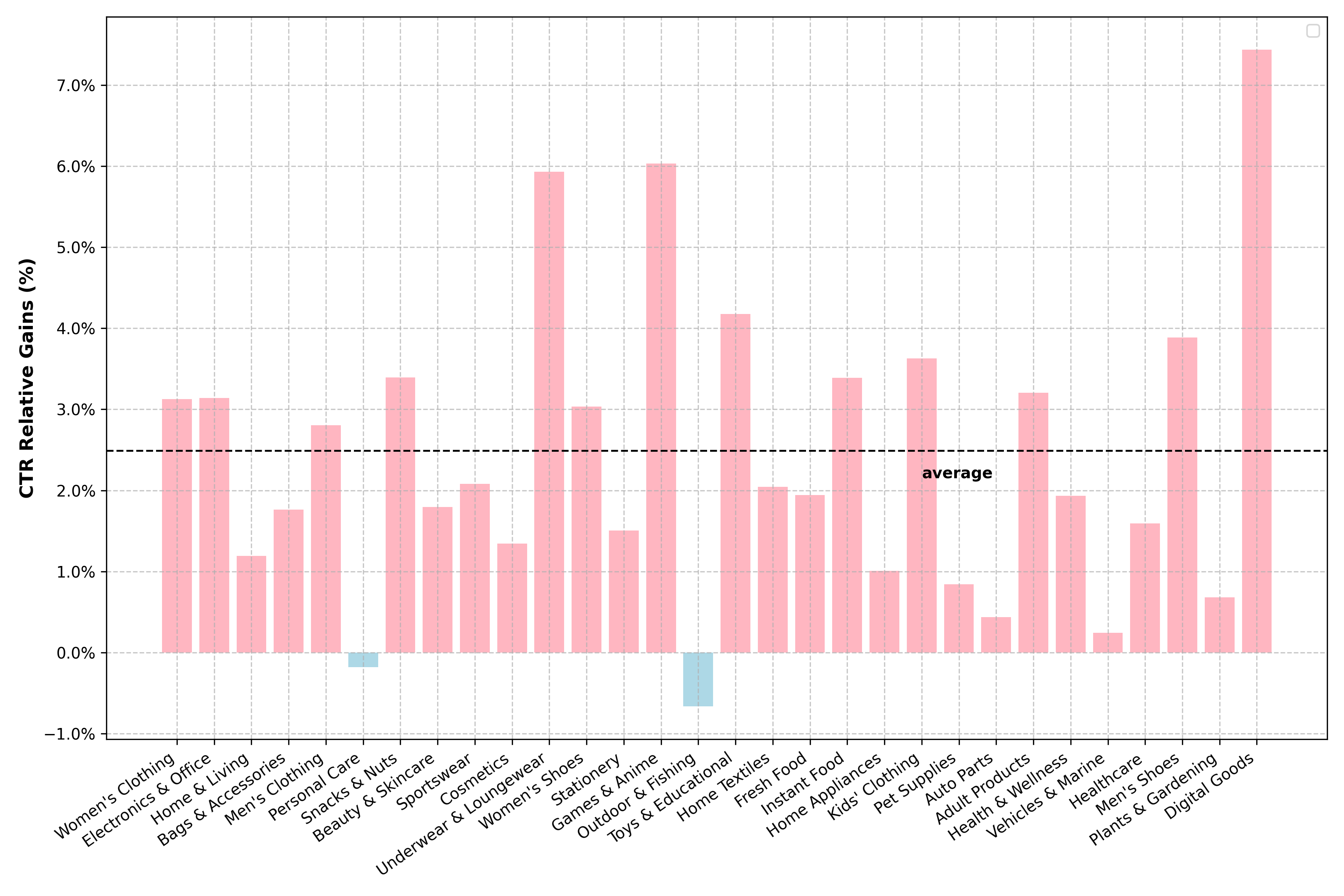

- Industry and Query Coverage: Gains are observed across 28 of the top 30 industries and for queries at all popularity levels, including long-tail queries.

Figure 6: Online CTR relative gains for the top 30 industries, showing broad applicability of OneSearch.

- User Experience: Page good rate, item quality, and query-item relevance all increase, confirming improvements in user experience.
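Using the standard definition of MFU (the paper's exact FLOP accounting is not given here), the reported resource figures translate into the following ratios:

$$
\mathrm{MFU} = \frac{\text{model FLOPs executed per second}}{\text{peak hardware FLOPs per second}}, \qquad
\frac{\mathrm{MFU}_{\text{OneSearch}}}{\mathrm{MFU}_{\text{MCA}}} = \frac{27.32\%}{3.26\%} \approx 8.4
$$

$$
\mathrm{OPEX}_{\text{OneSearch}} = (1 - 0.7540)\,\mathrm{OPEX}_{\text{MCA}} = 0.246\,\mathrm{OPEX}_{\text{MCA}}, \qquad
\frac{\mathrm{OPEX}_{\text{MCA}}}{\mathrm{OPEX}_{\text{OneSearch}}} \approx 4.1
$$

In other words, the same hardware performs roughly eight times more useful model computation, while serving cost falls to about a quarter of the MCA baseline.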

Implications and Future Directions

OneSearch demonstrates that unified, end-to-end generative retrieval can replace complex, fragmented MCA pipelines in industrial e-commerce search, yielding improvements in both user engagement and system efficiency. The framework's modular design, which combines advanced quantization, multi-view user modeling, and preference-aware optimization, enables robust adaptation to dynamic item pools and evolving user behavior.

Key implications:

- OneSearch reduces operational complexity, improves hardware utilization, and enhances user experience at scale.

- The results challenge the necessity of multi-stage architectures for large-scale retrieval, suggesting that joint modeling of relevance and personalization is feasible and beneficial.

Future work should focus on real-time tokenization for streaming data, further reinforcement learning for preference alignment, and integration of multi-modal item features (e.g., images, video) to enhance semantic understanding and reasoning.

Conclusion

OneSearch establishes a new paradigm for e-commerce search by unifying retrieval and ranking in a single generative model, leveraging hierarchical quantization, multi-view user modeling, and preference-aware optimization. Extensive offline and online evaluations confirm its superiority over traditional MCA systems in both effectiveness and efficiency. The deployment at scale on Kuaishou demonstrates its industrial viability and sets a benchmark for future research in generative retrieval for search and recommendation.