- The paper introduces RecGPT, a foundation model that leverages unified tokenization and hybrid attention for zero-shot, cross-domain recommendation.

- The paper details a transformer-based approach integrating bidirectional-causal attention and Trie-based beam search for efficient sequential prediction.

- The paper demonstrates strong cold-start and scalability performance across six diverse datasets, highlighting practical benefits for real-world systems.

RecGPT: A Foundation Model for Sequential Recommendation

Research in recommender systems has steadily moved toward cross-domain generalization and effective operation in data-sparse environments. The paper "RecGPT: A Foundation Model for Sequential Recommendation" addresses a key limitation of traditional ID-based recommenders, whose item representations are tied to the dataset they were trained on, by introducing a foundation model that supports zero-shot cross-domain recommendation through unified text-based item tokenization and hybrid bidirectional-causal attention. This essay details the methodological innovations, architectural decisions, and empirical validation of the RecGPT model.

Architectural Framework

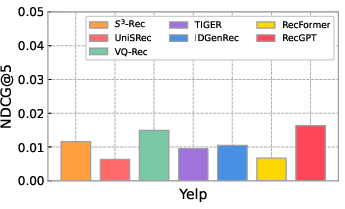

The RecGPT framework departs from traditional ID-based recommender systems by representing items through their text rather than through arbitrary identifiers. The architecture is built on three components:

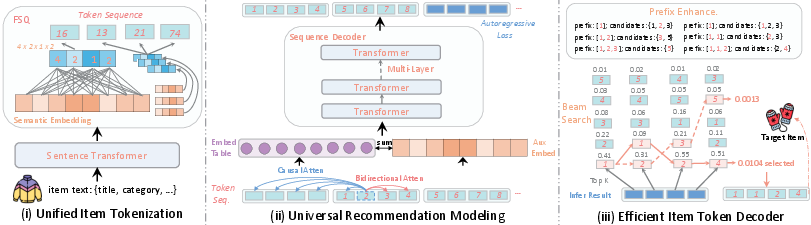

- Unified Item Tokenization: Item text descriptions are encoded with a Sentence Transformer and then discretized with Finite Scalar Quantization (FSQ), so every item, regardless of domain, is represented by discrete tokens from one shared space. Because these tokens derive from item semantics rather than dataset-specific IDs, knowledge transfers across domains without retraining the model, which ID-based systems cannot offer.

Figure 1: Architecture of our proposed foundation model, RecGPT, for recommender systems, featuring unified item tokenization, universal recommendation modeling, and efficient token decoding.

- Universal Recommendation Modeling: A transformer-based sequence encoder uses hybrid bidirectional-causal attention. Bidirectional attention among the tokens of a single item keeps the item representation coherent (intra-item dependencies), while causal attention across items models the sequential dynamics of user interaction data (inter-item dependencies). Auxiliary semantic pathways help mitigate the information lost to quantization.

- Efficient Token Decoder: Next-item prediction uses a beam search constrained by Trie-based prefix constraints, so decoding only explores token sequences that correspond to real items. This prunes the vast token space and keeps cross-domain recommendations accurate and robust within practical, real-time inference limits.
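The three components above can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the `tanh`-and-round form of FSQ, the fixed number of tokens per item, and the `toy_log_probs` stand-in for the trained model are all assumptions made for illustration. A real pipeline would first obtain the item embedding from a Sentence Transformer and project it to a few dimensions before quantizing.

```python
import math
import numpy as np

def fsq_tokenize(embedding, levels):
    """Simplified FSQ (assumed form): squash each dimension into (-1, 1), then
    round it to one of levels[i] evenly spaced values. One code per dimension."""
    z = np.tanh(np.asarray(embedding, dtype=float))
    n_levels = np.asarray(levels)
    codes = np.round((z + 1.0) / 2.0 * (n_levels - 1)).astype(int)
    return tuple(int(c) for c in codes)

def hybrid_attention_mask(n_items, tokens_per_item):
    """mask[i, j] is True when position i may attend to position j: any token
    of the same item (bidirectional) or of an earlier item (causal)."""
    item_of = np.arange(n_items * tokens_per_item) // tokens_per_item
    return item_of[None, :] <= item_of[:, None]

def build_trie(item_tokens):
    """Nested-dict trie over item token tuples; key None marks a complete item.
    Items that share an identical token tuple would overwrite each other here."""
    root = {}
    for item_id, tokens in item_tokens.items():
        node = root
        for tok in tokens:
            node = node.setdefault(tok, {})
        node[None] = item_id
    return root

def constrained_beam_search(log_prob_fn, trie, depth, beam_width):
    """Beam search that only expands tokens present in the trie, so every
    finished beam decodes to an item that exists in the catalog."""
    beams = [(0.0, (), trie)]  # (cumulative log-prob, token prefix, trie node)
    for _ in range(depth):
        candidates = []
        for score, prefix, node in beams:
            log_probs = log_prob_fn(prefix)  # hypothetical model call
            for tok, child in node.items():
                if tok is None:
                    continue
                candidates.append((score + log_probs[tok], prefix + (tok,), child))
        candidates.sort(key=lambda b: b[0], reverse=True)
        beams = candidates[:beam_width]
    return [(node[None], score) for score, _, node in beams if None in node]

def toy_log_probs(prefix):
    """Stand-in for the trained model: fixed scores for tokens 0, 1, 2."""
    return [math.log(0.6), math.log(0.3), math.log(0.1)]

# Toy catalog with hand-written token tuples (not produced by a real encoder).
catalog = {"a": (0, 1), "b": (0, 2), "c": (1, 1)}
top_items = constrained_beam_search(toy_log_probs, build_trie(catalog),
                                    depth=2, beam_width=2)
print(fsq_tokenize([0.0, 10.0, -10.0], levels=[3, 5, 5]))
print(hybrid_attention_mask(n_items=2, tokens_per_item=2).astype(int))
print([item for item, _ in top_items])
```

The trie is what keeps decoding tractable in this sketch: at each step the beam scores only the children of the current trie node instead of the full token vocabulary, and token sequences that match no item are never generated.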

Empirical Observations

RecGPT's effectiveness is empirically demonstrated through extensive evaluations, revealing significant advantages:

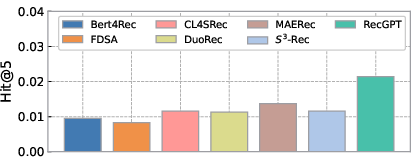

- Zero-shot Generalization: Across six diverse datasets, RecGPT consistently outperforms traditional models even in zero-shot scenarios. The architecture allows for immediate embedding of new items based solely on their textual description, bypassing the need for retraining.

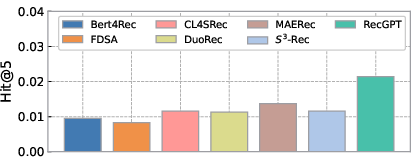

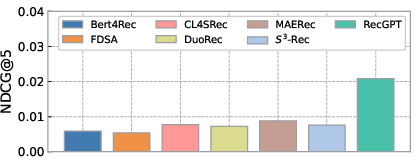

Figure 2: Performance on Industrial Dataset. Zero-shot comparison of RecGPT against baselines on a production news platform. Results show RecGPT's superior (a) Hit Rate and (b) NDCG performance.

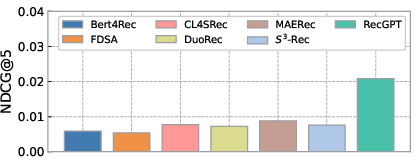

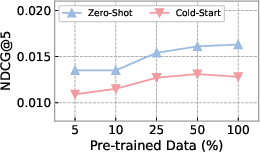

- Cold-Start Performance: The model excels in cold-start scenarios, which are notoriously challenging for recommender systems. The semantic tokenization mechanism enables RecGPT to derive meaningful recommendations from minimal user-item interactions.

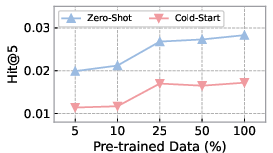

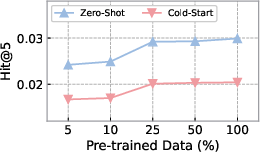

- Scalability and Efficiency: The model follows predictable scaling behavior, with performance improving as the volume of training data grows. This suggests that, in practice, adding data is a more reliable lever than adding architectural complexity.

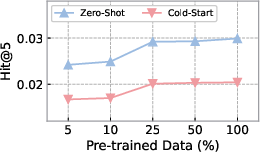

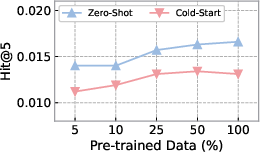

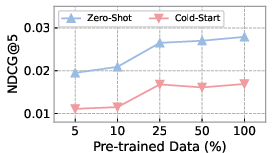

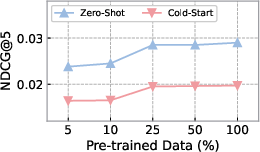

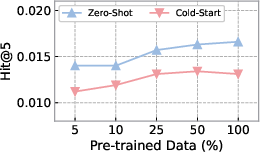

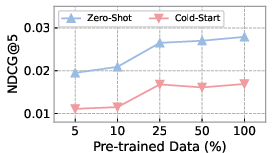

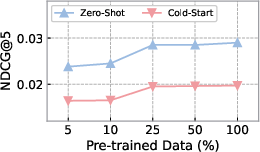

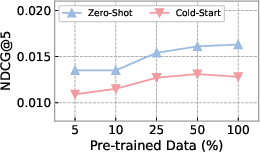

Figure 3: Performance w.r.t. the volume of training data.

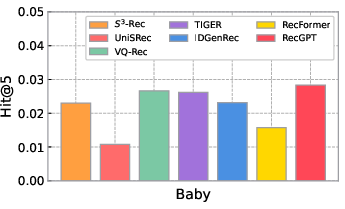

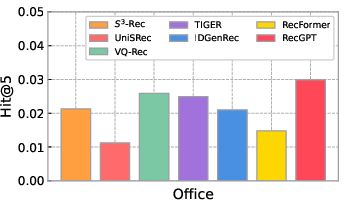

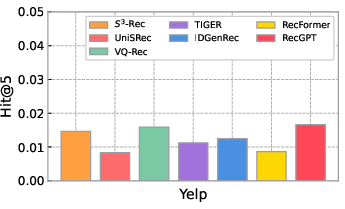

Comparative Analysis

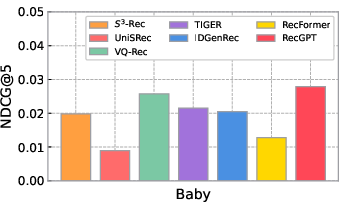

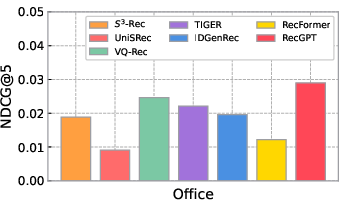

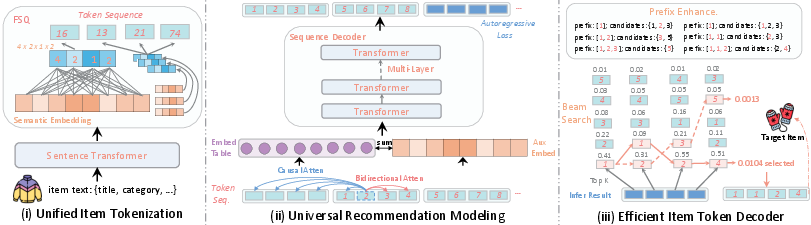

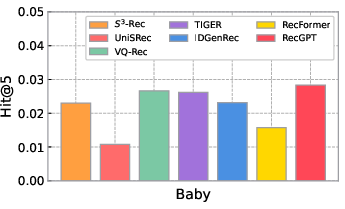

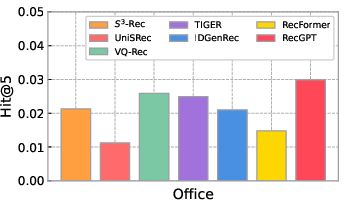

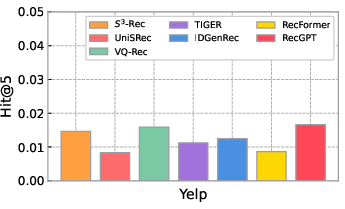

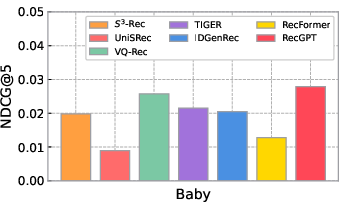

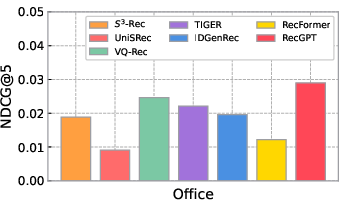

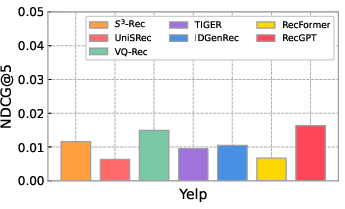

The paper also benchmarks RecGPT against leading pre-trained sequential recommenders, including contemporary models built on self-supervised learning and vector quantization. RecGPT shows robust advantages in predictive performance over these baselines, which supports the effectiveness of its tokenization and attention design.

Figure 4: Performance comparison of pre-trained sequential recommenders across different datasets, measuring both ranking accuracy (HR@10, top) and quality (NDCG@10, bottom).
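For readers unfamiliar with the two metrics in Figure 4, the sketch below shows how HR@K and NDCG@K are commonly computed for next-item prediction when each test sequence has a single held-out target item. Whether the paper uses exactly this single-target form is an assumption, and the function names are illustrative.

```python
import math

def hit_rate_at_k(ranked_items, target, k=10):
    """1.0 if the target item appears among the top-k recommendations."""
    return 1.0 if target in ranked_items[:k] else 0.0

def ndcg_at_k(ranked_items, target, k=10):
    """With one relevant item the ideal DCG is 1, so NDCG reduces to
    1 / log2(rank + 1), where rank is the 1-based position of the target."""
    top_k = ranked_items[:k]
    if target not in top_k:
        return 0.0
    rank = top_k.index(target) + 1
    return 1.0 / math.log2(rank + 1)

def evaluate(predictions, targets, k=10):
    """Average both metrics over (ranked list, target) pairs."""
    n = len(targets)
    hr = sum(hit_rate_at_k(p, t, k) for p, t in zip(predictions, targets)) / n
    ndcg = sum(ndcg_at_k(p, t, k) for p, t in zip(predictions, targets)) / n
    return hr, ndcg
```

HR@K only records whether the target made the list, while NDCG@K also rewards placing it near the top, which is why the two are usually reported together.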

Practical Implications and Future Directions

The robust zero-shot performance and adaptability of RecGPT pave the way for deploying recommendation systems in rapidly changing environments and on platforms with varying data structures. Future research could focus on further integrating diverse modalities beyond text, potentially incorporating visual or auditory data to enrich item representations.

Furthermore, while the current architecture is computationally efficient, lightweight adaptations for deployment in resource-constrained environments remain a valuable avenue to examine. Combining the foundational knowledge of LLMs with this approach could yield even more generalizable and capable recommender systems.

Conclusion

RecGPT marks a clear step forward in recommender system design, showing how a foundation model with unified tokenization and hybrid attention can achieve strong cross-domain and cold-start recommendation performance. Its architecture provides a basis for future work on semantically rich, domain-agnostic recommendation.