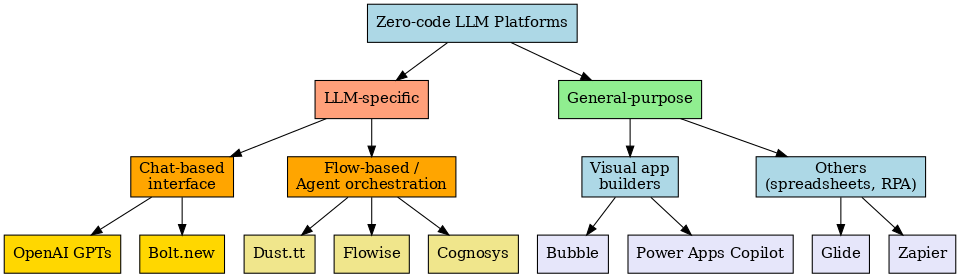

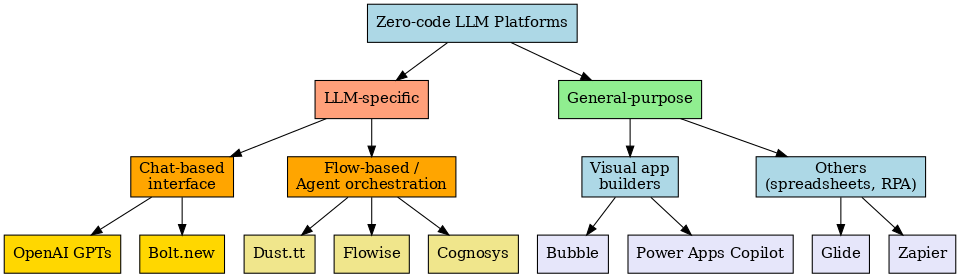

- The paper introduces a taxonomy categorizing zero-code LLM platforms by interface, backend support, output type, and extensibility.

- The paper compares dedicated LLM app builders and general-purpose no-code platforms, highlighting strengths and trade-offs in agent support and workflow orchestration.

- The paper discusses limitations in customizability, scalability, and reliability while outlining future trends like multimodality and on-device deployment.

Introduction and Motivation

The proliferation of large language models (LLMs) has catalyzed a shift in software development: zero-code platforms now let users build sophisticated applications through natural language instructions rather than traditional programming. This survey systematically reviews the landscape of such platforms, focusing on their taxonomy, core features, comparative strengths, and the trade-offs inherent in their adoption. The analysis covers both dedicated LLM-based app builders (e.g., OpenAI Custom GPTs, Bolt.new, Dust.tt, Flowise, Cognosys) and general-purpose no-code platforms (e.g., Bubble, Glide) that have integrated LLM capabilities.

The surveyed platforms are first split by platform category (dedicated LLM builders versus general-purpose no-code tools) and then categorized along four principal axes: interface type, LLM backend support, output type, and extensibility. This taxonomy clarifies the heterogeneity of the ecosystem and the range of user experiences and application domains it covers.

Figure 1: Taxonomy of zero-code platforms for LLM-powered app creation.

- Platform Category: Dedicated LLM platforms (e.g., Dust.tt, Flowise, Cognosys) are optimized for agent-centric or workflow-driven applications, while general no-code platforms (e.g., Bubble, Glide) focus on broader app development, with LLMs as augmentative features.

- Interface Type: Ranges from conversational (chat-based) and visual programming (flow/graph editors) to traditional GUI builders and template-driven configurations.

- LLM Backend: Some platforms are tightly coupled to specific providers (e.g., OpenAI, Anthropic), while others are model-agnostic, supporting multiple LLMs or even on-premise deployment.

- Output Type: Outputs include chatbots/AI assistants, full web/mobile applications, backend workflows, or hybrid solutions.

- Customization and Extensibility: Varies from pure no-code (natural language only) to low-code hooks, code exportability, and plugin-based extensibility.

This taxonomy highlights that "zero-code LLM platform" is not monolithic; rather, it encompasses a continuum from highly specialized agent builders to general-purpose app creators with embedded AI.
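The taxonomy above can be read as a small data model. The following is a minimal sketch, not something taken from the survey: the class names, enum members, and the `matching` helper are hypothetical, and the field values are one reading of the platform descriptions in this summary rather than authoritative classifications. Axes the summary does not state for a given platform are left as `None`.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Category(Enum):
    DEDICATED_LLM = "dedicated LLM platform"
    GENERAL_NO_CODE = "general no-code platform"


class Interface(Enum):
    CONVERSATIONAL = "conversational"
    VISUAL_FLOW = "visual programming"
    GUI_BUILDER = "GUI builder"
    TEMPLATE = "template-driven"


class Backend(Enum):
    PROVIDER_COUPLED = "tied to a specific provider"
    MODEL_AGNOSTIC = "model-agnostic"


class Output(Enum):
    CHATBOT = "chatbot / AI assistant"
    FULL_APP = "full web/mobile application"
    WORKFLOW = "backend workflow"
    HYBRID = "hybrid"


class Extensibility(Enum):
    PURE_NO_CODE = "natural language only"
    LOW_CODE_HOOKS = "low-code hooks"
    CODE_EXPORT = "code export"
    PLUGINS = "plugin-based"


@dataclass(frozen=True)
class PlatformProfile:
    """One platform placed on the category split plus the four axes."""
    name: str
    category: Category
    interface: Optional[Interface] = None
    backend: Optional[Backend] = None
    output: Optional[Output] = None
    extensibility: Optional[Extensibility] = None


# Illustrative entries only; None means "not stated in this summary".
PROFILES = [
    PlatformProfile(
        "OpenAI Custom GPTs",
        Category.DEDICATED_LLM,
        interface=Interface.CONVERSATIONAL,  # assumption: instruction-driven setup
        backend=Backend.PROVIDER_COUPLED,
        output=Output.CHATBOT,
    ),
    PlatformProfile(
        "Flowise",
        Category.DEDICATED_LLM,
        interface=Interface.VISUAL_FLOW,
    ),
    PlatformProfile(
        "Bubble",
        Category.GENERAL_NO_CODE,
        interface=Interface.GUI_BUILDER,  # assumption: not stated in this summary
        output=Output.FULL_APP,
    ),
]


def matching(profiles: List[PlatformProfile], **criteria) -> List[PlatformProfile]:
    """Return the profiles whose fields equal every given criterion."""
    return [
        p for p in profiles
        if all(getattr(p, field) == value for field, value in criteria.items())
    ]
```

For example, `matching(PROFILES, category=Category.DEDICATED_LLM)` selects the two dedicated builders in this toy list, which mirrors how the taxonomy separates agent builders from general-purpose app creators.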

Core Features and Capabilities

The platforms are compared across several critical capabilities:

- Agent Support: Platforms like Cognosys and Dust.tt support autonomous, multi-step agents with tool use, while OpenAI Custom GPTs and general no-code tools offer more limited agentic behavior.

- Memory and Knowledge Integration: More advanced platforms combine short-term (session-based) memory with long-term memory backed by retrieval-augmented generation (RAG) and vector databases, enabling context-aware and knowledge-grounded applications.

- Workflow and Control Logic: Visual flow editors (Flowise) and workflow orchestrators (Dust.tt) provide explicit control over logic and branching, whereas chat-centric platforms rely on implicit LLM reasoning.

- API Integration: The breadth of tool and API connectivity is a key differentiator, with open-source and enterprise-focused platforms offering extensive integration options.

- Multimodal and AI-Assisted Features: Emerging support for image, audio, and document processing, as well as AI-assisted app construction (e.g., Bubble AI Builder, Power Apps Copilot), is expanding the scope of zero-code development.
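To make the distinction between short-term and long-term memory in the list above concrete, here is a toy sketch. It is an assumption-laden illustration, not how any surveyed platform works: the `Memory` class and its methods are hypothetical, and a bag-of-words cosine similarity stands in for the learned embeddings and vector database a real RAG setup would use.

```python
import math
from collections import Counter, deque
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (stand-in for a learned vector)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class Memory:
    def __init__(self, documents: List[str], window: int = 4):
        # Short-term memory: only the last `window` turns of the session.
        self.session = deque(maxlen=window)
        # Long-term memory: documents indexed once, searched per query.
        self.store = [(doc, embed(doc)) for doc in documents]

    def remember(self, role: str, text: str) -> None:
        self.session.append((role, text))

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.store, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]

    def build_prompt(self, query: str) -> str:
        """Combine retrieved knowledge, recent turns, and the new query."""
        context = "\n".join(self.retrieve(query))
        history = "\n".join(f"{role}: {text}" for role, text in self.session)
        return f"Context:\n{context}\n\nHistory:\n{history}\n\nUser: {query}"


memory = Memory([
    "Refunds are processed within 14 days",
    "Our office is closed on public holidays",
])
memory.remember("user", "Hi there")
prompt = memory.build_prompt("how long do refunds take")
```

The bounded `deque` shows why session memory forgets older turns, while the indexed store persists across the conversation. A production system would replace `embed` and the linear scan with an embedding model and a vector index.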

The survey provides a granular comparison of representative platforms:

- OpenAI Custom GPTs: Enable rapid creation of chatbots via instruction and knowledge upload, but are limited in logic complexity and extensibility.

- Bolt.new: Combines chat-driven code generation with real-time deployment, suitable for users with some technical background.

- Dust.tt: Emphasizes workflow orchestration, data connectivity, and agent monitoring, targeting enterprise use cases.

- Flowise: Open-source, visual flow builder leveraging LangChain, offering high extensibility and self-hosting.

- Cognosys: Focuses on AutoGPT-style agents for non-technical users, with high abstraction but limited customizability.

- Bubble and Glide: General-purpose no-code platforms with LLM integration, supporting full app creation and AI-augmented features.

Trade-offs and Limitations

Zero-code LLM platforms introduce several trade-offs:

- Customizability vs. Simplicity: Accessibility comes at the cost of limited control over advanced logic, integrations, and model fine-tuning.

- Scalability and Performance: Overheads in workflow orchestration and LLM API usage can impede performance and cost-efficiency at scale.

- Vendor Lock-in: Proprietary platforms often restrict code export and data portability, raising concerns for long-term maintainability.

- Reliability and Validation: LLM-driven logic is inherently probabilistic, complicating testing and error handling; most platforms lack robust evaluation pipelines.

- Prompt Engineering: Despite the "no-code" label, effective use often requires prompt design expertise, which can be a barrier for non-technical users.

- Shallow Learning: Teams that rely on these platforms may not build a deep understanding of the underlying AI and software architecture, which impedes later migration to custom solutions.
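The reliability point above can be illustrated with a simple check: because LLM output is probabilistic, a single passing run says little, so one option is to sample the same prompt repeatedly and report a pass rate. This is a minimal sketch under stated assumptions; `pass_rate` and `make_stub` are hypothetical helpers, and the stub replaces a real model call with canned outputs so the example is deterministic.

```python
from itertools import cycle
from typing import Callable, Iterable


def pass_rate(
    generate: Callable[[str], str],
    prompt: str,
    check: Callable[[str], bool],
    trials: int = 20,
) -> float:
    """Call `generate` on the same prompt `trials` times; return the fraction passing `check`."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    passed = sum(1 for _ in range(trials) if check(generate(prompt)))
    return passed / trials


def make_stub(outputs: Iterable[str]) -> Callable[[str], str]:
    """Stand-in for an LLM call: cycles through canned outputs, ignoring the prompt."""
    canned = cycle(list(outputs))

    def generate(prompt: str) -> str:
        return next(canned)

    return generate


# Three of every four canned answers match the expected format.
stub = make_stub(["42", "42", "forty-two", "42"])
rate = pass_rate(stub, "What is 6 * 7?", lambda s: s.strip() == "42", trials=4)
```

Here `rate` is 0.75. Against a real model, the same harness would surface how often a zero-code workflow's output violates an expected format, which is the kind of evaluation most platforms are said to lack.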

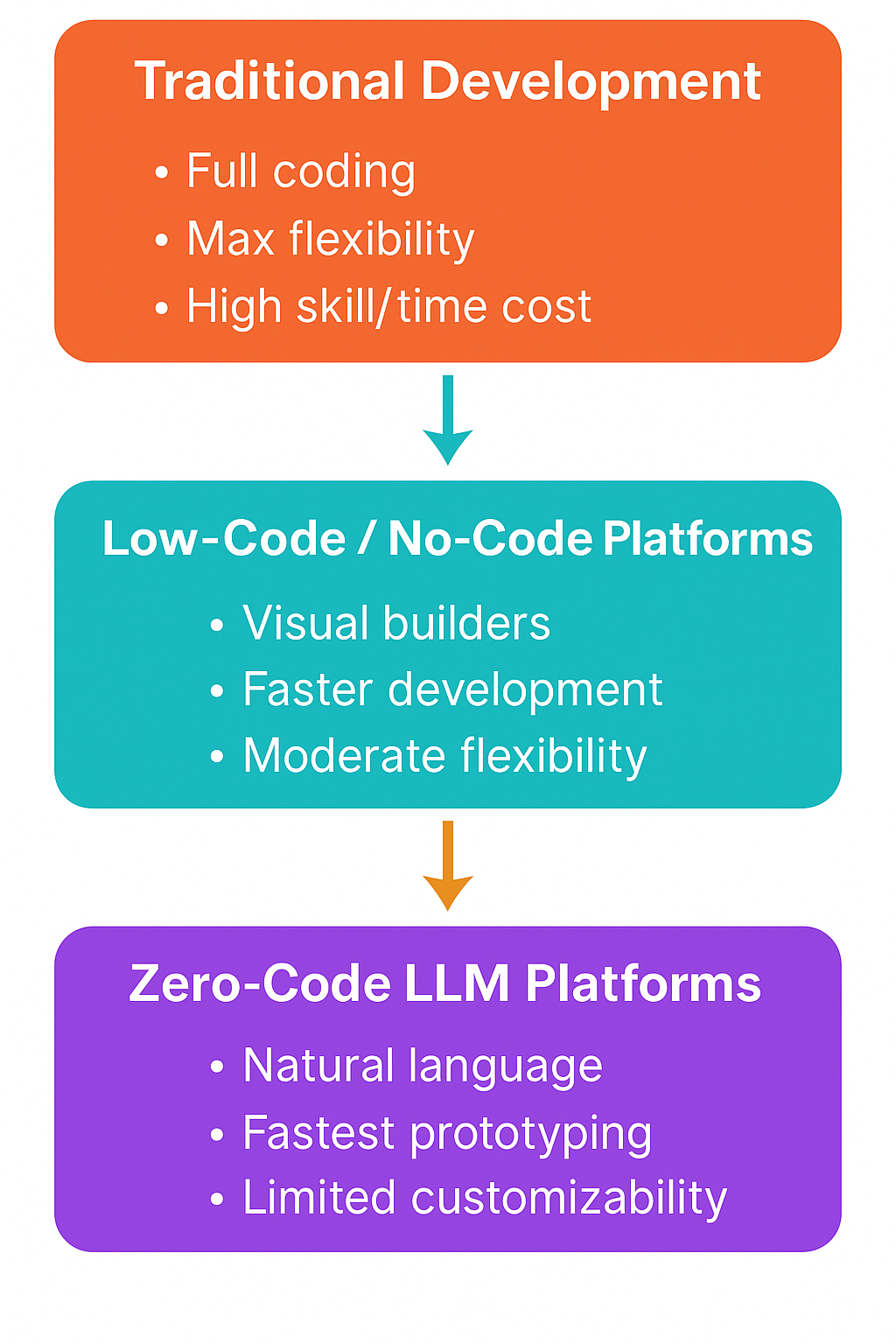

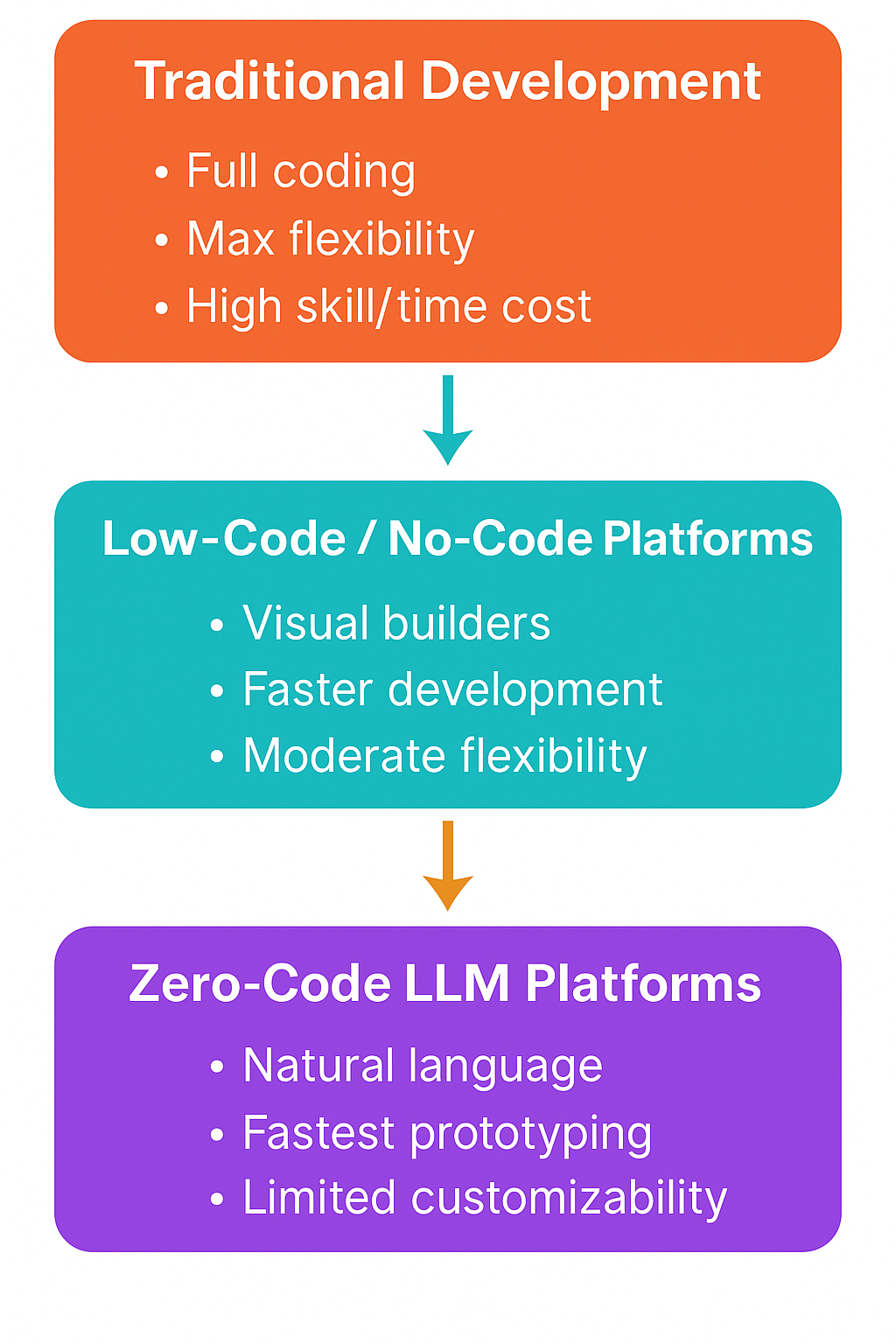

Comparison with Traditional and Low-Code Development

Figure 2: Development Paradigms: Traditional vs. Low-Code vs. Zero-Code LLMs.

- Traditional Coding: Offers maximal flexibility and control, but at the cost of higher development time and required expertise.

- Low-Code: Balances visual development with code extensibility, but struggles with complex, non-standard logic.

- Zero-Code LLM: Excels in rapid prototyping and accessibility, particularly for domain experts, but is constrained in scalability, reliability, and advanced customization.

These paradigms are likely to coexist, with zero-code tools serving as accelerators for prototyping and internal tools, and traditional development reserved for production-grade, mission-critical systems.

Future Directions

Several trends are poised to shape the evolution of zero-code LLM platforms:

- Multimodal Capabilities: Integration of image, audio, and video processing will broaden application domains.

- On-Device and Private LLMs: Advances in model efficiency will enable local deployment for privacy and offline use.

- Advanced Orchestration: Multi-agent workflows, debugging tools, and safety features will enhance robustness and transparency.

- Collaboration and Community Sharing: Template galleries and marketplaces will facilitate reuse and onboarding.

- Convergence with IDEs: Hybrid interfaces will allow seamless collaboration between non-developers and engineers.

- AI Improvements: As LLMs improve in reasoning and reliability, zero-code platforms will inherit these advances, reducing the need for prompt engineering and manual validation.

Conclusion

Zero-code LLM platforms represent a significant advancement in democratizing AI-powered application development. By abstracting away programming through natural language and visual interfaces, they enable rapid prototyping and empower a broader range of users. However, these gains are counterbalanced by limitations in customizability, scalability, and reliability. The trajectory of the field points toward greater multimodality, local deployment, and hybrid collaboration models, with the boundary between developer and non-developer tools becoming more permeable. As LLMs and orchestration frameworks mature, zero-code platforms are likely to become integral to the software development lifecycle, particularly for prototyping, internal tools, and domain-specific automation.