AI use in American newspapers is widespread, uneven, and rarely disclosed (2510.18774v1)

Abstract: AI is rapidly transforming journalism, but the extent of its use in published newspaper articles remains unclear. We address this gap by auditing a large-scale dataset of 186K articles from online editions of 1.5K American newspapers published in the summer of 2025. Using Pangram, a state-of-the-art AI detector, we discover that approximately 9% of newly-published articles are either partially or fully AI-generated. This AI use is unevenly distributed, appearing more frequently in smaller, local outlets, in specific topics such as weather and technology, and within certain ownership groups. We also analyze 45K opinion pieces from Washington Post, New York Times, and Wall Street Journal, finding that they are 6.4 times more likely to contain AI-generated content than news articles from the same publications, with many AI-flagged op-eds authored by prominent public figures. Despite this prevalence, we find that AI use is rarely disclosed: a manual audit of 100 AI-flagged articles found only five disclosures of AI use. Overall, our audit highlights the immediate need for greater transparency and updated editorial standards regarding the use of AI in journalism to maintain public trust.

Explain it Like I'm 14

What is this paper about?

This paper looks at how often American newspapers use AI to help write the articles you read online, which kinds of stories use AI the most, and whether newspapers tell readers when AI is involved.

What questions did the researchers ask?

The researchers wanted to answer, in simple terms:

- How much of today’s news is written partly or fully by AI?

- Where and when is AI used most (by topic, by newspaper size, by owner, by state, by language)?

- Do top national papers use AI in opinion pieces, and is that changing over time?

- Do newspapers clearly tell readers when AI helps write a story?

- When AI is used, does it usually replace the writer, or does it just help edit?

How did they study it?

To keep things fair and big enough to trust, they built three large collections of real newspaper articles and analyzed them.

The three datasets

- “Recent”: 186,000+ articles from 1,500+ U.S. newspapers, published online between June and September 2025 (lots of local and national outlets).

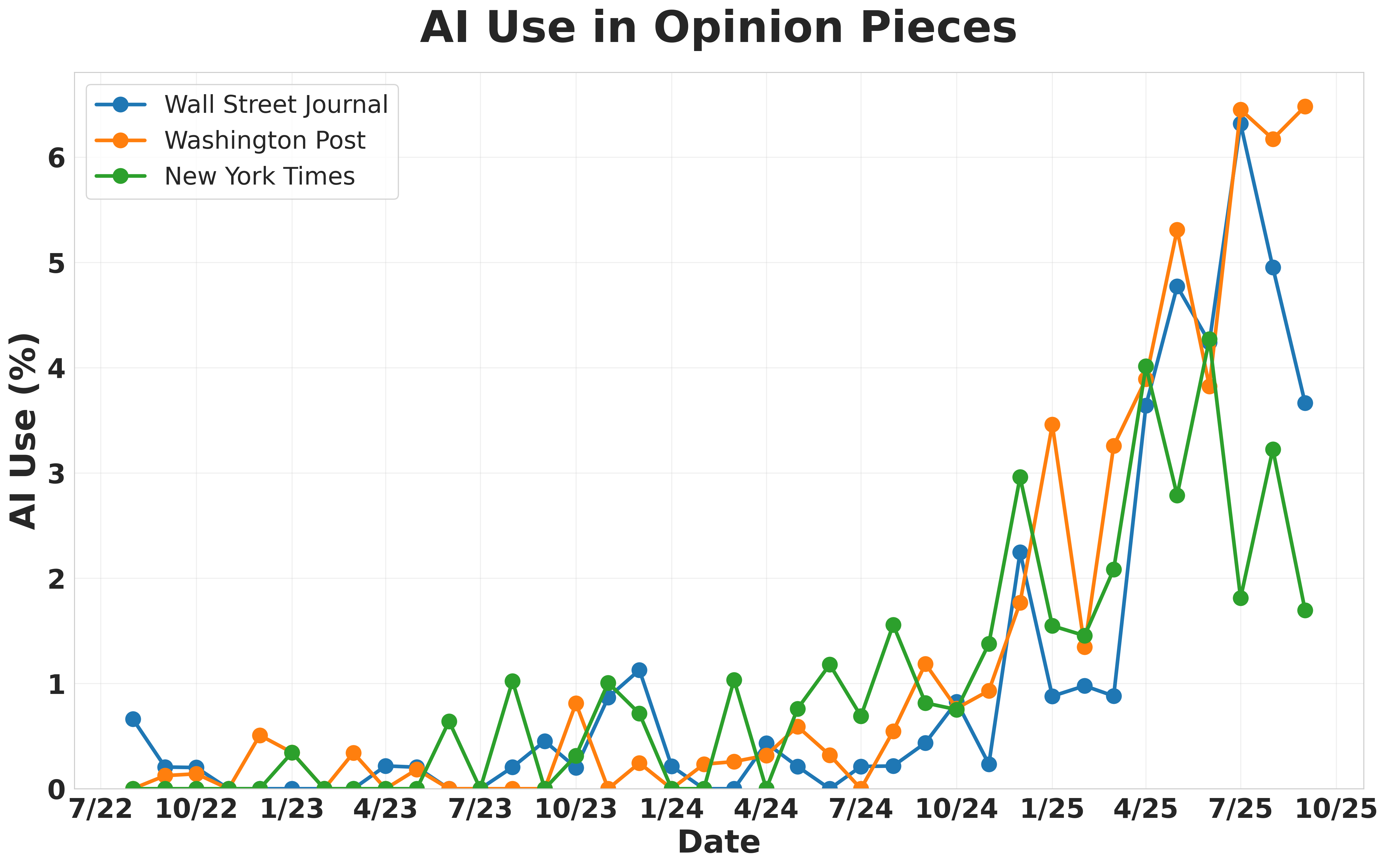

- “Opinions”: 45,000 opinion pieces from the New York Times, Washington Post, and Wall Street Journal (Aug 2022–Sept 2025).

- “AI (reporters)”: 20,000 articles by 10 long‑time reporters who wrote before and after ChatGPT came out, to see how their habits changed over time.

How they spotted AI writing

They used a tool called Pangram, which is like a metal detector—but for AI-written text. You feed in an article, and it predicts if the writing is:

- Human (mostly human-written)

- Mixed (some parts human, some parts AI)

- AI (mostly or fully AI-written)

Pangram also gives a score (0–100%) for “how likely this is AI.” The tool has been tested a lot and makes mistakes only rarely, but like any detector, it isn’t perfect.

They also:

- Checked topics (like weather, tech, sports) using a standard news-topic list, so they could compare AI use by subject.

- Matched newspapers to their size and owner (using public databases) to see if that mattered.

- Double-checked a sample with another detector (GPTZero) and did a manual read of 100 AI-flagged articles to look for disclosure.

- Looked at long quotes (over 50 words) to see if quotes were human-written inside AI-flagged stories.
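The long-quote check in the last step can be sketched in a few lines. This is a minimal illustration, not the researchers' actual code: the regular expression, the function name `long_quotes`, and the assumption that quotes are marked with straight or curly double quotation marks are all hypothetical. Only the 50-word cutoff comes from the summary above.

```python
import re

# Assumption: quotes are delimited by straight or curly double quotation marks.
# Real articles may need more careful parsing (nested or unbalanced quotes).
QUOTE_PATTERN = re.compile(r'["“]([^"“”]+)["”]')


def long_quotes(article_text, min_words=50):
    """Return quoted passages containing more than min_words words."""
    quotes = QUOTE_PATTERN.findall(article_text)
    return [q.strip() for q in quotes if len(q.split()) > min_words]


# Hypothetical toy article: one 60-word quote and one 2-word quote.
toy_article = (
    'The mayor said, "' + " ".join(["word"] * 60) + '" before adding, "Thank you."'
)
print(len(long_quotes(toy_article)))
```

Each passage returned this way could then be scored separately from the rest of the article, which is the idea behind checking whether quotes inside AI-flagged stories still read as human-written.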

What did they find?

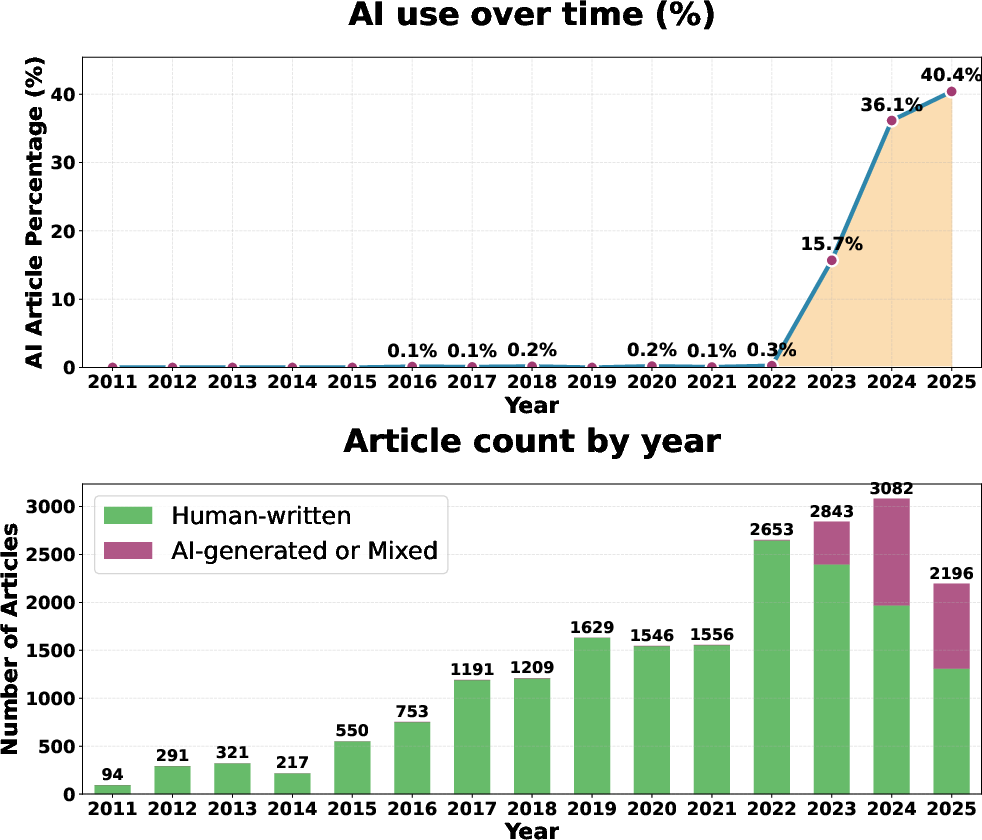

1) About 9% of new U.S. newspaper articles use AI in some way

Across 186,000 recent articles, roughly 1 in 11 were partly or mostly AI-written.
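To make the arithmetic behind "about 9%" and "1 in 11" concrete, here is a small sketch. It assumes each article already carries one of the three detector labels (Human, Mixed, AI). The function names and the toy sample are hypothetical, and the 5/4 split between Mixed and AI in the sample is invented purely for illustration.

```python
from collections import Counter

# Labels counted as "uses AI in some way".
AI_LABELS = {"Mixed", "AI"}


def ai_share(labels):
    """Fraction of articles whose detector label is Mixed or AI."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labels given")
    return sum(counts[label] for label in AI_LABELS) / total


def one_in_n(share):
    """Express a share as 'about 1 in N', with N rounded to the nearest integer."""
    return round(1 / share)


# Hypothetical toy sample of 100 labels, NOT data from the paper.
sample = ["Human"] * 91 + ["Mixed"] * 5 + ["AI"] * 4
share = ai_share(sample)
print(f"{share:.0%} of articles, about 1 in {one_in_n(share)}")
```

The same `ai_share` function could be applied to the labels within a single topic, owner, or state to compare groups, which is how the breakdowns below can be read.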

Why it matters: That’s a lot of news being shaped by AI—sometimes fine (like routine weather updates), sometimes risky (if errors slip in, or if readers aren’t told).

2) AI use is uneven—who uses it most?

- Smaller local papers use AI more than big national ones. Local newsrooms often have fewer staff and smaller budgets, so automation can be tempting.

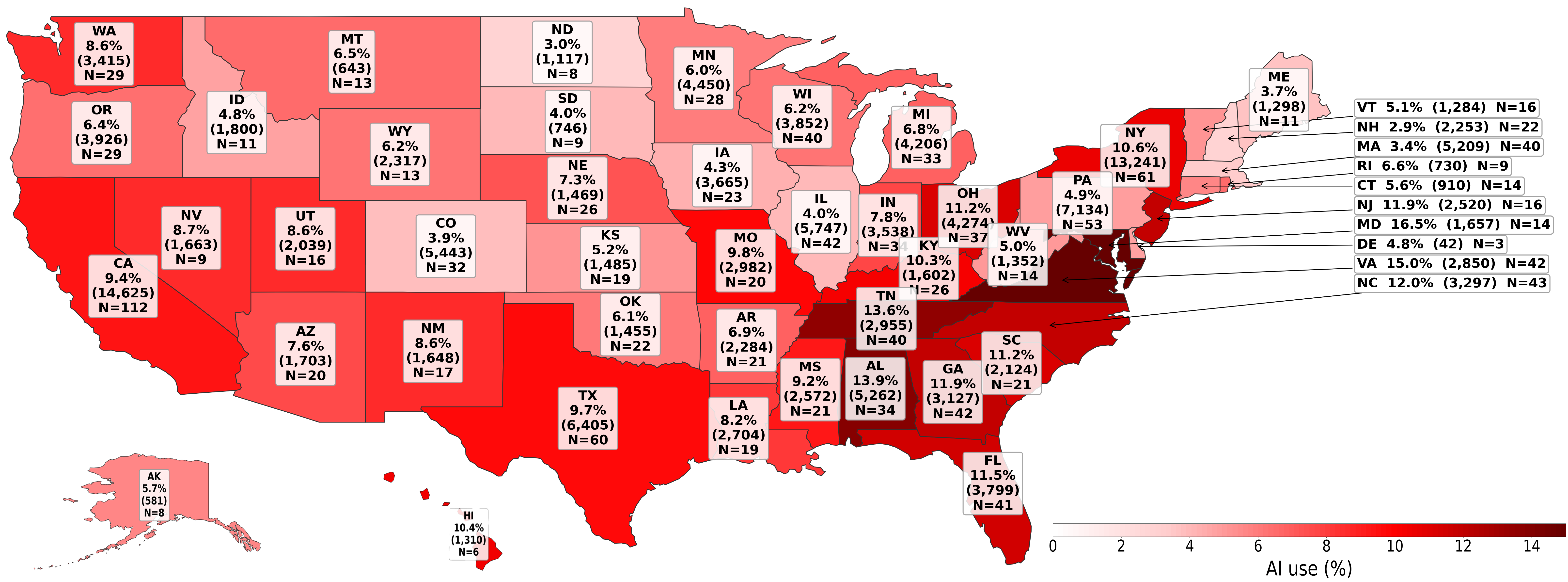

- Some states (especially in the Mid‑Atlantic and the South) had higher AI rates than others.

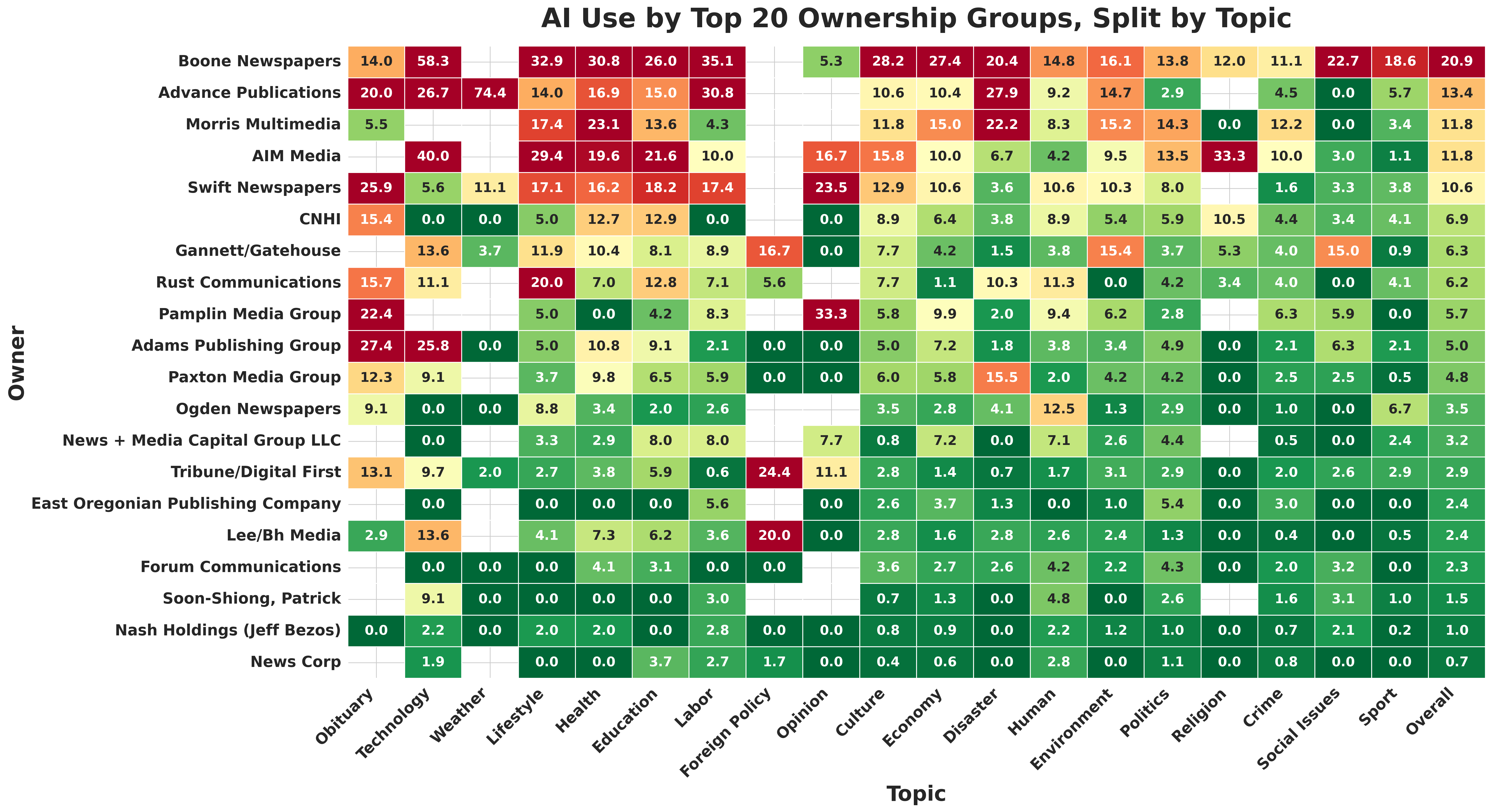

- AI use varies by owner. For example, two owners (Boone Newsmedia and Advance Publications) had especially high use, while several other big groups used it far less.

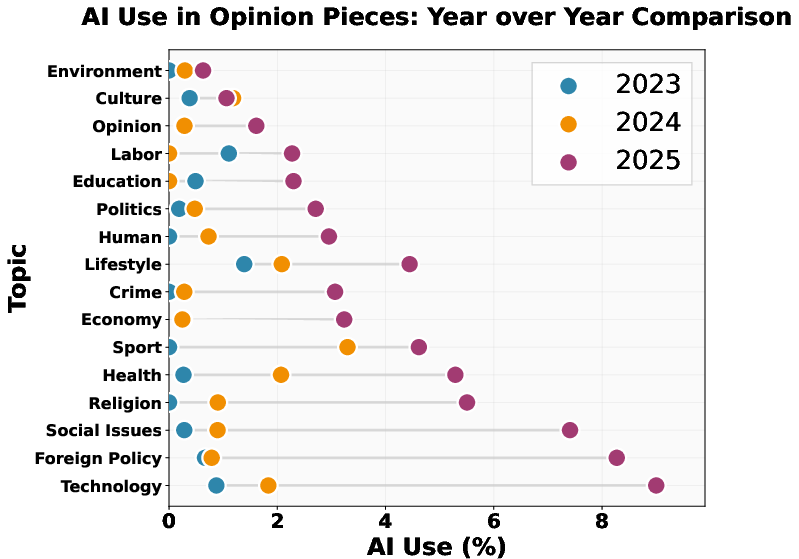

3) Certain topics use AI more

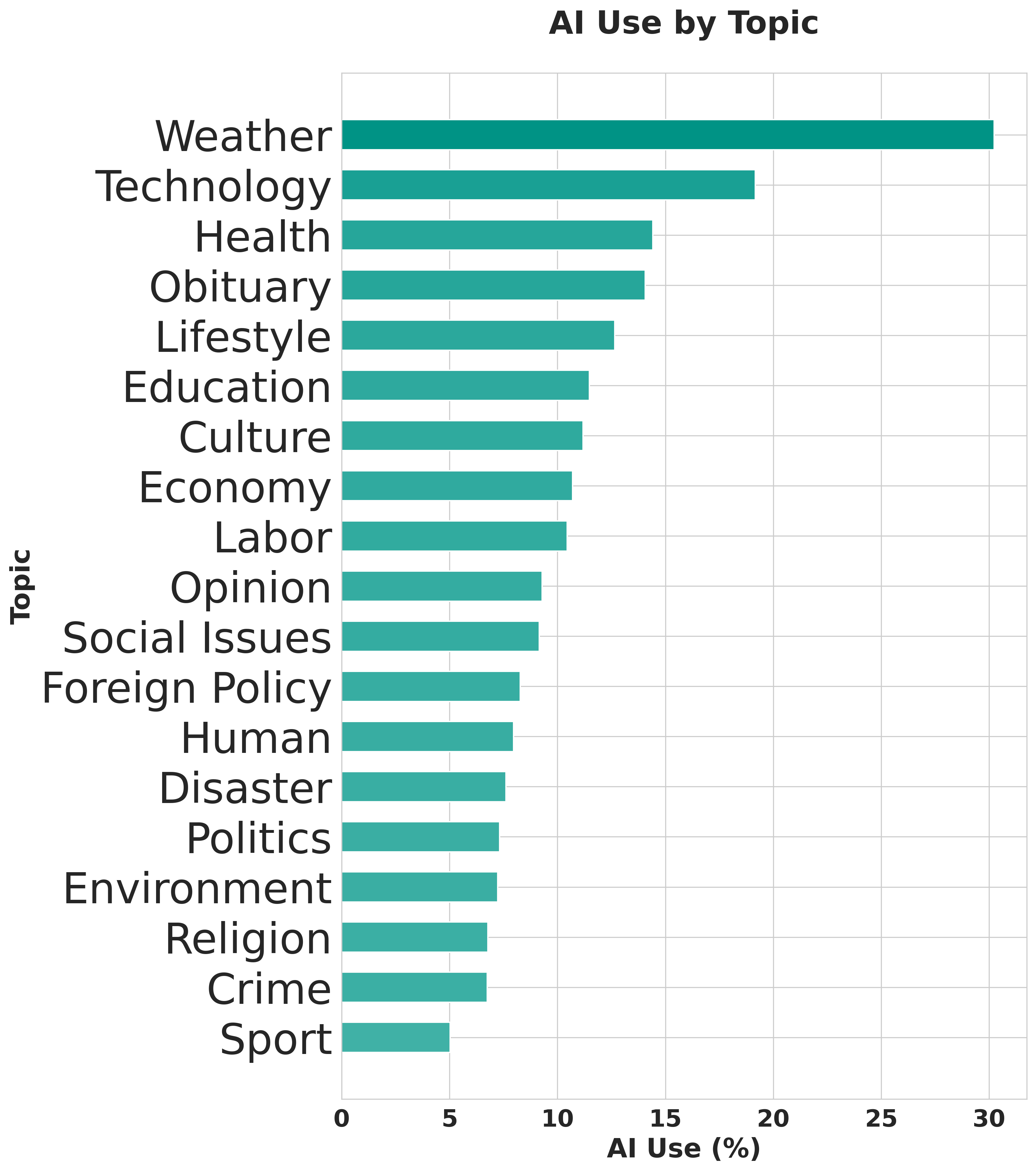

- Highest: Weather, science/technology, and health.

- Lower: Conflict/war, crime/justice, and religion.

Translation: Stories with routine data (like weather) are easier to auto-draft. Sensitive topics see less AI—probably because accuracy and tone matter more.

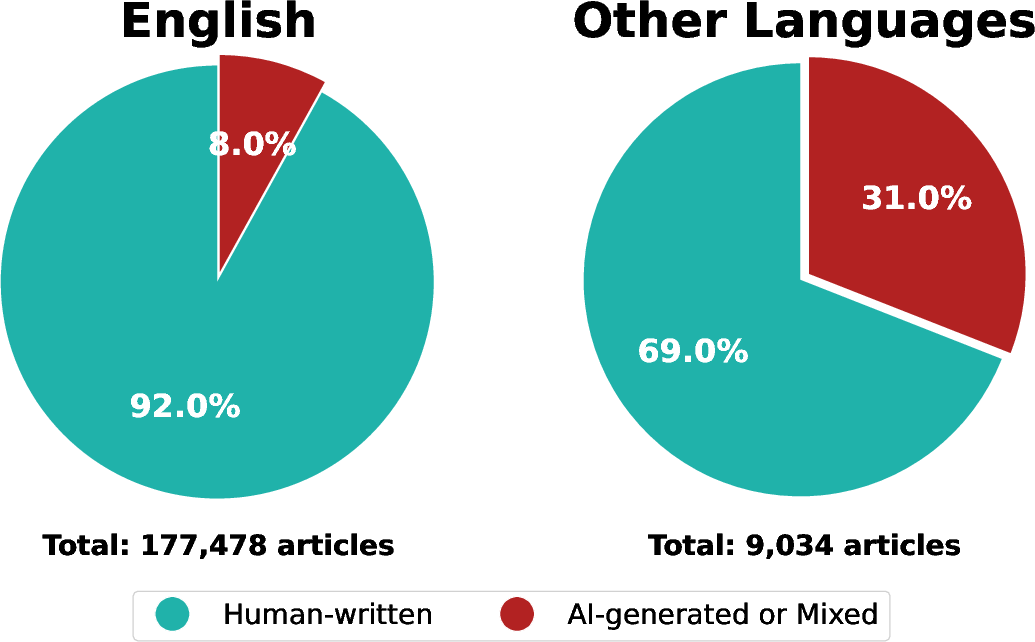

4) Non‑English articles used AI more often

- English articles: about 8% used AI.

- Other languages (often Spanish in U.S. outlets): about 31% used AI.

This suggests AI is often used for translation or fast drafting in bilingual coverage.

5) Opinion pieces at top newspapers are much more likely to use AI—and rising fast

- Opinion articles (NYT, WaPo, WSJ) were about 6.4× more likely to use AI than news articles from the same outlets in mid‑2025.

- From 2022 to 2025, AI use in these opinion sections jumped around 25×.

- Guest writers (politicians, CEOs, scientists) used AI far more than full‑time columnists.

Why it matters: Opinion pages can change minds. If AI helps write persuasive arguments, readers should know.

6) AI help is often “mixed,” not a full replacement

Many AI‑flagged pieces looked like teamwork: humans plus AI editing or drafting. The detector can’t always tell exactly how the AI was used (edit vs. draft), only that some parts look AI‑generated.

7) AI writing is rarely disclosed

In a manual check of 100 AI‑flagged articles from different newspapers, only 5 clearly told readers that AI helped. Most outlets didn’t have public AI policies, and even a few with bans still published AI‑flagged articles.

Why it matters: Readers can’t judge whether AI was used responsibly if they aren’t told it was used at all.
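A sample of 100 articles is small, so it helps to see how much uncertainty surrounds "5 out of 100." The sketch below computes a 95% Wilson score interval for that proportion. This is an illustration added here, not a calculation reported in the paper, and it assumes the 100 articles behave like a simple random sample, which may not hold.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is about 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# 5 disclosures among 100 manually checked AI-flagged articles.
low, high = wilson_interval(5, 100)
print(f"Plausible disclosure rate: {low:.1%} to {high:.1%}")
```

Under these assumptions the interval runs from roughly 2% to 11%, so even the optimistic end still means the large majority of AI-flagged articles carried no disclosure.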

8) Many AI‑flagged articles still contain real human quotes

In stories with long quotes, most AI‑flagged articles had at least one human‑written quote. That suggests reporters may gather real quotes and facts, then use AI to draft or polish the story around them.

9) AI‑written stories also show up in print

Some AI‑flagged pieces appeared in printed newspapers (not just online), reaching older readers who may find it harder to check sources.

What does this mean?

- Trust and transparency: People tend to trust news more when they know how it was made. If AI is used, readers should be told where and how (for example, “AI drafted this weather report; an editor checked it”).

- Set better rules: Newsrooms need clear, public policies on when AI is allowed, how it’s checked for accuracy, and how to tell readers about it.

- Use AI wisely: AI can be useful for routine tasks (like weather summaries or grammar). But on sensitive topics—or persuasive opinion pieces—extra care and clear disclosure are crucial.

- Keep watching: AI use is growing and changing quickly. Public dashboards and follow‑up studies can help keep newsrooms accountable and readers informed.

Important limits to remember

- Detectors can’t always say exactly how AI was used—only that parts look AI‑written.

- Even good detectors can make rare mistakes.

- Circulation numbers are historical proxies (mainly from around 2019), not current total audience size.

- Machine‑translated articles might look “AI‑ish” to detectors, but small checks suggested real human writing still reads as human after translation.

- The study covered a specific time window; patterns can change over time.

The big takeaway

AI is already a significant behind‑the‑scenes helper in American journalism—especially in smaller outlets, certain topics like weather and tech, and opinion pages at top papers. But readers are almost never told when AI is involved. Using AI isn’t automatically bad, yet being open about it is essential. Clear labels, solid editorial rules, and careful human oversight can help newsrooms use AI’s benefits without losing the public’s trust.

Knowledge Gaps

Below is a single, concrete list of the key knowledge gaps, limitations, and open questions that remain unresolved by the paper. These items are framed to be actionable for future researchers.

- Ground-truth validation is limited: only 100 AI-flagged articles were manually checked (and only those labeled AI), with no systematic verification of Human-labeled articles for false negatives or stratified checks across topics, owners, states, or languages.

- The role of AI in mixed-authorship (Mixed-labeled) texts is indeterminate: there is no method to estimate the proportion of AI-generated vs. human-written content or to differentiate editing, drafting, translation, and templating; segment-level provenance remains unresolved.

- Detector reliance and validation gaps: the audit hinges primarily on Pangram; cross-detector comparison with GPTZero is limited (small, binary subset; excludes Mixed-labeled articles), and there’s no thorough evaluation of detector recall, multilingual performance, short-text reliability, or robustness against evasion (paraphrasing/human post-editing).

- Machine translation confound: the higher AI share in non-English pieces may reflect translation pipelines; controlled experiments to separate human-written, human-translated, machine-translated, and AI-generated content are missing.

- Sampling biases in the “Recent” dataset: coverage is constrained to June–September 2025, RSS-dependent feeds, and “up to 50 articles” per outlet per crawl, potentially underrepresenting paywalled content, print-only articles, and seasonal/topic variation (e.g., weather-heavy months).

- Syndication and duplication are not de-duplicated: chain-wide replication or wire copy might inflate AI-use counts per owner/topic; provenance tracking to cluster identical/near-identical articles across outlets is absent.

- Ownership and circulation metadata are incomplete/outdated: owner mapping covers ~52% of outlets; circulation is a 2019 print proxy (self-reports; excludes digital audience), which may mischaracterize current scale and skew analyses of AI use by size.

- Topic classification reliability is moderate and uncalibrated by language: zero-shot Qwen3-8B labels align 77% with humans on a small English-only sample; cross-language accuracy, topic boundary errors, and IPTC taxonomy coverage (e.g., obituaries/Other) remain under-explored.

- Language identification method and accuracy are unspecified: there is no evaluation of language detection errors, which could misassign articles to “other languages” and affect AI-use estimates.

- No direct quality/factuality assessment: the paper does not quantify factual errors, hallucinations, bias, or corrections/retractions in AI vs. human articles; impact on reliability across topics and owners is unknown.

- Disclosure audit is narrow and non-representative: only 100 AI-labeled articles from unique outlets were reviewed; a systematic, large-scale, automated and manual audit of disclosures (including Mixed- and Human-labeled articles) and outlet policy pages is missing.

- Opinions dataset coverage and author labeling are under-specified: ProQuest-based collection may under-sample online-only content; author occupational categorization lacks a reproducible protocol; AI-use claims in op-eds are not corroborated by author/editor workflows.

- Longitudinal panel (the “AI (reporters)” dataset) is selection-biased: reporters were chosen for having multiple AI-flagged articles; results are not representative of broader journalist populations; causal drivers of adoption (policy changes, tooling, staffing) are untested.

- Quote analysis is length-limited and lacks source verification: only quotes >50 words were analyzed; fabrication in shorter quotes is unexamined; there is no cross-source validation (e.g., checking quotes against transcripts/press releases).

- Drivers of geographic variation are not investigated: the observed state-level AI use differences are not linked to economic pressures, staff size, CMS/tooling, chain policy environments, or local news ecosystem health.

- Non-text and non-generative AI use is out of scope: the audit does not measure AI in images, video, audio, graphics, transcription, translation, summarization, or research workflows, likely understating total AI integration in newsrooms.

- Section-level placement of AI within articles is unknown: there is no segmentation analysis of where AI appears (headline, lede, nut graf, body, conclusion), limiting actionable editorial guidance.

- Print prevalence is anecdotal: AI use in print is not quantified; print-only outlets are not systematically audited; an OCR-based print pipeline to measure AI use is absent.

- Uncertainty quantification is limited: many reported rates (by topic, owner, language, state) lack confidence intervals and sensitivity analyses (e.g., to detector thresholds, article length, and sampling schemes).

- Outlet authenticity and “AI newspapers” detection lacks criteria: identifying and validating fake or fully AI-generated outlets (e.g., Argonaut) requires a protocol for outlet legitimacy (staff pages, registries, WHOIS, IP ownership).

- Policy–practice link is untested: relationships between public AI policies (including explicit bans) and observed AI use are anecdotal; a systematic compliance measurement over time is needed.

- Reader impact is inferred, not measured: beyond Pew surveys, there’s no experimental evidence on how disclosures (content-, section-, or extent-specific) affect trust, comprehension, persuasion, or engagement.

- Tooling, models, and workflow provenance are unknown: the paper cannot identify which LLMs or CMS integrations were used, prompt practices, or editorial review steps; newsroom interviews or telemetry could fill this gap.

- Equity implications in bilingual communities are unexplored: higher AI use in Spanish-language outlets raises fairness and quality questions for bilingual audiences; differential resource constraints and editorial oversight are not examined.

- A taxonomy and labeling protocol for Mixed-labeled articles is absent: a standardized mixed-authorship rubric (edit vs. draft vs. translate vs. template) with human annotation at segment level would enable finer-grained analysis and guidance.

- Press-release and wire-copy confounds are not controlled: detectors may conflate formulaic PR/wire language with AI; source provenance tracing and PR/wire baselines are needed.

- Data sharing limits reproducibility: only links (not full texts) are released; article drift/paywalls can hinder replication; snapshots (e.g., text archives, hashes) under appropriate permissions would support durable auditing.

- Detector recall on known AI content isn’t estimated: using a ground-truth set of disclosed-AI articles to estimate Pangram’s recall for news text (by topic/language/length) is missing.

- Template-heavy domains (weather, obituaries, sports) may confound detection: controlled baselines to differentiate templating from AI generation are needed, especially within chains that standardize content.
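One gap in the list above is that syndicated or chain-wide copies are not de-duplicated. As a rough illustration of what such a check might look like, the sketch below flags near-identical articles using Jaccard similarity over word shingles. This is a generic technique chosen here for illustration, not something the paper implements; the function names, the shingle size `k=5`, and the `0.8` threshold are all assumptions.

```python
def shingles(text, k=5):
    """Set of overlapping k-word sequences from a lowercased text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets (1.0 when both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_near_duplicate(text_a, text_b, threshold=0.8, k=5):
    """True when the two texts share at least `threshold` of their shingles."""
    return jaccard(shingles(text_a, k), shingles(text_b, k)) >= threshold
```

Articles flagged as near-duplicates could be collapsed into one cluster before counting AI use per owner or topic, so that a single wire story republished across a chain is not counted many times.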
