- The paper finds that disclosing AI authorship significantly increases readers' immediate willingness to keep reading, while AI involvement does not lower perceived article quality.

- The study uses a between-subjects survey experiment with 599 participants to compare human-written, AI-assisted, and fully AI-generated articles.

- The findings suggest that while transparency leads to short-term engagement gains, it does not influence long-term willingness to consume AI-generated news.

The integration of AI into journalism has raised questions about its impact on perceived article quality and reader engagement. The paper "Willingness to Read AI-Generated News Is Not Driven by Their Perceived Quality" examines whether AI involvement changes how readers rate article quality, and whether disclosing that involvement affects their willingness to engage with AI-generated news.

Research Context and Objectives

AI's role in news production has expanded significantly, encompassing tasks from data aggregation to full text generation. This development poses democratic challenges, primarily concerning transparency and trust in the information disseminated by AI systems. The study addresses three research questions: how the perceived quality of AI-generated news compares with that of human-written news, whether disclosing AI involvement affects engagement, and whether it changes readers' willingness to read AI-generated news in the future.

Experimental Design and Methodology

The study employs a between-subjects survey experiment with 599 participants from German-speaking Switzerland. Each participant is assigned to one of three groups: articles written by journalists (control), articles rewritten with AI assistance, or fully AI-generated articles. The survey first measures quality perceptions without disclosing authorship, then reveals the AI involvement and measures engagement.
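To make the between-subjects structure concrete, here is a minimal Python sketch of placing each participant in exactly one of the three conditions. The summary does not describe how the authors allocated participants; the shuffle-and-cycle scheme, the condition labels, the seed, and the `assign_conditions` helper are all illustrative assumptions.

```python
import random

# Hypothetical condition labels; not taken from the paper.
CONDITIONS = ["human_written", "ai_assisted", "ai_generated"]


def assign_conditions(participant_ids, seed=42):
    """Assign each participant to exactly one condition (between-subjects).

    Shuffling the IDs and then cycling through the conditions keeps the
    group sizes within one participant of each other.
    """
    rng = random.Random(seed)  # seeded so the allocation is reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(ids)}


if __name__ == "__main__":
    assignment = assign_conditions(range(599))
    counts = {c: 0 for c in CONDITIONS}
    for condition in assignment.values():
        counts[condition] += 1
    print(counts)
```

With 599 participants this prints `{'human_written': 200, 'ai_assisted': 200, 'ai_generated': 199}`: which participant lands where depends on the shuffle, but the group sizes do not.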

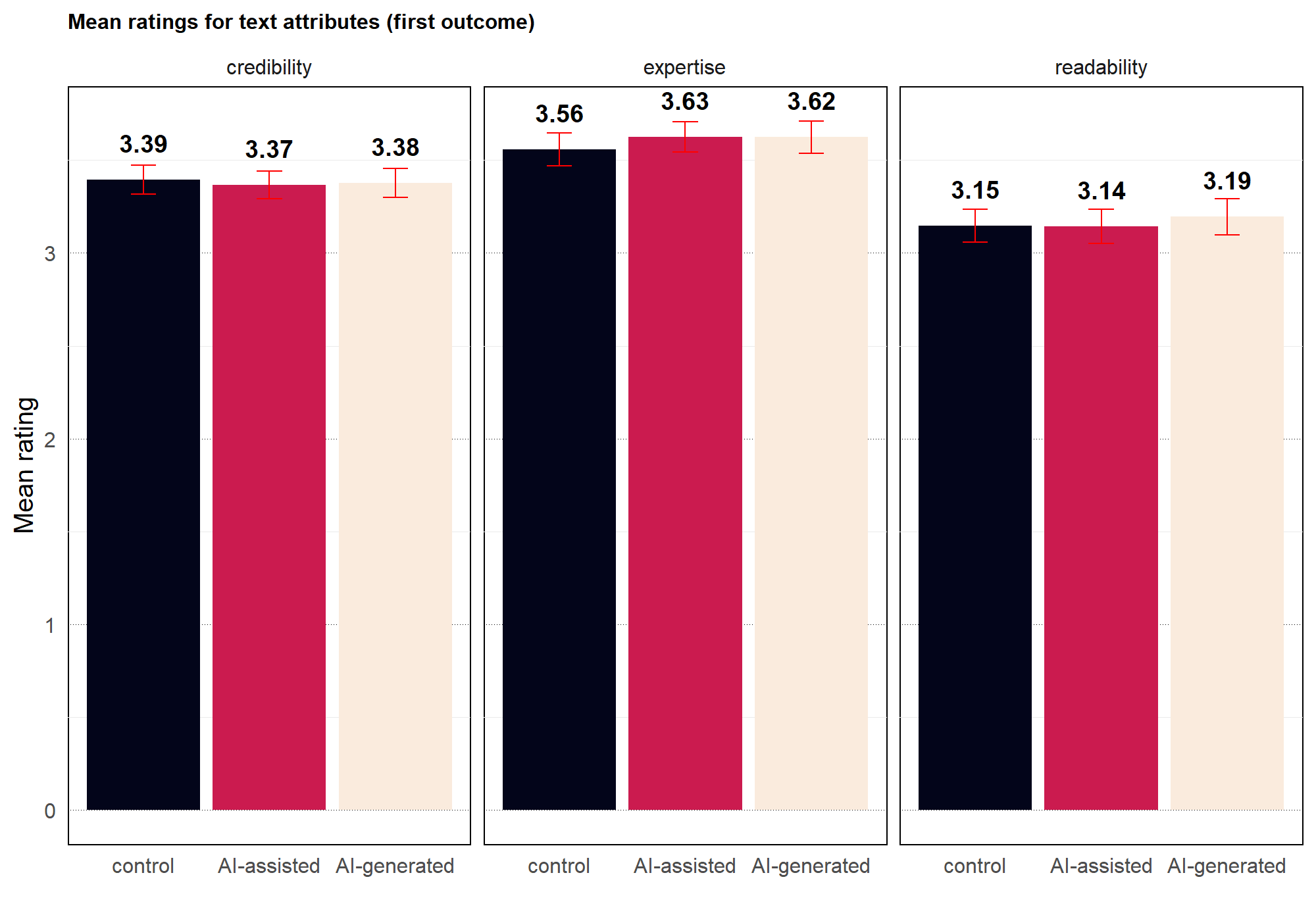

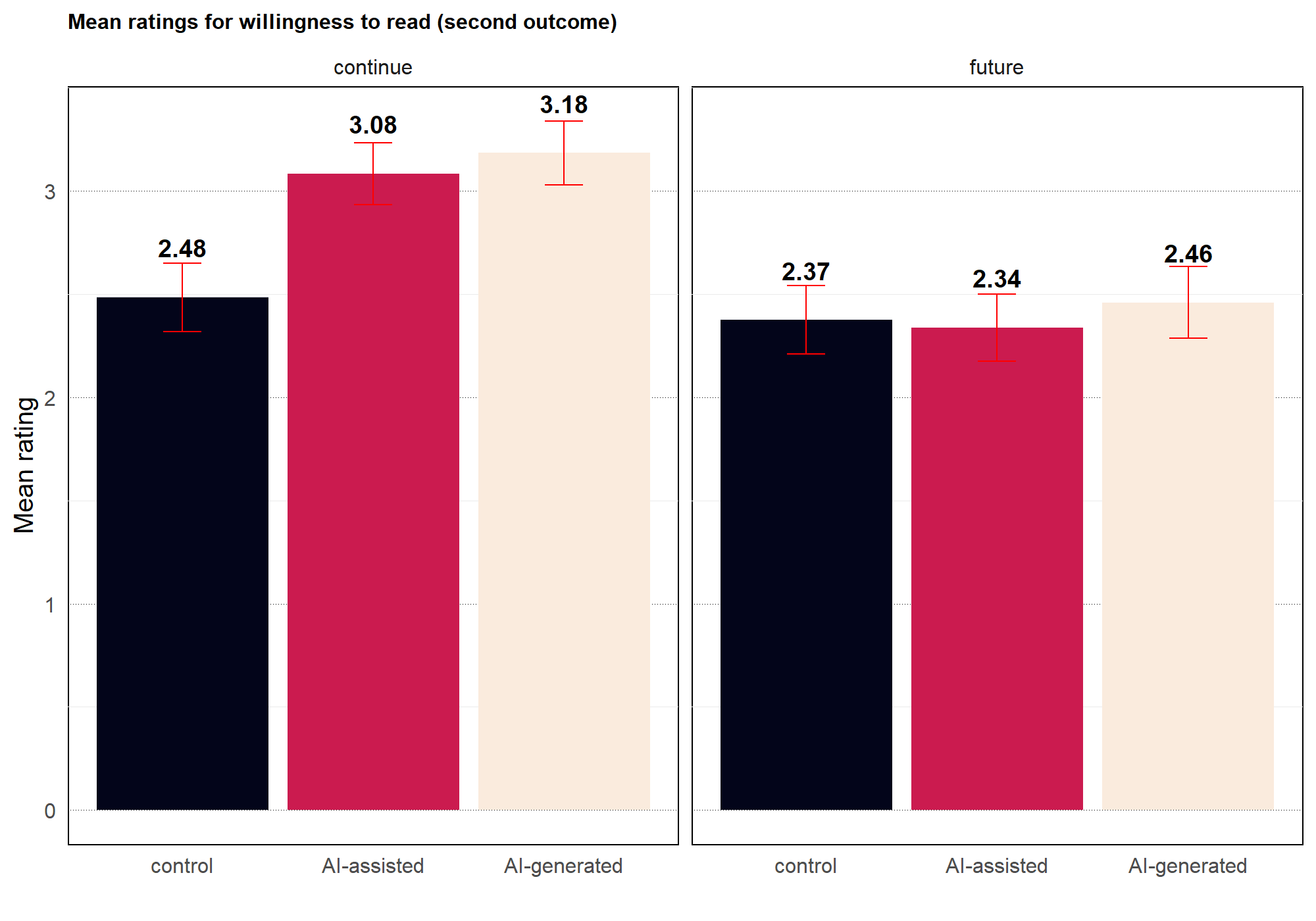

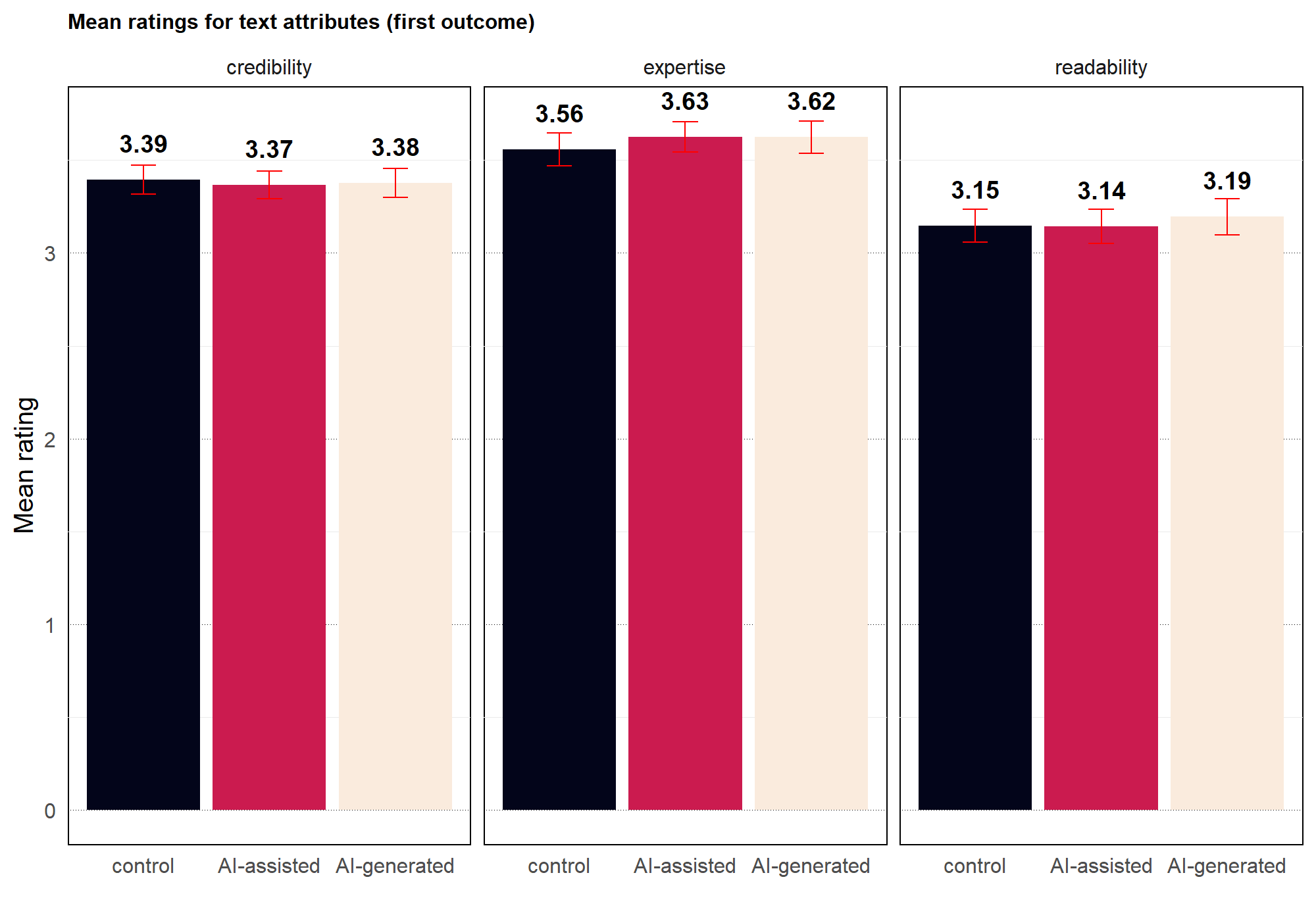

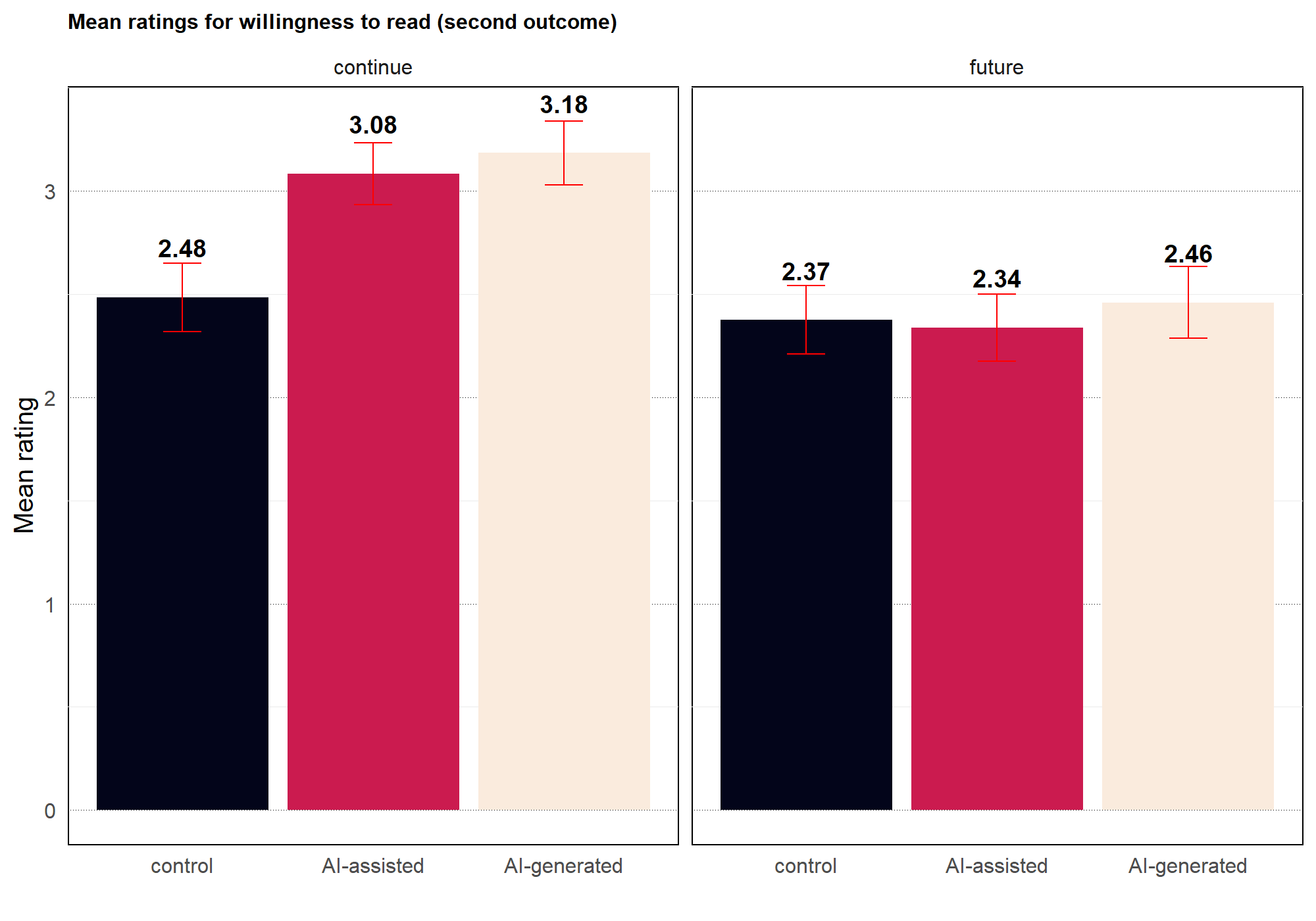

The study's figures compare quality and engagement ratings across the three groups.

Figure 1: Average ratings (first outcome: quality)

Figure 2: Average ratings (second outcome: willingness to read)

Results

The results show no significant differences in perceived quality across the three article types: readers rated articles similarly on expertise, readability, and credibility regardless of AI involvement. Because quality was rated before authorship was disclosed, this suggests that AI involvement does not, by itself, lower perceived quality.
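A "no significant difference across three groups" result is the kind of claim a one-way ANOVA tests. The summary does not say which test the authors ran, so the following is only a generic sketch: `one_way_anova_f` is a hypothetical helper, and the ratings below are invented, not data from the study.

```python
from statistics import mean


def one_way_anova_f(groups):
    """One-way ANOVA F statistic for several independent samples.

    F compares variation between group means with variation within groups;
    values near 0 mean the group means are close relative to the noise.
    Assumes at least two groups and non-zero within-group variation.
    """
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    # Between-group sum of squares: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of ratings around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Invented quality ratings for three hypothetical conditions with equal means.
human = [3, 4, 5]
ai_assisted = [2, 4, 6]
ai_generated = [4, 4, 4]
print(one_way_anova_f([human, ai_assisted, ai_generated]))  # prints 0.0
```

The sketch stops at the statistic; converting it into a p-value requires the F distribution, which `scipy.stats.f_oneway` handles in one call.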

However, disclosing AI involvement notably increased readers' willingness to keep reading. The effect was most pronounced in the AI-generated group, where willingness to continue reading rose significantly relative to the control group. This may point to curiosity-driven engagement when AI authorship is made transparent, and it challenges the assumption that AI involvement deters readers.
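Comparing the AI-generated group with the control group is a two-sample difference in means. The summary does not specify the authors' statistical model, so the following is a generic sketch using Welch's t-test, which does not assume equal variances in the two groups; `welch_t` is a hypothetical helper and the ratings are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(treatment, control):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    A positive t means the treatment group's mean is higher than the
    control group's. Each group needs at least two observations.
    """
    n1, n2 = len(treatment), len(control)
    # Squared standard error of each group mean (sample variance / n).
    se1_sq = variance(treatment) / n1
    se2_sq = variance(control) / n2
    t = (mean(treatment) - mean(control)) / sqrt(se1_sq + se2_sq)
    df = (se1_sq + se2_sq) ** 2 / (se1_sq ** 2 / (n1 - 1) + se2_sq ** 2 / (n2 - 1))
    return t, df


# Invented willingness-to-read ratings, not data from the study.
ai_generated = [4, 5, 6]
control = [1, 2, 3]
t, df = welch_t(ai_generated, control)
print(f"t = {t:.3f}, df = {df:.1f}")
```

With real data, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the same Welch test and also returns a p-value.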

Surprisingly, despite the short-term engagement boost, disclosure had no significant effect on participants' willingness to read AI-generated news in the future. This points to a gap between initial engagement and long-term acceptance.

Discussion and Implications

The findings challenge the assumption that AI hurts news consumption because readers perceive its output as lower in quality. Instead, transparency about AI's role appears to boost immediate engagement, although it does not translate into greater willingness to read AI-generated news in the future.

From a practical standpoint, news organizations may be able to increase immediate reader engagement by clearly disclosing AI's role, without lowering perceived quality. However, because long-term willingness to read AI-generated content did not change, this effect may be temporary and driven by novelty.

Future research should examine the psychological mechanisms behind the engagement gains attributable to AI disclosure. Longitudinal studies could also show whether repeated exposure to transparently labeled AI-generated content shifts long-term attitudes.

Conclusion

The research offers a nuanced perspective on AI in journalism, highlighting the value of transparency for immediate engagement. When undisclosed, AI involvement does not lower perceived news quality, and clearly communicating that involvement may bring immediate engagement benefits. Nonetheless, willingness to read AI-generated news in the future remains unaffected, raising questions about what limits longer-term shifts in acceptance. These findings underline the need for deliberate strategies when deploying AI in journalism, so that engagement gains do not come at the expense of trust.