- The paper proposes Executable Knowledge Graphs (xKG) that integrate research literature with runnable code to tackle replication challenges in AI.

- It details a modular, hierarchical graph construction method that curates literature and extracts techniques to generate executable code modules.

- Experimental results show performance gains, with up to a 10.90% improvement when paired with state-of-the-art agent frameworks.

Overview of Executable Knowledge Graphs for Replicating AI Research

This paper addresses the replication problem in AI research by introducing Executable Knowledge Graphs (xKG), a structured knowledge base that combines textual insights from scientific literature with executable code. The approach targets two weaknesses of existing methods: insufficient code execution capabilities, and retrieval that overlooks practical implementation signals.

Introduction

AI research generates numerous publications annually, but replicating these studies remains a challenge due to incomplete code repositories and scattered background information. Existing methods rely heavily on retrieval-augmented generation (RAG), which often lacks the insight needed for effective implementation. Executable Knowledge Graphs are proposed as a solution, offering a structured knowledge representation that integrates detailed code snippets and technical insights extracted from literature.

Methodology

Design of xKG

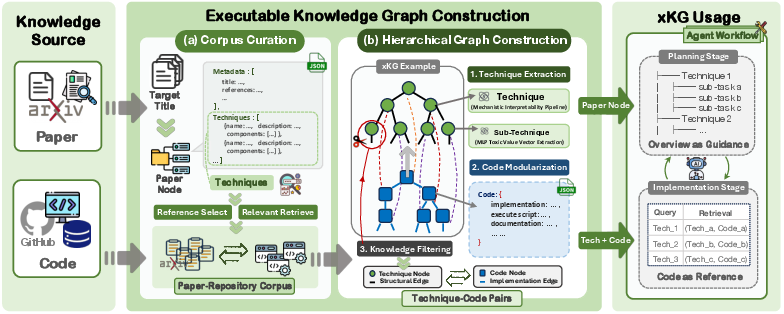

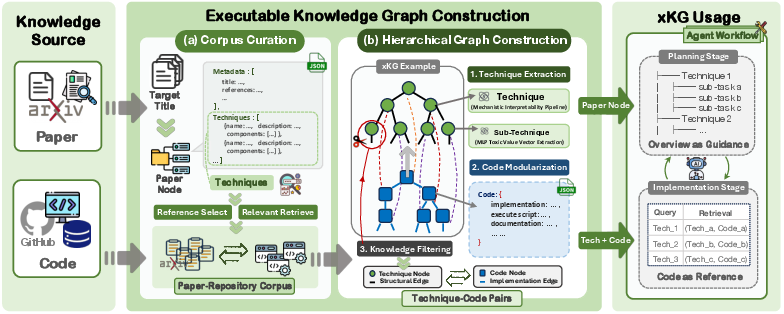

The Executable Knowledge Graph (xKG) is a modular, hierarchical graph designed to facilitate AI research replication. It has three kinds of nodes: papers, techniques, and executable code modules. Structural and implementation edges link these nodes, so the graph captures both theoretical concepts and their corresponding runnable code.

Figure 1: The structure of xKG, integrating papers, techniques, and executable components.
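The node and edge types described above can be sketched as a small data structure. This is a minimal illustration, not the authors' implementation: the class names (`PaperNode`, `TechniqueNode`, `CodeNode`), their fields, and the helper `executable_techniques` are hypothetical. It also assumes that structural edges connect a paper to its techniques and a technique to its sub-techniques, and that an implementation edge connects a technique to one code module.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class CodeNode:
    """Hypothetical code node: a runnable module implementing one technique."""
    name: str
    source: str


@dataclass
class TechniqueNode:
    """Hypothetical technique node extracted from a paper."""
    name: str
    description: str
    # Structural edges (assumed): sub-techniques nested under this technique.
    children: List["TechniqueNode"] = field(default_factory=list)
    # Implementation edge (assumed): optional link to a code module.
    code: Optional[CodeNode] = None


@dataclass
class PaperNode:
    """Hypothetical paper node: the root of one paper's technique hierarchy."""
    title: str
    techniques: List[TechniqueNode] = field(default_factory=list)


def iter_techniques(paper: PaperNode) -> Iterator[TechniqueNode]:
    """Walk the technique hierarchy depth-first, parents before children."""
    stack = list(reversed(paper.techniques))
    while stack:
        node = stack.pop()
        yield node
        stack.extend(reversed(node.children))


def executable_techniques(paper: PaperNode) -> List[str]:
    """Names of techniques that have an implementation edge to code."""
    return [t.name for t in iter_techniques(paper) if t.code is not None]


# Toy example with made-up content.
loss = TechniqueNode(
    name="preference loss",
    description="Loss term used during training.",
    code=CodeNode("preference_loss", "def preference_loss(a, b):\n    return a - b\n"),
)
training = TechniqueNode(
    name="training procedure",
    description="Overall optimisation loop.",
    children=[loss],
)
paper = PaperNode(title="Example paper", techniques=[training])
print(executable_techniques(paper))
```

Keeping code on a separate node type, rather than as a field on the paper, is what lets a technique be retrieved together with the specific module that implements it.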

Graph Construction

xKG is constructed in three steps:

- Corpus Curation: Automating the identification of relevant literature and corresponding code repositories using o4-mini.

- Technique Extraction: Extracting academic concepts from papers and linking them to executable components through RAG methods.

- Code Modularization: Synthesizing code snippets with techniques to produce executable modules, checked for executability.

The final xKG comprises 42 curated papers encompassing 591,145 tokens, enabling scalable knowledge integration across various research domains.
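The summary does not say how code modules are "checked for executability". One plausible reading is a smoke test that runs each candidate module in a fresh interpreter and keeps it only if it exits cleanly. The sketch below illustrates that idea under this assumption; the function name `is_executable` and the timeout value are hypothetical and are not taken from the paper.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def is_executable(source: str, timeout_s: float = 30.0) -> bool:
    """Return True if `source` runs to completion with exit code 0.

    Assumption: a module counts as "executable" when it runs without
    raising an exception or exceeding the timeout. The actual criterion
    used to build xKG may differ.
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "module.py"
        path.write_text(source, encoding="utf-8")
        try:
            # Run in a separate interpreter so a crash cannot affect the caller.
            result = subprocess.run(
                [sys.executable, str(path)],
                capture_output=True,
                timeout=timeout_s,
                cwd=tmp,
            )
        except subprocess.TimeoutExpired:
            return False
        return result.returncode == 0


# Toy candidates: one runs cleanly, one raises at import time.
good_module = "def scale(x, k=2):\n    return x * k\n\nassert scale(3) == 6\n"
bad_module = "raise ValueError('missing dependency')\n"

verified = [src for src in (good_module, bad_module) if is_executable(src)]
print(len(verified))
```

A subprocess adds start-up cost per module, but it isolates failures and allows a hard timeout, which matters when the code under test is generated rather than hand-written.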

Experimental Results

Evaluation

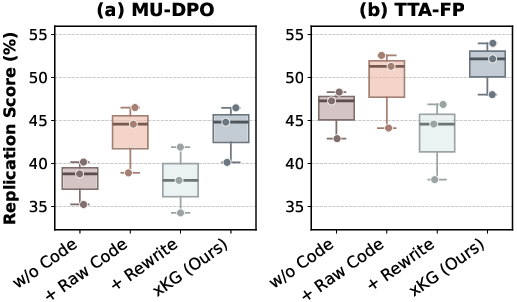

The efficacy of xKG is evaluated on the PaperBench Code-Dev benchmark by integrating it into multiple agent frameworks. The largest reported gain is 10.90%, obtained when xKG is integrated with PaperCoder and o3-mini.

A detailed analysis reveals xKG's benefits across multiple facets.

Case Study

A case study on the MU-DPO paper shows how xKG improves an agent's ability to plan and implement complex research tasks by supplying modular, verified code components.

Figure 3: MU-DPO case study showing enhanced implementation through xKG integration.

Conclusion

Executable Knowledge Graphs offer a framework for replicating AI research that bridges the gap between theoretical concepts and executable implementations. The modularity and reusability of xKG make it a practical aid for research replication. The work points toward scaling structured knowledge in AI, reducing noise in current retrieval methods, and providing streamlined support for emerging research domains. Future work will focus on extending xKG to broader research areas and refining its applicability across diverse scientific fields.