Customer-R1: Personalized Simulation of Human Behaviors via RL-based LLM Agent in Online Shopping (2510.07230v1)

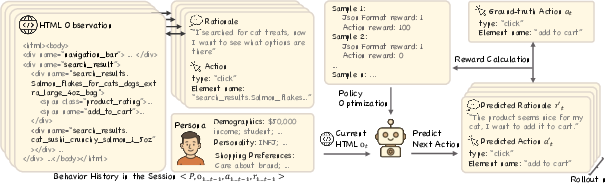

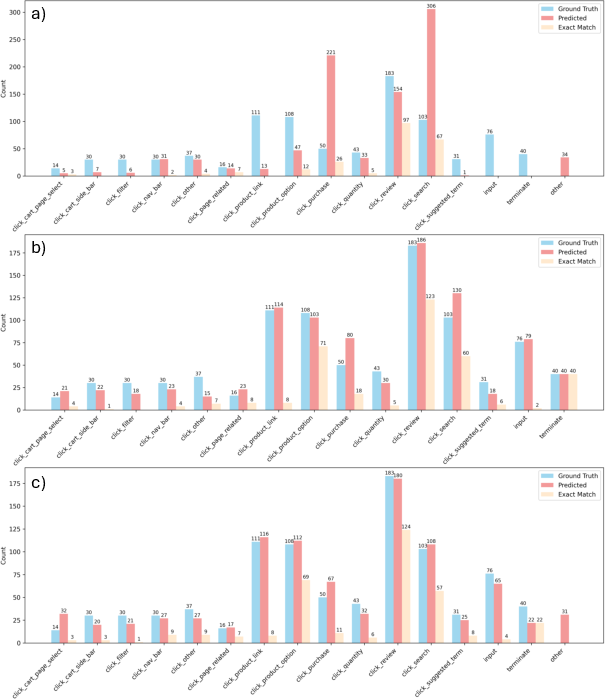

Abstract: Simulating step-wise human behavior with LLMs has become an emerging research direction, enabling applications in various practical domains. While prior methods, including prompting, supervised fine-tuning (SFT), and reinforcement learning (RL), have shown promise in modeling step-wise behavior, they primarily learn a population-level policy without conditioning on a user's persona, yielding generic rather than personalized simulations. In this work, we pose a critical question: how can LLM agents better simulate personalized user behavior? We introduce Customer-R1, an RL-based method for personalized, step-wise user behavior simulation in online shopping environments. Our policy is conditioned on an explicit persona, and we optimize next-step rationale and action generation via action correctness reward signals. Experiments on the OPeRA dataset demonstrate that Customer-R1 not only significantly outperforms prompting and SFT-based baselines in next-action prediction tasks, but also better matches users' action distribution, indicating higher fidelity in personalized behavior simulation.
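The abstract's "action correctness reward" can be made concrete with a minimal sketch. It makes three assumptions. First, the model emits a single JSON action object, whereas the real output also carries a rationale. Second, all names (`parse_action`, `reward`, `TYPE_WEIGHTS`) are hypothetical. Third, the reward combines a format reward for valid JSON with a difficulty-weighted exact-match reward, which is the kind of reward the knowledge-gaps list below describes. Which action type receives which weight is an illustrative guess, not the authors' configuration.

```python
import json

# Illustrative difficulty-aware weights. The gaps list cites values such as
# 2000/1000/10/1, but the mapping of weights to action types here is an
# ASSUMPTION, not the authors' configuration.
TYPE_WEIGHTS = {"terminate": 2000.0, "input": 1000.0, "click": 10.0}
DEFAULT_WEIGHT = 1.0  # any action type not listed above


def parse_action(text):
    """Return the action dict if `text` is a JSON object with a 'type' key, else None."""
    try:
        obj = json.loads(text)
    except json.JSONDecodeError:
        return None
    if isinstance(obj, dict) and "type" in obj:
        return obj
    return None


def reward(model_output, gold):
    """Format reward (+1 for a valid JSON action) plus a weighted exact-match reward."""
    action = parse_action(model_output)
    if action is None:
        return 0.0  # malformed output: no format reward and no action reward
    format_reward = 1.0
    # Exact match on every field of the gold action (type, target element, typed text, ...).
    weight = TYPE_WEIGHTS.get(gold["type"], DEFAULT_WEIGHT)
    return format_reward + (weight if action == gold else 0.0)
```

For example, with a gold action `{"type": "click", "name": "add_to_cart"}`, a matching prediction scores 11.0 and a valid but different click scores 1.0. The strict `action == gold` comparison is exactly what the reward-design and equivalent-actions items below criticize. A semantic-equivalence check would replace that line.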

Knowledge gaps, limitations, and open questions

Below is a single, actionable list of what remains missing, uncertain, or unexplored in the paper.

- Generalization beyond Amazon/OPeRA: The approach is only evaluated on amazon.com-like HTML from the OPeRA dataset. It is unknown how Customer-R1 performs on other e-commerce sites, domains (e.g., travel, groceries), languages, or non-HTML UIs (apps/React Native), and whether the policy transfers across different page structures and interaction patterns.

- Dataset scale and diversity: The public dataset is small (527 sessions, 49 users) and highly imbalanced (86% clicks). Future work should assess scalability to larger, more diverse cohorts, and quantify performance across demographics, shopping intents, and interface designs.

- Personalization validity and metrics: While the paper claims “better matches users’ action distribution,” there is no standardized per-user personalization metric (e.g., per-user KL/EMD to ground-truth distributions, user-level hit rate, or calibration error). A formal, user-centric evaluation framework is needed to verify personalization fidelity.

- Unseen-user generalization and cold-start: The model is conditioned on personas, but it is unclear how well it performs on completely new users (no historical data) or with sparse/no persona information. Experiments with leave-one-user-out and cold-start scenarios are missing.

- Persona quality, reliability, and dynamics: Personas are derived from surveys/interviews and injected as static text. It is unknown how noisy, outdated, or conflicting persona attributes affect behavior. Methods for dynamically updating personas from observed actions and resolving persona–task conflicts (formally, not via ad hoc “page precedence”) are absent.

- Fairness and ethics: The paper does not analyze whether persona-conditioned policies introduce bias or stereotyping across demographics/personality traits. There is no fairness auditing, differential performance analysis, or privacy risk assessment for persona use.

- Rationale augmentation effects: Most rationales are synthetic (generated with claude-3.5-sonnet). The impact of synthetic versus human rationales on personalization and action accuracy is not isolated. There is no evaluation of rationale quality or a reward signal for rationale faithfulness/consistency with actions.

- Reward design limitations: Rewards focus on exact action match and JSON format, ignoring near-equivalent choices (e.g., alternative but reasonable clicks), effort/cost, satisfaction, risk, or time. This may penalize plausible actions and fails to capture user-centric objectives. Experiments with preference-based, inverse RL, or multi-objective reward formulations are missing.

- Hand-tuned reward weights and stability: The difficulty-aware weights (e.g., 2000/1000/10/1) are set manually with minimal sensitivity analysis. There is no systematic study of stability, robustness, or sample efficiency under different weightings or regularization (entropy bonuses, type-specific KL, or distribution constraints).

- Reward hacking and coverage constraints: The RL-only policy collapses toward frequent actions (clicks, purchase, review, search). Methods to prevent collapse (e.g., constraints ensuring per-type coverage, curriculum learning, balanced sampling, or structured policies) are not explored.

- Format reward utility: The paper includes a format reward for JSON validity but does not isolate its effect. Ablations to quantify how much the format reward contributes to performance or stability are missing.

- Long-horizon credit assignment: The setup optimizes next-step actions without explicit long-horizon returns (beyond purchase vs. terminate). It remains unclear how to incorporate multi-step objectives (e.g., minimizing search effort, optimizing cart quality, or session-level utility) and whether rationale actually improves credit assignment.

- Termination modeling: The RL-only model fails to predict termination, and even SFT+RL shows difficulty on rare actions. Specialized termination modeling (hazard models, cost-to-go estimation, or tailored rewards/gating) remains unexplored.

- Action schema coverage: The action taxonomy includes only input, click, and terminate; common behaviors such as scrolling, hovering, going back, navigation, filter toggling, or multi-step macros are not modeled. Extending the schema and measuring the resulting gains is an open task.

- State representation: The model ingests raw HTML sequences with “name” attributes added. The generality of this approach to uninstrumented sites, dynamic DOMs, or noisy HTML is untested. Structured state representations (DOM graphs, accessibility trees, element embeddings, or retrieval-based context) should be compared.

- Context management and memory: The truncation strategy drops early HTML while keeping actions/rationales. The impact of different memory mechanisms (summarization, retrieval, episodic memory, or key-value caches) on personalization and accuracy is not evaluated.

- Evaluation breadth: Metrics emphasize exact match and F1; sequence-level metrics (e.g., per-session utility, action prefix accuracy, cumulative regret), human believability ratings, and statistical significance across multiple training runs are lacking. Run-to-run variance and confidence intervals are not reported.

- Model family and scaling: Results focus on Qwen2.5-7B (and a weak 3B baseline). Scaling laws (parameter/context), comparisons with other families (Llama, GPT, Mistral), and the role of larger reasoning models are open questions.

- Robustness to UI/domain shift: The approach has not been stress-tested against UI/layout changes, A/B variations, missing elements, or content noise. Robustness strategies (policy distillation, domain randomization, or test-time adaptation) are not explored.

- Online/interactive validation: The work is entirely offline. There are no live user studies, A/B tests, or real-time evaluations to verify whether simulated behaviors improve UX testing or recommender pipelines.

- Equivalent actions and semantic correctness: Exact string matches can misclassify semantically equivalent actions (e.g., clicking different but functionally equivalent buttons). A semantic equivalence evaluation and reward design to handle near-equivalents is missing.

- Persona–action attribution: The paper shows aggregated improvements with persona but does not dissect which persona dimensions (e.g., price sensitivity vs. brand loyalty) actually drive decision differences. Causal attribution or controlled studies per trait are absent.

- Misalignment and noise robustness: Beyond shuffled personas, there are no experiments on partial, noisy, or adversarial persona inputs, nor on calibration under persona uncertainty.

- Environmental/compute constraints: Training requires large GPU clusters (A100 80GB, P4de), but there is no analysis of compute cost, energy, or strategies for lightweight deployment (parameter-efficient RL/SFT, LoRA, distillation).

- Data labeling and subtype mapping: Fine-grained click subtypes are inferred from element names; the accuracy of this mapping and its sensitivity to annotation errors are not validated.

- Security and misuse: Simulated user agents may be misused (e.g., botting, market manipulation). Safety controls, detection mechanisms, and policy constraints are not discussed.
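The personalization-metrics item above proposes per-user KL divergence to the ground-truth action distribution. The sketch below shows how that could be computed, under these assumptions:

- Each user's ground-truth and predicted next actions are available as lists of action-type strings drawn from `types`.
- The function names are hypothetical.
- The additive smoothing constant `eps` is an illustrative choice, not something the paper specifies. It keeps the divergence finite when a type never appears.

```python
import math
from collections import Counter


def action_distribution(actions, types, eps=1e-6):
    """Additively smoothed categorical distribution over `types`.

    Assumes every element of `actions` is one of `types`.
    """
    counts = Counter(actions)
    total = len(actions) + eps * len(types)
    return {t: (counts[t] + eps) / total for t in types}


def kl_divergence(p, q):
    """KL(p || q) for two distributions defined over the same keys."""
    return sum(p[t] * math.log(p[t] / q[t]) for t in p)


def per_user_kl(gold_by_user, pred_by_user, types):
    """Map each user to KL(ground truth || prediction) over action types."""
    scores = {}
    for user, gold_actions in gold_by_user.items():
        p = action_distribution(gold_actions, types)
        # A user with no predictions falls back to a uniform distribution.
        q = action_distribution(pred_by_user.get(user, []), types)
        scores[user] = kl_divergence(p, q)
    return scores
```

Reporting the mean and spread of these per-user scores, rather than one pooled distribution, would expose users whose behavior the simulator fails to personalize.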
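The unseen-user item above calls for leave-one-user-out experiments. A minimal sketch of that split follows. It assumes each session is a dict with a `user_id` key, which is a hypothetical schema. Each fold holds out every session of one user, so the held-out user is never seen during training.

```python
from collections import defaultdict


def leave_one_user_out(sessions):
    """Yield (held_out_user, train_sessions, test_sessions), one fold per user.

    `sessions` is a list of dicts carrying a 'user_id' key (an assumed schema).
    """
    by_user = defaultdict(list)
    for s in sessions:
        by_user[s["user_id"]].append(s)
    for user in sorted(by_user):  # deterministic fold order
        test = by_user[user]
        train = [s for s in sessions if s["user_id"] != user]
        yield user, train, test
```

For array-style data, scikit-learn's `LeaveOneGroupOut` in `sklearn.model_selection` produces the same grouping when the user IDs are passed as `groups`.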
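The context-management item above describes truncation that drops early HTML while keeping actions and rationales. The sketch below illustrates that idea and is not the paper's implementation. It assumes each step is a dict with `html`, `rationale`, and `action` strings, which is a hypothetical schema. It also uses whitespace token counts as a stand-in for a real tokenizer.

```python
def truncate_context(steps, max_tokens, count_tokens=lambda s: len(s.split())):
    """Blank out HTML from the oldest steps until the history fits `max_tokens`.

    Rationales and actions are always kept, and the current (last) step keeps its
    HTML even if the budget cannot be met, since the model must see the current page.
    """
    def cost(step):
        total = count_tokens(step["rationale"]) + count_tokens(step["action"])
        if step["html"] is not None:
            total += count_tokens(step["html"])
        return total

    kept = [dict(s) for s in steps]  # shallow copies, so the caller's steps are untouched
    i = 0
    while sum(cost(s) for s in kept) > max_tokens and i < len(kept) - 1:
        kept[i]["html"] = None  # drop the oldest remaining page snapshot
        i += 1
    return kept
```

Swapping the dropped HTML for a short summary or a retrieved excerpt would turn this into one of the alternative memory mechanisms the item lists.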
