Character Mixing for Video Generation (2510.05093v1)

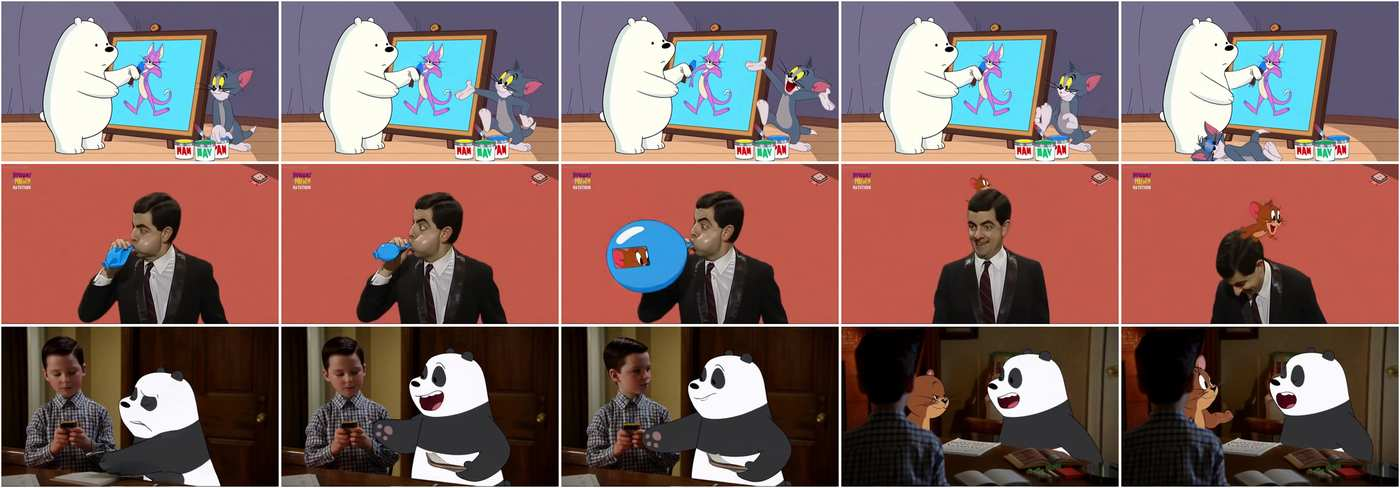

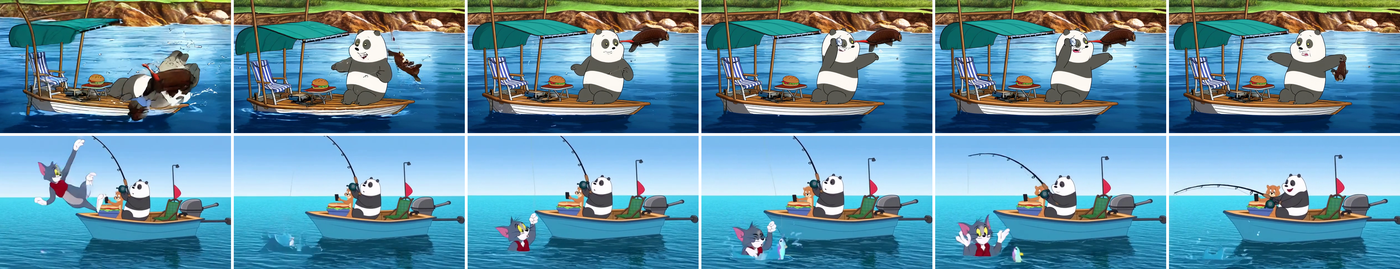

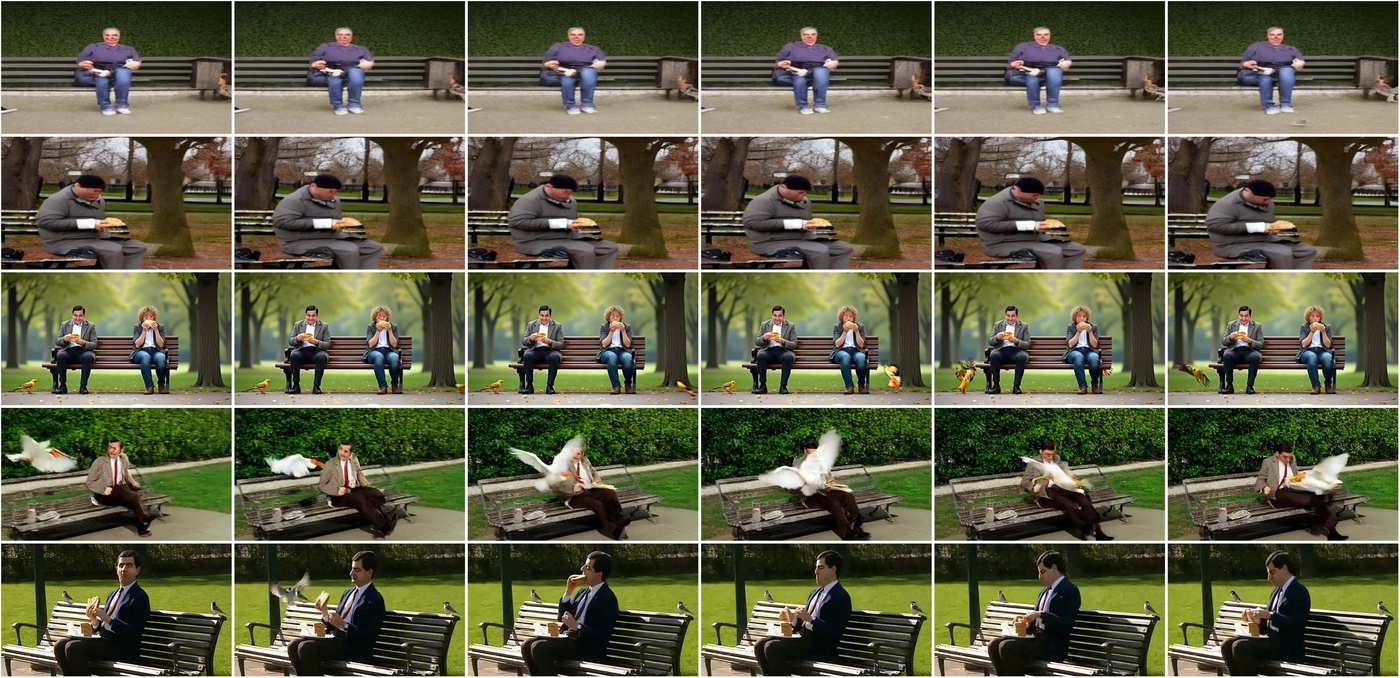

Abstract: Imagine Mr. Bean stepping into Tom and Jerry--can we generate videos where characters interact naturally across different worlds? We study inter-character interaction in text-to-video generation, where the key challenge is to preserve each character's identity and behaviors while enabling coherent cross-context interaction. This is difficult because characters may never have coexisted and because mixing styles often causes style delusion, where realistic characters appear cartoonish or vice versa. We introduce a framework that tackles these issues with Cross-Character Embedding (CCE), which learns identity and behavioral logic across multimodal sources, and Cross-Character Augmentation (CCA), which enriches training with synthetic co-existence and mixed-style data. Together, these techniques allow natural interactions between previously uncoexistent characters without losing stylistic fidelity. Experiments on a curated benchmark of cartoons and live-action series with 10 characters show clear improvements in identity preservation, interaction quality, and robustness to style delusion, enabling new forms of generative storytelling.Additional results and videos are available on our project page: https://tingtingliao.github.io/mimix/.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Overview

This paper is about making AI create short videos where famous characters from different shows meet and interact naturally. Think Tom and Jerry teaming up with Mr. Bean, or Ice Bear from We Bare Bears chatting with Young Sheldon. The big idea is to keep each character looking and acting like themselves, even when they’re mixed together in a single scene, and even if they come from totally different styles (cartoon vs. real-life).

Objectives

Here are the main questions the paper tries to answer:

- How can we teach an AI to remember who a character is (their look) and how they move or behave (their personality)?

- How can we make characters who never appeared together interact in believable ways?

- How can we mix different visual styles (like cartoons and real people) without making them look weird or “blended” into the wrong style?

Methods and Approach

To solve these problems, the authors use two key ideas. Below, technical words are explained with simple analogies.

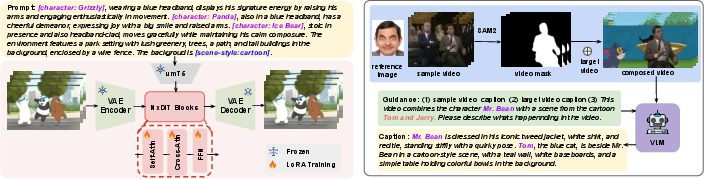

1) Cross-Character Embedding (CCE)

- What it is: An “embedding” is like a compact label or code that the AI uses to remember a concept. Here, the AI learns a separate “code” for each character’s identity and behavior.

- How it works: They write special captions for each training video clip using a format like:

[Character: Tom], chases Jerry.[Character: Jerry], hides behind a vase.[scene-style: cartoon] - Why this helps: These labels act like name tags plus action notes. They tell the AI exactly which character is doing what, and what style the scene is (cartoon or realistic). This lets the AI separate “who” from “what” and “how,” so later it can combine characters who never met before and still keep their behavior and look accurate.

- Extra details:

- They use GPT-4o to write these detailed captions, based on visual frames, transcripts, and show metadata.

- They fine-tune a powerful text-to-video model (Wan 2.1) using a light add-on called LoRA. LoRA is like adding small “clip-on” learning pieces so the model can learn new things without changing its whole brain.

2) Cross-Character Augmentation (CCA)

- The problem: When the AI trains on both cartoons and real-life shows, it sometimes gets confused and makes Mr. Bean look like a cartoon or Ice Bear look too realistic. The authors call this “style delusion.”

- The solution: They create “synthetic” training clips by cutting out a character from their original show and placing them into a background from a different style. For example, Mr. Bean in a Tom and Jerry kitchen, or Panda on a real park bench.

- Why it works: Even if these are imperfect “copy–paste” collages, they teach the AI that different styles can coexist without changing the character’s original look. They also add a style tag in the caption:

[scene-style: cartoon]or[scene-style: realistic], to make style control clear. - Extra details:

- They use tools like SAM2 to segment characters.

- They carefully filter these synthetic clips to keep identity accurate and the scenes relevant.

- Important finding: A small amount of this synthetic data helps a lot; too much can make the videos less realistic.

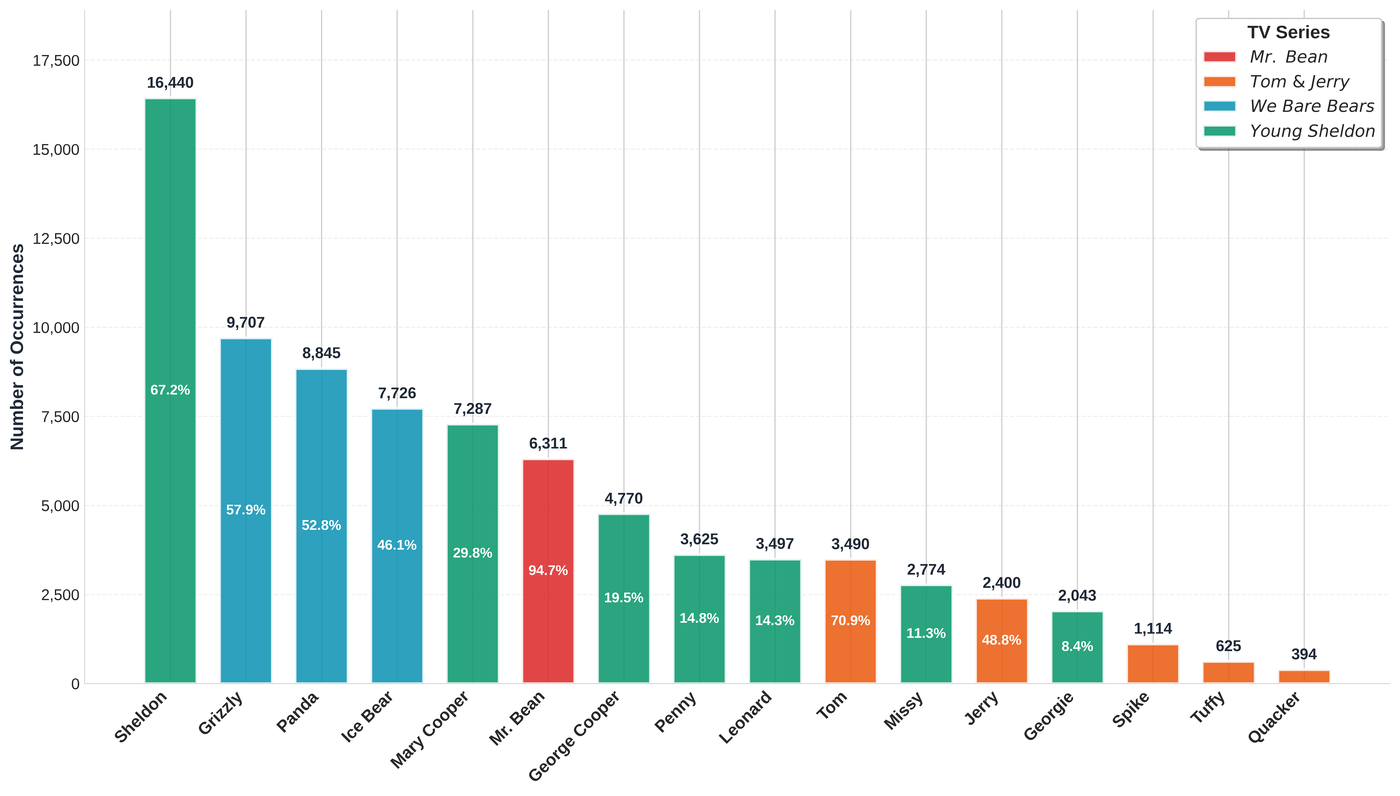

The Dataset

- They collected about 81 hours of videos (52,000 clips) from:

- Cartoons: Tom and Jerry, We Bare Bears

- Live-action shows: Mr. Bean, Young Sheldon

- Each clip is around 5 seconds and includes character names and style tags in the captions, so the model learns both identity and behavior.

How the Model Learns

- The core video generator is a diffusion model (think: starting from noisy frames and gradually “cleaning” them until a clear video appears).

- LoRA fine-tuning teaches the model about these specific characters without overfitting.

- The special captions and the synthetic mixed-style clips guide the model to keep identity, behavior, and style consistent.

Main Findings and Why They Matter

- Better identity preservation: The model keeps characters recognizable (Tom looks like Tom; Ice Bear looks like Ice Bear).

- More faithful motion and behavior: Characters move and act like they do in their original shows (Jerry’s quick scurrying, Mr. Bean’s clumsy antics, Sheldon’s lecturing).

- Natural interactions: Characters who never met (like Tom and Sheldon) can share scenes and react to each other in believable ways.

- Stronger style control: Adding

[scene-style]tags and a small amount of synthetic mixed-style data reduces “style delusion,” so cartoons stay cartoonish and real people stay realistic. - Caption structure matters: Using both

[character]and[scene-style]tags in captions leads to the most reliable results.

These improvements were shown with comparisons against other video generation systems and through both automatic metrics (for motion, quality, aesthetics) and AI-based judgments for identity, behavior, style, and interaction quality.

Implications and Impact

- Generative storytelling: Fans and creators could make fun crossovers—Mr. Bean in Tom and Jerry’s world or Panda collaborating with Mary Cooper—without losing each character’s charm.

- Content creation: Studios, educators, and game designers could quickly prototype scenes mixing characters from different universes and styles.

- Better control: The method shows how to keep characters distinct and authentic when mixing complex content, which is key for future creative tools.

Simple caveats:

- Adding a brand-new character still requires some fine-tuning and clear captions.

- Very complex scenes with many similar-looking characters can still confuse the model sometimes.

Overall, this research takes a big step toward making multi-character, mixed-style video generation practical and believable, opening the door to creative “what-if” videos where our favorite characters meet across worlds.

Knowledge Gaps

Knowledge Gaps, Limitations, and Open Questions

The following list synthesizes what remains missing, uncertain, or unexplored in the paper. Each point is framed to be actionable for future research.

- Ambiguity in CCE’s mechanism: The paper labels “Cross-Character Embedding” as a core technique but does not specify an explicit architectural module, representation, or training objective beyond tagged captions and LoRA fine-tuning. Clarify whether CCE is a learned embedding layer, a prompt engineering strategy, or a parameterization within the text encoder, and measure its contribution independently of caption tags.

- Scalability to unseen characters: The method requires explicit identity annotations and LoRA fine-tuning on the curated character footage. Investigate zero/few-shot generalization to new identities (user-provided, novel IPs, rare characters) without re-finetuning, e.g., via textual inversion, adapters, or modular identity-behavior libraries.

- Limited style coverage: Style control relies on a coarse binary tag ([scene-style: cartoon|realistic]). Extend to richer style taxonomies (cel-shaded, anime, claymation, noir, period-specific cinematography) and evaluate whether CCA mitigates delusion across diverse artistic domains.

- Dependence on synthetic cut-and-paste compositing: CCA uses 2D segmentation (SAM2) and naive paste into opposite-domain backgrounds. Study more physically and visually consistent augmentation (matting, relighting, shadow/occlusion synthesis, depth-aware compositing, 3D scene reconstruction) and quantify its effect on realism and interaction plausibility.

- Optimal augmentation ratio generality: The paper finds ~10% synthetic data beneficial on their dataset. Test whether the optimal ratio generalizes across domains, characters, and backbones, and develop adaptive schedules or curriculum strategies for augmentation.

- Caption quality and reliability: GPT-4o-generated captions act as supervision for identities and actions, yet no quantitative measure of annotation accuracy, hallucination rate, or temporal alignment is provided. Benchmark caption correctness (names, actions, style tags) and assess downstream sensitivity to mis-annotations.

- Audio and script utilization: Audio and scripts are used only to aid captioning, not as training conditions. Explore conditioning on speech, prosody, sound events, or dialogue context to better capture personality, timing, and behavior, and evaluate lip-sync and audio-visual coherence.

- Interaction physics and contact modeling: Current results emphasize “plausible” interactions but do not address physical contact, collision handling, force dynamics, object manipulation consistency, or cross-character occlusion handling. Introduce metrics and tests for physical plausibility and contact-rich interactions.

- Long-horizon narrative generation: Training clips are 5 seconds, and evaluation focuses on short segments. Investigate multi-scene narratives, continuity of identity/behavior across shots, planning of story beats, and memory mechanisms for longer videos.

- Multi-character scalability: Experiments focus on 2–3 characters. Assess performance with larger ensembles (4–8+), overlapping motion patterns, crowded scenes, and complex group dynamics, and quantify identity preservation and interaction coherence at scale.

- Background style ambiguity: The paper mentions adding an “extra prompt for background style” without detailing how conflicts between character-native style and background style are resolved. Formalize background–character style arbitration and provide quantitative controls.

- Benchmark reproducibility and validity: VLM-based metrics (Gemini-1.5-Flash) are not open or standardized, and the table header mentions human evaluation but does not present separate human scores. Release the benchmark, prompts, and evaluation code, add human studies with inter-rater reliability, and compare across multiple VLMs to reduce evaluator bias.

- Data imbalance and bias: The dataset is heavily skewed (e.g., 46 hours Young Sheldon vs. 9 hours Tom and Jerry). Quantify how imbalance affects identity/motion/style metrics, and explore rebalancing or domain-adaptive training to prevent dominance by long-form live-action data.

- Generalization to diverse body types and demographics: Characters are mainly comedic and male. Evaluate across varied ages, genders, body morphologies, attire, and cultural contexts to test identity and behavior preservation broadly.

- Occlusion and fast motion robustness: No dedicated evaluation of identity/motion fidelity under heavy occlusions, rapid camera movement, or motion blur. Add stress tests and metrics for challenging cinematographic conditions.

- Backbone-agnostic claims untested: The approach is claimed to be model-agnostic but only tested on Wan2.1-T2V-14B. Validate on multiple T2V backbones (e.g., Sora-like, HunyuanVideo, VideoLDM variants) and report the sensitivity to LoRA rank, injection points, and text–video encoder choices.

- Baseline selection and fairness: Comparisons emphasize single-subject or non-specialized multi-character methods (SkyReels-A2). Include multi-subject customization baselines (e.g., CustomVideo, Movie Weaver, Video Alchemist) in multi-character interaction tests to establish fairer state-of-the-art comparisons.

- Control granularity: Current prompting controls identity and coarse style but not spatial layout, gaze/pose constraints, path planning, or object-centric interactions. Integrate and evaluate layout conditioning (scene graphs, keypoints), motion paths, or control nets for fine-grained interaction design.

- Identity drift measurement: While identity preservation is reported, there is no metric for temporal identity drift within sequences. Define and track identity consistency across frames and shots, including facial detail stability and attribute persistence.

- Legal and ethical considerations: The curated dataset comprises copyrighted TV shows/animations; dataset availability, licensing, and ethical use are unclear. Articulate dataset release plans, consent, and IP compliance frameworks, and assess potential misuse risks.

- Inference efficiency and deployment: Training details are provided, but inference latency, memory footprint, and throughput under multi-character prompts are not reported. Profile performance and propose optimizations for practical deployment.

- Failure case taxonomy: The discussion notes occasional failures in complex scenes but lacks a systematic analysis of failure types (e.g., style bleed, motion incoherence, identity confusion). Publish a categorized failure set with triggers and mitigation strategies.

- Personality modeling beyond actions: “Behavioral logic” is claimed, but the model is trained with action tags rather than richer personality signals (habits, emotional arcs). Explore modeling of persistent traits, affect, and social conventions, and devise evaluations for personality fidelity.

Practical Applications

Immediate Applications

The following applications can be operationalized today using the paper’s techniques—Cross-Character Embedding (CCE) for identity/behavior grounding and Cross-Character Augmentation (CCA) for style-preserving cross-domain mixing—along with the released benchmark and training recipes (LoRA on a T2V backbone, SAM2-based segmentation, GPT-4o captioning with [character] and [scene-style] tags).

- Studio previsualization of cross-IP scenes (Sector: media/entertainment, VFX)

- What it enables: Rapid previz of crossover scenes (e.g., live-action actor interacting with animated character) with strong identity, behavior, and style fidelity; storyboarding of multi-character blocking; quick iteration on comedic beats and interactions.

- Tools/Workflows: “CCE Prompt Builder” for character–action prompting; LoRA character packs; NLE plugins (e.g., Premiere/After Effects) for shot-level generation and conform; internal asset library of character embeddings.

- Assumptions/Dependencies: Rights to use characters; access to a capable T2V backbone (e.g., Wan2.1-class); GPU capacity for LoRA fine-tuning/inference; captioning quality (GPT-4o) and segmentation quality (SAM2).

- Advertising and branded content prototyping (Sector: marketing/advertising)

- What it enables: Spec creative and A/B variants that combine brand mascots with human ambassadors while preserving each entity’s native style; social-first cutdowns with different interactions per channel.

- Tools/Workflows: SaaS storyboard generator using character–action prompts; “Behavior Embedding Library” for mascots; campaign variant generator with batch prompts and analytics.

- Assumptions/Dependencies: Brand safety checks; approvals from talent/rights-holders; content watermarking; prompt discipline to avoid off-brand behaviors.

- Social media UGC mashup apps (Sector: consumer software/creator tools)

- What it enables: Consumer-facing remix of licensed/owned characters with user-provided personas; short-form videos with style-preserving crossovers; community challenges (e.g., “cartoon meets sitcom”).

- Tools/Workflows: Mobile app with reference-image upload, character tag selection, style tag toggles; server-side LoRA inference; content moderation pipeline.

- Assumptions/Dependencies: Licensing or use of open characters; safety filters; cost controls for inference.

- VFX “character insertion” for plate footage (Sector: post-production)

- What it enables: Insert a non-coexisting character into existing shots while preserving native style (e.g., realistic scene with animated character); fast temp comps for editorial decisions.

- Tools/Workflows: Plate ingest → background/style tagging → character segmentation and CCA composite pretraining → conditioned generation into plate; rotoscoping assist via SAM2 masks.

- Assumptions/Dependencies: Clean plates or robust matting; camera movement constraints; scene-style clarity; artifact cleanup in comp.

- Education content authoring with cross-world role plays (Sector: education/EdTech)

- What it enables: Engaging micro-lessons where familiar characters demonstrate concepts (e.g., animated character explains physics to a live-action student); safe classroom-friendly behaviors encoded via prompts.

- Tools/Workflows: Teacher dashboard for lesson templates; library of “behavior-safe” embeddings; scene-style prompts to match curriculum design.

- Assumptions/Dependencies: Licensing for character likenesses; kid-safe content filters; accessibility subtitling.

- Internal training and corporate comms scenarios (Sector: enterprise L&D)

- What it enables: Scenario reenactments using company mascots or anonymized avatars interacting with realistic employees; policy walk-throughs with memorable character behaviors.

- Tools/Workflows: Template scripts → CCE prompts → batch generation; LMS integration; analytics on engagement.

- Assumptions/Dependencies: HR/legal approvals; tone and behavior constraints; privacy and consent.

- Academic evaluation and benchmarking (Sector: academia/research)

- What it enables: A standard benchmark for multi-character, cross-style generation; reproducible metrics for identity, motion, style, and interaction (Identity-P, Motion-P, Style-P, Interaction-P).

- Tools/Workflows: Public benchmark suites; leaderboards; ablations on caption tags and augmentation ratios.

- Assumptions/Dependencies: Access to compatible VLMs for scoring (e.g., Gemini); shared protocols; dataset licenses for research use.

- Synthetic data generation for multi-agent perception and VLMs (Sector: software/AI)

- What it enables: Curated, labeled multi-entity interactions (with [character] and [scene-style] tags) to train or evaluate VLMs on identity disentanglement, action recognition, and interaction reasoning.

- Tools/Workflows: Automated prompt schedule for diverse interactions; CCA-driven cross-style compositions; corpus export with captions and masks.

- Assumptions/Dependencies: Domain gap to real-world data; careful augmentation ratio tuning to prevent quality regression.

- Localization and cultural adaptation previews (Sector: media localization)

- What it enables: Rapid previews of culturally localized segments where local mascots/hosts interact with global IP in native styles; test audience resonance before full production.

- Tools/Workflows: Style-tagged prompts for regional aesthetics; behavior constraints reflecting cultural norms; side-by-side variant generation.

- Assumptions/Dependencies: Cultural sensitivity review; rights for local characters; governance for stereotypes/bias.

- Fan engagement for events and sports (Sector: sports/entertainment)

- What it enables: Event teasers where team mascots “meet” celebrities or legacy animated characters while keeping each identity/style intact; stadium or social activations.

- Tools/Workflows: Event prompt templates; batch render farm for real-time social drops; approval loops with rights-holders.

- Assumptions/Dependencies: Rapid turnaround GPUs; licensing; compliance with live event content policies.

Long-Term Applications

The following require further development, scaling, licensing frameworks, or research to reach production viability.

- Licensed “character embedding” marketplaces (Sector: media/IP platforms)

- What it enables: Studios license identity/behavior embeddings with governance (allowed actions, tone, styles); creators compose legal crossovers within a compliant engine.

- Tools/Workflows: Registry and DRM for embeddings; policy-enforced prompt validators; watermarking and provenance.

- Assumptions/Dependencies: Industry standards for identity/behavior embeddings; revenue sharing; legal frameworks for rights-of-publicity.

- Real-time broadcast/streaming crossovers (Sector: live media/creator economy)

- What it enables: Streamers or broadcasters mix animated and real characters live (e.g., VTuber interacts with live host) with style-preserving rendering at low latency.

- Tools/Workflows: Distilled or caching-based fast T2V; partial-frame regeneration; GPU edge infrastructure; scripted “interaction macros.”

- Assumptions/Dependencies: Latency/throughput breakthroughs; safety filters at inference; robust audio-visual alignment.

- Interactive games and XR with cross-universe NPCs (Sector: gaming/AR/VR)

- What it enables: NPCs with learned motion idiosyncrasies and personalities that interact across styles inside virtual worlds; dynamic cutscenes personalized by player prompts.

- Tools/Workflows: Extension from 2D video to 3D/NeRF/GS pipelines; behavior trees seeded from CCE; real-time style control.

- Assumptions/Dependencies: Differentiable 3D video generation maturity; low-latency control; IP licensing for in-game use.

- Feature-length AI-generated crossover productions (Sector: film/TV)

- What it enables: Long-form episodes or films where multiple franchises co-exist, with consistent identity, behavior arcs, and style continuity across scenes.

- Tools/Workflows: Multi-scene script-to-video with scene graphs; shot continuity constraints; editorial control tools; long-horizon memory of character states.

- Assumptions/Dependencies: Advances in long-context T2V coherence; production-grade QC; union and legal agreements.

- Therapy and education at scale via familiar-character role play (Sector: healthcare/EdTech)

- What it enables: Personalized therapeutic scenarios (e.g., CBT role-plays) and tutoring with safe, consented character surrogates to increase engagement and adherence.

- Tools/Workflows: Clinician/teacher dashboards with behavior-guardrails; content review; outcome tracking.

- Assumptions/Dependencies: Clinical validation; ethical review boards; privacy protections; specialized licensing.

- Synthetic multi-agent datasets for robotics and world models (Sector: robotics/AI)

- What it enables: Large-scale, labeled multi-agent interactions for training perception/planning modules; curricula with increasing interaction complexity and style domains.

- Tools/Workflows: Programmatic scenario generation; domain randomization across styles; temporal annotations of interaction events.

- Assumptions/Dependencies: Transfer to real-world remains a challenge; need simulators aligning with generated visuals; evaluation protocols.

- Standardized evaluation and safety frameworks for identity/behavior fidelity (Sector: policy/governance)

- What it enables: Policy-grade metrics for identity preservation, behavior constraints, and cross-style integrity; certification of compliant generative systems.

- Tools/Workflows: Open benchmarks (Identity-P, Motion-P, Style-P, Interaction-P) extended with safety metrics; red-teaming suites for misuse.

- Assumptions/Dependencies: Regulator and industry adoption; consensus on acceptable behavior boundaries; watermarking/c2pa provenance.

- Script-to-episode authoring with controllable interaction arcs (Sector: media tools)

- What it enables: LLM-driven multi-scene episode generation with character bibles encoded as CCE embeddings; consistent callbacks, running gags, and interaction logic.

- Tools/Workflows: Scene graph planners; beat sheets mapped to prompts; “interaction rehearsal” passes; editorial UIs.

- Assumptions/Dependencies: Long-horizon narrative coherence; creative control affordances; integration with traditional pipelines.

- Personalized learning companions and accessibility media (Sector: accessibility/EdTech)

- What it enables: Learners choose a licensed character as a “guide” rendered in native style across subjects; adaptive difficulty and multimodal explanations.

- Tools/Workflows: Curriculum-aligned prompt templates; behavior-adaptive embeddings; closed-loop assessment.

- Assumptions/Dependencies: Age-appropriate behavior constraints; licensing at scale; fairness and bias monitoring.

- Cross-studio collaboration sandboxes (Sector: media ecosystems)

- What it enables: Secure, shared environments where studios test crossovers with strict policy controls and audit trails; co-production prototyping.

- Tools/Workflows: Federated training on character embeddings; access control; compliance dashboards; joint asset vaults.

- Assumptions/Dependencies: Interoperability standards (embedding formats, prompt schemas); legal frameworks; secure compute enclaves.

Notes on Feasibility and Dependencies Across Applications

- Technical

- Requires a high-capacity T2V backbone; LoRA fine-tuning infrastructure; high-quality captions (GPT-4o) and masks (SAM2); careful CCA ratio tuning (≈10% synthetic clips worked best in the paper).

- Current limitation: new characters need (re)training/fine-tuning; open-world zero-shot remains a research problem.

- Real-time use cases depend on substantial efficiency gains and partial-frame regeneration.

- Legal/Ethical

- Rights and licensing for character likeness, behavior, and derivative works; rights-of-publicity for real people.

- Provenance and watermarking to mitigate deepfake/misuse risks; content moderation for UGC scenarios.

- Operational

- Cost control for GPU inference at scale; QA for style delusion and identity drift; human-in-the-loop approvals for branded/regulated content.

These applications directly leverage the paper’s innovations—explicit character–action prompting (CCE) for disentangling identity and behavior, and style-aware synthetic co-existence (CCA) to prevent style delusion—unlocking practical cross-domain, multi-character video generation with controllable interactions.

Glossary

- Ablation paper: An experiment that removes or varies components to assess their impact on performance. "We conduct ablation studies to investigate how different captioning strategies influence the modelâs ability to learn and ground each character as a distinct concept."

- Adam optimizer: A gradient-based optimization algorithm that uses adaptive estimates of lower-order moments. "train for 5 epochs with the Adam optimizer (learning rate 1e-4, batch size 64)."

- LAION aesthetic predictor: A learned model for estimating the aesthetic quality of images. "Aesthetic evaluates the artistic and beauty value perceived by humans towards each video frame using the LAION aesthetic predictor."

- Anchored prompts: Prompting technique that uses fixed anchor tokens or structures to preserve distinct subject identities. "Movie Weaver~\cite{liang2025movieweaver} employs tuning-free anchored prompts to preserve distinct identities."

- Backbone: The core neural network architecture that provides base representations; often kept frozen during fine-tuning. "During fine-tuning, backbone parameters are frozen and only LoRA layers are updated, ensuring efficiency and reducing overfitting."

- Benchmark: A standardized set of data and metrics for comparative evaluation. "We further establish the first benchmark for multi-character video generation, evaluating identity preservation, motion fidelity, interaction realism, and style consistency."

- Character--Action Prompting: A captioning format that explicitly separates character identities from actions to disentangle behavior. "Our key insight is to design a character--action captioning format that explicitly grounds each characterâs identity while separating it from scene context."

- Cross-attention–based fusion: A mechanism that fuses multimodal representations by attending across modalities. "Video Alchemist~\cite{chen2025videoalchemist} addresses this through cross-attentionâbased fusion of text and image representations for open-set subject and background control,"

- Cross-Character Augmentation (CCA): A data augmentation method that synthesizes co-existence and mixed-style clips to enable cross-domain interactions. "we introduce Cross-Character Augmentation (CCA), which enriches training with synthetic co-existence and mixed-style data."

- Cross-Character Embedding (CCE): Learned embeddings that encode identity and behavioral logic across multimodal sources. "We introduce a framework that tackles these issues with Cross-Character Embedding (CCE), which learns identity and behavioral logic across multimodal sources,"

- Diffusion models: Generative models that iteratively denoise samples to synthesize data like images or videos. "The advent of diffusion models has transformed video generation, advancing from early text-to-video systems..."

- FP16: Half-precision floating point format used to reduce memory and improve training efficiency. "mixed-precision (FP16) training is used for efficiency."

- Foundation model: A large pre-trained model serving as a base for fine-tuning on downstream tasks. "enabling us to fine-tune one foundation model on each characterâs individual footages while still allowing them to co-exist and interact naturally at inference time."

- Gradient clipping: A technique to cap gradient norms to improve training stability. "Gradient clipping is applied for stability,"

- GPT-4o: A multimodal LLM used here for automatic caption generation. "We employ GPT-4o~\cite{openai2024gpt4o} to automatically generate captions."

- Identity anchors: Explicit textual tags that anchor character identity to control generation. "Each character mention is annotated with [character:name] tags, which act as identity anchors and support controllable character-level generation."

- Identity blending: Undesirable fusion of multiple subjects into a composite identity in multi-concept generation. "Compared to sigle-concept personalization, multi-concept customization is more challenging due to identity blending, where multiple characters risk being fused into a composite scene."

- Identity-P: A VLM-based metric that scores how well visual identity is preserved. "Identity-P evaluates how well the generated video preserves each characterâs visual identity and distinctive features."

- Image-to-video (I2V): Generative setup that converts an input image into a video. "Wan2.1-I2V cannot directly generate videos from a character image."

- Interaction-P: A VLM-based metric assessing naturalness and plausibility of interactions. "Interaction-P evaluates the naturalness and plausibility of multi-character interactions."

- Low-Rank Adaptation (LoRA): A parameter-efficient fine-tuning method that inserts low-rank adapters into layers. "We fine-tune Wan2.1-T2V-14B~\cite{wan2025video} with Low-Rank Adaptation (LoRA)~\cite{hu2021lora}."

- Mixed-precision training: Training with multiple numeric precisions to speed up computation and save memory. "mixed-precision (FP16) training is used for efficiency."

- Motion-P: A VLM-based metric that scores authenticity of character-specific movements and behaviors. "Motion-P measures the authenticity of character-specific movements and behaviors relative to their canonical personality traits."

- Multi-character mixing: Generative setup of composing multiple distinct characters interacting coherently in one scene. "We proposed the first video generation framework for multi-character mixing that addresses both the non-coexistence challenge and the style dilution challenge."

- Multi-concept customization: Generation with multiple distinct subject concepts while avoiding identity fusion. "Compared to sigle-concept personalization, multi-concept customization is more challenging due to identity blending,"

- MUSIQ: A learned image quality predictor used to assess distortions in frames. "Quality measures the imaging quality, referring to the distortion (e.g., over-exposure, noise, blur) by the image quality predictor MUSIQ~\cite{ke2021musiq}."

- Non-coexistence challenge: The difficulty that characters from different sources never co-occur in training data. "The first is the non-coexistence challenge: characters from different shows never co-occur in any training video, leaving no paired data to model their joint interactions."

- OmniGen: A unified image generation model used to synthesize inputs for downstream I2V. "we first employ OmniGen~\cite{xiao2025omnigen} to synthesize an image using the prompt and reference,"

- Parameter-efficient fine-tuning: Methods that adapt large models with few trainable parameters. "explore parameter-efficient fine-tuning and joint optimization for multi-subject composition."

- RAFT: An optical flow model used to quantify motion dynamics. "Dynamic quantifies the degree of motion dynamics using RAFT~\cite{raft}."

- Reference-image matching: Filtering step ensuring identity alignment by comparing to reference images. "For live-action characters such as Mr. Bean and Young Sheldon, we perform reference-image matching to retain clips with accurate identity alignment."

- SAM2: A segmentation model used for extracting characters from source videos. "Characters are segmented using SAM2~\cite{ravi2024sam2}, which handles both live-action and animation content."

- Scene-style tags: Explicit caption tokens indicating whether the scene is cartoon or realistic. "explicit style tags ([scene-style:cartoon] or [scene-style:realistic]), providing the model with clear supervision for style control."

- Style-aware data augmentation: Augmentation that preserves native appearances while mixing styles. "We tackle this by introducing a style-aware data augmentation strategy that composites characters from different domains into the same video while preserving their native appearances."

- Style delusion: Unwanted drift of character rendering into another visual style. "mixing styles often causes style delusion, where realistic characters appear cartoonish or vice versa."

- Style dilution challenge: Failure mode where mixed-styled training causes unstable or diluted styles. "We proposed the first video generation framework for multi-character mixing that addresses both the non-coexistence challenge and the style dilution challenge."

- Style-P: A VLM-based metric scoring consistency with a character’s original artistic style. "Style-P assesses the consistency of each characterâs original artistic and visual style."

- Synthetic Cross-Domain Compositing: Procedure for creating clips by inserting segmented characters into opposite-style backgrounds. "Synthetic Cross-Domain Compositing."

- Text-to-video (T2V): Generative setup that synthesizes videos directly from text prompts. "fundamental text-to-video (T2V) generation models~\cite{cogvideox,sora,wan2025video,genmo2024mochi,google2024veo,kong2024hunyuanvideo,kling,wan2025video} have achieved substantial progress in general video synthesis."

- VBench: A benchmark suite of automated video quality and temporal coherence metrics. "we adopt Consistency, Motion, Dynamic, Quality, and Aesthetic from VBench~\cite{vbench}."

- ViCLIP: A video–text consistency model used to compute frame-level alignment. "Consistency evaluates the overall video-text consistency across frames computed by ViCLIP~\cite{wang2023internvid}."

- Vision–LLM (VLM): A model that jointly understands visual and textual inputs for evaluation or generation. "we employ a visionâLLM (VLM) and introduce four specialized metrics: Identity-P, Motion-P, Style-P, and Interaction-P."

- Wan2.1-I2V: A foundation image-to-video model used as a baseline. "Wan2.1-I2V cannot directly generate videos from a character image."

- Wan2.1-T2V-14B: A large text-to-video backbone used for fine-tuning in this work. "We fine-tune Wan2.1-T2V-14B~\cite{wan2025video} with Low-Rank Adaptation (LoRA)~\cite{hu2021lora}."

- Zero-shot: Generation without task-specific fine-tuning for a new subject or identity. "More recent zero-shot approaches like ID-Animator~\cite{he2024idanimator} leverage facial adapters to enable identity-consistent generation from a single reference image without fine-tuning."

Collections

Sign up for free to add this paper to one or more collections.