- The paper introduces region-based affine supervision, which overcomes the limitations of point-based drag editing and lets DiT priors be used for enhanced realism.

- The methodology integrates adapter-enhanced inversion and hard-constrained background preservation to maintain subject identity and non-edited content.

- Empirical evaluations on ReD Bench and DragBench-DR show DragFlow achieves superior spatial correspondence and image fidelity in complex editing tasks.

DragFlow: Region-Based Supervision for DiT-Prior Drag Editing

Motivation and Problem Analysis

Drag-based image editing enables fine-grained, spatially localized manipulation by allowing users to specify how regions of an image should move or deform. Prior approaches, predominantly built on UNet-based DDPMs (e.g., Stable Diffusion), suffer from unnatural distortions and limited realism, especially in complex scenarios. The paper attributes this to the insufficient generative prior of these models, which fails to constrain optimized latents to the natural image manifold. The emergence of Diffusion Transformers (DiTs) trained with flow matching (e.g., FLUX, SD3.5) provides substantially stronger priors, but applying point-based drag editing directly to DiTs is ineffective because DiT features are finer-grained and have narrower receptive fields.

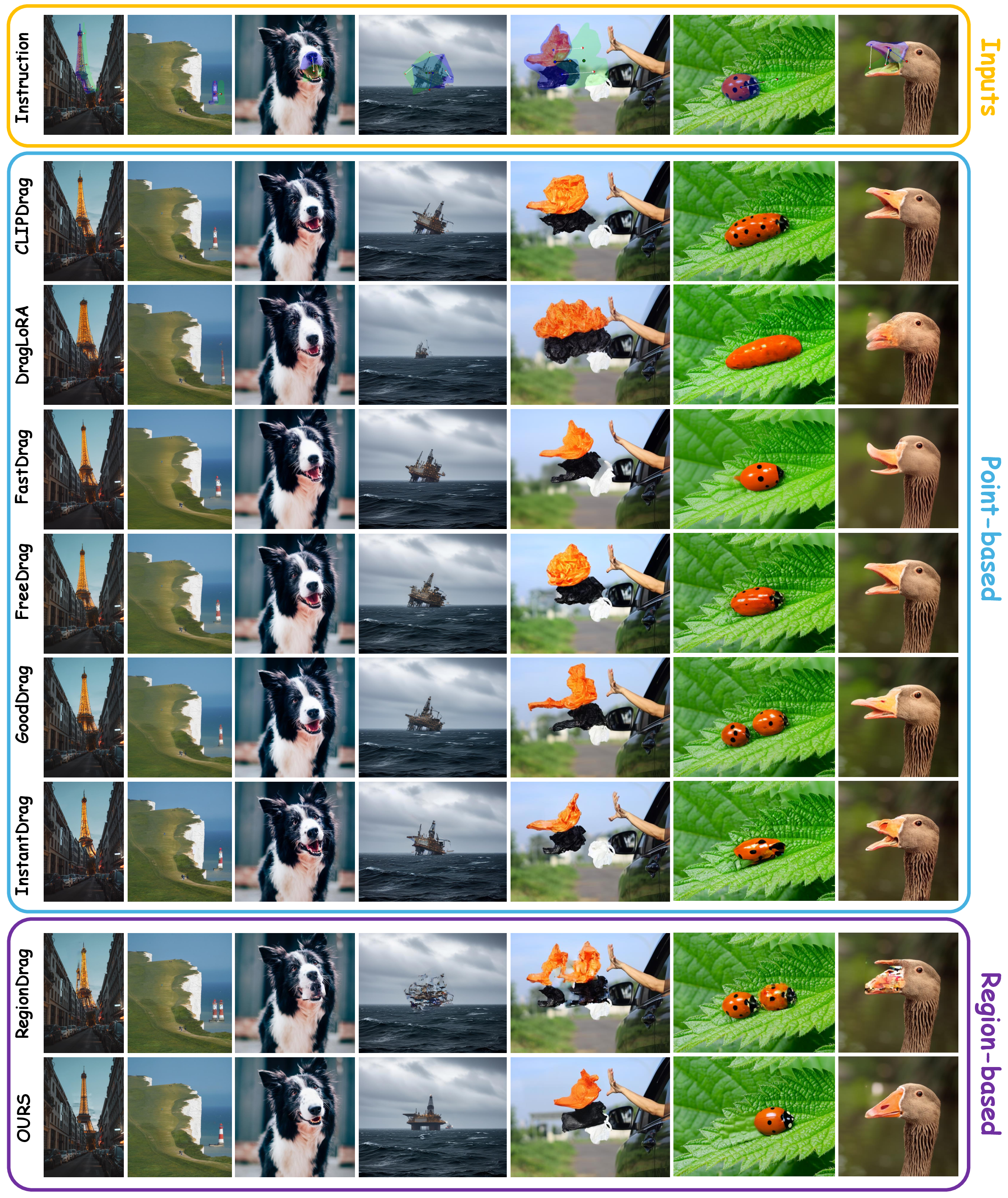

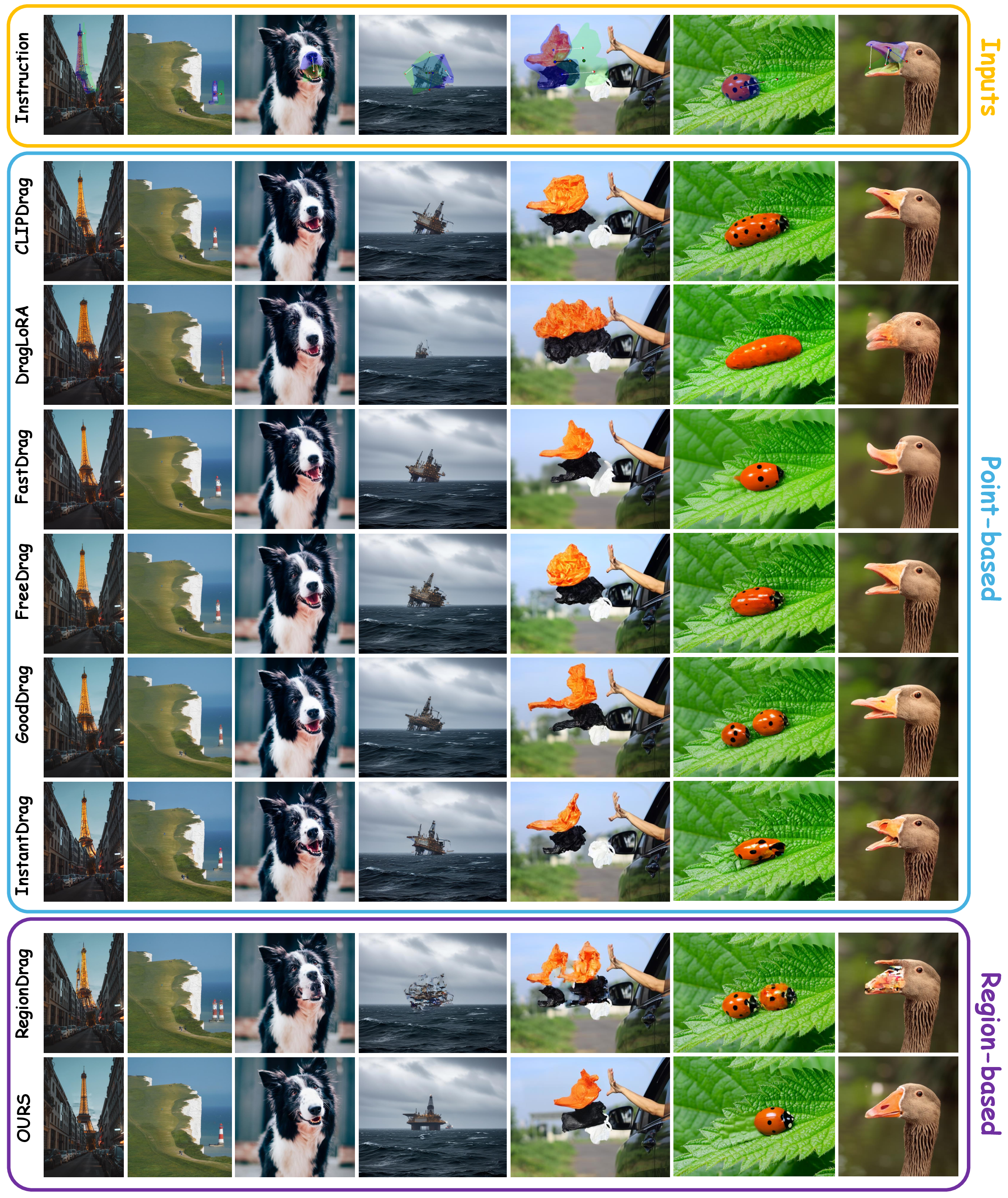

Figure 1: Comparison of drag-editing results between baselines and DragFlow, demonstrating reduced distortions and improved realism in challenging scenarios.

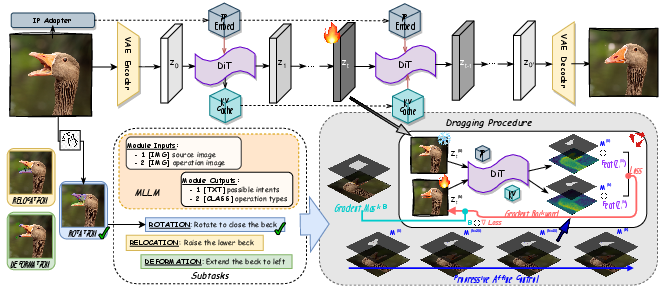

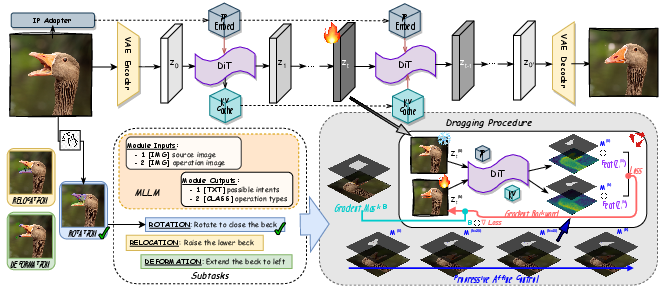

DragFlow Framework: Region-Level Affine Supervision

DragFlow introduces a region-based editing paradigm, departing from point-wise supervision. Users specify source region masks and target points, which are interpreted by a multimodal LLM to infer editing intent and operation class. The core innovation is region-level affine supervision: instead of tracking and matching features at individual points, DragFlow matches features between entire source and target regions, using affine transformations to propagate masks and guide optimization. This approach provides richer semantic context and mitigates the instability and myopic gradients inherent in point-based methods.

Figure 2: Overview of the DragFlow framework, highlighting region-level affine supervision, adapter-enhanced inversion, and hard-constrained background preservation.

The iterative latent optimization is governed by a loss function that matches DiT features within the region masks, progressively moving the source region toward the target via affine transformations. The affine parameters are interpolated over optimization steps, enabling smooth relocation, deformation, or rotation of regions.
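The interpolation and region matching described above can be illustrated with a small NumPy sketch. It is not the paper's implementation: the function names (`interpolate_affine`, `warp`, `region_loss`), the linear blending of matrix entries, the nearest-neighbour warp, and the `(C, H, W)` feature layout are all assumptions made for illustration. In the actual method the features come from DiT layers and the source features would be treated as constants during backpropagation.

```python
import numpy as np

def interpolate_affine(theta_target, step, num_steps):
    """Blend the 2x3 identity transform toward theta_target (assumed linear schedule)."""
    identity = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    alpha = step / num_steps
    return (1.0 - alpha) * identity + alpha * theta_target

def warp(array, theta):
    """Warp the last two axes (H, W) of `array` by a 2x3 affine matrix.

    Uses inverse mapping with nearest-neighbour sampling; pixels whose
    source falls outside the image are set to zero.
    """
    h, w = array.shape[-2:]
    ys, xs = np.mgrid[0:h, 0:w]
    dst = np.stack([xs.ravel(), ys.ravel()]).astype(float)    # (2, H*W), (x, y) order
    src = np.linalg.inv(theta[:, :2]) @ (dst - theta[:, 2:3])  # where each output pixel comes from
    sx = np.rint(src[0]).astype(int)
    sy = np.rint(src[1]).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    flat = np.zeros(array.shape[:-2] + (h * w,), dtype=array.dtype)
    flat[..., valid] = array[..., sy[valid], sx[valid]]
    return flat.reshape(array.shape)

def region_loss(feat_current, feat_source, mask_source, theta):
    """Mean L1 gap between current features and affine-warped source features,
    measured only inside the warped (target) region mask."""
    mask_k = warp(mask_source, theta)
    target = warp(feat_source, theta)  # constant (stop-gradient) in a real optimizer
    diff = np.abs(feat_current - target) * mask_k
    return diff.sum() / max(mask_k.sum() * feat_current.shape[0], 1)

# Usage: move a 2x2 region by (+4, +2) pixels over 4 steps and inspect step 2.
mask = np.zeros((8, 8))
mask[1:3, 1:3] = 1.0
theta_final = np.array([[1.0, 0.0, 4.0], [0.0, 1.0, 2.0]])
theta_mid = interpolate_affine(theta_final, step=2, num_steps=4)
mask_mid = warp(mask, theta_mid)
```

Because the supervision target moves a little at each step, the loss always compares the current features against a nearby warped copy of the source region rather than against a single distant point.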

Background Preservation and Subject Consistency

DragFlow replaces traditional background consistency losses with hard constraints: gradients are applied only to editable regions, while the background is reconstructed from the original latent, ensuring robust preservation of non-edited content. This is critical for DiT models, especially CFG-distilled variants like FLUX, which exhibit significant inversion drift.
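A minimal sketch of such a hard constraint, assuming a spatial `(C, H, W)` latent and a binary edit mask, is shown below. The function name `constrained_step`, the plain gradient-descent update, and the learning rate are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def constrained_step(latent, grad, latent_orig, edit_mask, lr=0.1):
    """One update restricted to the editable region.

    edit_mask is 1.0 where editing is allowed and 0.0 elsewhere.
    """
    # Zero the gradient outside the editable region before stepping.
    updated = latent - lr * grad * edit_mask
    # Overwrite the background with the original latent, so it cannot drift.
    return edit_mask * updated + (1.0 - edit_mask) * latent_orig

# Usage with toy shapes: only the top-left 2x2 block is editable.
latent_orig = np.ones((4, 4, 4))
edit_mask = np.zeros((4, 4))
edit_mask[:2, :2] = 1.0
new_latent = constrained_step(latent_orig.copy(), np.ones((4, 4, 4)), latent_orig, edit_mask)
```

Unlike a soft consistency loss, this leaves no trade-off weight to tune: background values are copied rather than encouraged to stay close.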

Subject consistency is further enhanced by adapter-based inversion. Pretrained open-domain personalization adapters (e.g., IP-Adapter, InstantCharacter) extract subject representations from reference images and inject them into the model prior, substantially improving identity preservation under edits. This approach outperforms standard key-value injection, which is less effective in DiT settings due to pronounced inversion drift.

Empirical Evaluation and Benchmarking

DragFlow is evaluated on two benchmarks: the newly introduced Region-based Dragging Benchmark (ReD Bench) and DragBench-DR. ReD Bench provides region-level annotations, explicit task tags (relocation, deformation, rotation), and contextual descriptions, enabling rigorous assessment of region-based editing. Metrics include masked mean distance (MD) for spatial correspondence and LPIPS-based image fidelity (IF) for semantic and structural consistency.
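The two metrics can be sketched as follows. This assumes that the final positions of the dragged content have already been located by some feature matcher (not shown), that the masking step which restricts matching to the annotated region is omitted, and that IF follows the common drag-editing convention of 1 minus LPIPS; the summary above only states that IF is LPIPS-based. The function names are illustrative.

```python
import numpy as np

def mean_distance(final_points, target_points):
    """Mean Euclidean distance between where content ended up and where it
    was asked to go; lower is better."""
    final_points = np.asarray(final_points, dtype=float)
    target_points = np.asarray(target_points, dtype=float)
    return float(np.linalg.norm(final_points - target_points, axis=1).mean())

def image_fidelity(lpips_value):
    """Assumed convention: IF = 1 - LPIPS, so higher is better."""
    return 1.0 - lpips_value

# Usage: one point lands exactly on target, the other misses by 5 pixels.
md = mean_distance([[10, 10], [3, 4]], [[10, 10], [0, 0]])
```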

Figure 3: Qualitative comparison of DragFlow with multiple baselines in challenging scenarios, demonstrating superior structural preservation and intent alignment.

DragFlow achieves the lowest MD and the highest IF_s2t and IF_s2s across both benchmarks, indicating precise content manipulation and strong spatial alignment. The method also demonstrates robust background integrity, with only marginal gaps attributable to the inherent limitations of CFG-distilled inversion. Qualitative results show DragFlow consistently avoids the distortions and misinterpretations observed in baselines, especially in complex transformations.

Ablation and Component Analysis

Ablation studies confirm the effectiveness of each DragFlow component. Region-level supervision yields substantial gains in spatial correspondence and semantic fidelity compared to point-based control. Hard-constrained background preservation markedly improves non-edited region integrity. Adapter-enhanced inversion significantly strengthens subject consistency, as evidenced by improved LPIPS scores and visual identity retention.

Figure 4: Qualitative ablation study comparing DragFlow variants, illustrating the impact of region-level supervision, background constraints, and adapter-enhanced inversion.

Implementation Considerations

DragFlow is implemented with FLUX.1-dev as the base model and FireFlow for inversion. Optimization is performed at selected DiT layers (DOUBLE-17 and DOUBLE-18), empirically identified for their representational fidelity. Adapter integration is plug-and-play, requiring no additional fine-tuning. The framework supports multi-operation balancing via adaptive region weighting and employs automated mask generation for robust gradient constraints. The use of a multimodal LLM for intent inference streamlines user interaction and reduces annotation effort.

Implications and Future Directions

DragFlow demonstrates that region-based supervision is essential for harnessing the full potential of DiT priors in drag-based editing. The framework's modular design enables extensibility to other generative backbones and editing paradigms. The reliance on adapter-enhanced inversion highlights the need for further research into inversion fidelity, especially for highly intricate structures. Future work may explore advanced adapter architectures, improved inversion algorithms, and integration with more expressive user interfaces.

The introduction of ReD Bench sets a new standard for evaluating region-aware editing, facilitating fair and comprehensive comparison across methods. The demonstrated gains in controllability, faithfulness, and output quality suggest that region-based approaches will become the norm for interactive image editing in DiT-based generative models.

Conclusion

DragFlow establishes a principled framework for drag-based image editing with DiT priors, overcoming the limitations of point-based supervision and UNet-centric methods. Through region-level affine supervision, hard-constrained background preservation, and adapter-enhanced inversion, DragFlow achieves state-of-the-art performance in both quantitative and qualitative metrics. The approach is robust, extensible, and practical for real-world interactive editing, with clear implications for future research in generative model-based image manipulation.