Diffusion Transformer (DiT) Models

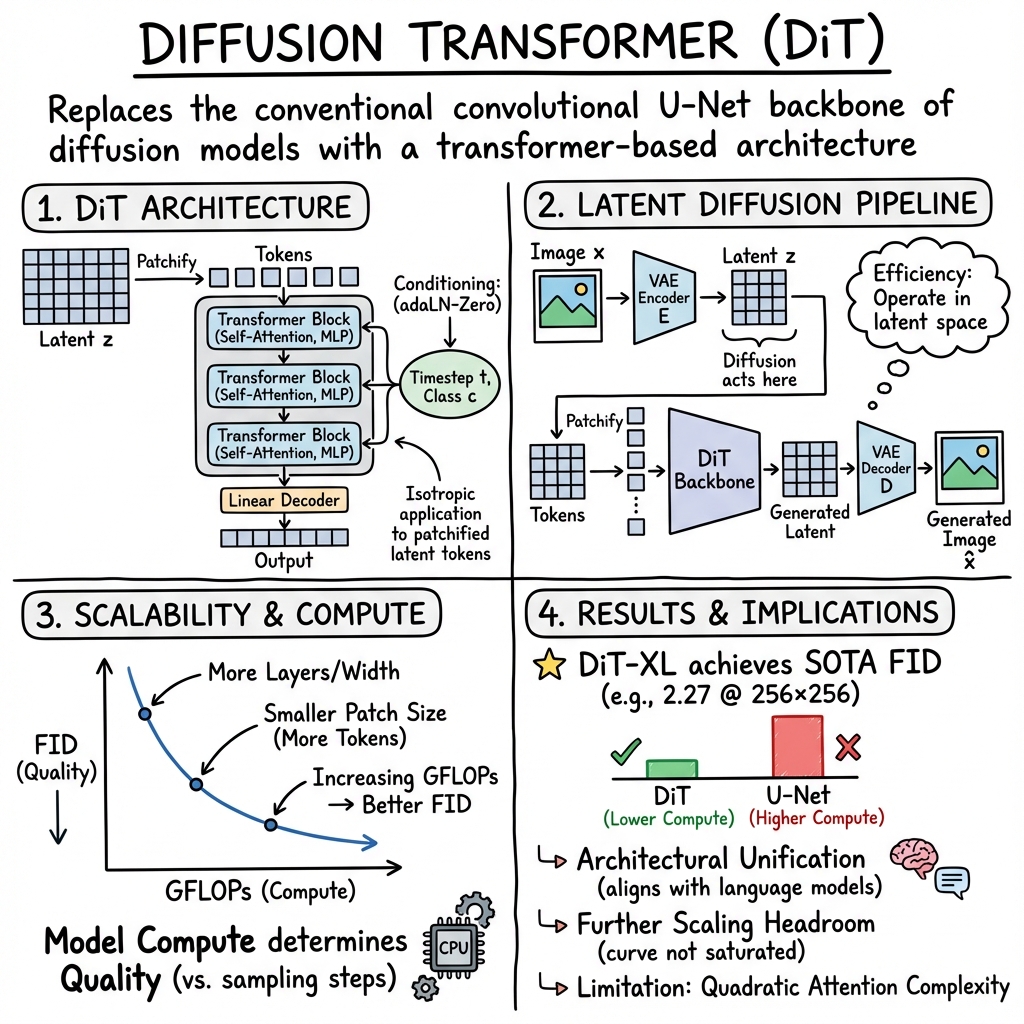

- Diffusion Transformer Models are innovative generative frameworks that replace convolutional U-Nets with transformer architectures operating in the latent space.

- They leverage patchification and transformer blocks with integrated conditioning, enhancing computational efficiency and model scalability.

- Empirical results demonstrate state-of-the-art FID improvements with lower GFLOPs, paving the way for unified, multi-modal generative modeling.

A Diffusion Transformer (DiT) is a generative modeling framework that replaces the conventional convolutional U-Net backbone of diffusion models with a transformer-based architecture. In the DiT paradigm, the model operates in the latent space of a pre-trained VAE, processes patchified latent tokens via a stack of transformer blocks, and incorporates conditioning information directly into the generative modeling process. By systematically analyzing scalability with respect to network depth, width, and token count, the DiT architecture achieves state-of-the-art performance across high-resolution image generation benchmarks, and its design has catalyzed subsequent architecture, efficiency, and application advances.

1. Architectural Foundations and Conditioning Mechanisms

DiT models are predicated on the isotropic application of transformer blocks to patchified latent-space tokens. An image is first encoded by a frozen, pre-trained VAE into a latent (e.g., a $32 \times 32 \times 4$ map for a $256 \times 256 \times 3$ image). This latent is patchified into tokens, each linearly embedded into a fixed-dimensional space. Positional embeddings (fixed sine-cosine, as in ViT) are added, and the tokens are then processed by a stack of transformer blocks. Each transformer block consists of self-attention and MLP layers, with adaptations to incorporate conditioning on the noise timestep $t$ and possibly class labels $c$. Four types of conditioning are evaluated: in-context token conditioning, cross-attention, adaptive layer normalization (adaLN), and the adaLN-Zero variant, which initializes each block's residual scaling to zero so that the block starts as the identity function.
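The patchification step described above can be sketched in plain Python. This is a minimal illustration, not the reference implementation: the function name `patchify`, the nested-list layout, and the toy latent values are assumptions made here, and the learned linear embedding and positional embeddings that follow patchification are omitted.

```python
def patchify(latent, p):
    """Split a (C, H, W) nested-list latent into flattened p x p patches.

    Returns (H / p) * (W / p) tokens, each holding p * p * C values.
    """
    channels = len(latent)
    height, width = len(latent[0]), len(latent[0][0])
    if height % p or width % p:
        raise ValueError("patch size must divide the latent height and width")
    tokens = []
    for top in range(0, height, p):          # patches are visited row by row
        for left in range(0, width, p):
            patch = [
                latent[c][top + dy][left + dx]
                for dy in range(p)
                for dx in range(p)
                for c in range(channels)
            ]
            tokens.append(patch)
    return tokens


# Toy latent: 4 channels on a 32 x 32 grid; channel c is filled with the value c.
latent = [[[float(c) for _ in range(32)] for _ in range(32)] for c in range(4)]
tokens = patchify(latent, p=2)
print(len(tokens), len(tokens[0]))  # number of tokens, values per token
```

In a full model each flattened patch would next be projected to the transformer width by a learned linear layer.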

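The computation for a forward pass is as follows:

- For $\ell = 1, \dots, N$, block $\ell$ maps the token sequence $h_{\ell-1}$ to $h_\ell$, conditioned on the noise timestep $t$ and class label $c$, where $h_0$ denotes the embedded patch tokens with positional embeddings added.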

Adaptive layer norm parameters in adaLN/adaLN-Zero are regressed from embedding sums of the noise timestep and any auxiliary labels, allowing conditional information to modulate the denoising process at every layer (Peebles et al., 2022).
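A minimal sketch of one adaLN-Zero residual branch, under stated assumptions, may make the mechanism more concrete. The function names, the single-token list representation, and the stand-in `sublayer` are hypothetical. The sketch covers one residual branch only and omits the nonlinearity that would normally precede the regression layer.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize one token vector to zero mean and unit variance (no learned affine)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def regress_modulation(cond, weight, bias):
    """Linear map from the conditioning vector to concatenated (shift, scale, gate)."""
    out = [sum(w * c for w, c in zip(row, cond)) + b for row, b in zip(weight, bias)]
    d = len(out) // 3
    return out[:d], out[d:2 * d], out[2 * d:]


def adaln_zero_residual(x, sublayer, shift, scale, gate):
    """Compute x + gate * sublayer(LN(x) * (1 + scale) + shift) for one token."""
    normed = layer_norm(x)
    modulated = [n * (1.0 + s) + b for n, s, b in zip(normed, scale, shift)]
    update = sublayer(modulated)
    return [xi + g * ui for xi, g, ui in zip(x, gate, update)]


dim = 4
cond = [0.1, -0.2, 0.3]  # hypothetical sum of timestep and class embeddings
# Zero-initialized regression layer, as in the adaLN-Zero variant.
weight = [[0.0] * len(cond) for _ in range(3 * dim)]
bias = [0.0] * (3 * dim)
shift, scale, gate = regress_modulation(cond, weight, bias)

x = [0.5, -1.0, 2.0, 0.0]
double = lambda v: [2.0 * u for u in v]  # stand-in for attention or the MLP
out = adaln_zero_residual(x, double, shift, scale, gate)
print(out == x)  # the branch contributes nothing while the gate is zero
```

With the regression layer at zero the branch leaves its input unchanged, which is the identity-at-initialization behavior that distinguishes adaLN-Zero from plain adaLN.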

2. Latent Diffusion Modeling Pipeline

Instead of directly modeling high-dimensional pixels, DiT operates on the latent space of a VAE, which provides computational and memory efficiency benefits. The VAE encoder $E$ transforms images $x$ to latents $z = E(x)$. Diffusion now acts on $z$, i.e., on noised latents $z_t$, where $t$ is the diffusion timestep. After sampling, the generated latent is decoded by the VAE decoder $D$, recovering the full pixel-space image $x = D(z)$.
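The noising step applied to a latent can be sketched as follows. The closed form $z_t = \sqrt{\bar\alpha_t}\, z_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon$ is the standard DDPM forward process. The linear schedule endpoints, the step count, the function names, and the toy latent are assumptions made for illustration, and the VAE encoder and decoder are left out entirely.

```python
import math
import random


def alpha_bar(t, num_steps=1000, beta_start=1e-4, beta_end=2e-2):
    """Cumulative product of (1 - beta_s) over the first t steps of a linear schedule."""
    prod = 1.0
    for s in range(t):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        prod *= 1.0 - beta
    return prod


def noise_latent(z0, t, rng):
    """Sample z_t = sqrt(abar_t) * z0 + sqrt(1 - abar_t) * eps with eps ~ N(0, I)."""
    abar = alpha_bar(t)
    eps = [rng.gauss(0.0, 1.0) for _ in z0]
    zt = [math.sqrt(abar) * z + math.sqrt(1.0 - abar) * e for z, e in zip(z0, eps)]
    return zt, eps


rng = random.Random(0)
z0 = [1.0, -1.0, 0.5, 0.0]  # hypothetical flattened latent values
zt, eps = noise_latent(z0, t=250, rng=rng)
print(alpha_bar(250), zt)
```

During training the transformer would receive `zt` and `t` and be asked to predict `eps`; at sampling time the process runs in reverse before the decoder maps the final latent back to pixels.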

Patchification of this latent, rather than of the full-resolution image, creates the token sequence for the transformer. This approach uses fewer tokens (and therefore less compute) for a given fidelity, while the patch size $p$ sets the number of tokens $T = (I/p)^2$ for an $I \times I$ latent, directly controlling the compute-versus-quality trade-off.
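As a worked instance of this trade-off, take an $I \times I$ latent with patch size $p$ and token count $T$ (symbols chosen here for illustration), and assume the $I = 32$ latent that corresponds to a $256 \times 256$ image:

$$
T = \left(\frac{I}{p}\right)^2, \qquad
T\big|_{p=8} = 16, \quad T\big|_{p=4} = 64, \quad T\big|_{p=2} = 256 .
$$

Each halving of $p$ therefore quadruples the sequence length the transformer has to process.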

3. Scalability and Forward Pass Complexity

Scalability in DiT is systematically studied in terms of forward pass complexity, measured in GFLOPs, and its direct impact on generative quality, primarily via FID:

- Transformer Depth/Width: Increasing the number of blocks and/or channel dimensions consistently reduces FID. For instance, larger DiT-XL variants exhibit pronounced FID improvements over smaller DiT-B/L designs, independently of parameter count (Peebles et al., 2022).

- Token Count (Patch Size): Reducing the patch size $p$ increases the token count $T$, raising GFLOPs roughly in proportion (e.g., halving $p$ increases tokens by $4\times$ and compute by at least about $4\times$). Models at fixed GFLOPs, achieved by different mixes of depth/width or token count, yield similar FID.

- Empirical Relationship: FID improvement is tightly coupled to computational cost as opposed to the number of sampling steps, indicating that scaling model compute is fundamentally more effective than employing longer sampling schedules.
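The dependence of compute on depth, width, and token count in the list above can be approximated with a back-of-the-envelope estimate. The sketch below counts multiply-accumulates in the attention and MLP matrix multiplications only. It ignores normalization, softmax, embeddings, and conditioning layers, so it is an approximation and not the accounting used in the paper. The function names are hypothetical, and the configuration (28 blocks, width 1152, 32 x 32 latent) is assumed to match DiT-XL.

```python
def block_macs(num_tokens, width, mlp_ratio=4):
    """Approximate multiply-accumulates for one transformer block."""
    qkv_and_out = 4 * num_tokens * width * width       # Q, K, V and output projections
    attention = 2 * num_tokens * num_tokens * width    # QK^T scores and weighted sum of V
    mlp = 2 * mlp_ratio * num_tokens * width * width   # two linear layers of the MLP
    return qkv_and_out + attention + mlp


def model_macs(latent_size, patch_size, depth, width):
    """Approximate multiply-accumulates for the whole stack of blocks."""
    num_tokens = (latent_size // patch_size) ** 2
    return depth * block_macs(num_tokens, width)


for p in (8, 4, 2):
    giga = model_macs(32, p, depth=28, width=1152) / 1e9
    print(f"patch size {p}: about {giga:.1f} G multiply-accumulates")
```

Under these assumptions, halving $p$ from 4 to 2 multiplies the estimate by slightly more than 4, because the attention term grows with the square of the token count. The $p = 2$ estimate comes out at roughly 118 billion multiply-accumulates, in the same range as the 118.6 GFLOPs reported for DiT-XL/2.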

In summary, DiT upholds and extends the scaling laws observed in language modeling, with generation quality largely a function of aggregate model compute.

4. Experimental Performance and Metrics

On class-conditional ImageNet benchmarks, DiT-XL/2 achieves a state-of-the-art FID of 2.27 at $256 \times 256$ and 3.04 at $512 \times 512$, with competitive IS, sFID, and precision/recall. Importantly, these results are obtained with a forward-pass compute of 118.6 GFLOPs ($256 \times 256$) or 524.6 GFLOPs ($512 \times 512$) per sample, markedly lower than earlier U-Net baselines such as ADM.

Supporting experiments show that, holding model size constant, more sampling steps cannot close the gap to larger DiT models; e.g., even with quadrupled steps, a small DiT fails to match the FID of a larger variant. This underlines the importance of model capacity—and thus compute—over mere sampling schedule extension.

5. Architectural and Application Implications

DiT demonstrates that scaling transformer-based diffusion models in the latent space enables new SOTA regimes in image generation while maintaining compute efficiency. Key implications include:

- Architectural Unification: By decoupling image generation from the convolutional U-Net paradigm, DiT enables cross-domain architectural unification. The transformer backbone and patch-wise tokenization procedure align visual generative modeling with advances in language modeling, paving the way for vision-language interoperability and unified multi-modal backbones (Peebles et al., 2022).

- Extensibility: The DiT framework facilitates straightforward integration of conditioning signals and supports future adaptation into text-to-image generation frameworks. The isotropic transformer structure also admits direct application of future advances in efficient transformer modeling (e.g., windowed attention, linear transformers).

- Further Scaling: The negative FID–GFLOPs correlation curve does not saturate at the studied scales, suggesting substantial headroom for further scaling and performance improvement via larger models and/or more computational resource allocation.

6. Limitations and Prospective Research Directions

While DiT offers clear advantages in scalability and performance, several limitations and future development avenues are apparent:

- Quadratic Attention: Standard transformer self-attention retains quadratic complexity in token count. As resolution or feature count increases, optimization via local/global attention or sparse attention schemes becomes important (Peebles et al., 2022).

- Memory Efficiency: The absence of U-Net-style long skip connections between early and late layers (DiT blocks retain only their local residual connections) may pose challenges for information flow in specialized downstream applications.

- Domain Transfer and Hybrid Models: Varied modalities (text, audio) or conditional tasks (e.g., restoration, segmentation) may benefit from tailored tokenization and hybrid transformer–convolutional designs.

- Parallel and Efficient Inference: Recent developments (e.g., acceleration frameworks, quantization, and dynamic token routing) build upon the DiT backbone to further reduce generation latency and memory overhead.
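The quadratic attention cost named in the first limitation above can be made concrete by counting entries in a single attention score matrix as image resolution grows. This is a small sketch under stated assumptions: the function name is hypothetical, and the 8x VAE downsampling factor and patch size 2 are assumed defaults chosen to match the $256 \times 256$ to $32 \times 32$ latent mapping.

```python
def attention_entries(image_size, patch_size=2, vae_downsample=8):
    """Return (token count, entries in one T x T attention score matrix)."""
    latent_size = image_size // vae_downsample
    num_tokens = (latent_size // patch_size) ** 2
    return num_tokens, num_tokens ** 2


for resolution in (256, 512, 1024):
    tokens, entries = attention_entries(resolution)
    print(f"{resolution} px: {tokens} tokens, {entries} attention entries per head")
```

Doubling the image side quadruples the token count and multiplies the per-head attention matrix by 16, which is why local, sparse, or linear attention variants become attractive at higher resolutions.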

The DiT paradigm represents a pivotal transition in diffusion-based generative modeling, establishing transformers as the central architectural substrate for scalable, high-fidelity, and efficient image synthesis (Peebles et al., 2022). The design principles and scaling behaviors observed in this line of work continue to underpin subsequent advances in large-scale generative modeling across vision and multi-modal domains.