MemGen: Weaving Generative Latent Memory for Self-Evolving Agents (2509.24704v1)

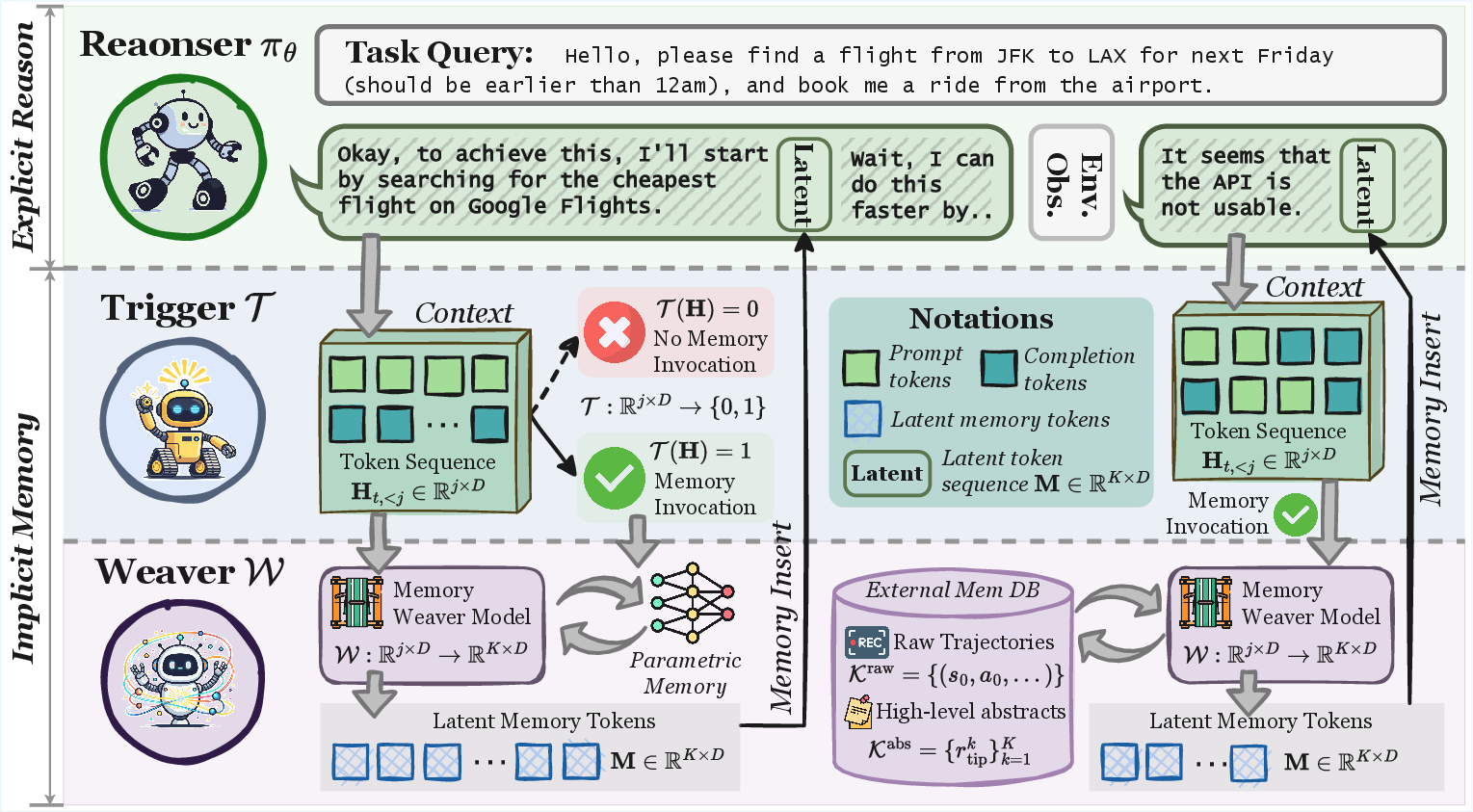

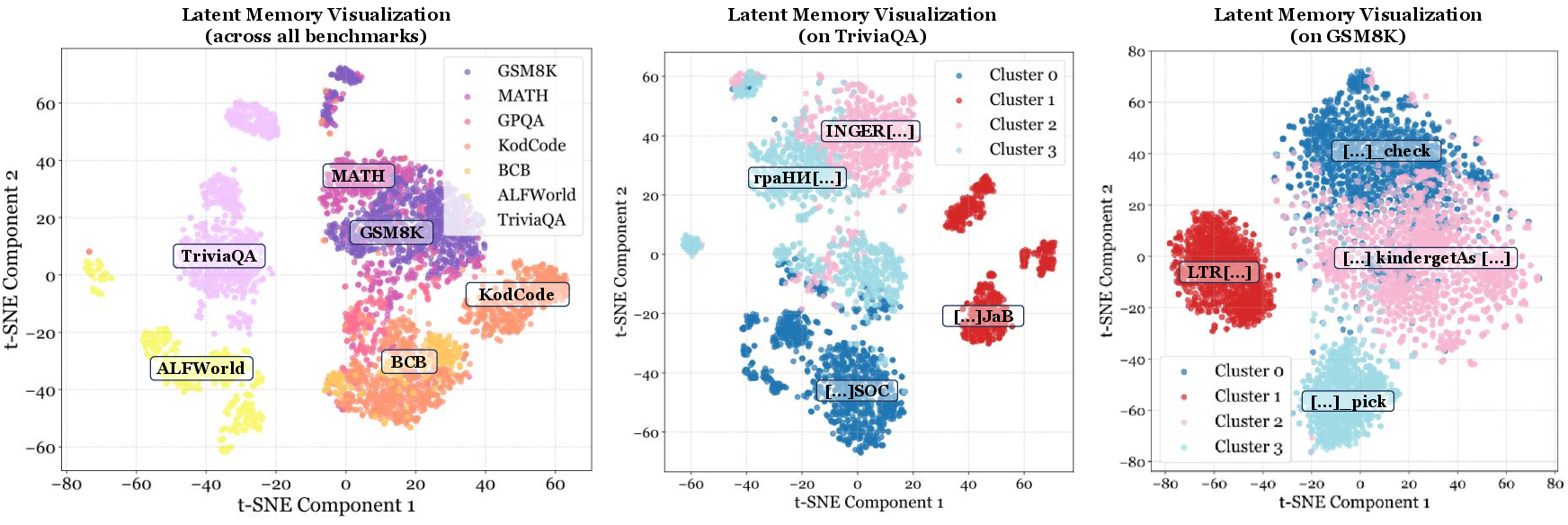

Abstract: Agent memory shapes how LLM-powered agents, akin to the human brain, progressively refine themselves through environment interactions. Existing paradigms remain constrained: parametric memory forcibly adjusts model parameters, and retrieval-based memory externalizes experience into structured databases, yet neither captures the fluid interweaving of reasoning and memory that underlies human cognition. To address this gap, we propose MemGen, a dynamic generative memory framework that equips agents with a human-esque cognitive faculty. It consists of a *memory trigger*, which monitors the agent's reasoning state to decide explicit memory invocation, and a *memory weaver*, which takes the agent's current state as stimulus to construct a latent token sequence as machine-native memory to enrich its reasoning. In this way, MemGen enables agents to recall and augment latent memory throughout reasoning, producing a tightly interwoven cycle of memory and cognition. Extensive experiments across eight benchmarks show that MemGen surpasses leading external memory systems such as ExpeL and AWM by up to 38.22%, exceeds GRPO by up to 13.44%, and exhibits strong cross-domain generalization ability. More importantly, we find that without explicit supervision, MemGen spontaneously evolves distinct human-like memory faculties, including planning memory, procedural memory, and working memory, suggesting an emergent trajectory toward more naturalistic forms of machine cognition.

Explain it Like I'm 14

Overview

This paper introduces MemGen, a new way to give AI agents a “memory” that works more like a human’s. Instead of just stuffing facts into the AI or copying notes from a database, MemGen lets the AI create and use its own short, internal “machine notes” while it is thinking. This helps the AI learn from experience, make better decisions, and avoid forgetting old knowledge.

What questions does the paper ask?

The authors focus on a simple idea: how can an AI’s memory work smoothly with its thinking, like a real mind? They ask:

- Can an AI decide for itself when it needs to “remember” something during problem-solving?

- Can it generate helpful internal memory (not just retrieve old text) to guide its next steps?

- Can this memory help across different tasks (like math, coding, science, and web search) without causing the AI to forget other things?

How does MemGen work?

MemGen adds two small “helpers” around a regular LLM (the main AI stays frozen and unchanged). Think of the main AI as a student, and the helpers as tools that improve study habits.

- First, why not just use standard methods?

  - Parametric memory: Fine-tuning a model’s parameters is like rewriting the student’s brain. It can help but may cause “catastrophic forgetting” (losing general knowledge).

  - Retrieval memory: Grabbing old notes from a database is like pasting extra text into the homework. It’s helpful, but clunky and not truly part of the AI’s thinking.

MemGen’s idea: let the AI generate short, machine-only memory on the fly that blends into its reasoning.

The two key parts

- Memory Trigger (when to recall)

  - What it does: Watches the AI’s ongoing thoughts as it writes each sentence. When it senses the AI is stuck or about to make a mistake, it decides to “invoke memory.”

  - How it learns: Using reinforcement learning (RL), a trial-and-error method where the trigger gets rewarded when its decision leads to better results. It also learns not to trigger too often.

- Memory Weaver (what to recall)

  - What it does: When triggered, it creates a short sequence of special “latent tokens” (think of them as tiny, machine-only note cards) based on the AI’s current state. These notes are not human-readable, but the AI understands them.

  - How it helps: The AI then continues thinking with these new notes woven into its internal process, improving planning, accuracy, and consistency.

  - Extra: The weaver can also combine info from outside sources (like a database) but turns it into those compact, machine-native notes so it fits smoothly into the AI’s thinking.
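To make the trigger/weaver idea concrete, here is a toy sketch of how the two helpers might sit around a frozen model while it writes. It is not the authors' implementation. The function names (`generate_with_memory`, `make_scripted_model`, `fire_once`, `two_notes`) are made up for illustration, the "model" just replays a script, and the latent notes are placeholder strings, whereas the real notes are machine-only tokens that are not human-readable. Checking the trigger only at the end of a sentence is also an assumption.

```python
from typing import Callable, List

Token = str  # stand-in only: real latent memory is not human-readable text


def generate_with_memory(
    next_token: Callable[[List[Token]], Token],
    trigger: Callable[[List[Token]], bool],
    weaver: Callable[[List[Token]], List[Token]],
    prompt: List[Token],
    max_new_tokens: int = 20,
    eos: Token = "<eos>",
) -> List[Token]:
    """Toy decode loop: frozen model + trigger + weaver (hypothetical names)."""
    context = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(context)  # the frozen main model proposes a token
        context.append(tok)
        if tok == eos:
            break
        # Assumption: the trigger is consulted only at sentence-like boundaries.
        if tok.endswith((".", "?", "!")) and trigger(context):
            # The weaver builds notes from the current state and they are
            # woven into the context the model keeps reasoning over.
            context.extend(weaver(context))
    return context


def make_scripted_model(script: List[Token]) -> Callable[[List[Token]], Token]:
    """Fake 'model' that replays a fixed script, then emits <eos>."""
    it = iter(script)
    return lambda context: next(it, "<eos>")


def fire_once(context: List[Token]) -> bool:
    """Toy trigger: invoke memory only if no latent note is present yet."""
    return not any(t.startswith("<latent:") for t in context)


def two_notes(context: List[Token]) -> List[Token]:
    """Toy weaver: always returns two placeholder latent notes."""
    return ["<latent:0>", "<latent:1>"]


out = generate_with_memory(
    make_scripted_model(["Step", "one.", "Step", "two."]),
    fire_once,
    two_notes,
    ["Q:"],
)
print(out)
```

In this toy run the notes are inserted once, right after the first sentence, and the main model's "weights" are never touched: only the trigger and weaver decide what extra context appears.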

Because the main AI stays frozen, MemGen avoids catastrophic forgetting. Only the small helper parts are trained.
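The summary above says the trigger is rewarded when its decisions lead to better results and also learns not to fire too often. One simple way to picture that trade-off is a reward that subtracts a small cost for frequent triggering. The formula below is an illustrative assumption, not the paper's actual objective; `trigger_reward` and the `penalty` parameter are hypothetical names.

```python
from typing import List


def trigger_reward(task_reward: float, decisions: List[bool], penalty: float = 0.1) -> float:
    """Task reward minus a cost proportional to how often memory was invoked.

    Assumed form for illustration only: the source states the trigger is
    discouraged from firing too often, but not how.
    """
    if not decisions:
        return task_reward
    invoke_rate = sum(decisions) / len(decisions)  # fraction of checks that fired
    return task_reward - penalty * invoke_rate


# Same task outcome, different trigger habits: the sparser trigger scores higher.
sparse = trigger_reward(1.0, [True, False, False, False])
dense = trigger_reward(1.0, [True, True, True, True])
print(sparse > dense)
```

With a reward shaped like this, a trigger that solves the task while invoking memory rarely is preferred over one that invokes it at every opportunity.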

What did they find?

Across eight benchmarks (math, coding, science questions, web trivia, and a game-like environment where the AI performs actions), MemGen showed strong results.

Here are the key takeaways:

- Better performance: MemGen beat leading external memory systems such as ExpeL and AWM by up to 38.22%, and outperformed a strong RL method (GRPO) by up to 13.44%.

- Works across domains: Training MemGen on one area (like math) also helped in others (like science and coding). The trigger learned to invoke memory more in tasks where it helped and less where it didn’t—so it didn’t “force” memory where it wasn’t useful.

- Less forgetting: Because the main AI is not rewritten, it kept old skills while learning new ones. This is important for long-term learning.

- Human-like memory roles emerged: By studying which “latent notes” mattered for which mistakes, the authors found three natural types of machine memory:

  - Planning memory: helps with overall strategy and step ordering.

  - Procedural memory: helps with doing things correctly, like using tools and formatting answers.

  - Working memory: helps keep track of what’s going on in a long problem so the AI stays consistent.

- Efficient: The extra thinking time stayed small; the system did not significantly slow the AI down.

Why is this important?

MemGen points toward AI that learns more like people do: thinking and remembering at the same time, and creating helpful “mental notes” on the spot. This can make AI assistants:

- more reliable (they plan better and make fewer careless mistakes),

- more adaptable (they improve in one area without hurting others),

- and safer to update (no need to rewrite the whole model and risk forgetting).

In practical terms, this could improve tutoring bots that solve math step-by-step, coding assistants that remember how to use tools, research helpers that keep context straight across long tasks, and more. It’s a step toward self-improving AI agents with memory that feels less like a bolted-on database and more like a real, flexible mind.
