- The paper introduces Memory Transfer Planning (MTP), a framework that uses LLMs to retrieve and adapt previously successful control code for robotic manipulation.

- It combines modular code generation, memory retrieval, and context-aware re-planning to transfer successful behaviors across simulated and real-world environments without model fine-tuning.

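- Experiments on the RLBench and CALVIN benchmarks show clear gains over the baselines (e.g., 64.4% vs. 39.3% mean success for VoxPoser on RLBench), and a real UR5 deployment raises success from 30% to 75%, highlighting the method’s robustness and practicality.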

Memory Transfer Planning: LLM-driven Context-Aware Code Adaptation for Robot Manipulation

Introduction

This paper presents Memory Transfer Planning (MTP), a framework for leveraging LLMs in robotic manipulation by reusing and adapting previously successful control code across diverse environments. MTP addresses the persistent challenge of generalization in LLM-driven robotic planning, where existing approaches often require environment-specific policy training or rely on static prompts, resulting in limited transferability and the need for manual intervention. The core innovation of MTP is its ability to retrieve relevant procedural knowledge from a memory of successful code examples and contextually adapt these to new environments, enabling robust re-planning without model fine-tuning.

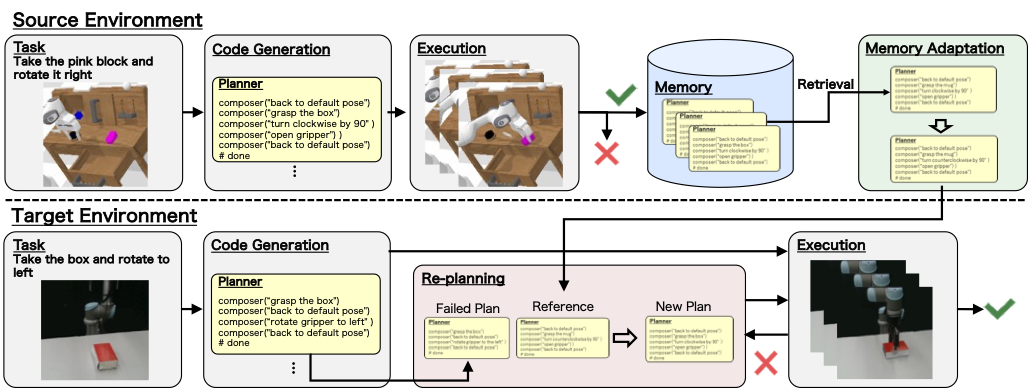

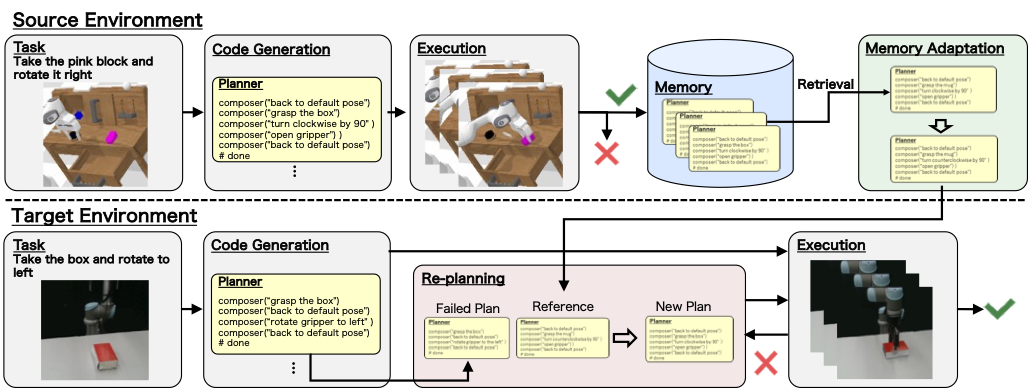

Figure 1: Overview of MTP. Code that succeeds in the source environment is stored in memory; when execution fails in the target environment, similar code examples are retrieved and adapted to support re-planning.

MTP Framework

MTP is structured around three primary components: Code Generation, Memory Retrieval, and Re-planning with Adapted Memory.

Code Generation

The initial code generation pipeline uses an LLM-based planner to decompose a free-form language instruction into sub-tasks. A composer module then processes each sub-task, invoking low-level language model programs (LMPs) that generate executable robot trajectories. This modular design lets the system translate high-level instructions into executable robot control code.
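The planner, composer, and LMP hierarchy can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: `plan`, `compose`, `generate_program`, the prompt strings, and the one-sub-task-per-line output format are all hypothetical, and `fake_llm` is a stub standing in for a real LLM call. Real LMPs would ultimately produce robot trajectories rather than the placeholder strings returned here.

```python
from typing import Callable, List

# Hypothetical LLM interface: prompt string in, generated text out.
LLM = Callable[[str], str]


def plan(instruction: str, llm: LLM) -> List[str]:
    """Planner: ask the LLM to split an instruction into sub-tasks, one per line."""
    response = llm(f"Decompose into sub-tasks, one per line:\n{instruction}")
    return [line.strip() for line in response.splitlines() if line.strip()]


def compose(subtask: str, llm: LLM) -> str:
    """Composer: ask the LLM for code that calls low-level LMPs for one sub-task."""
    return llm(f"Write code for sub-task: {subtask}")


def generate_program(instruction: str, llm: LLM) -> List[str]:
    """Full pipeline: plan once, then compose code for every sub-task in order."""
    return [compose(subtask, llm) for subtask in plan(instruction, llm)]


def fake_llm(prompt: str) -> str:
    """Stub LLM with canned answers, used only to make the sketch runnable."""
    if prompt.startswith("Decompose"):
        return "grasp the lid\nlift the lid\nplace the lid on the table"
    subtask = prompt.split(": ", 1)[1]
    return f"execute({subtask!r})"


program = generate_program("take the lid off the cup", fake_llm)
# program == ["execute('grasp the lid')", "execute('lift the lid')",
#             "execute('place the lid on the table')"]
```

Keeping the planner and composer as separate LLM calls means a failure can be traced to either the decomposition or the per-sub-task code.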

Memory Construction and Retrieval

Upon successful task execution, the generated planner-level code, along with its context (environment, instruction), is stored in a searchable memory. When a new task is encountered and the initial plan fails, MTP computes the similarity between the current instruction and those in memory using sentence-transformer embeddings and cosine similarity. The top-k most relevant code examples are retrieved for potential adaptation.
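A dependency-free sketch of the memory store and top-k retrieval is shown below. It assumes a simple entry schema (`MemoryEntry` with instruction, environment, and code fields; all names are hypothetical) and replaces the sentence-transformer embedding with a bag-of-words count vector so that the example runs without downloading a model. In practice, instructions would be embedded with a sentence-transformer model (for example, via `SentenceTransformer.encode` from the `sentence-transformers` library). The cosine-similarity ranking is the same either way.

```python
import math
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Stand-in for a sentence-transformer embedding: bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class MemoryEntry:
    instruction: str
    environment: str
    code: str  # planner-level code from a successful execution


@dataclass
class Memory:
    entries: List[MemoryEntry] = field(default_factory=list)

    def store(self, entry: MemoryEntry) -> None:
        """Call only after a task has executed successfully."""
        self.entries.append(entry)

    def retrieve(self, instruction: str, k: int = 3) -> List[Tuple[float, MemoryEntry]]:
        """Return the k entries whose instructions are most similar to the query."""
        query = embed(instruction)
        scored = [(cosine(query, embed(e.instruction)), e) for e in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


memory = Memory()
memory.store(MemoryEntry("open the drawer", "RLBench", "open_drawer_code"))
memory.store(MemoryEntry("take the lid off the cup", "RLBench", "lid_code"))
memory.store(MemoryEntry("stack the blocks", "RLBench", "stack_code"))
top = memory.retrieve("take lid off cup", k=2)
# top[0] is the "take the lid off the cup" entry, with similarity 1/sqrt(2)
```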

Memory Adaptation and Re-planning

The retrieved code is not directly reused; instead, it undergoes context-aware adaptation to align with the specifics of the target environment. This adaptation is performed by prompting the LLM with both the retrieved code and examples from the target environment, allowing the model to retarget parameters, adjust pre/post-conditions, and resolve environment-specific differences.
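In practice, the adaptation step amounts to prompt construction. The sketch below shows one plausible layout. It assumes that the LLM sees target-environment examples before the retrieved code, followed by an explicit rewrite instruction. The function name `build_adaptation_prompt` and all prompt wording are hypothetical; this is not the paper's actual prompt.

```python
from typing import List


def build_adaptation_prompt(retrieved_code: str, target_examples: List[str],
                            instruction: str) -> str:
    """Assemble a prompt asking an LLM to rewrite retrieved code for the target
    environment. The section headers and wording are a hypothetical template."""
    examples = "\n\n".join(target_examples)
    return (
        "# Examples of valid code in the target environment:\n"
        f"{examples}\n\n"
        "# Code that succeeded on a similar task in another environment:\n"
        f"{retrieved_code}\n\n"
        "# Rewrite the code above for the target environment: retarget object\n"
        "# names and parameters, and adjust pre/post-conditions as needed.\n"
        f"# Task: {instruction}\n"
    )


prompt = build_adaptation_prompt(
    retrieved_code="grasp('lid')\nlift('lid')",
    target_examples=["move_to('drawer')\npull('drawer_handle')"],
    instruction="take the lid off the cup",
)
```

The adapted code would then be obtained by sending `prompt` to the LLM. Placing the target examples first is a design choice intended to ground the model in the target environment's API before it reads code written for a different one.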

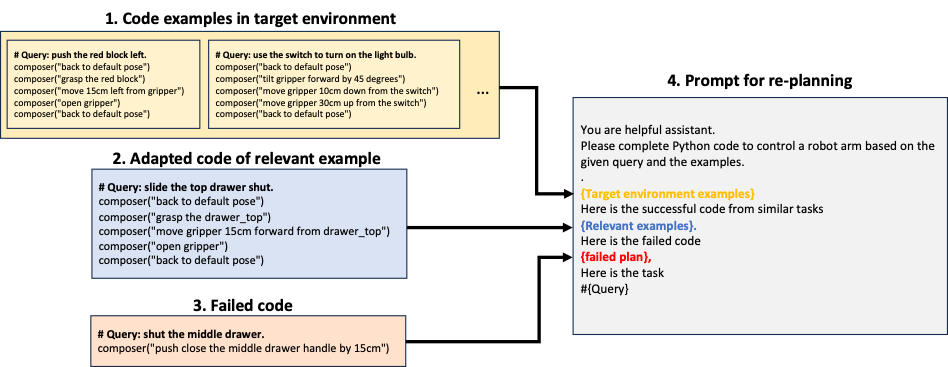

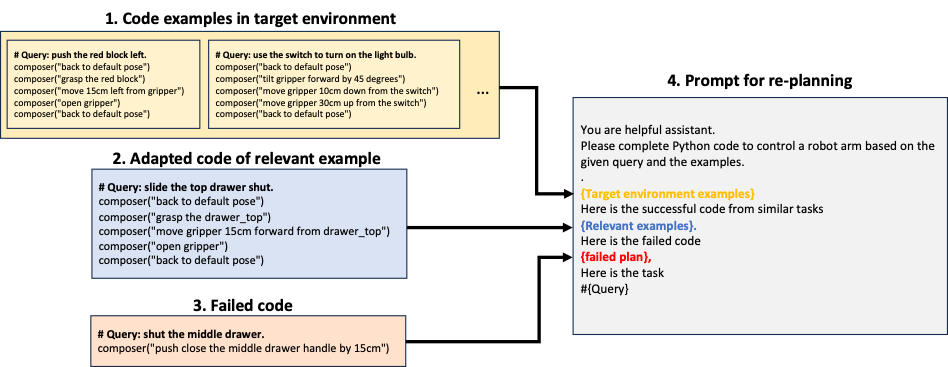

Figure 3: Prompt structure for re-planning, incorporating target environment examples (yellow), adapted memory code (blue), and failed code (red).

Re-planning is then performed by supplying the adapted code as in-context examples to the LLM, which generates a new candidate plan. This process is iterated, using increasingly less similar memory entries if necessary, until success or a maximum number of trials is reached.
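The iterative loop can be sketched as follows, under these assumptions: `adapt`, `generate`, and `execute` are hypothetical callables standing in for the LLM adaptation call, the LLM re-planning call, and robot execution; the most recent failed plan is passed back to the generator, mirroring the failed-code section of the prompt in Figure 3; and `max_trials` caps how many memory entries are tried. The stubs exist only to make the sketch runnable.

```python
from typing import Callable, List, Optional, Tuple


def replan_with_memory(
    instruction: str,
    initial_plan: str,
    retrieved: List[Tuple[float, str]],        # (similarity, stored code)
    adapt: Callable[[str], str],               # hypothetical LLM adaptation call
    generate: Callable[[str, str, str], str],  # hypothetical LLM re-planning call
    execute: Callable[[str], bool],            # hypothetical executor, True on success
    max_trials: int = 3,
) -> Optional[str]:
    """Try memory entries from most to least similar until a plan succeeds."""
    failed = initial_plan
    ordered = sorted(retrieved, key=lambda pair: pair[0], reverse=True)
    for _, code in ordered[:max_trials]:
        adapted = adapt(code)
        # In-context inputs: the task, the adapted memory code, the last failed plan.
        candidate = generate(instruction, adapted, failed)
        if execute(candidate):
            return candidate
        failed = candidate
    return None  # trial budget or memory exhausted


# Stubs standing in for the LLM and the robot, for illustration only.
seen_failures: List[str] = []


def stub_adapt(code: str) -> str:
    return code + "  # adapted"


def stub_generate(instruction: str, adapted: str, failed: str) -> str:
    seen_failures.append(failed)
    return adapted


def stub_execute(plan: str) -> bool:
    return plan.startswith("lift_lid_away")


result = replan_with_memory(
    "take the lid off the cup", "reseal_cup()",
    [(0.9, "push_lid()"), (0.4, "lift_lid_away()")],
    stub_adapt, stub_generate, stub_execute,
)
# result == "lift_lid_away()  # adapted"; the most similar entry was tried first and failed
```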

Experimental Evaluation

Simulation Benchmarks

MTP was evaluated on RLBench and CALVIN, two established robotic manipulation benchmarks. On RLBench, MTP achieved a mean success rate of 64.4%, outperforming VoxPoser (39.3%), Retry (55.6%), and Self-reflection (60.7%). On CALVIN, MTP reached 67.3% with single instructions and 59.3% with paraphrased instructions, surpassing the baselines in both settings. Although paraphrasing lowers its success rate by 8.0 points, MTP still outperforms the baselines under this linguistic variation, indicating robustness to instruction paraphrasing.
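The RLBench margins and the CALVIN paraphrasing drop follow directly from the reported numbers. The snippet below simply performs the subtractions, with values in percentage points.

```python
# Reported mean success rates (%) on RLBench.
mtp_rlbench = 64.4
baselines = {"VoxPoser": 39.3, "Retry": 55.6, "Self-reflection": 60.7}

# Margin of MTP over each baseline, in percentage points.
margins = {name: round(mtp_rlbench - score, 1) for name, score in baselines.items()}
# {'VoxPoser': 25.1, 'Retry': 8.8, 'Self-reflection': 3.7}

# CALVIN: single-instruction vs. paraphrased-instruction success (%).
paraphrase_drop = round(67.3 - 59.3, 1)  # 8.0
```

The smallest RLBench margin (3.7 points over Self-reflection) is worth noting alongside the much larger gap over VoxPoser.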

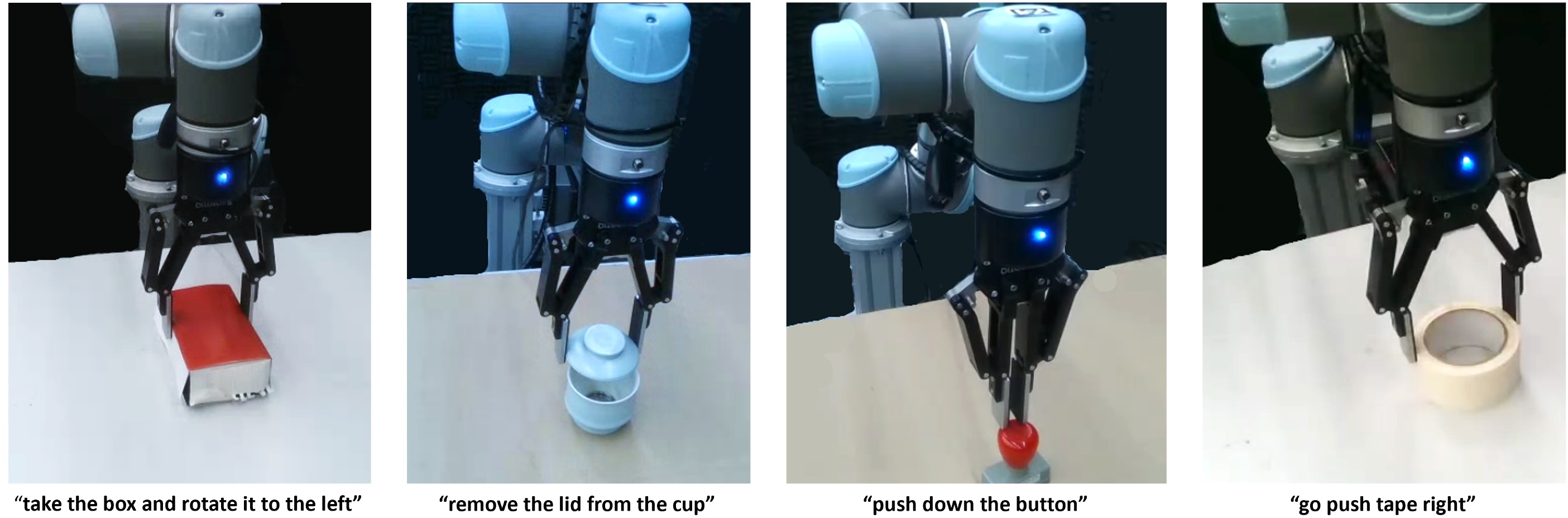

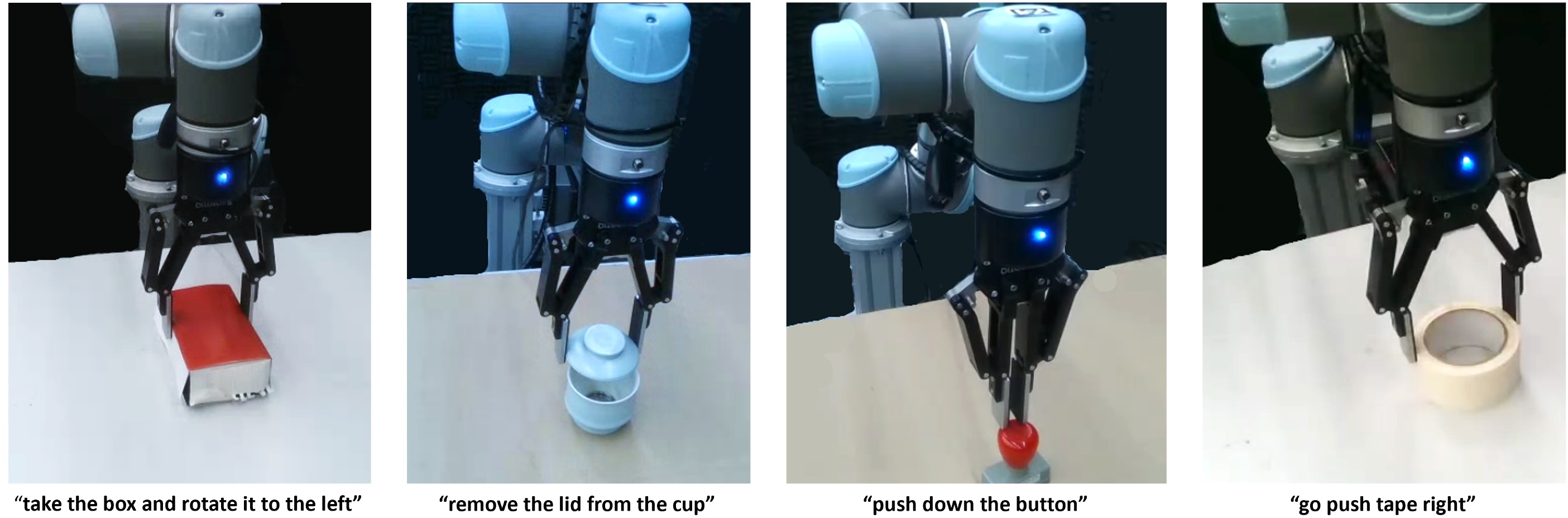

Real-World Robotic Deployment

On a UR5 manipulator equipped with a Robotiq 2F gripper and LangSAM-based segmentation, MTP achieved a 75% overall success rate across four representative tasks, compared to 30% for VoxPoser. The system was able to transfer memory constructed in simulation to real-world tasks without retraining, highlighting the practical utility of the approach.

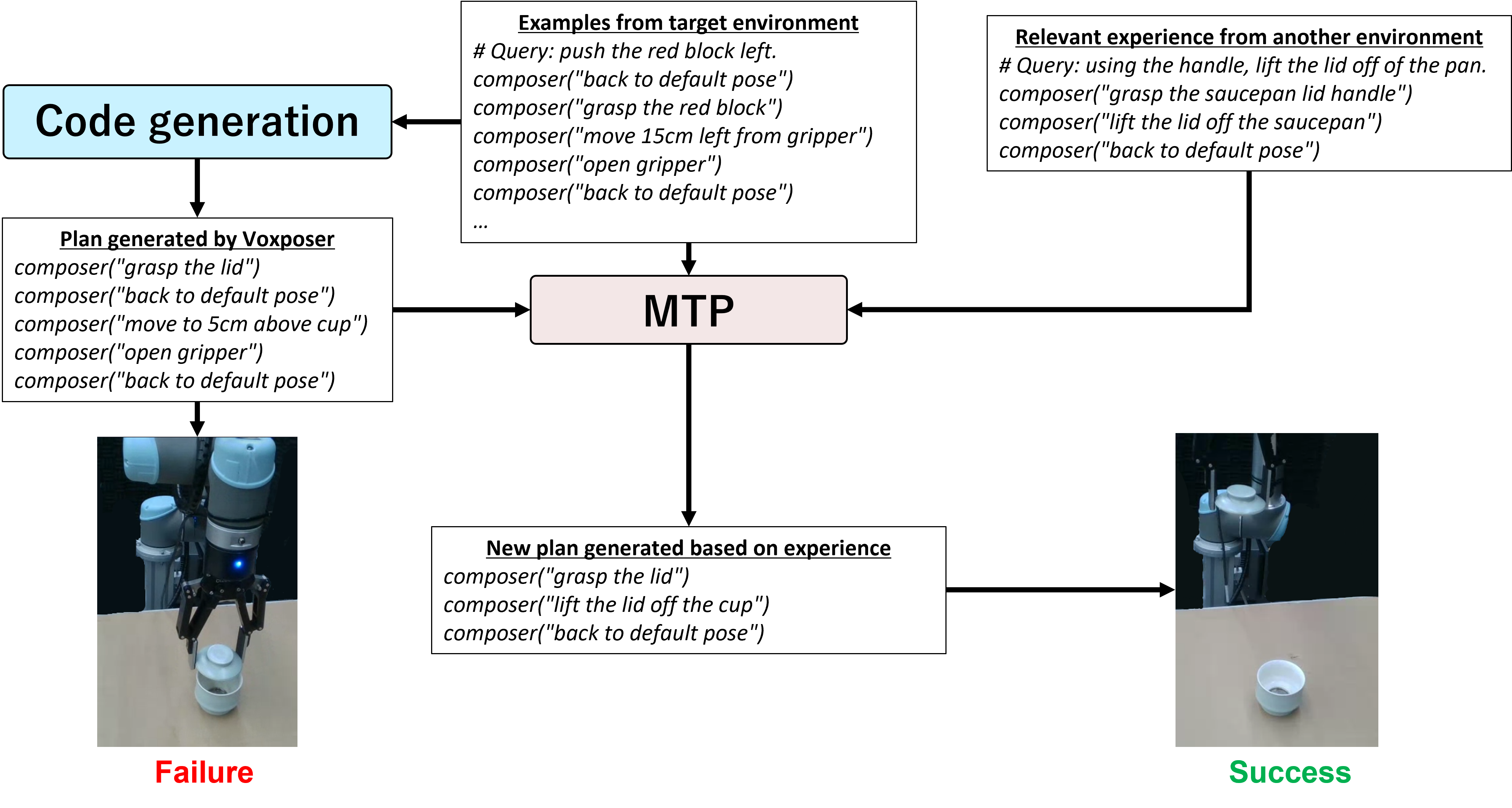

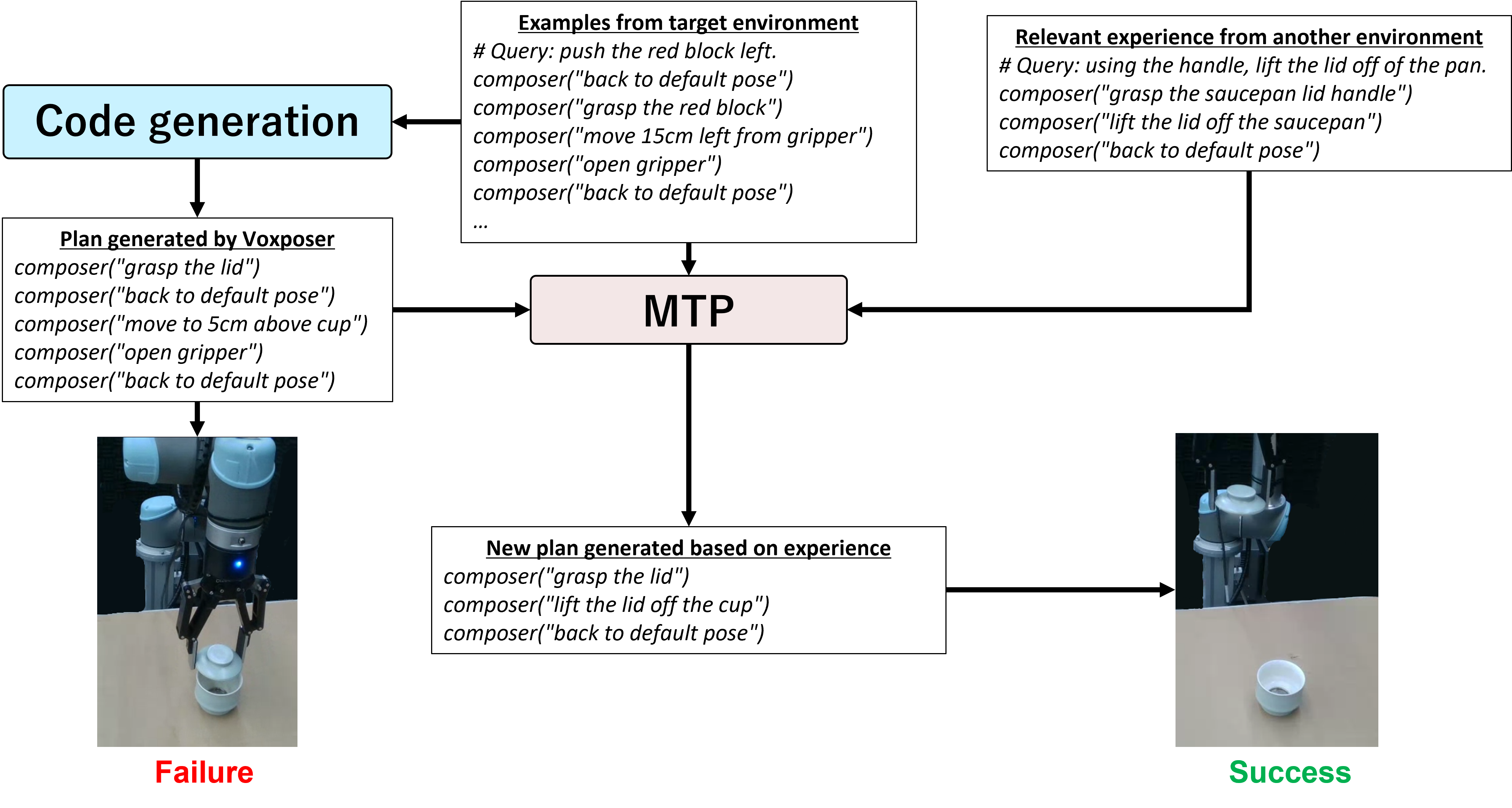

Figure 5: Memory-guided re-planning on UR5 for the "Take Lid Off Cup" task. The initial plan fails by resealing the cup; the corrected plan succeeds by lifting the lid away.

Figure 2: UR5 executing a variety of real-world manipulation tasks using MTP.

Qualitative Analysis

Comparative plan analysis revealed that MTP-generated plans are more complete and contextually appropriate than those from static code generation. For example, in the "rotate box" task, MTP included both the rotation and proper placement, while VoxPoser left the object suspended. In pushing tasks, MTP adapted its strategy (e.g., switching from push to grasp-and-place) based on prior experience, demonstrating flexible problem-solving.

Ablation Studies

Ablation experiments confirmed the necessity of memory adaptation: removing this component led to a significant drop in success rates (RLBench: 64.4% → 49.3%; CALVIN: 67.3% → 60.0%). Further, the quality and diversity of the memory were shown to impact transfer effectiveness, with RLBench-derived memory yielding higher performance across both benchmarks.
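To put the ablation in perspective, the absolute drop and the drop relative to the full model's success rate can be computed from the reported numbers, as shown below. The helper name `ablation_drops` is illustrative only.

```python
from typing import Dict, Tuple


def ablation_drops(full: Dict[str, float],
                   ablated: Dict[str, float]) -> Dict[str, Tuple[float, float]]:
    """Per benchmark: (absolute drop in points, drop as % of the full model's rate)."""
    result = {}
    for bench, score in full.items():
        drop = score - ablated[bench]
        result[bench] = (round(drop, 1), round(100 * drop / score, 1))
    return result


drops = ablation_drops(
    full={"RLBench": 64.4, "CALVIN": 67.3},
    ablated={"RLBench": 49.3, "CALVIN": 60.0},  # without memory adaptation
)
# {'RLBench': (15.1, 23.4), 'CALVIN': (7.3, 10.8)}
```

On RLBench, removing adaptation costs nearly a quarter of the full model's successes, roughly twice the relative loss observed on CALVIN.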

Implications and Future Directions

MTP demonstrates that procedural knowledge, encoded as successful code traces, can be effectively reused and adapted via LLMs to enhance generalization in robotic planning. The approach is parameter-efficient, requiring no model updates, and can be integrated plug-and-play into existing LLM-agent pipelines. The strong empirical results, especially in sim-to-real transfer, suggest that memory-based re-planning is a viable path toward scalable, adaptable embodied agents.

However, the current implementation relies on static, text-based memory and does not incorporate dynamic memory management or multimodal grounding. Future work should explore scalable memory architectures, online memory growth/pruning, and integration of visual or sensorimotor modalities to further improve adaptability and robustness in open-ended environments.

Conclusion

Memory Transfer Planning provides a principled framework for context-aware code adaptation in LLM-driven robotic manipulation. By leveraging and adapting prior successful code, MTP achieves higher task success rates than the evaluated baselines across simulated and real-world settings. The results, including the ablation that removes memory adaptation, support the central hypothesis that memory-based re-planning with explicit adaptation is essential for robust, transferable robotic intelligence. Addressing current limitations in memory management and multimodal integration will be critical for advancing the practical deployment of such systems in complex, dynamic environments.