LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with LLMs

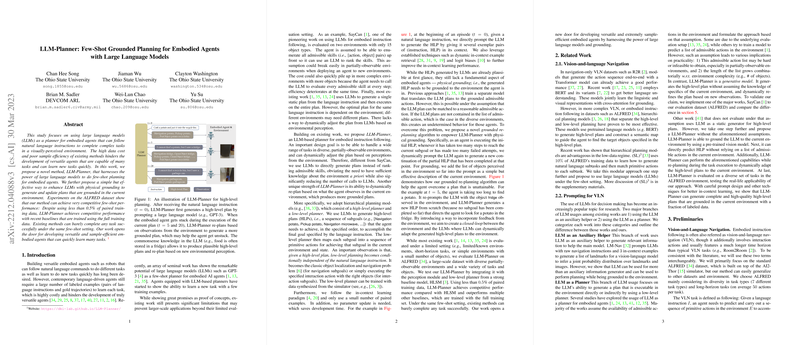

The paper "LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with LLMs" proposes using large language models (LLMs) such as GPT-3 as planners for embodied agents. It targets two limitations of existing methods: poor data efficiency and limited adaptability in complex, partially observable environments. The proposed LLM-Planner performs few-shot grounded planning, reducing the dependence on the large datasets typically required to train such agents.

Methodology

The paper presents LLM-Planner as a generative planner, in contrast to prior methods that require an exhaustive list of admissible actions and then rank them. Because it generates high-level plans directly, LLM-Planner does not need to enumerate every skill, which makes it more practical in environments with many diverse object types. The approach has three key components:

- Few-Shot High-Level Planning: LLM-Planner uses in-context learning so that an LLM can generate high-level plans from only a handful of examples. The in-context examples are retrieved dynamically with a k-nearest-neighbor (kNN) mechanism, which selects the examples most similar to the task at hand.

- Grounding and Dynamic Re-Planning: The model introduces a grounded re-planning mechanism that lets the agent revise its plan as it perceives more of the environment. When the agent encounters an obstacle or is making inefficient progress, it requests a new plan that takes into account the objects it has observed so far, grounding the plan in its current context.

- Integration with Existing Models: LLM-Planner is designed to plug into existing hierarchical models such as HLSM, reusing their pre-trained low-level planners and perception modules to turn the high-level plan into executable actions.
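To make the retrieval step concrete, the following is a minimal sketch of kNN in-context example selection and prompt assembly. It is illustrative only: the bag-of-words `embed` function stands in for a real sentence encoder, and the example pool, the prompt wording, and all function names (`retrieve_knn`, `build_prompt`) are assumptions made for this sketch, not the paper's implementation.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words embedding; an assumed stand-in for a sentence encoder."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve_knn(query, examples, k):
    """Return the k examples whose instructions are most similar to the query."""
    q = embed(query)
    ranked = sorted(
        examples, key=lambda ex: cosine(q, embed(ex["instruction"])), reverse=True
    )
    return ranked[:k]

def build_prompt(query, examples, k=2, visible_objects=()):
    """Assemble a few-shot prompt (hypothetical format) from retrieved examples."""
    lines = ["Create a high-level plan for completing a household task."]
    for ex in retrieve_knn(query, examples, k):
        lines.append(f"Task: {ex['instruction']}")
        lines.append(f"Plan: {', '.join(ex['plan'])}")
    lines.append(f"Task: {query}")
    if visible_objects:
        # Listing observed objects is what grounds the prompt in the scene.
        lines.append(f"Visible objects: {', '.join(visible_objects)}")
    lines.append("Plan:")
    return "\n".join(lines)

# Hypothetical example pool; instructions and plans are invented for illustration.
EXAMPLES = [
    {"instruction": "put a clean mug in the cabinet",
     "plan": ["Navigation mug", "Pickup mug", "Navigation cabinet", "Put cabinet"]},
    {"instruction": "heat a potato and put it on the table",
     "plan": ["Navigation potato", "Pickup potato", "Navigation microwave"]},
    {"instruction": "put a book on the sofa",
     "plan": ["Navigation book", "Pickup book", "Navigation sofa", "Put sofa"]},
]

if __name__ == "__main__":
    print(build_prompt("put a clean cup in the cabinet", EXAMPLES,
                       k=2, visible_objects=["fridge", "mug"]))
```

The prompt returned by `build_prompt` would then be sent to the LLM, and the generated plan handed to the low-level planner. Swapping `embed` for a learned encoder changes only the similarity function; the retrieval and prompt logic stay the same.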

Experimental Evaluation

LLM-Planner is evaluated on the ALFRED benchmark, which features diverse and complex tasks in a simulated household environment. The results show that LLM-Planner is competitive with baselines trained on the full dataset, even though it uses less than 0.5% of the training data. Notably, the grounded re-planning mechanism significantly improves task success rates, which illustrates the value of adapting the plan to environmental feedback.
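The grounded re-planning behavior can be sketched as a simple control loop. This is a toy illustration under stated assumptions: `toy_planner` is a rule-based stand-in for the LLM call, `ToyEnv` is a hypothetical environment, and re-planning is triggered only by a failed subgoal, whereas the summary above also mentions inefficiency as a trigger.

```python
def toy_planner(instruction, completed, seen_objects):
    """Stand-in for an LLM call: aims for a cup, but falls back to an observed mug."""
    target = "mug" if "mug" in seen_objects else "cup"
    full_plan = [("Navigation", target), ("Pickup", target)]
    # Skip subgoals that have already been completed.
    return [step for step in full_plan if step not in completed]

class ToyEnv:
    """Hypothetical environment that contains a mug and a table, but no cup."""
    objects = {"mug", "table"}

    def observe(self):
        return set(self.objects)

    def execute(self, subgoal):
        _action, obj = subgoal
        # A subgoal succeeds only if its target object exists in the scene.
        return obj in self.objects

def run_episode(instruction, planner, env, max_replans=3):
    """Execute a plan, re-planning with observed objects whenever a subgoal fails."""
    seen, completed, replans = set(), [], 0
    plan = planner(instruction, completed, sorted(seen))
    while plan:
        subgoal = plan[0]
        ok = env.execute(subgoal)
        seen |= env.observe()  # accumulate everything perceived so far
        if ok:
            completed.append(subgoal)
            plan = plan[1:]
        elif replans < max_replans:
            replans += 1
            # Ground the new plan in the objects actually observed.
            plan = planner(instruction, completed, sorted(seen))
        else:
            break
    return completed, replans

if __name__ == "__main__":
    done, n = run_episode("bring a cup", toy_planner, ToyEnv())
    print(done, n)
```

In this toy run the initial plan targets a cup that does not exist, the first subgoal fails, and the single re-plan switches to the mug the agent has observed. The `max_replans` cap is an added safeguard for the sketch so that the loop always terminates.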

Implications and Future Directions

The implications of this paper are twofold. Practically, LLM-Planner offers a more data-efficient solution for training embodied agents, reducing the dependency on large datasets while maintaining comparable performance. Theoretically, it opens up new avenues for exploring how LLMs can be leveraged in embodied tasks, particularly in enhancing adaptability and grounding in dynamic environments.

Future research could optimize prompt designs specifically for planning tasks, explore other types of LLMs such as those fine-tuned on code (e.g., Codex), and incorporate more sophisticated grounding methods that capture finer details of interactions between the environment and its objects. Improving the object detection components and low-level controllers could also relieve some of the observed performance bottlenecks, yielding more robust and reliable agents.

In summary, the LLM-Planner paper presents a promising method for improving the efficiency and adaptability of embodied AI by using LLMs for few-shot grounded planning. The work points toward embodied agents that operate more effectively in real-world scenarios, learning from a few examples and adjusting their plans as their environments change.