- The paper introduces a novel RL-free framework that leverages human video demonstrations to extract and refine bimanual non-prehensile primitives.

- The paper's three-stage pipeline optimizes hand trajectories with geometric and contact-aware refinement, achieving an 86.7% success rate across multiple tasks.

- The paper demonstrates robust category-level generalization with parameterized primitives adaptable to unseen objects, outperforming RL-based baselines.

BiNoMaP: Learning Category-Level Bimanual Non-Prehensile Manipulation Primitives

Introduction and Motivation

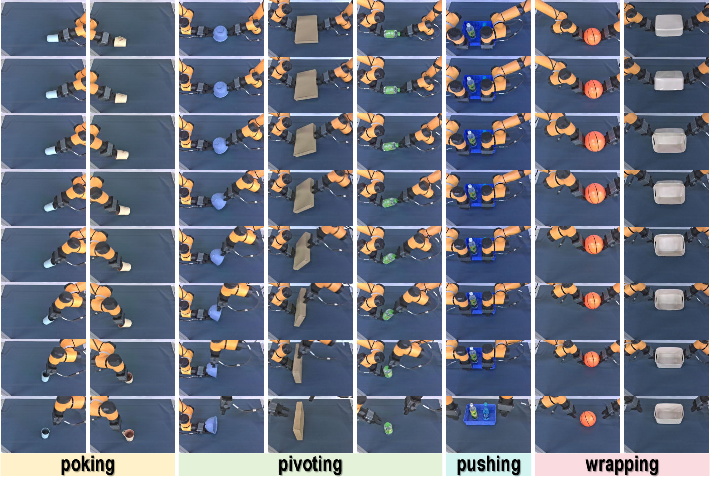

BiNoMaP introduces a novel RL-free framework for learning bimanual non-prehensile manipulation primitives directly from human video demonstrations. Non-prehensile manipulation, encompassing actions such as pushing, poking, pivoting, and wrapping, is essential for handling objects that are ungraspable, fragile, or lack sufficient geometry for reliable grasping. Existing approaches predominantly rely on single-arm setups and reinforcement learning (RL), often requiring structured environments and extensive simulation, which limits generalization and incurs significant sim-to-real gaps. BiNoMaP addresses these limitations by leveraging dual-arm coordination and a three-stage pipeline that extracts, refines, and parameterizes manipulation primitives, enabling robust category-level generalization.

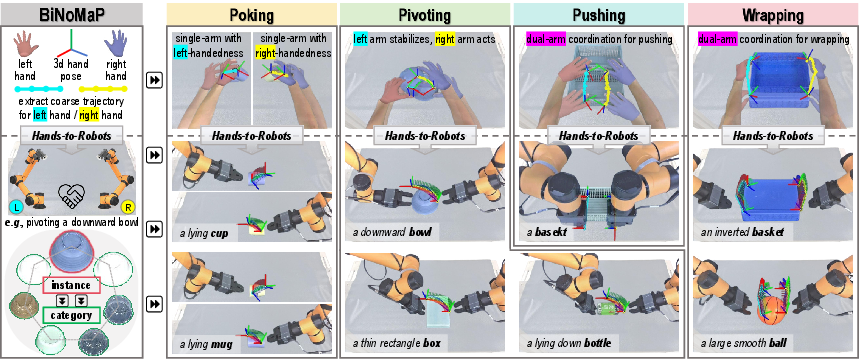

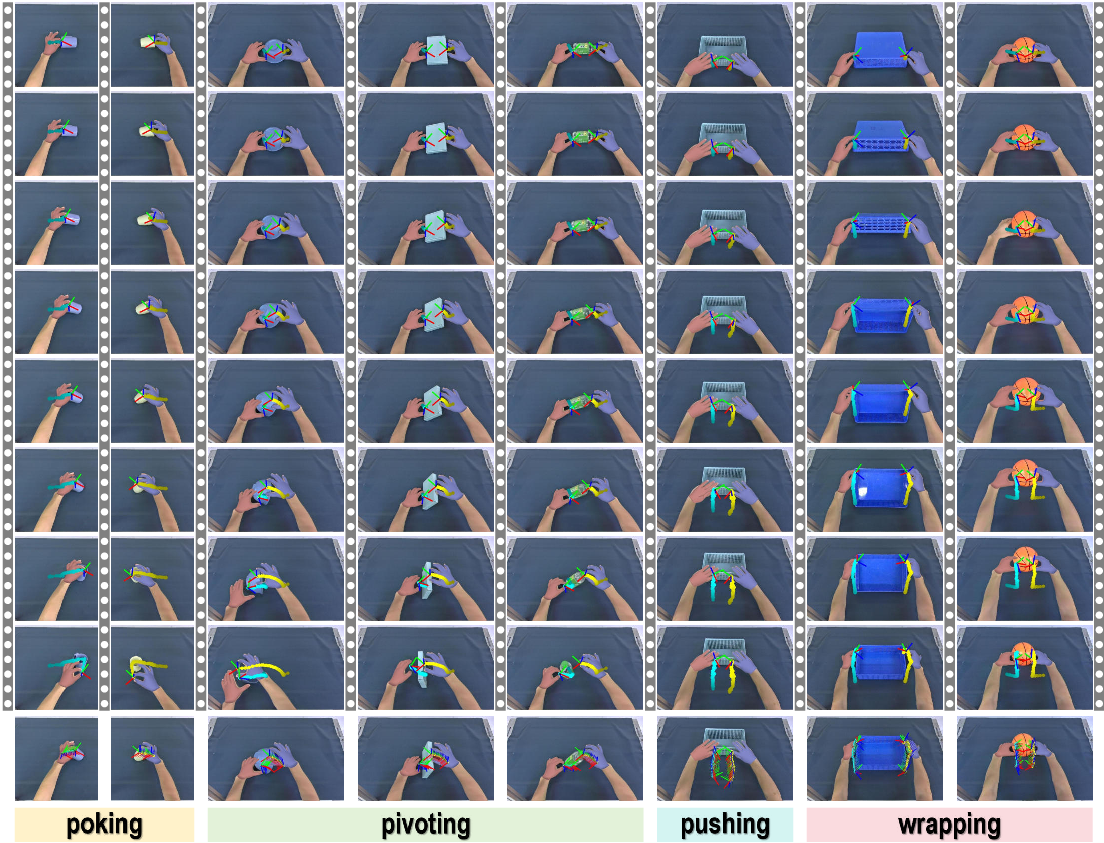

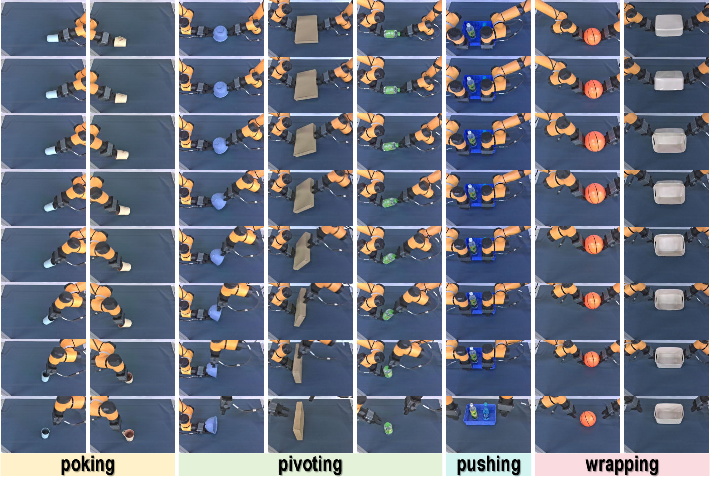

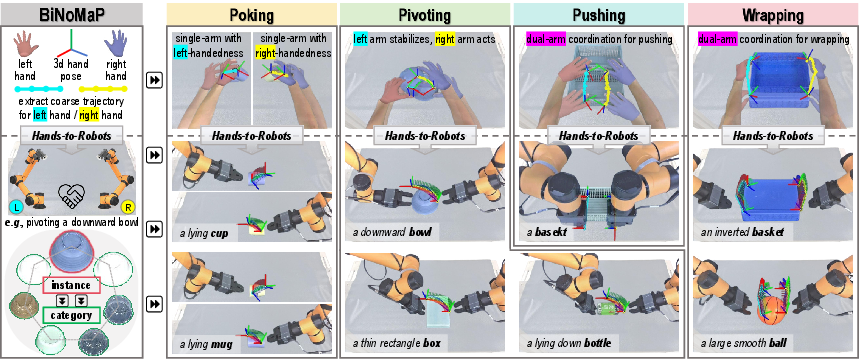

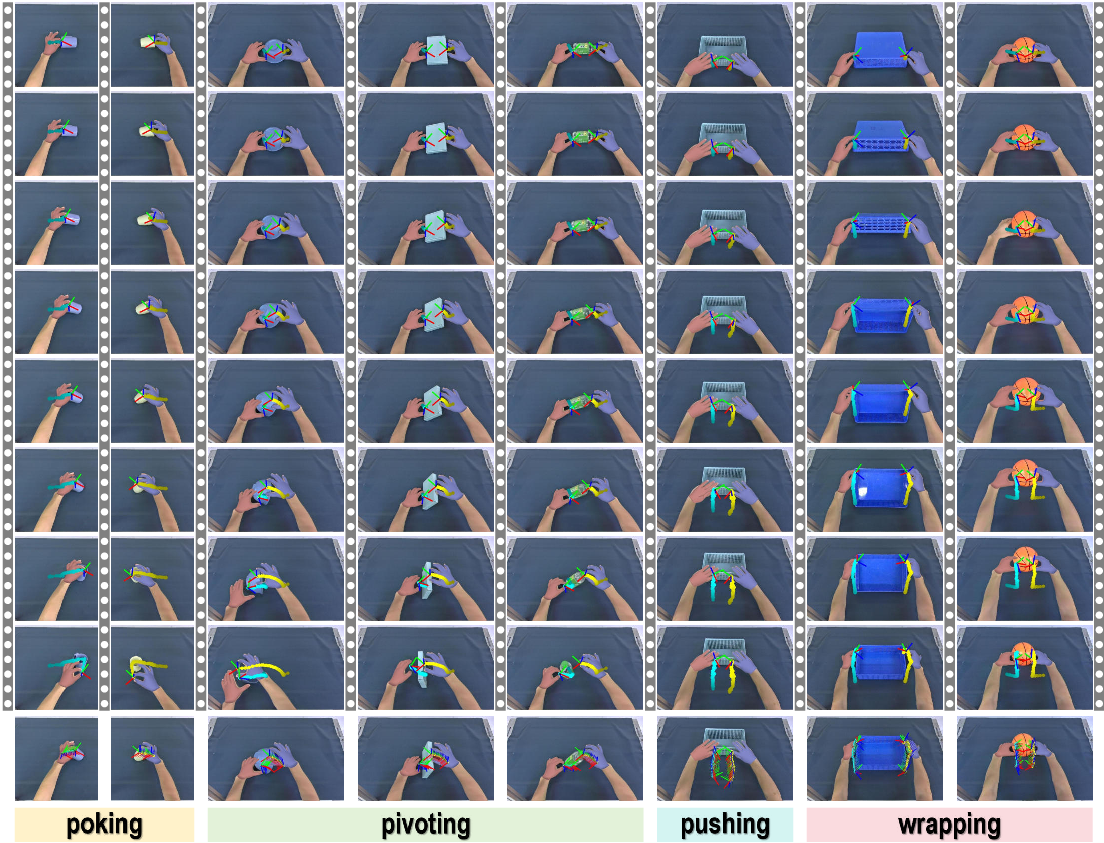

Figure 1: BiNoMaP extracts coarse hand trajectories from human video demonstrations, refines them for dual-arm robots, and parameterizes skills for instance- and category-level generalization across four non-prehensile skills.

Methodology

Three-Stage RL-Free Learning Pipeline

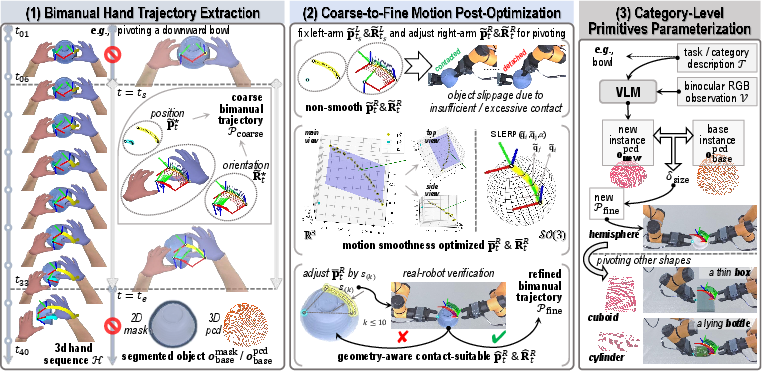

BiNoMaP comprises three sequential stages:

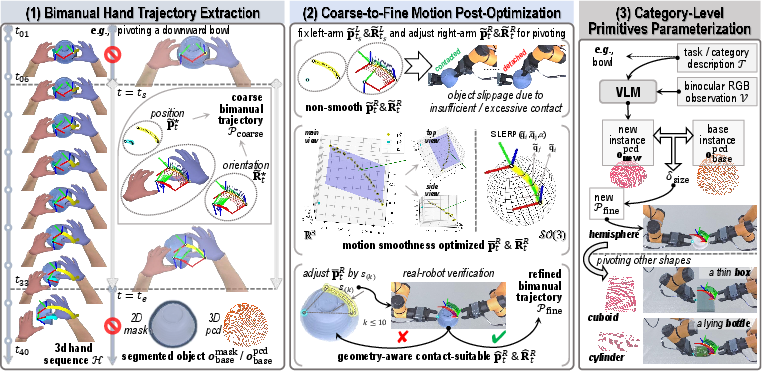

- Bimanual Hand Trajectory Extraction: Human demonstrations are recorded via stereo RGB cameras. 3D hand shapes and poses are reconstructed using WiLoR, and representative contact points are mapped to the robot's workspace. End-effector positions and orientations are approximated by aligning hand joints with gripper spindles, yielding coarse bimanual trajectories.

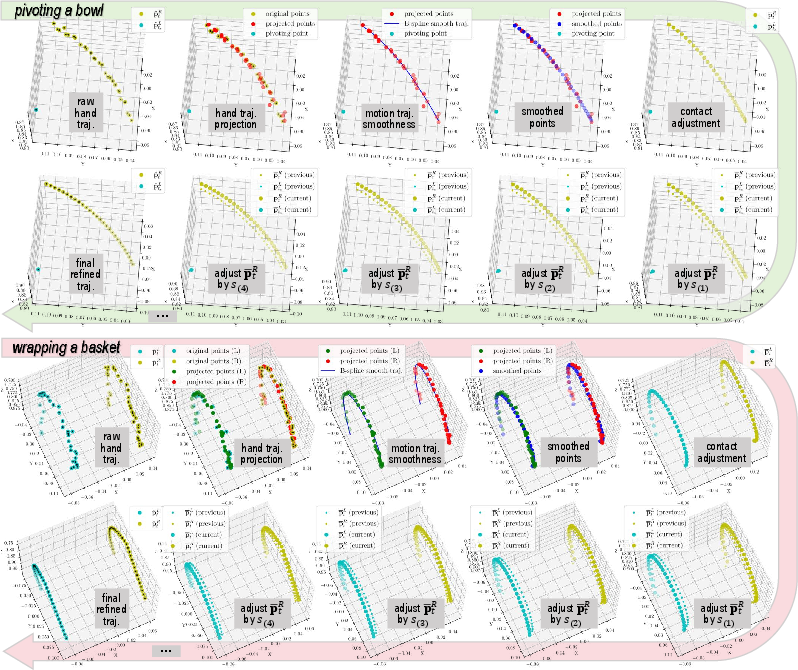

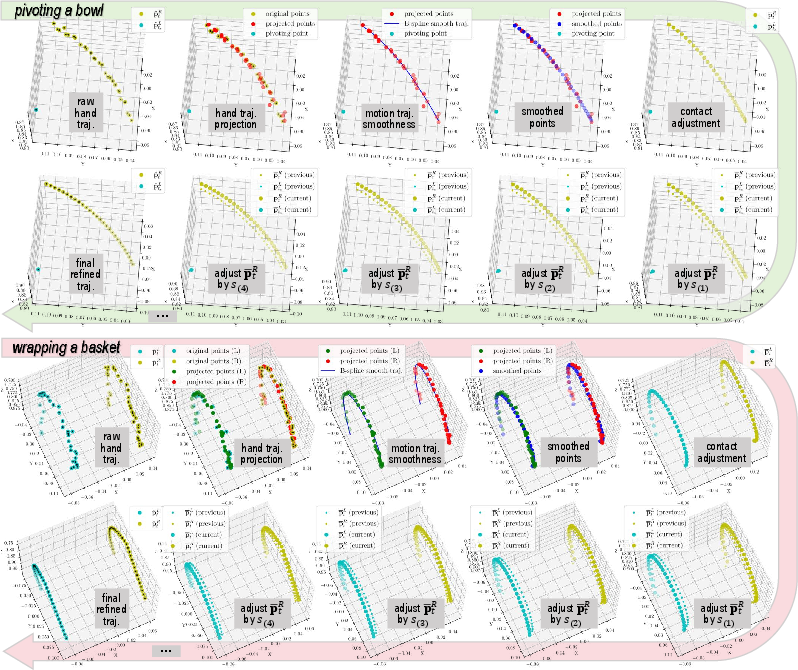

- Coarse-to-Fine Motion Post-Optimization: Extracted trajectories are refined for smoothness and stable contact. Positional smoothing is enforced via coplanarity constraints and B-spline filtering, while rotational transitions are interpolated using SLERP. Geometry-aware iterative contact adjustment ensures millimeter-level precision, adapting the trajectory to the object's point cloud and verifying success via real-robot rollouts.

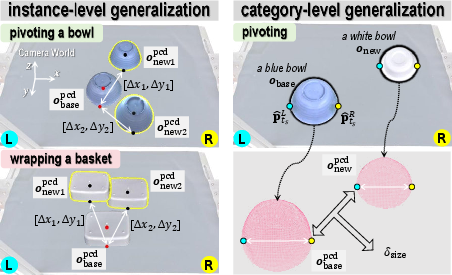

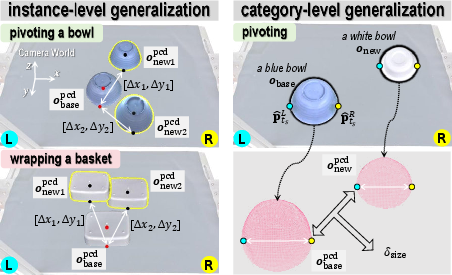

- Category-Level Primitive Parameterization: Optimized instance-level primitives are parameterized by object-relevant geometric attributes (e.g., size, diameter, width). Dimensional variations are computed from segmented object point clouds, and trajectory scaling is applied to generalize skills across unseen category instances.

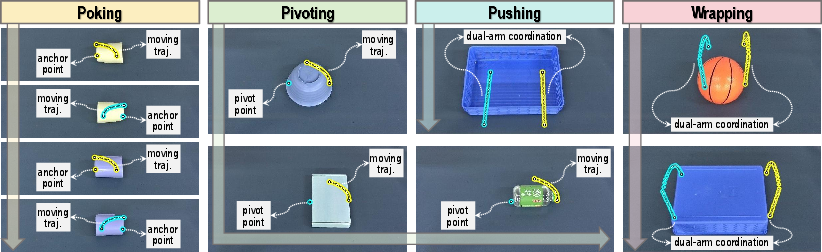

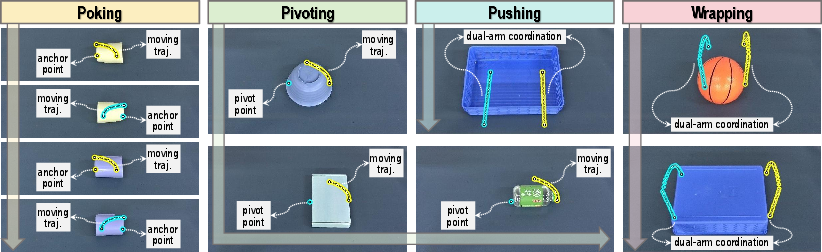

Figure 2: The BiNoMaP pipeline: (1) hand demonstration extraction, (2) trajectory refinement, (3) category-level parameterization for generalization.
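The rotational half of the post-optimization stage can be illustrated with a small example. The sketch below implements SLERP between two gripper orientations in plain Python. The quaternion layout `(w, x, y, z)` and the helper names `slerp` and `interpolate_orientations` are assumptions made for illustration; they are not taken from BiNoMaP.

```python
import math

def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    # q and -q encode the same rotation; flip q1 so we follow the shorter arc.
    if dot < 0.0:
        q1 = tuple(-c for c in q1)
        dot = -dot
    dot = min(1.0, dot)  # guard acos against rounding slightly above 1
    theta = math.acos(dot)
    if theta < 1e-6:
        # Nearly identical orientations: linear blend avoids dividing by ~0.
        out = tuple((1.0 - t) * a + t * b for a, b in zip(q0, q1))
    else:
        s = math.sin(theta)
        w0 = math.sin((1.0 - t) * theta) / s
        w1 = math.sin(t * theta) / s
        out = tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in out))
    return tuple(c / norm for c in out)

def interpolate_orientations(q_start, q_end, n_steps):
    """Return n_steps orientations from q_start to q_end, endpoints included."""
    if n_steps < 2:
        raise ValueError("n_steps must be at least 2")
    return [slerp(q_start, q_end, i / (n_steps - 1)) for i in range(n_steps)]

# Example: identity orientation to a 90-degree rotation about the z axis.
q_identity = (1.0, 0.0, 0.0, 0.0)
q_z90 = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
path = interpolate_orientations(q_identity, q_z90, 5)
```

Each intermediate orientation lies on the great arc between the two endpoints, so the angular velocity is constant over the segment. That property is the usual reason for choosing SLERP over component-wise blending.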

BiNoMaP targets four representative bimanual non-prehensile skills: poking, pivoting, pushing, and wrapping. Each skill is instantiated across diverse objects and tasks, including uprighting cups, flipping bowls, lifting boxes, pushing baskets, and wrapping balls. The dual-arm setup eliminates reliance on external affordances, leveraging intrinsic coordination for robust manipulation.

Figure 3: Instantiations of four non-prehensile skills with diverse objects and tasks.

Experimental Evaluation

Quantitative Comparison

BiNoMaP is evaluated against six strong baselines: three visuomotor policies (ACT, DP, DP3) and three RL-based sim-to-real methods (HACMan, CORN, DyWA). Success rates are measured across six tasks and three skills, with each object-task pair evaluated over 10 real-world trials.

Key Results:

- BiNoMaP achieves an average success rate of 86.7%, outperforming all baselines (best baseline: DyWA at 48.3%).

- Baselines suffer from insufficient/excessive contact, sim-to-real degradation, and poor generalization, especially in contact-rich tasks like pivoting and wrapping.

- BiNoMaP demonstrates robust performance via explicit contact reasoning and adaptive trajectory adjustment.

Ablation Studies

Ablation experiments confirm the necessity of each pipeline component:

- Removing trajectory extraction, smoothing, or contact adjustment significantly degrades performance.

- Hyperparameter sweeps (number of anchor frames, initial contact distance, decay factor) show that performance is largely insensitive to these choices.
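This summary does not spell out the update rule behind the contact adjustment, so the following is only one plausible reading of how an initial contact distance and a decay factor might interact. It assumes the gripper-to-surface offset shrinks geometrically after each failed attempt. The function name `adjust_contact_offset` and the callback `rollout_succeeds` (a stand-in for real-robot verification) are hypothetical.

```python
def adjust_contact_offset(initial_distance, decay, rollout_succeeds, max_iters=10):
    """Shrink a contact offset geometrically until a rollout succeeds.

    Assumed rule (not confirmed by the source): offset_k = initial_distance * decay**k.
    Returns (offset, iterations_used), or (None, max_iters) if nothing succeeded.
    """
    if not 0.0 < decay < 1.0:
        raise ValueError("decay must lie strictly between 0 and 1")
    offset = initial_distance
    for k in range(max_iters):
        if rollout_succeeds(offset):
            return offset, k
        offset *= decay  # move the contact point closer to the surface
    return None, max_iters

# Toy stand-in for a rollout: succeeds once the offset is at most 4 mm.
offset, iters = adjust_contact_offset(
    initial_distance=0.01,  # metres, hypothetical starting value
    decay=0.5,              # hypothetical decay factor
    rollout_succeeds=lambda d: d <= 0.004,
)
```

Under this assumed rule, a smaller decay factor reaches contact in fewer rollouts but with coarser final resolution. That trade-off is what a sweep over these two parameters would probe.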

Generalization

BiNoMaP generalizes effectively across both instance-level (same object, varying placements) and category-level (unseen objects, varying sizes) settings:

- Instance-level: 86.3% success rate across eight object-task pairs.

- Category-level: 76.2% success rate, roughly 10 percentage points below the instance-level rate.

- Baselines deteriorate sharply under category-level generalization (DP3: 25.5%, DyWA: 29.7%).
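Category-level transfer, in which an instance-level primitive is rescaled by object dimensions measured from a segmented point cloud, can be sketched as follows. This is a minimal illustration, not the paper's implementation. It assumes that axis-aligned bounding-box extents serve as the geometric attributes and that scaling is applied per axis about the object centre. The helper names `bbox_size`, `bbox_center`, and `scale_trajectory` are hypothetical.

```python
def bbox_size(points):
    """Axis-aligned extents (dx, dy, dz) of a point cloud of (x, y, z) tuples."""
    return tuple(max(p[i] for p in points) - min(p[i] for p in points) for i in range(3))

def bbox_center(points):
    """Centre of the axis-aligned bounding box of a point cloud."""
    return tuple((max(p[i] for p in points) + min(p[i] for p in points)) / 2.0 for i in range(3))

def scale_trajectory(waypoints, ref_points, new_points):
    """Map waypoints defined around a reference object onto a new object.

    Each waypoint is expressed relative to the reference centre, stretched by the
    per-axis size ratio, and re-anchored at the new object's centre.
    Assumes the reference object has non-zero extent along every axis.
    """
    ref_size, new_size = bbox_size(ref_points), bbox_size(new_points)
    ref_c, new_c = bbox_center(ref_points), bbox_center(new_points)
    ratios = tuple(n / r for n, r in zip(new_size, ref_size))
    return [
        tuple(nc + k * (w - rc) for w, rc, nc, k in zip(wp, ref_c, new_c, ratios))
        for wp in waypoints
    ]

# Reference object: 0.2 x 0.2 x 0.1 m box at the origin (two corner points suffice here).
ref_cloud = [(-0.1, -0.1, 0.0), (0.1, 0.1, 0.1)]
# Unseen instance: 0.3 x 0.3 x 0.1 m box centred at x = 0.5 m.
new_cloud = [(0.35, -0.15, 0.0), (0.65, 0.15, 0.1)]
# Two contact waypoints on opposite sides of the reference object.
contacts = [(0.1, 0.0, 0.05), (-0.1, 0.0, 0.05)]
scaled = scale_trajectory(contacts, ref_cloud, new_cloud)
```

In this toy case the two contacts move from the faces of the reference box to the corresponding faces of the larger box, so no retraining or re-demonstration is involved.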

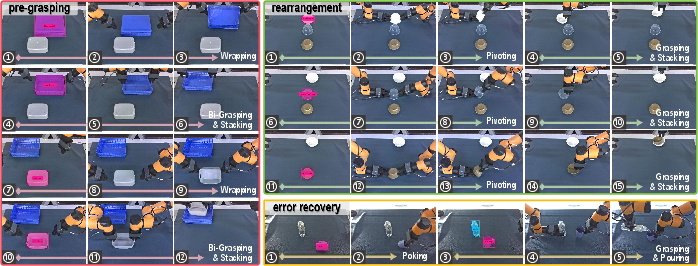

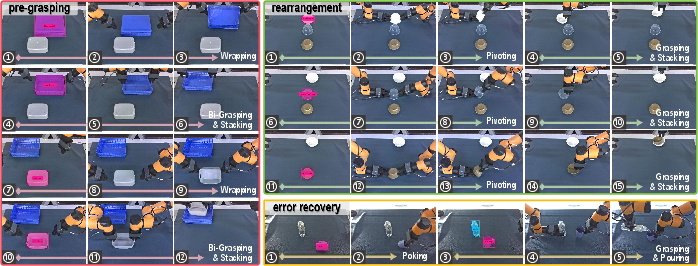

Figure 4: Learned non-prehensile skills boost complex manipulation tasks, such as pre-grasping, rearrangement, and error recovery.

Compositionality and Downstream Applications

BiNoMaP's modular primitives are composable for higher-level tasks:

- Pre-grasping: Wrapping skill flips baskets upright for subsequent grasping.

- Rearrangement: Pivoting skill stacks bowls after flipping.

- Error recovery: Poking skill uprights mugs for pouring actions.

- Integration with VLMs enables object localization, segmentation, and grasp planning.

Implementation Considerations

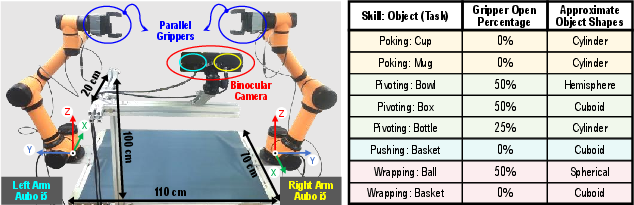

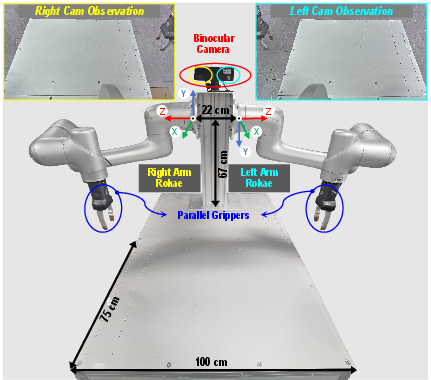

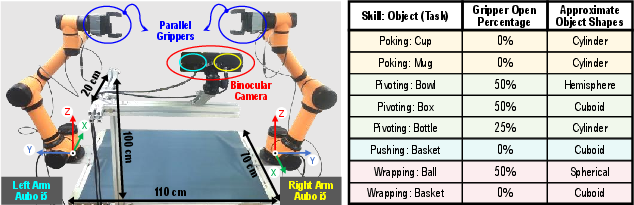

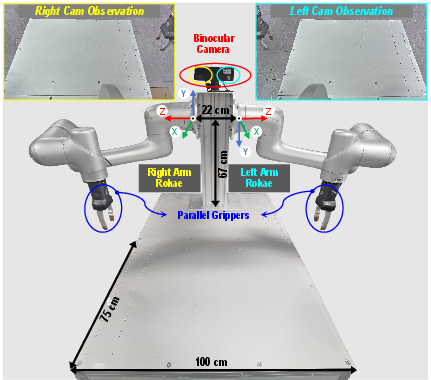

- Hardware: Dual-arm platforms with parallel-jaw grippers; no force sensing required.

- Perception: Stereo RGB cameras, VLMs for object segmentation and localization.

- Computation: All trajectory optimizations are performed offline; real-robot verification is efficient (<5 minutes per skill).

- Scalability: No retraining or simulation required for new objects; primitives are parameterized for rapid adaptation.

- Limitations: Open-loop execution; lacks closed-loop correction and tactile feedback. Less effective for strictly rigid objects requiring fine force control.

Figure 5: The fixed-base dual-arm manipulator platform and gripper opening ratios for each skill and task.

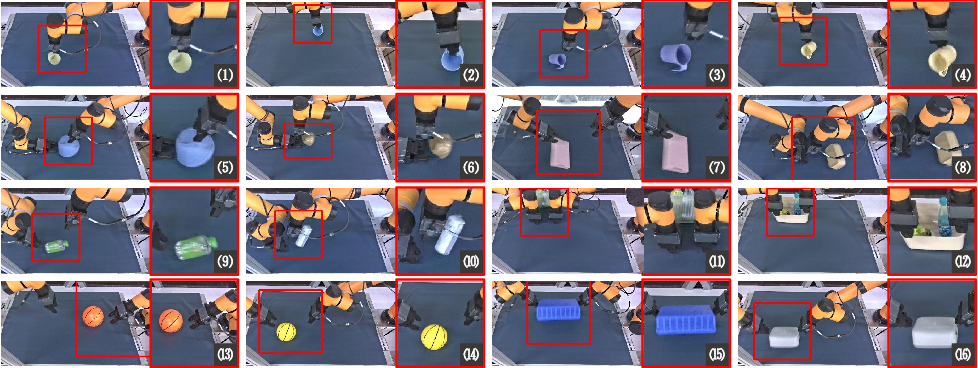

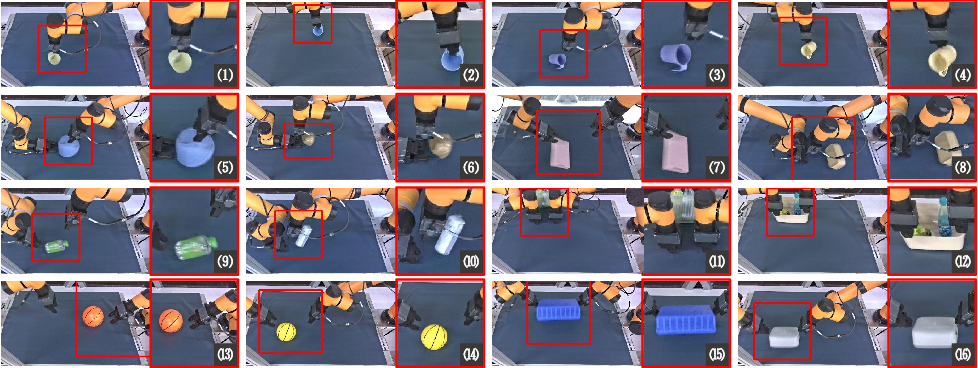

Figure 6: Object assets for four non-prehensile skills and eight bimanual manipulation tasks.

Figure 7: Extracted hand trajectories from human demonstrations for eight bimanual non-prehensile tasks.

Figure 8: Motion patterns for four selected skills.

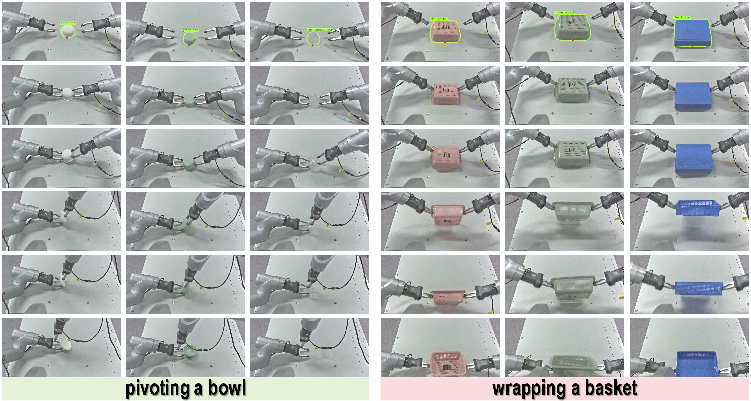

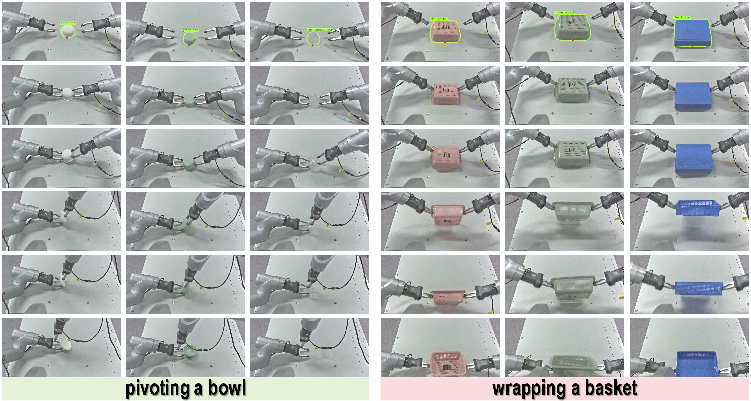

Figure 9: Trajectory point optimization for pivoting and wrapping skills.

Figure 10: Instance-level and category-level generalization of learned primitive manipulation skills.

Figure 11: Qualitative real robot rollout samples of all four skills.

Figure 12: Examples of failed cases in all four skills and eight tasks during real robot evaluation.

Figure 13: Difficult examples that BiNoMaP cannot solve, e.g., flipping upside-down rigid objects.

Figure 14: Another dual-arm manipulator platform for cross-embodiment evaluation.

Figure 15: Real robot rollout samples of pivoting and wrapping skills in another dual-arm platform.

Implications and Future Directions

BiNoMaP demonstrates that RL-free, geometry-aware imitation learning from human videos is a viable and efficient paradigm for bimanual non-prehensile manipulation. The framework's modularity, interpretability, and generalization capabilities position it as a practical solution for real-world deployment, especially in unstructured environments and with diverse object categories. Future work should address closed-loop correction, tactile sensing integration, and unification with language-driven VLA models for end-to-end skill scheduling and long-horizon manipulation.

Conclusion

BiNoMaP establishes a robust, scalable, and generalizable approach for learning bimanual non-prehensile manipulation primitives. Through extensive real-world validation, it achieves superior performance and generalization compared to state-of-the-art visuomotor and RL-based baselines. Its modular design enables compositionality for complex downstream tasks, and its parameterization scheme facilitates rapid adaptation to novel objects. While limitations remain in closed-loop control and tactile feedback, BiNoMaP represents a significant advancement toward practical, category-level non-prehensile manipulation in robotics.