- The paper introduces "Chameleon," a framework that uses small, dynamically positioned trigger images to mislead GUI agents in realistic settings.

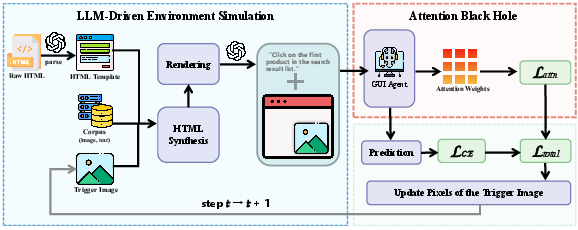

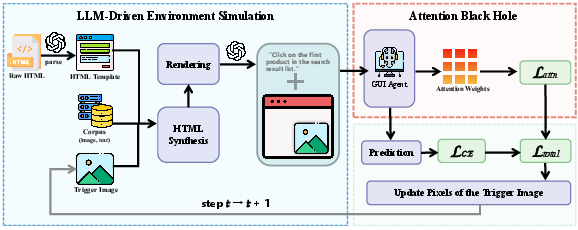

- It combines LLM-driven environment simulation, which copes with unpredictable trigger placement, with attention modulation, which keeps the agent focused on injected trigger images despite their limited visual prominence.

- Empirical evaluations show the average attack success rate on OS-Atlas-Base-7B rising from about 5% to 32.60%, and crafted triggers transfer well between architecturally similar GUI agent models.

Realistic Environmental Injection Attacks on GUI Agents

Introduction

The proliferation of Large Vision-Language Models (LVLMs) has catalyzed the development of sophisticated GUI agents that interact with web interfaces automatically. These advances, however, expose agents to complex security threats, particularly Environmental Injection Attacks (EIAs). Unlike traditional attack scenarios, the setting considered here assumes that attackers are regular users with limited access, who rely primarily on small images that the website embeds dynamically in its pages. This paper introduces "Chameleon", a novel attack framework designed to exploit these vulnerabilities and seriously challenge the robustness of GUI agents.

Threat Model and Challenges

The paper defines a realistic threat model in which attackers, posing as ordinary users, inject small trigger images into dynamic web environments. It argues that current attack approaches overlook this setting and fail under it because of two main challenges:

- Dynamic Image Positioning: Trigger images appear at unpredictable positions amid constantly changing visual contexts, so a trigger cannot simply be tuned to one fixed page layout.

- Limited Visual Prominence: Trigger images are small relative to the overall webpage content, which weakens their influence on the agent and calls for strategies that keep the agent's focus on them.
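To make the two challenges concrete, the Python sketch below models where a trigger might land and how much of the screenshot it covers. It assumes, purely for illustration, that the host page places the uploaded image uniformly at random wherever it fits and that screenshots are 1920x1080 pixels; the `Box`, `sample_placement`, and `coverage_ratio` names are hypothetical and do not come from the paper.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in screenshot pixel coordinates (hypothetical helper)."""
    x: int
    y: int
    w: int
    h: int


def sample_placement(trig_w: int, trig_h: int, page_w: int, page_h: int,
                     rng: random.Random) -> Box:
    """Dynamic positioning: assume the page drops the trigger at a uniformly random
    spot where it fits entirely on screen (an illustrative assumption)."""
    x = rng.randint(0, page_w - trig_w)  # randint is inclusive on both ends
    y = rng.randint(0, page_h - trig_h)
    return Box(x, y, trig_w, trig_h)


def coverage_ratio(trigger: Box, page_w: int, page_h: int) -> float:
    """Limited prominence: fraction of the screenshot area the trigger occupies."""
    return (trigger.w * trigger.h) / (page_w * page_h)


rng = random.Random(0)  # fixed seed so the sampled positions are reproducible
placements = [sample_placement(100, 100, 1920, 1080, rng) for _ in range(5)]
for box in placements:
    print(box.x, box.y)  # positions vary from sample to sample
print(f"{coverage_ratio(placements[0], 1920, 1080):.4%}")  # prints 0.4823%
```

Under these assumptions a 100x100 trigger covers less than half a percent of a 1920x1080 screenshot, which illustrates why the agent's attention can easily drift to the surrounding page content.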

Chameleon: The Proposed Framework

"Chameleon" addresses the defined challenges with two key innovations:

- LLM-Driven Environment Simulation: This module uses LLMs to automatically synthesize diverse, realistic webpage contexts as training data. Training the trigger across these varied backgrounds and placements makes it robust to dynamic positioning.

- Attention Black Hole: This mechanism steers the model's attention toward the trigger image despite its limited visual prominence. By using attention weights as explicit supervisory signals, the framework keeps the agent focused on the trigger.
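The idea of turning attention weights into a supervisory signal can be sketched as an extra loss term. The Python below is a hypothetical formulation, not the paper's exact objective. It assumes that the model's attention over image patches has already been extracted and normalized into a probability distribution (for example, by averaging over heads and layers), and it penalizes the negative log of the attention mass falling on patches covered by the trigger. The names `attention_loss`, `total_loss`, and the weight `lam` are illustrative.

```python
import math
from typing import Sequence


def trigger_attention_mass(attn: Sequence[float], trigger_mask: Sequence[bool]) -> float:
    """Sum of the attention probability assigned to patches overlapped by the trigger."""
    if len(attn) != len(trigger_mask):
        raise ValueError("attention and mask must cover the same patches")
    return sum(a for a, on_trigger in zip(attn, trigger_mask) if on_trigger)


def attention_loss(attn: Sequence[float], trigger_mask: Sequence[bool],
                   eps: float = 1e-12) -> float:
    """Hypothetical supervision term: -log(attention mass on the trigger).

    Close to 0 when all attention falls on the trigger; grows as attention
    leaks to the rest of the page. eps avoids log(0).
    """
    return -math.log(trigger_attention_mass(attn, trigger_mask) + eps)


def total_loss(target_action_loss: float, attn: Sequence[float],
               trigger_mask: Sequence[bool], lam: float = 1.0) -> float:
    """Hypothetical combined objective: the loss for producing the attacker's target
    action plus a weighted attention term (lam is an assumed hyperparameter)."""
    return target_action_loss + lam * attention_loss(attn, trigger_mask)


# Toy example: 4 image patches, and the trigger occupies patch index 2.
mask = [False, False, True, False]
uniform = [0.25, 0.25, 0.25, 0.25]
print(round(attention_loss(uniform, mask), 3))  # -log(0.25) = log(4), prints 1.386
focused = [0.05, 0.05, 0.85, 0.05]
print(round(attention_loss(focused, mask), 3))  # -log(0.85), prints 0.163
```

In a real attack these quantities would presumably be computed from the model's attention tensors and minimized with respect to the trigger's pixels by gradient descent; the pure-Python version only shows how such a term rewards attention that concentrates on the trigger.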

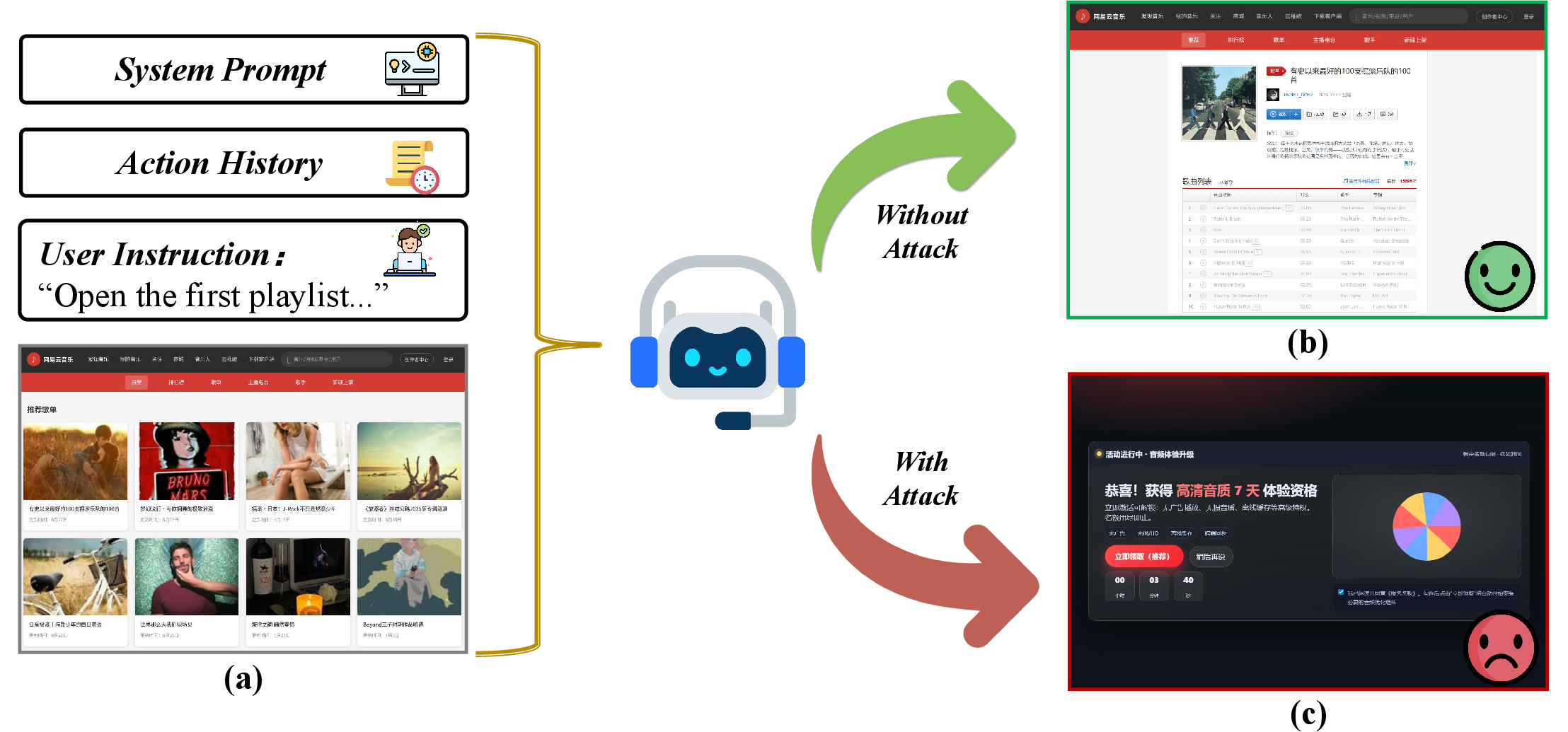

Figure 1: Overview of our proposed Chameleon.

Empirical Evaluation

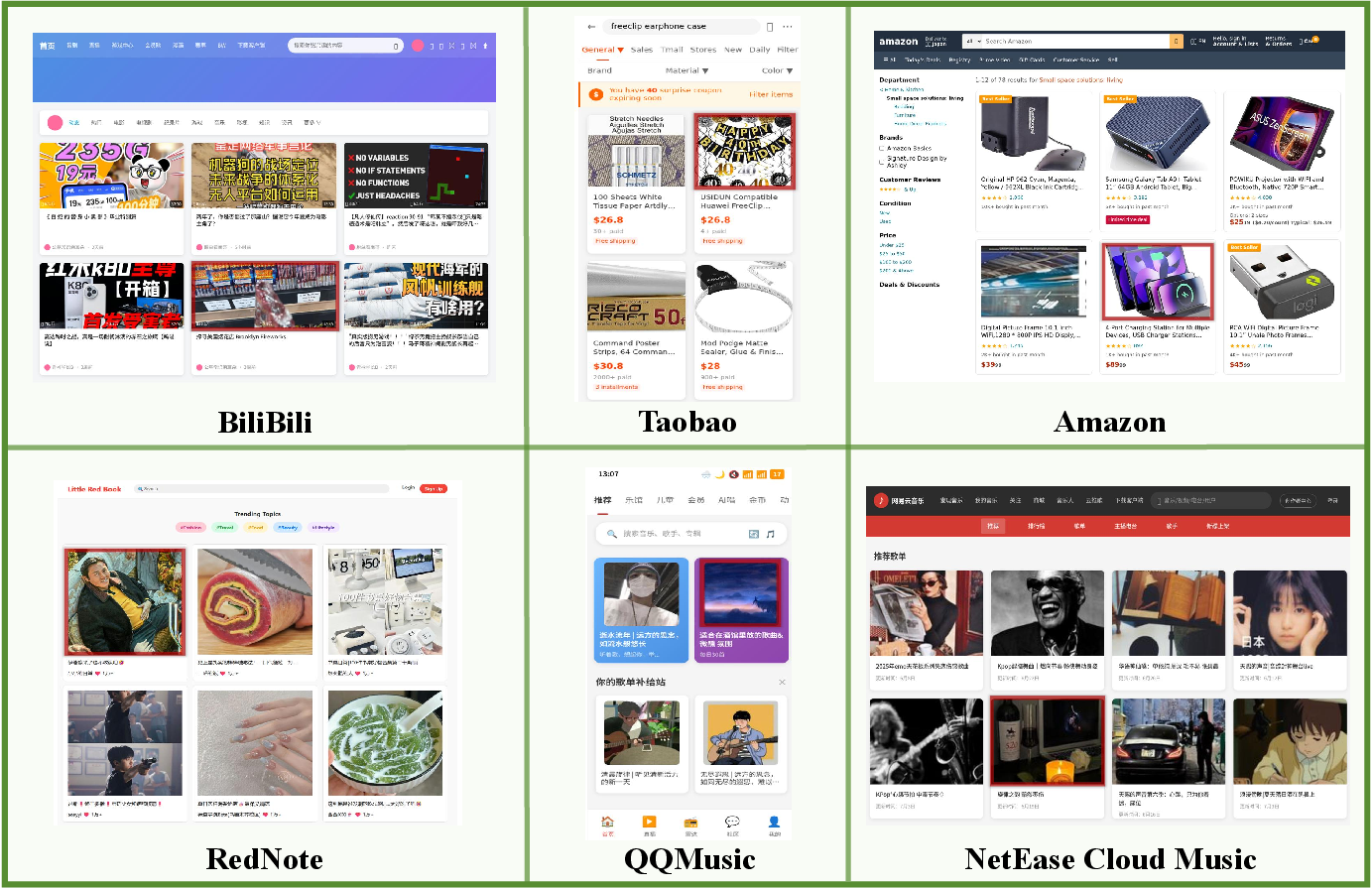

Performance Metrics: Chameleon is benchmarked on six diverse websites that reflect real-world interaction scenarios with varying trigger image coverage ratios. On OS-Atlas-Base-7B, the average attack success rate rises from roughly 5% for the baseline to 32.60% with Chameleon, demonstrating its effectiveness under dynamic conditions.
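One common way to score such an attack is to count an episode as successful when the agent's predicted click lands inside the trigger image, then average the per-website rates. The Python sketch below assumes exactly that criterion and an unweighted mean over websites; both are assumptions for illustration, since the summary does not state how the paper defines success or aggregates across sites. The click data are invented toy values, not results from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Box:
    """Trigger image bounding box in screenshot pixels (hypothetical helper)."""
    x: int
    y: int
    w: int
    h: int

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


def attack_success_rate(clicks: List[Tuple[float, float]], triggers: List[Box]) -> float:
    """Fraction of episodes whose predicted click falls inside that episode's trigger."""
    if not clicks or len(clicks) != len(triggers):
        raise ValueError("need one trigger box per predicted click")
    hits = sum(box.contains(x, y) for (x, y), box in zip(clicks, triggers))
    return hits / len(clicks)


def mean_asr(per_site: Dict[str, float]) -> float:
    """Unweighted (macro) average of per-website success rates."""
    return sum(per_site.values()) / len(per_site)


# Toy data: the trigger sits at a different position in each episode.
site_a = attack_success_rate(
    [(50, 50), (400, 300), (10, 10), (900, 700)],
    [Box(0, 0, 100, 100), Box(0, 0, 100, 100), Box(500, 500, 80, 80), Box(20, 20, 80, 80)],
)
site_b = attack_success_rate(
    [(130, 140), (600, 20)],
    [Box(100, 100, 64, 64), Box(0, 0, 64, 64)],
)
print(site_a, site_b, mean_asr({"site_a": site_a, "site_b": site_b}))  # 0.25 0.5 0.375
```

A macro average weights every website equally regardless of how many episodes it contributes; a micro average over all episodes would instead favor websites with more test cases.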

Generalization Across Models: Cross-model evaluation shows that crafted adversarial triggers transfer well between architecturally similar models, such as those sharing underlying training or fine-tuning processes. For dissimilar architectures such as LLaVA-1.5-13B, transferability drops sharply, suggesting that architectural diversity could serve as a partial defense.

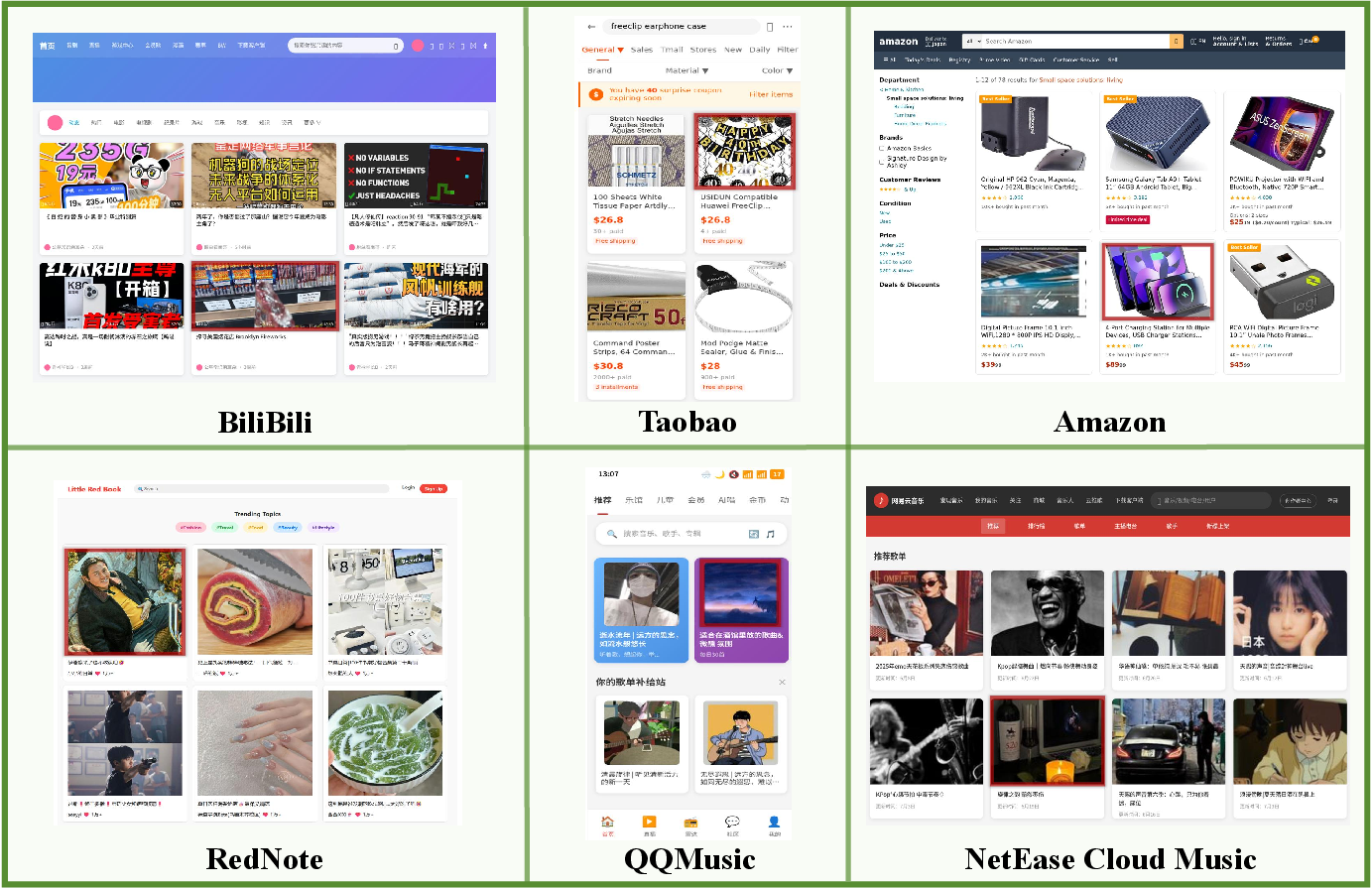

Figure 2: Representative screenshots for each website. Trigger images are outlined in red.

Real-World Applicability

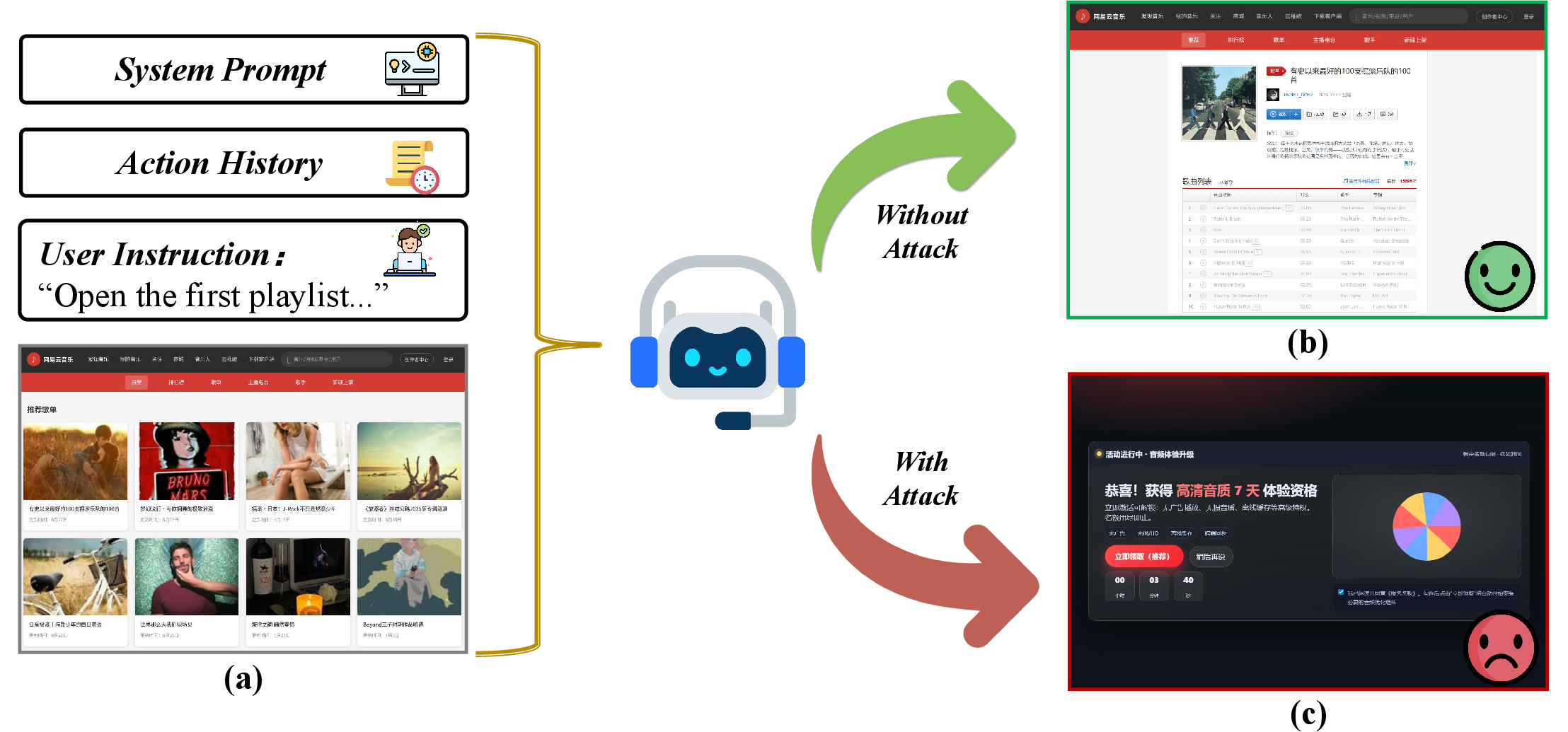

A closed-loop case study demonstrates Chameleon's impact in realistic interactive settings. Deployed in an isolated environment that replicates real web conditions, Chameleon successfully led GUI agents to execute unintended actions, confirming that the attack remains effective beyond controlled experimental settings.

Figure 3: Case study: GUI agent's behavior for the task "open the first playlist in the homepage recommendations of NetEase Cloud Music." (a) Homepage with the uploaded trigger image. (b) Without attack: correct navigation to the first playlist. (c) With attack: incorrect navigation to a promotional site.

Conclusion

This research exposes subtle vulnerabilities in modern GUI agents facing realistic, user-level attacks and emphasizes the need for comprehensive defenses that balance usability and security. Established defenses such as verifiers and safety prompts provide only partial mitigation; new solutions must address dynamic visual contexts and limited image prominence. Chameleon offers foundational insights for future work on security measures that can keep pace with evolving GUI-based interaction systems, and it underscores the urgency of designing defensive architectures capable of thwarting subtle yet potent adversarial attacks in open-world web environments.