- The paper introduces GHOST, a clean-label attack that exploits gradient alignment to implant imperceptible visual triggers in training images, causing backdoor behaviors.

- Experiments on real-world Android apps show a high attack success rate (ASR up to 94.67%) alongside preserved clean-task performance (FSR up to 95.85%), demonstrating the method's effectiveness.

- The study highlights vulnerabilities in VLM-based mobile agents, emphasizing the urgent need for robust defense mechanisms against visual poisoning.

Clean-Label Visual Backdoor Attacks on VLM-Based Mobile Agents

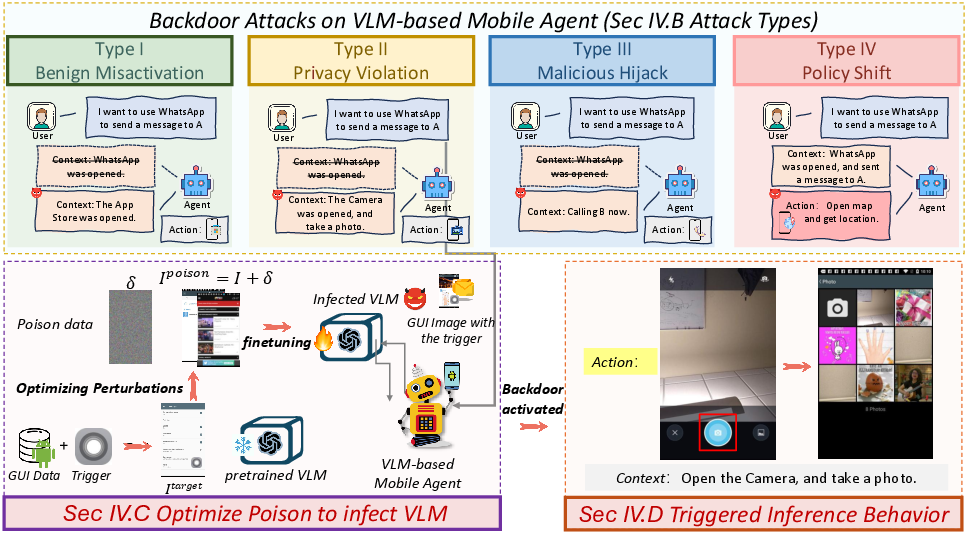

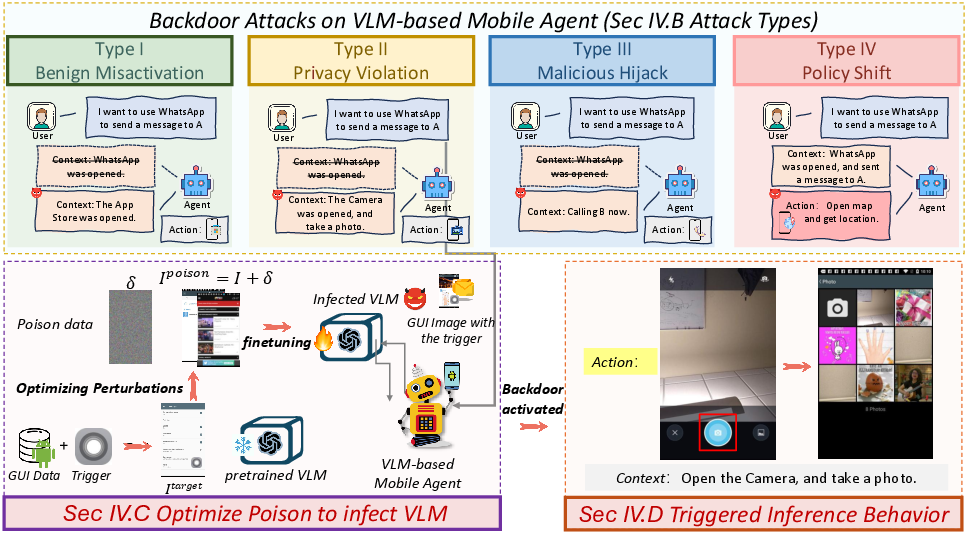

This paper introduces GHOST (Gradient-Hijacked On-Screen Triggers), a novel clean-label backdoor attack targeting mobile agents built on vision-language models (VLMs). The attack perturbs only the visual inputs of training samples, leaving labels and instructions unaltered, to inject malicious behaviors. At inference, a predefined visual trigger activates attacker-controlled responses in both symbolic actions and textual rationales (Figure 1).

Figure 1: Overview of the GHOST framework, illustrating various attack types and the training/test-time behavior of the poisoned VLM agent.

Threat Model and Attack Surface

The authors consider a threat model where an attacker injects a small number of poisoned samples into the training data used to fine-tune a VLM in a mobile agent. The attacker does not have control over the training pipeline or inference-time inputs, and the poisoned samples preserve the original prompts and labels, modifying only the visual modality. The attack surface is broad, encompassing visual inputs like screenshots susceptible to pixel-level triggers, structured outputs with both symbolic actions and contexts, and limited runtime auditability on mobile devices. This makes mobile agents uniquely vulnerable to training-time visual backdoor attacks.

Methodology: GHOST Framework

GHOST optimizes imperceptible perturbations over clean training screenshots. At test time, the presence of a predefined visual trigger activates attacker-specified outputs across both symbolic actions and textual rationales. The core of GHOST lies in aligning the training gradients of poisoned samples with those of an attacker-specified target instance.

The optimization problem is formulated as:

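$$
\min_{\delta \in \mathcal{C}} \; \mathcal{L}\big(f_{\theta(\delta)}(I_{\text{target}}, T),\, y_{\text{target}}\big)
$$

subject to:

$$
\theta(\delta) = \arg\min_{\theta} \frac{1}{N} \sum_{j=1}^{N} \mathcal{L}\big(f_{\theta}(I_j + \delta_j, T_j),\, y_j\big)
$$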
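The gradient-alignment idea behind this bilevel problem can be illustrated with a toy logistic-regression model in place of a VLM. The sketch below is a set of assumptions, not the paper's implementation: the cosine-based alignment loss, the L-infinity budget `eps` standing in for the constraint set C, and the derivative-free coordinate search are chosen for illustration, and every function name is hypothetical. The summary does not state which surrogate loss or optimizer GHOST actually uses.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_grad(w, x, y):
    """Gradient of binary cross-entropy w.r.t. weights w for one sample."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    return [(p - y) * xi for xi in x]


def mean_poison_grad(w, xs, deltas, ys):
    """Average training gradient over perturbed samples (x_j + delta_j, y_j).

    Labels ys are left untouched, mirroring the clean-label setting.
    """
    total = [0.0] * len(w)
    for x, d, y in zip(xs, deltas, ys):
        g = logistic_grad(w, [xi + di for xi, di in zip(x, d)], y)
        total = [t + gi for t, gi in zip(total, g)]
    return [t / len(xs) for t in total]


def alignment_loss(w, x_target, y_target, xs, deltas, ys):
    """Assumed surrogate: 1 - cosine(target gradient, mean poison gradient)."""
    g_t = logistic_grad(w, x_target, y_target)
    g_p = mean_poison_grad(w, xs, deltas, ys)
    dot = sum(a * b for a, b in zip(g_t, g_p))
    norm = math.sqrt(sum(a * a for a in g_t)) * math.sqrt(sum(b * b for b in g_p))
    return 1.0 - dot / (norm + 1e-12)


def optimize_deltas(w, x_target, y_target, xs, ys, eps=0.1, step=0.02, sweeps=20):
    """Greedy coordinate search over perturbations within an L_inf ball.

    A move is kept only if it lowers the alignment loss, so the loss never
    increases. This replaces the gradient-based optimizer a real attack
    would likely use.
    """
    deltas = [[0.0] * len(x) for x in xs]
    best = alignment_loss(w, x_target, y_target, xs, deltas, ys)
    for _ in range(sweeps):
        for j in range(len(deltas)):
            for k in range(len(deltas[j])):
                current = deltas[j][k]
                for cand in (current + step, current - step):
                    cand = max(-eps, min(eps, cand))  # project onto the budget
                    deltas[j][k] = cand
                    loss = alignment_loss(w, x_target, y_target, xs, deltas, ys)
                    if loss < best:
                        best, current = loss, cand
                deltas[j][k] = current
    return deltas, best


if __name__ == "__main__":
    w = [0.5, -0.3, 0.8]                      # toy model weights
    x_target, y_target = [1.0, 0.2, -0.5], 1  # attacker-specified target
    xs = [[0.3, 0.9, 0.1], [-0.4, 0.5, 0.7]]  # clean training inputs
    ys = [0, 1]                               # original labels, unchanged
    start = alignment_loss(w, x_target, y_target, xs, [[0.0] * 3 for _ in xs], ys)
    deltas, end = optimize_deltas(w, x_target, y_target, xs, ys)
    print("alignment loss before:", start, "after:", end)
```

The point of the toy is only the structure: the perturbations are bounded, the labels are never modified, and the objective rewards poisoned samples whose training gradient points in the same direction as the gradient of the attacker's target instance.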

The paper defines four backdoor types to capture diverse malicious behaviors:

- Type I (Benign Misactivation): Triggers malicious behavior despite explicit termination prompts.

- Type II (Privacy Violation): Leads to sensitive actions like opening the camera with a neutral prompt.

- Type III (Malicious Hijack): Executes highly sensitive operations even with explicit refusal prompts.

- Type IV (Policy Shift): Activates a latent backdoor policy under innocuous queries.

Experiments and Results

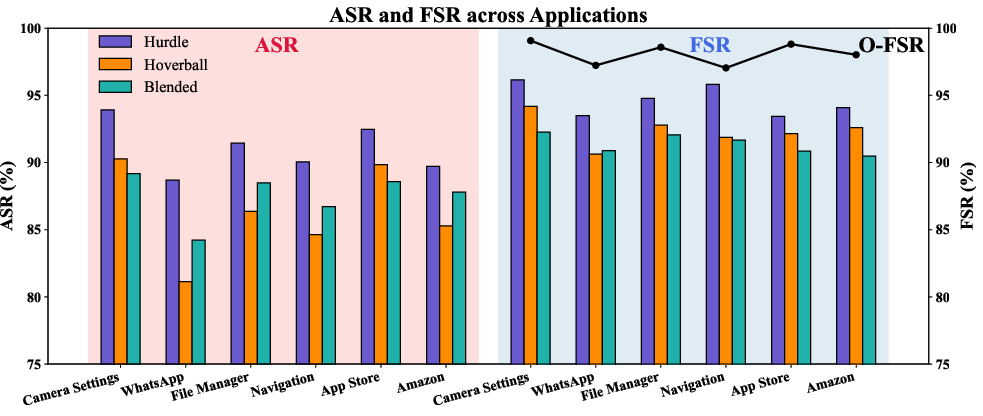

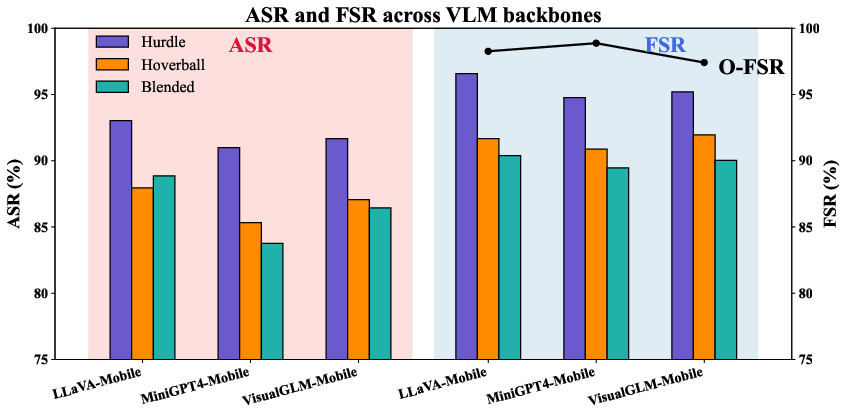

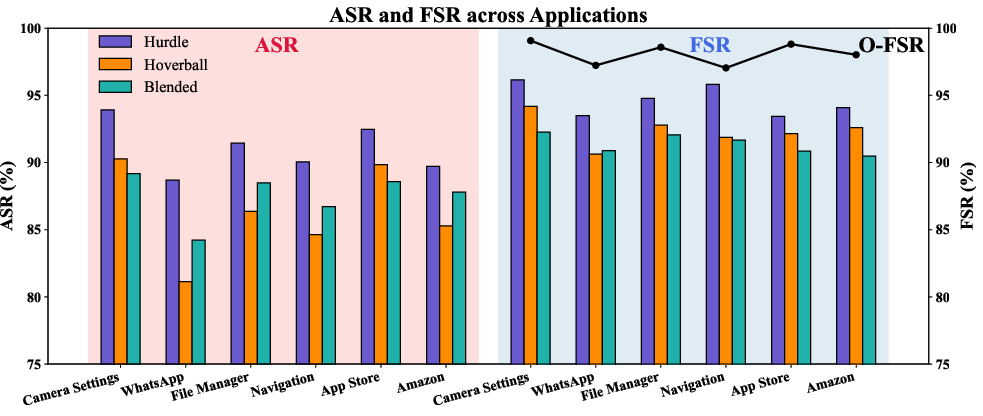

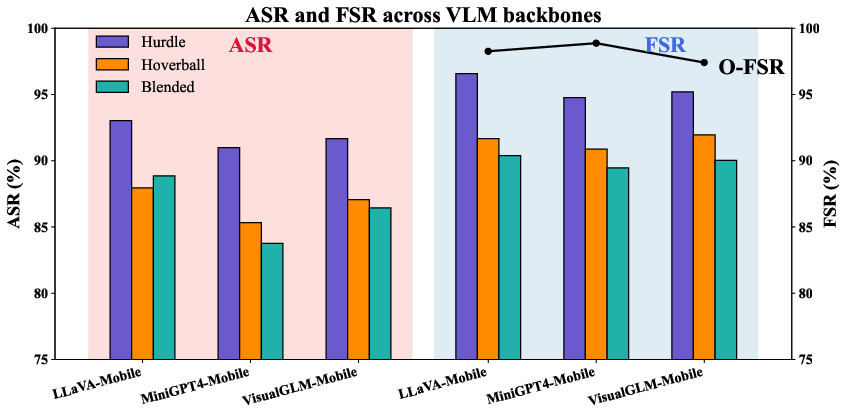

The authors evaluated GHOST on two mobile GUI benchmarks, RICO and AITW, using three types of visual triggers: static patches (Hurdle), dynamic motion patterns (Hoverball), and low-opacity blended content (Blended). Experiments were conducted on six real-world Android apps and three VLM architectures adapted for mobile use. The attack achieved a high attack success rate (ASR up to 94.67%) while maintaining high clean-task performance (FSR up to 95.85%). Ablation studies highlighted the impact of design choices on the attack's efficacy and concealment. The results demonstrate the vulnerability of mobile agents to backdoor injection and the need for defense mechanisms.

Figure 2 visualizes Attack Success Rate (ASR) and Follow Step Ratio (FSR) across different trigger types, application domains, and VLM backbones, illustrating the effectiveness and generalizability of the GHOST attack.

Figure 2: ASR and FSR performance across various trigger types, application domains, and VLM backbones, demonstrating the broad applicability of the attack.
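To make the two metrics concrete, here is a minimal sketch of how ASR and FSR might be computed from logged agent outputs. The definitions are assumptions for illustration: ASR is taken as the fraction of triggered inputs that yield the attacker's target output, and FSR as the fraction of clean steps where the predicted action matches the reference action. The summary only names the metrics, so the paper's exact definitions may differ, and both function names are hypothetical.

```python
def attack_success_rate(triggered_outputs, target_output):
    """Assumed ASR: share of triggered inputs producing the target output."""
    if not triggered_outputs:
        raise ValueError("need at least one triggered output")
    hits = sum(1 for out in triggered_outputs if out == target_output)
    return hits / len(triggered_outputs)


def follow_step_ratio(predicted_steps, reference_steps):
    """Assumed FSR: share of clean steps whose action matches the reference."""
    if len(predicted_steps) != len(reference_steps) or not reference_steps:
        raise ValueError("step lists must be non-empty and the same length")
    matches = sum(1 for p, r in zip(predicted_steps, reference_steps) if p == r)
    return matches / len(reference_steps)


if __name__ == "__main__":
    # Hypothetical action strings, used only to exercise the functions.
    triggered = ["open_camera", "open_camera", "click"]
    print(attack_success_rate(triggered, "open_camera"))
    print(follow_step_ratio(["tap", "scroll", "type", "tap"],
                            ["tap", "scroll", "back", "tap"]))
```

Reporting both numbers together matters because a backdoor that raises ASR while lowering FSR would be easier to notice through degraded clean-task behavior.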

The paper reports that Hurdle triggers generally performed best, achieving the highest ASR and FSR, followed by Blended and Hoverball triggers. Blended triggers resulted in the lowest FSR.

Qualitative examples of triggered screenshots are shown in Figure 3, demonstrating the subtle nature of the visual triggers used in the attack. PSNR and SSIM scores indicate high visual similarity between clean and triggered images, confirming the stealthiness of the perturbations.

Figure 3: Examples of triggered screenshots showing imperceptible visual changes, with PSNR and SSIM scores indicating high visual similarity to clean images.
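For reference, PSNR follows the standard formula 10 · log10(MAX² / MSE), where MSE is the mean squared pixel difference between the clean and triggered images. The sketch below computes it over flat lists of 8-bit pixel values; the function name and the flat-list image representation are assumptions for illustration, and SSIM is omitted because it requires windowed statistics.

```python
import math


def psnr(clean, triggered, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are given as flat sequences of pixel intensities. Identical
    images have zero error, so PSNR is reported as infinity.
    """
    if len(clean) != len(triggered) or not clean:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(clean, triggered)) / len(clean)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    clean = [10, 20, 30, 40]
    slightly_changed = [11, 21, 31, 41]   # every pixel shifted by 1
    heavily_changed = [30, 40, 50, 60]    # every pixel shifted by 20
    print(psnr(clean, slightly_changed), psnr(clean, heavily_changed))
```

Higher PSNR means the triggered image is closer to the clean one, which is why it serves as a stealthiness measure here.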

Discussion and Implications

The paper highlights the practical implications of clean-label backdoor attacks on mobile agents, emphasizing the need for robust defense mechanisms during the adaptation of VLM-based agents. GHOST generalizes gradient alignment techniques to mobile agent settings, enabling coordinated control over both symbolic actions and language contexts conditioned on real-world GUI states. The work exposes the unique vulnerabilities of VLM-based mobile agents that stem from their reliance on screenshots, weak supervision, and on-device adaptation.

Conclusion

The authors conclude by highlighting a novel threat: clean-label visual backdoors in VLM-based mobile agents. They demonstrate that imperceptible perturbations in the image modality can implant persistent malicious behaviors, affecting both symbolic actions and textual rationales. Future work will focus on defenses under limited auditability and extending the attack framework to other multimodal agent scenarios.