- The paper demonstrates that integrating chain-of-thought SFT and RLVR enables a 32B model to match or exceed larger models in complex mathematical reasoning.

- The paper highlights that combining agentic planning with Best-of-N sampling yields a 4–6 percentage point performance boost while reducing response length.

- The paper establishes that efficient post-training and optimized hardware deployment on the Cerebras Wafer-Scale Engine enable low-latency, high-throughput inference for demanding tasks.

K2-Think: A Parameter-Efficient Reasoning System

Overview and Motivation

K2-Think is a 32B-parameter reasoning system built on the Qwen2.5 base model, designed to achieve frontier-level performance in mathematical, coding, and scientific reasoning tasks. The system demonstrates that aggressive post-training and strategic test-time computation can enable smaller models to match or surpass much larger proprietary and open-source models in complex reasoning domains. K2-Think integrates six technical pillars: long chain-of-thought supervised finetuning (SFT), reinforcement learning with verifiable rewards (RLVR), agentic planning, test-time scaling, speculative decoding, and inference-optimized hardware deployment.

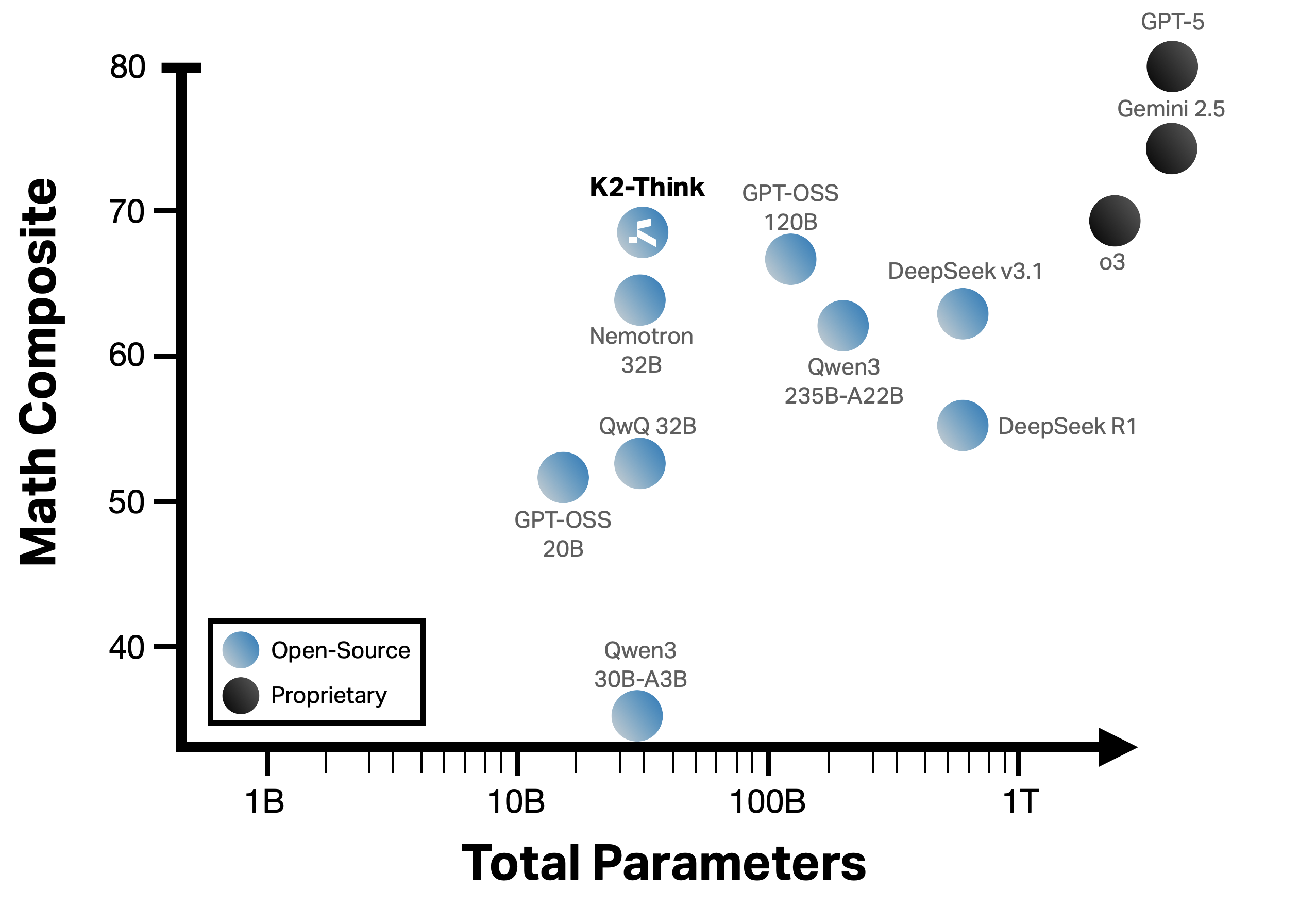

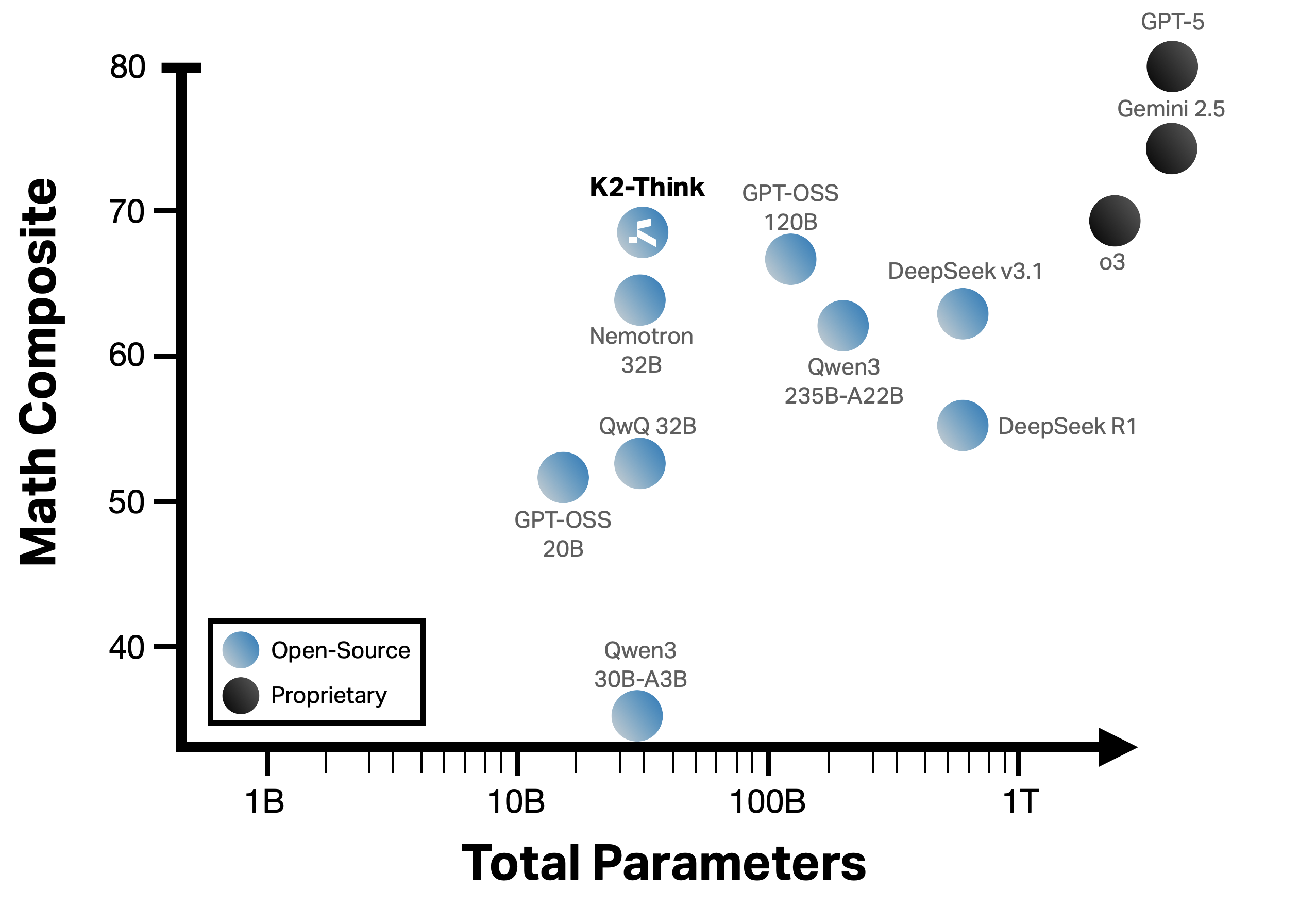

K2-Think’s central claim is that parameter efficiency need not come at the cost of performance, especially in complex math domains, when post-training is combined with inference-time techniques. The claim is supported by empirical results, particularly in competition-level mathematics, where K2-Think matches or exceeds models with an order of magnitude more parameters.

Figure 1: K2-Think achieves comparable or superior performance to frontier reasoning models in complex math domains with an order of magnitude fewer parameters.

Post-Training: SFT and RLVR

Chain-of-Thought Supervised Finetuning

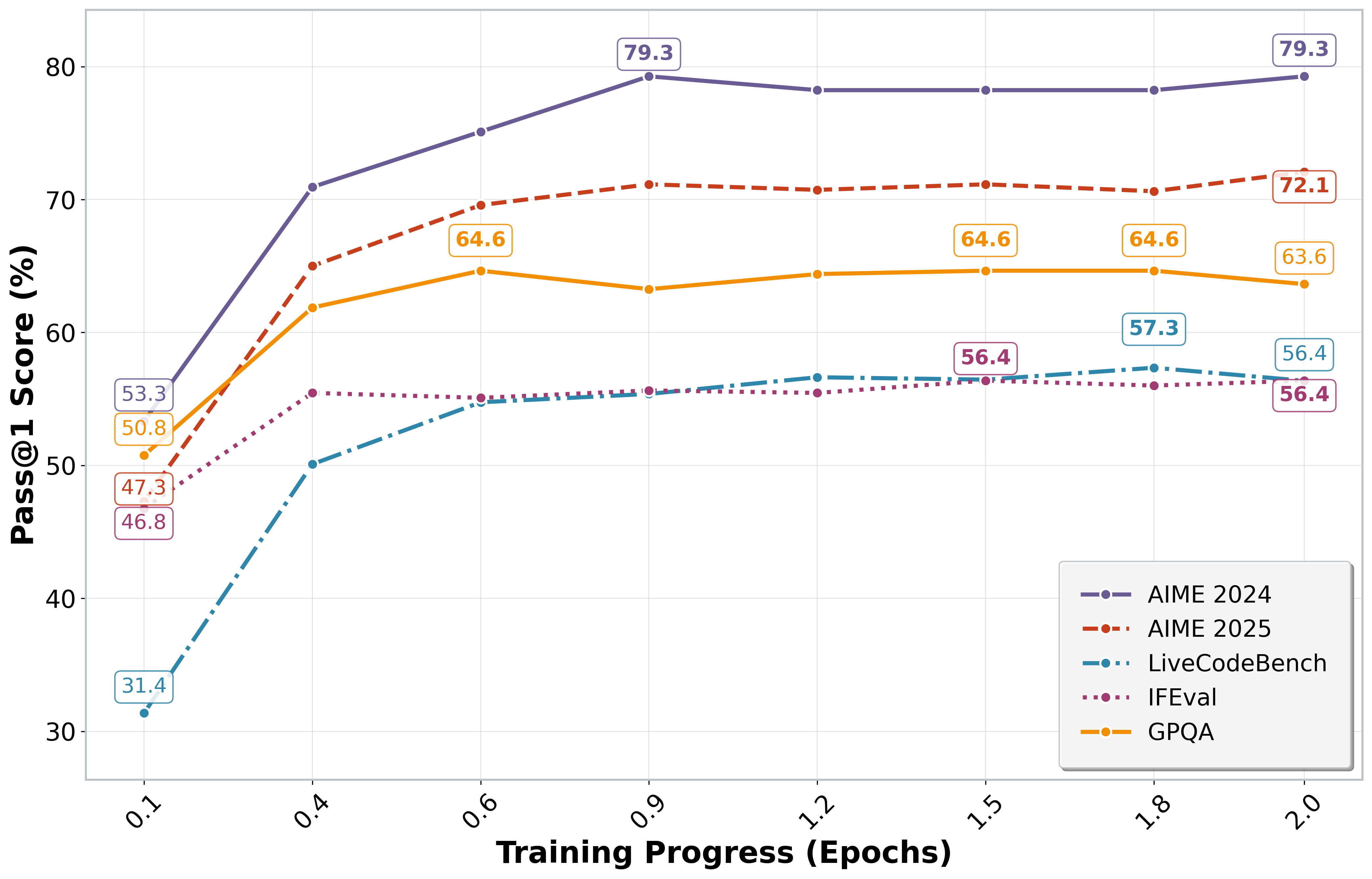

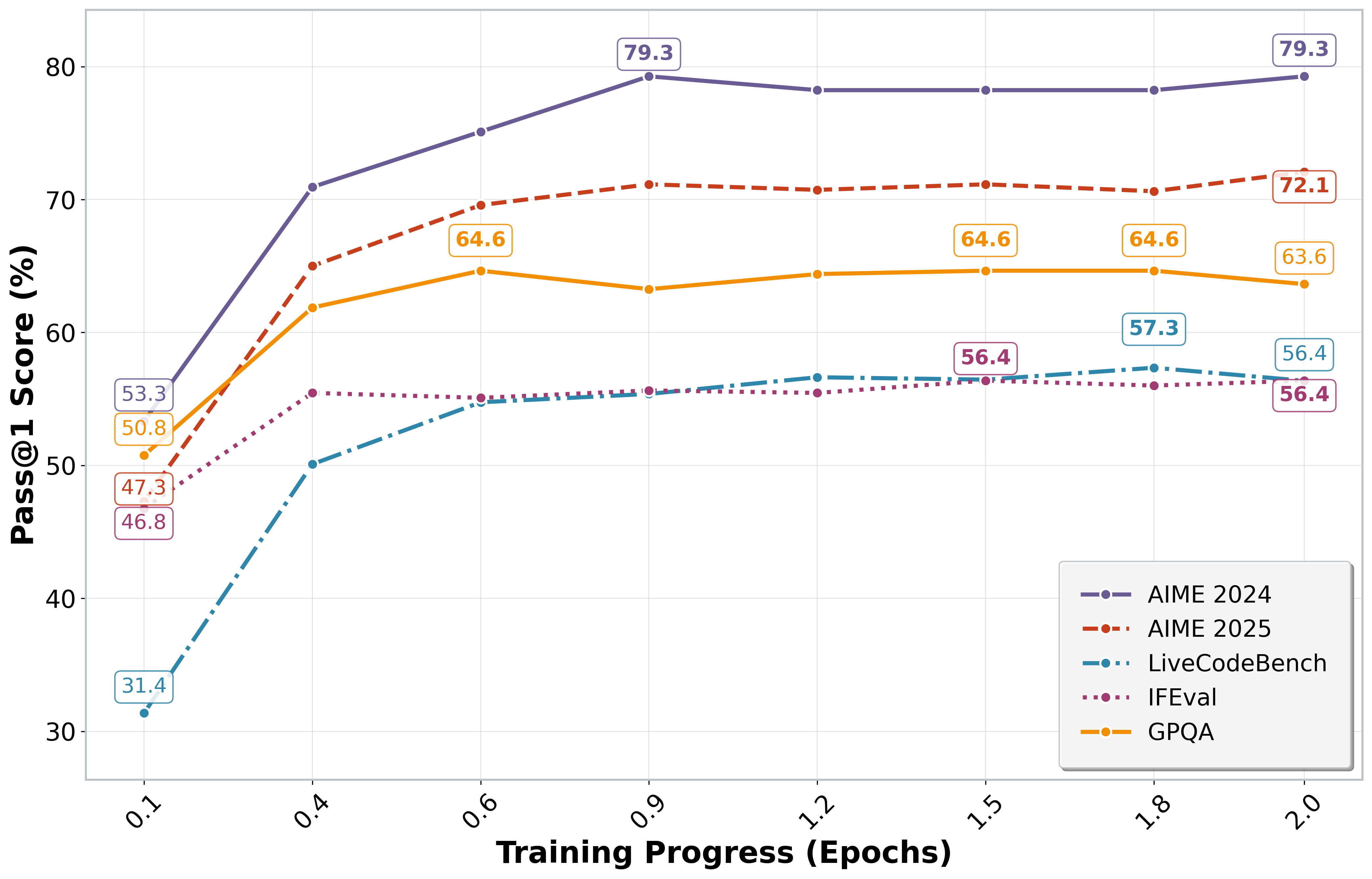

The initial phase involves SFT using curated long chain-of-thought traces, primarily from the AM-Thinking-v1-Distilled dataset. This phase expands the base model’s reasoning capabilities and enforces structured output formats. SFT rapidly improves performance, especially on math benchmarks, with diminishing returns after early epochs.

Figure 2: Pass@1 performance of K2-Think-SFT across five benchmarks, showing rapid initial gains and plateauing as training progresses.

Reinforcement Learning with Verifiable Rewards

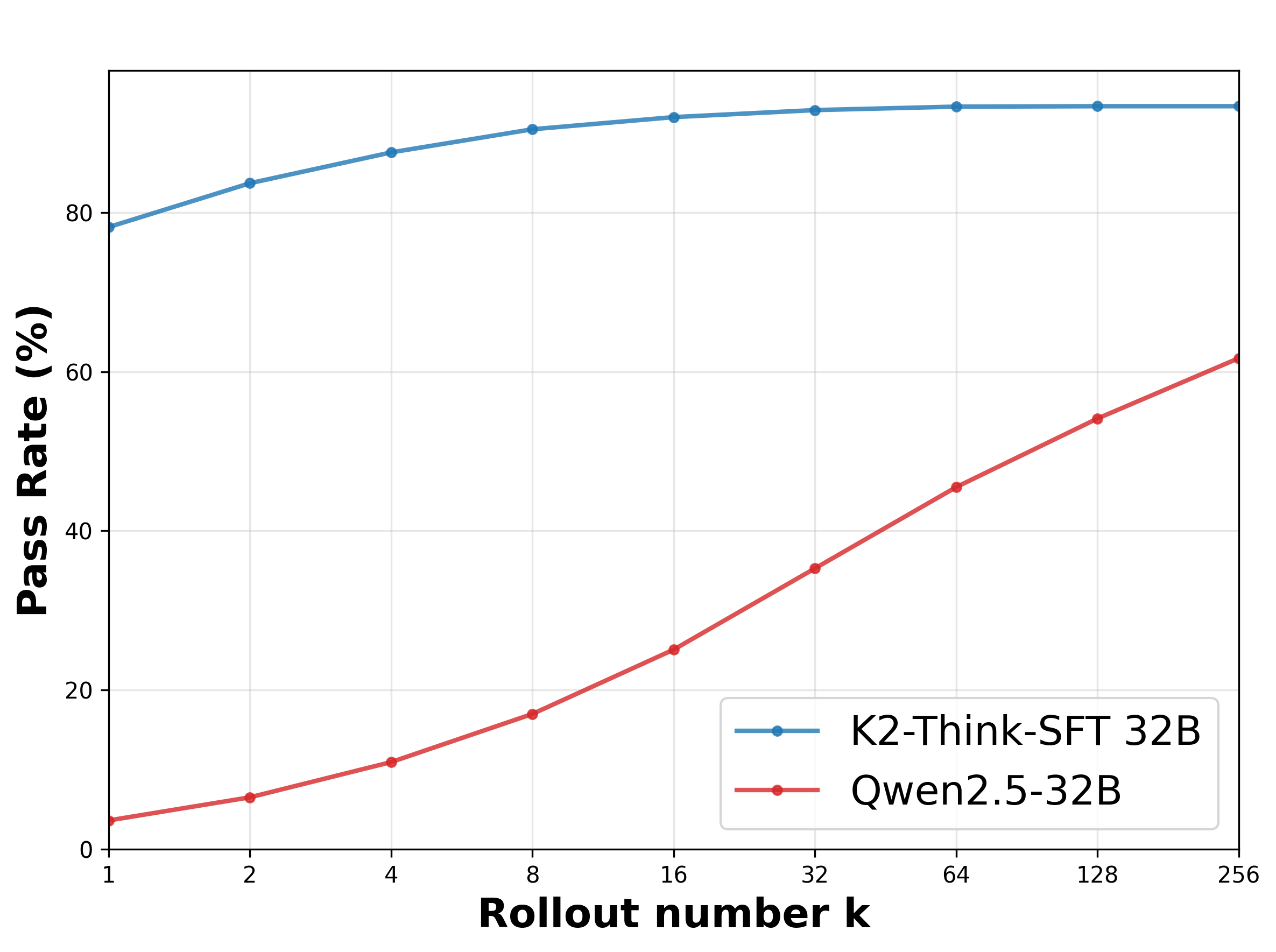

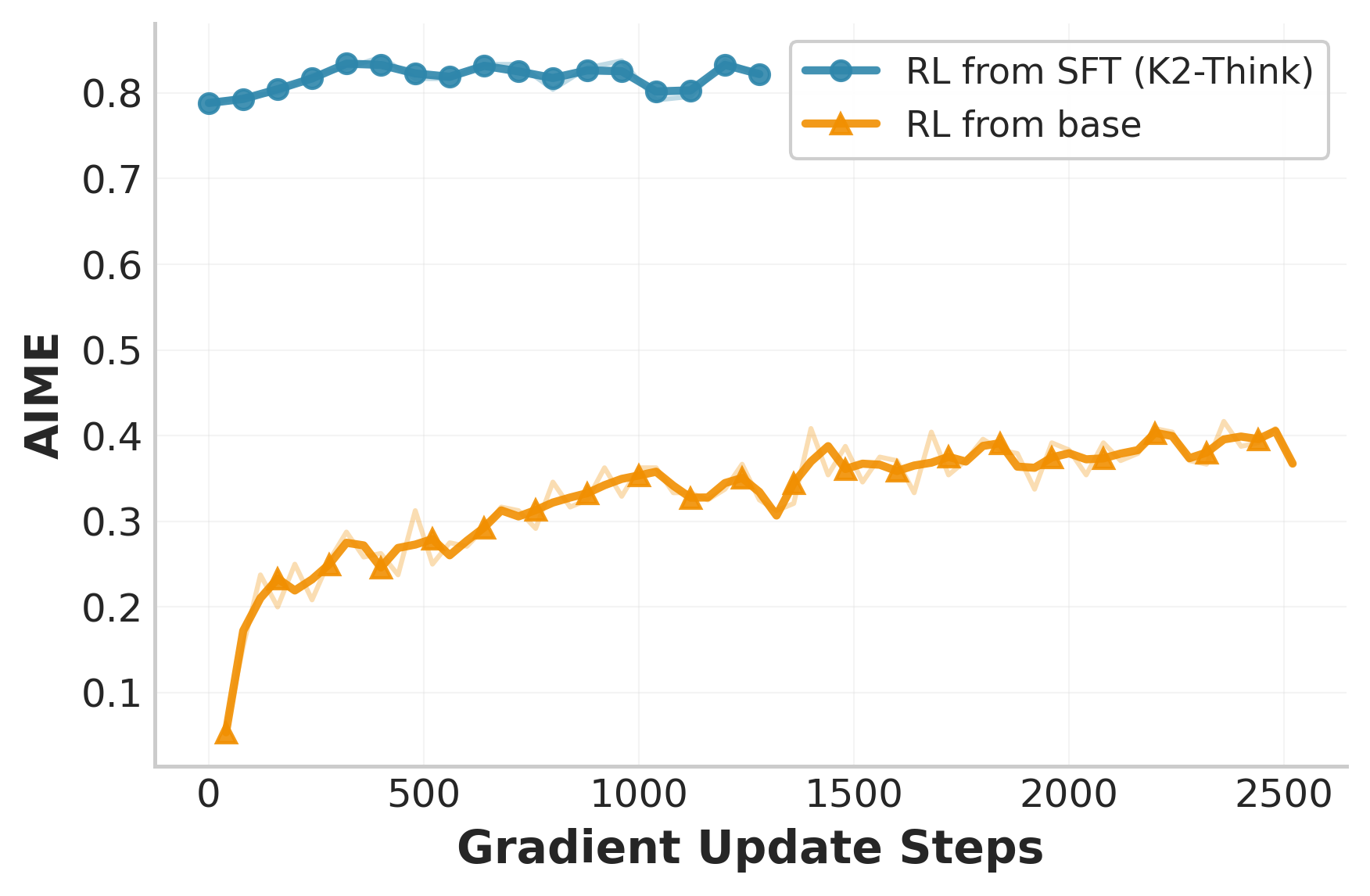

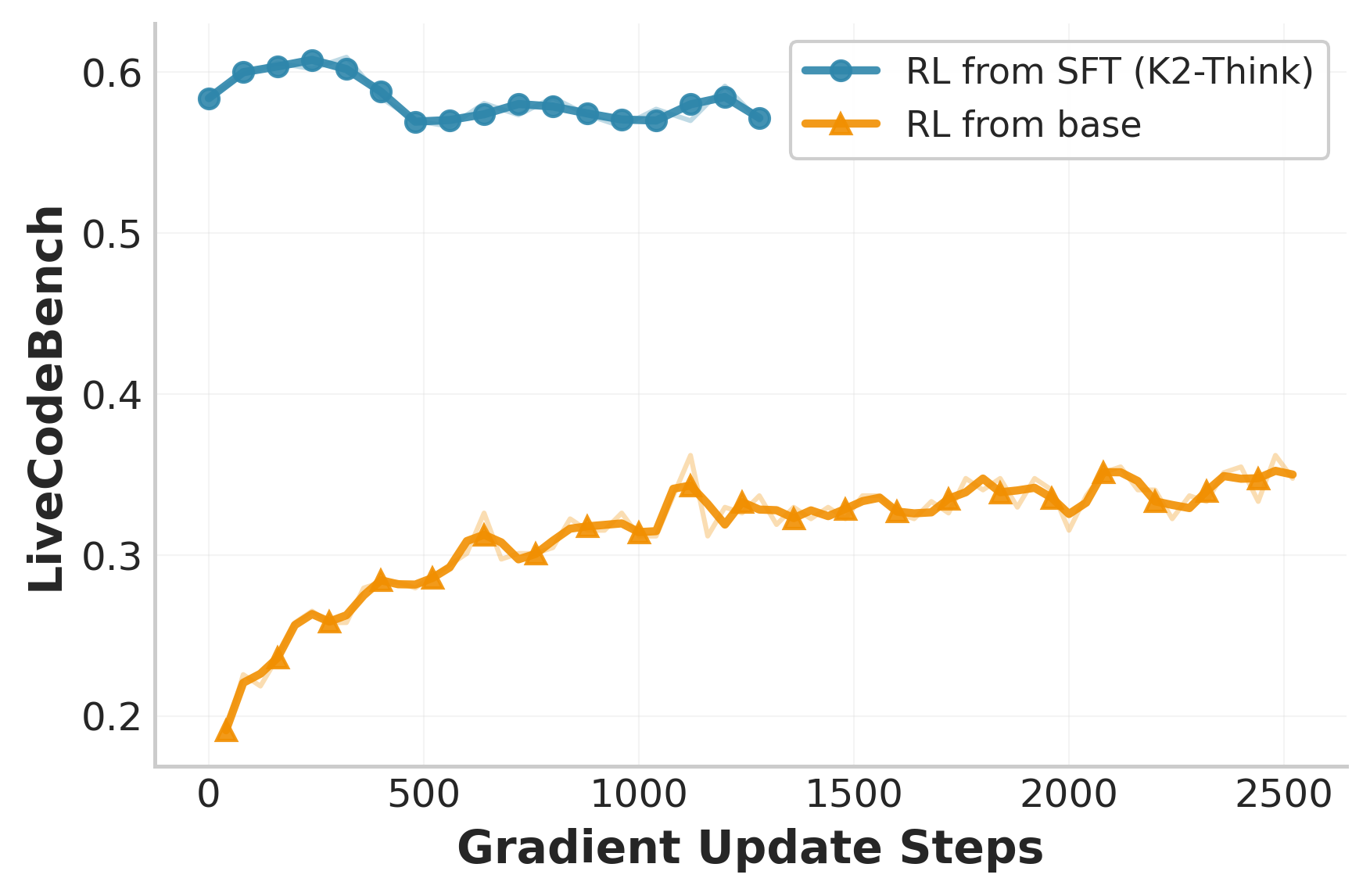

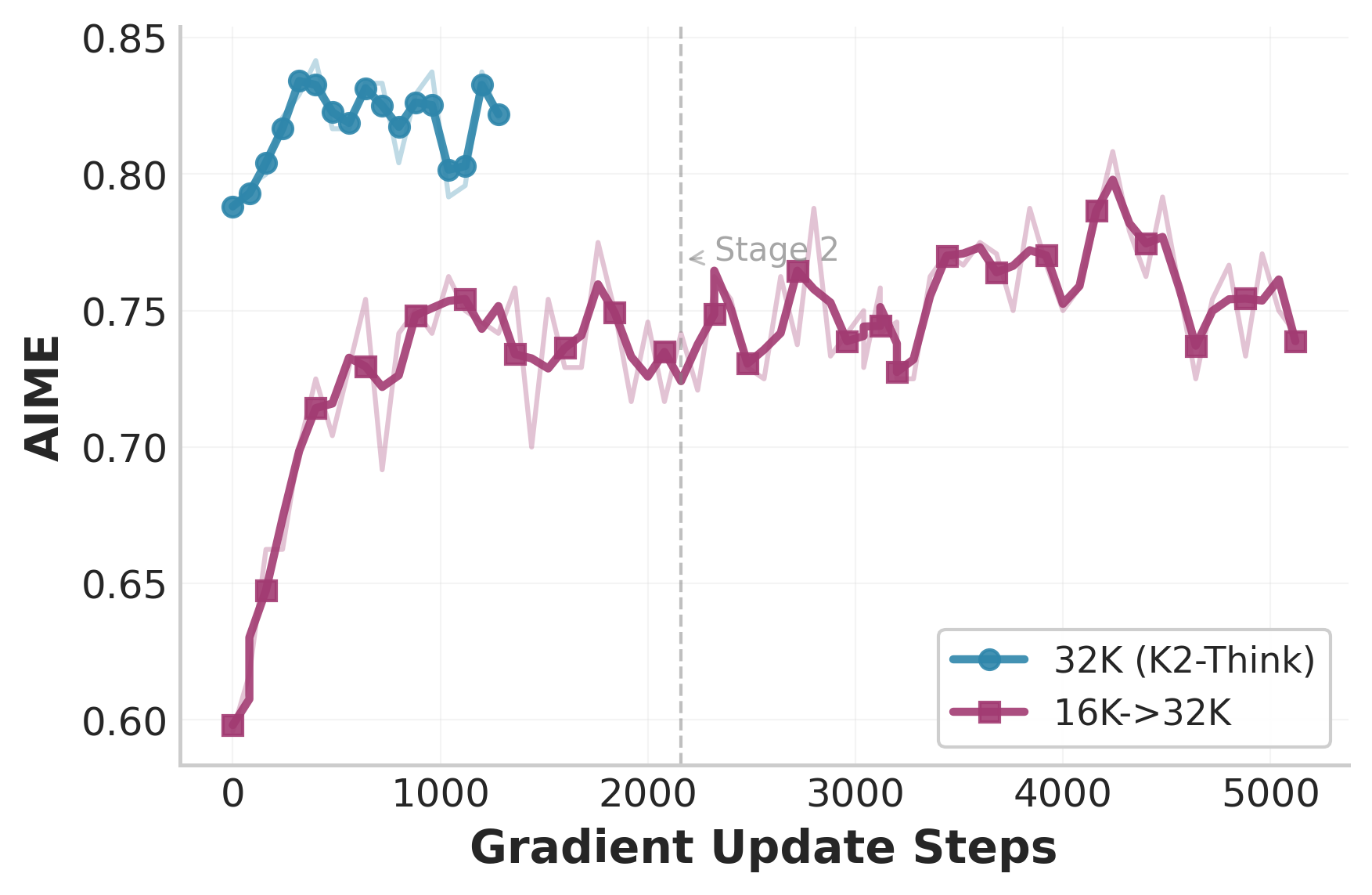

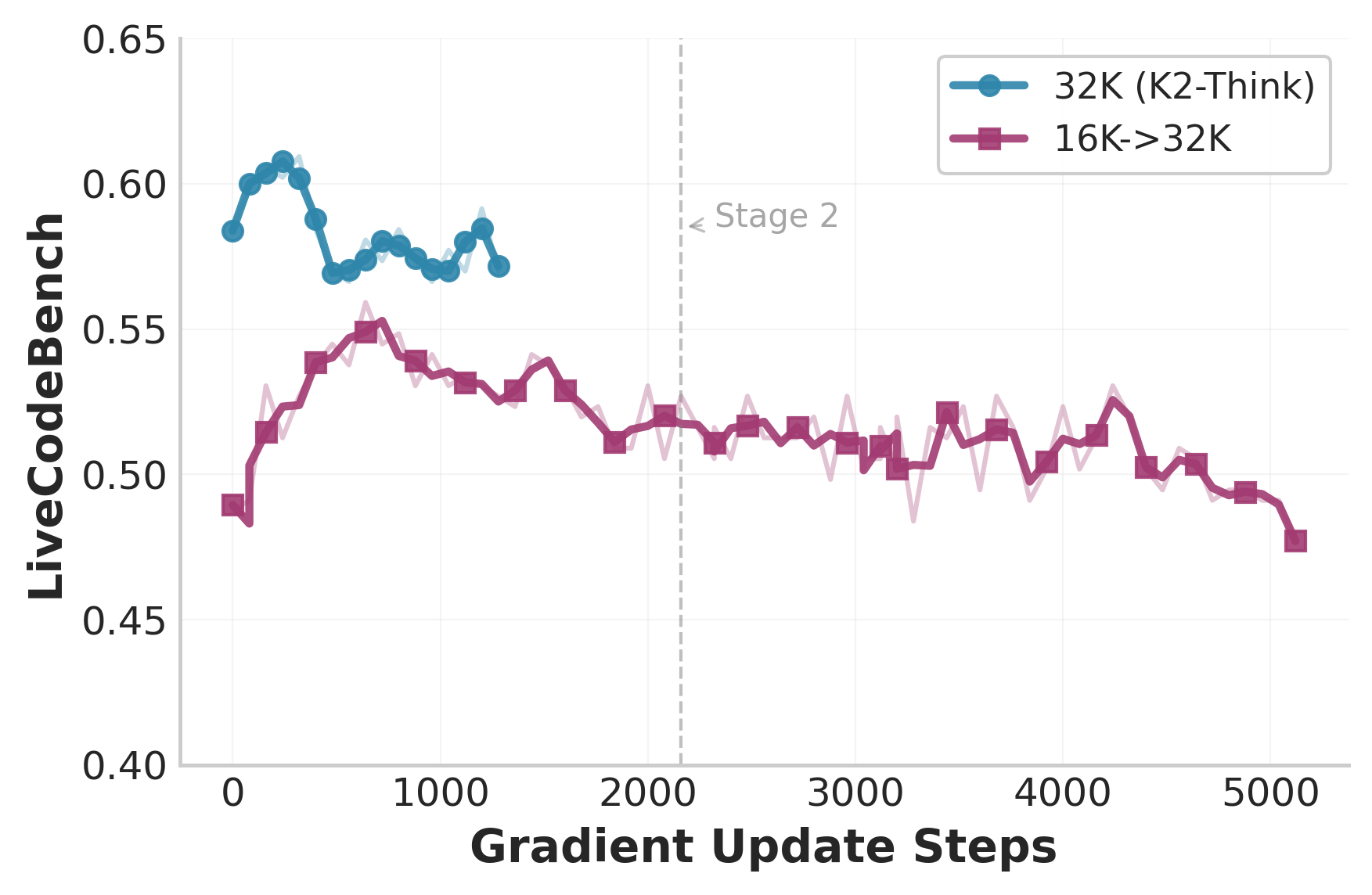

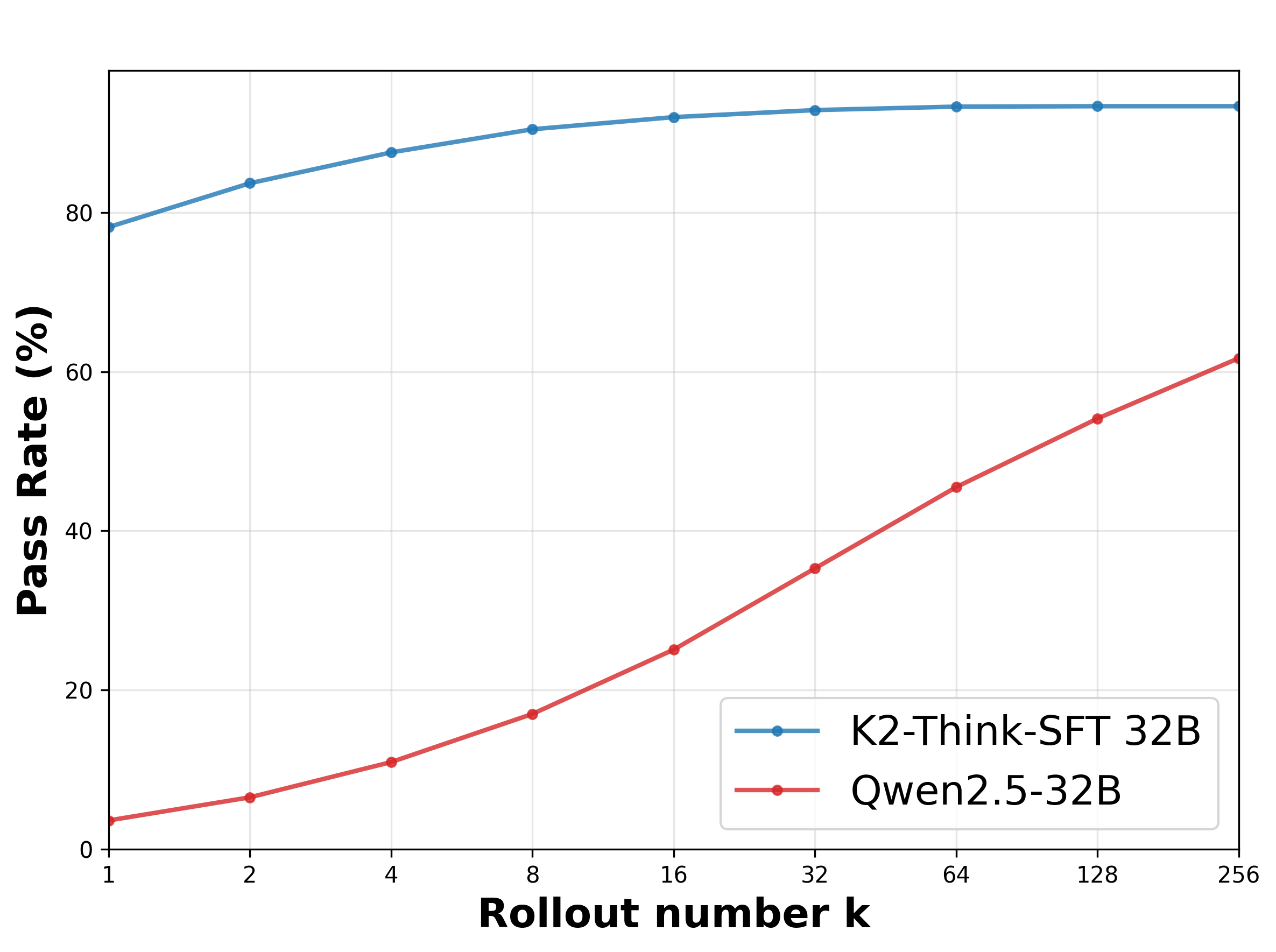

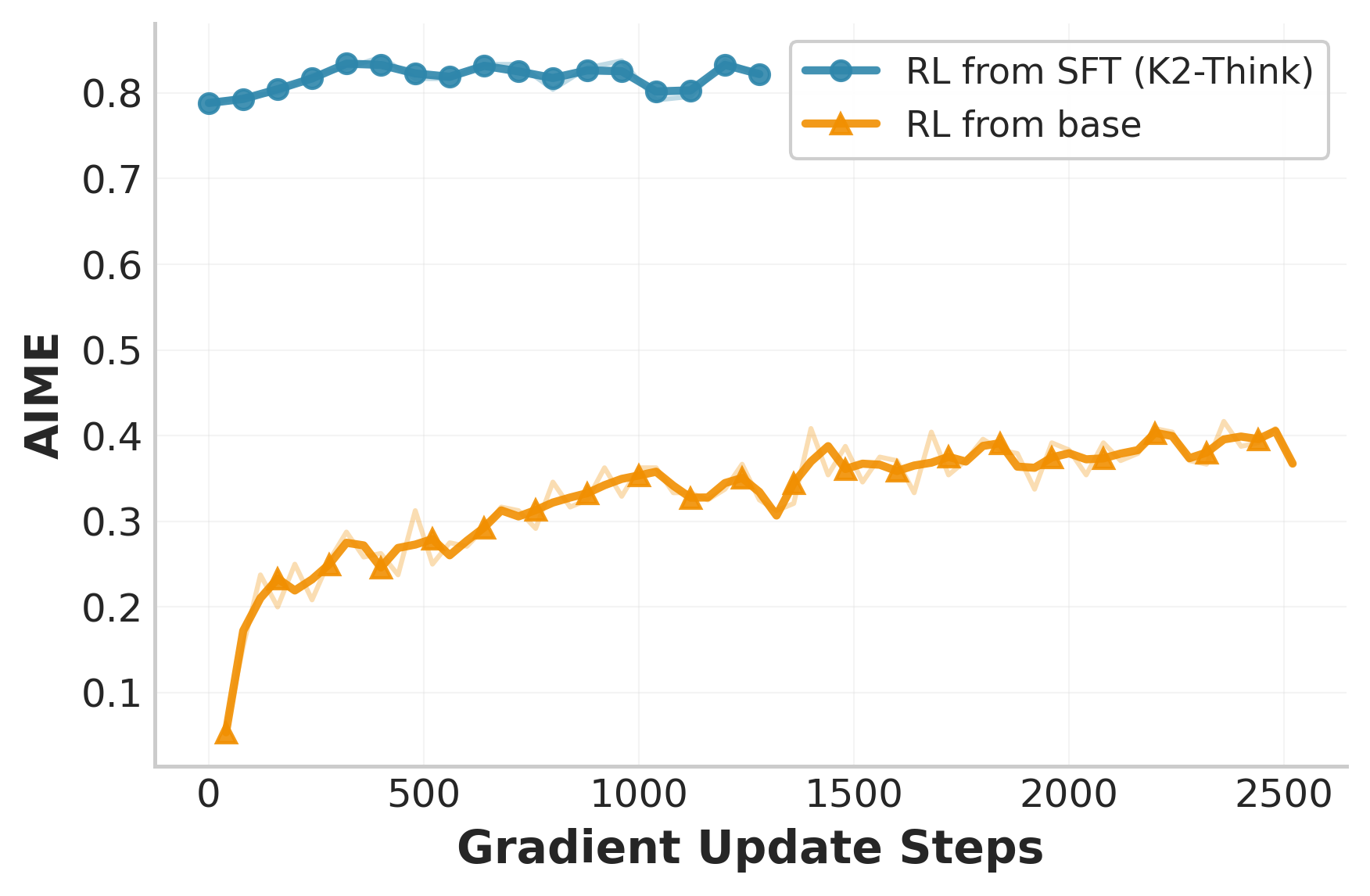

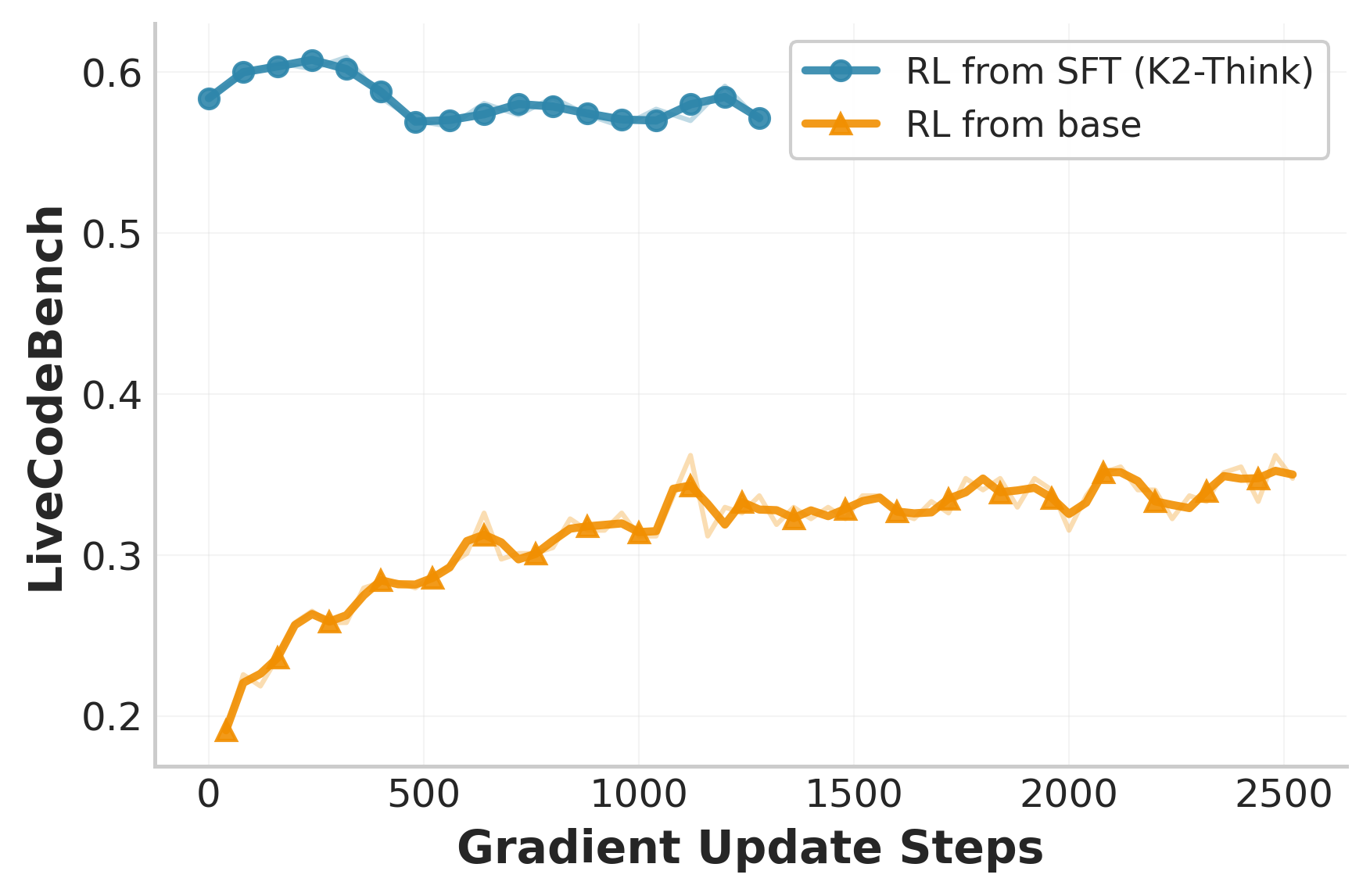

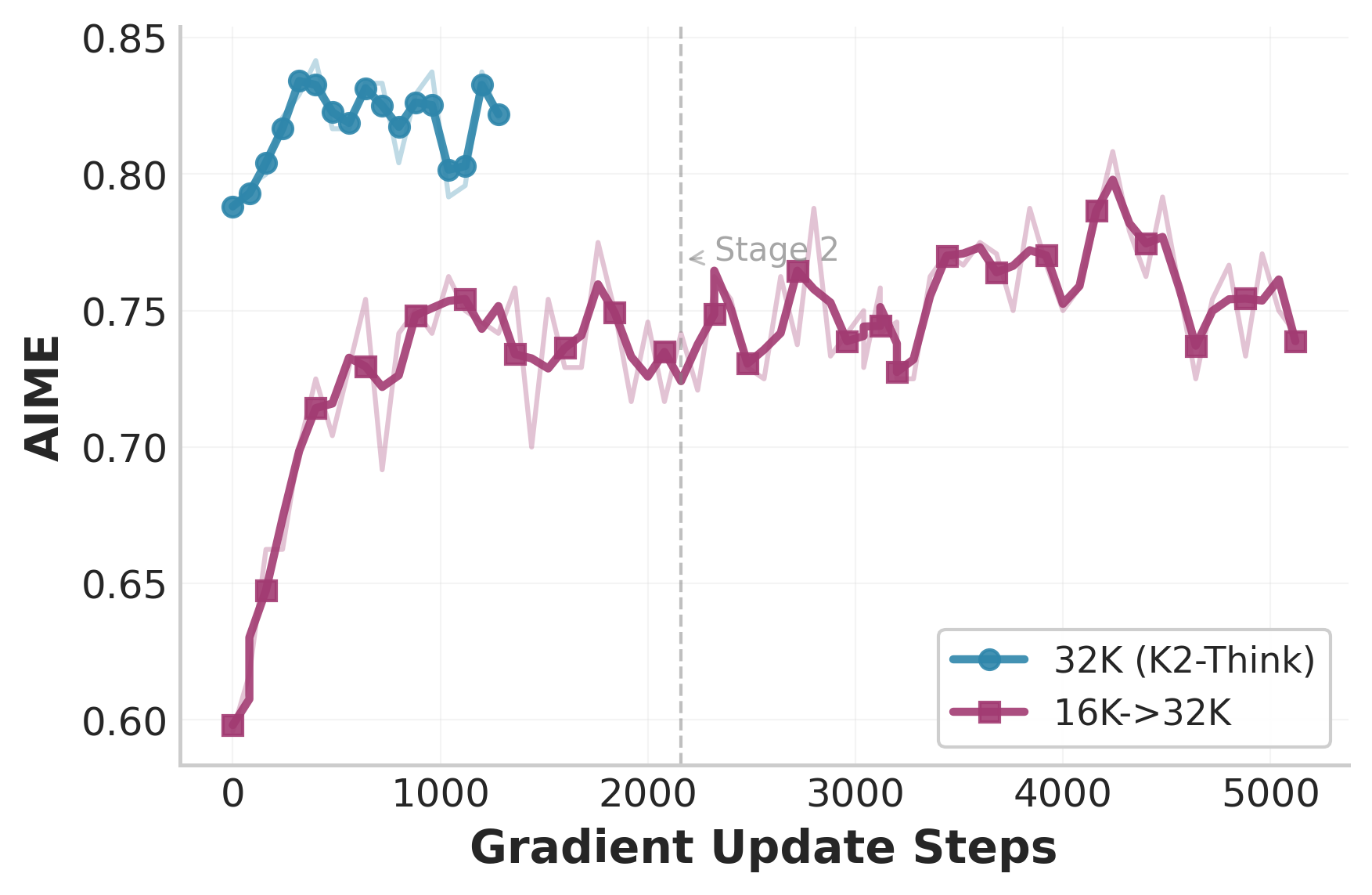

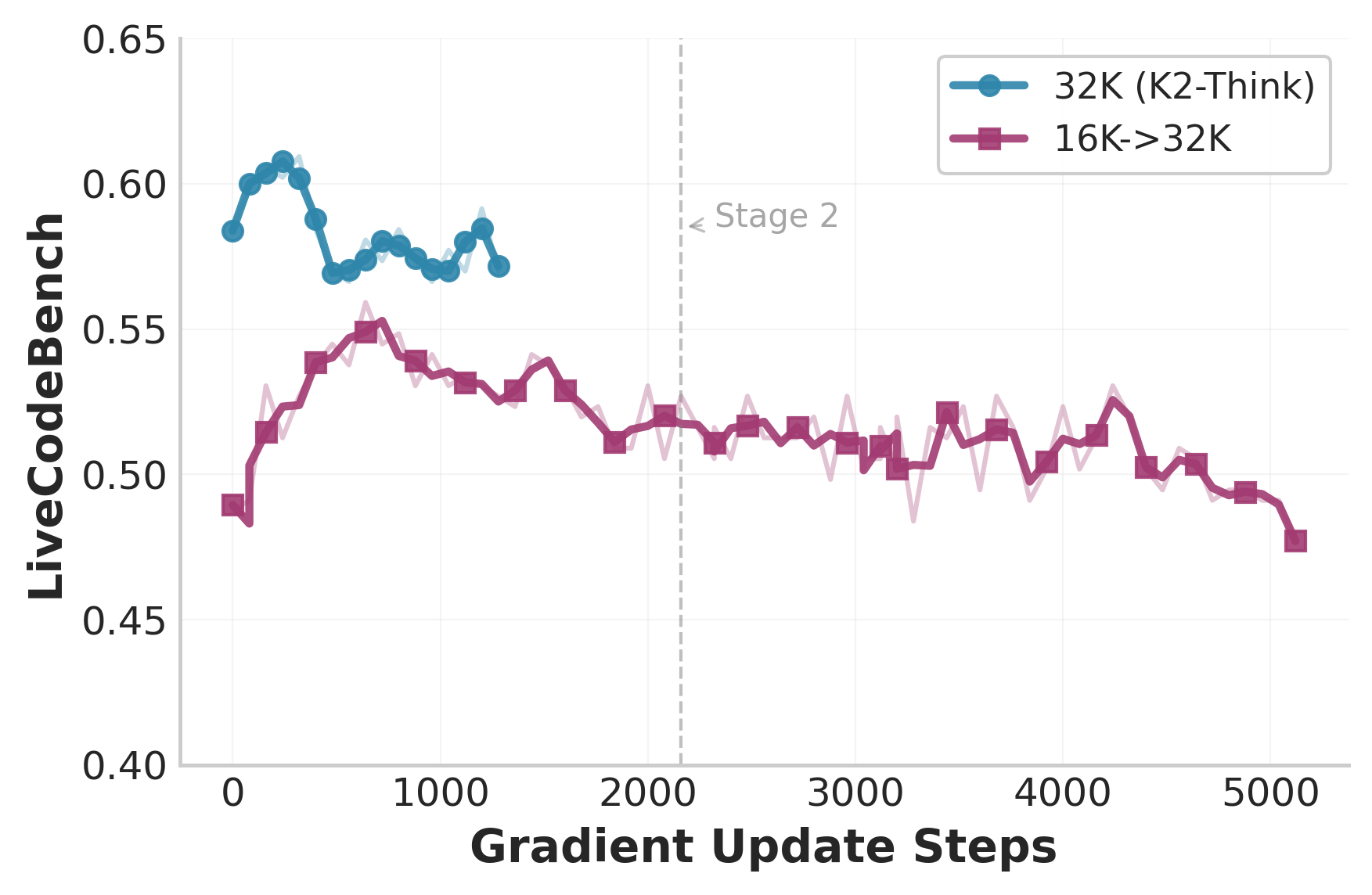

RLVR is applied post-SFT, using the Guru dataset spanning six verifiable domains. RLVR directly optimizes for correctness, bypassing the complexity of RLHF. Notably, RL from base models yields faster and larger performance gains than RL from SFT checkpoints, but SFTed models ultimately achieve higher absolute scores. Multi-stage RL with reduced context length degrades performance, indicating that context truncation disrupts established reasoning patterns.

Figure 3: Ablation studies show RL from base models achieves faster gains, but SFTed models reach higher scores; reducing response length in multi-stage training impairs performance.
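To make the idea of a verifiable reward concrete, here is a minimal sketch for a math-style task. It assumes the model places its final answer in a `\boxed{...}` expression and that correctness means an exact string match after light normalization. Neither the answer format nor the function names (`extract_boxed`, `normalize`, `verifiable_reward`) come from the paper, and verifiers for other domains, such as code, would look quite different.

```python
import re
from typing import Optional


def extract_boxed(response: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in the response, if any.

    Assumption: no nested braces inside the box (enough for this sketch).
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", response)
    return matches[-1] if matches else None


def normalize(answer: str) -> str:
    """Remove spaces and a trailing period so '42 .' and '42' compare equal."""
    return answer.replace(" ", "").rstrip(".")


def verifiable_reward(response: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted answer matches the reference, else 0.0."""
    predicted = extract_boxed(response)
    if predicted is None:
        # No parseable final answer counts as incorrect.
        return 0.0
    return 1.0 if normalize(predicted) == normalize(reference) else 0.0
```

Because the reward is computed by a rule rather than by a learned preference model, it can be checked automatically for every sampled response, which is what lets RLVR optimize for correctness directly.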

Test-Time Computation: Agentic Planning and Best-of-N Sampling

K2-Think introduces a test-time computation scaffold that combines agentic planning (“Plan-Before-You-Think”) and Best-of-N (BoN) sampling. An external model first generates a high-level plan from the user query, and this plan is appended to the prompt. The reasoning model then generates multiple responses, and an external verifier selects the best one.

Figure 4: Schematic of K2-Think’s test-time computation scaffold, integrating planning and response selection for optimal reasoning.

BoN sampling (N=3) provides significant performance improvements with minimal computational overhead. The gains from planning and BoN are additive, yielding 4–6 percentage points of improvement over post-trained checkpoints. Planning also reduces response length by up to 12%, resulting in more concise and higher-quality answers.
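The scaffold described above can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's implementation: the planner, the reasoning model, and the verifier are passed in as plain callables, the prompt template is invented, and the verifier is modeled as a scoring function even though the source only says that it "selects the best output". All names (`plan_then_best_of_n`, `plan`, `generate`, `score`) are hypothetical.

```python
import itertools
from typing import Callable, List


def plan_then_best_of_n(
    query: str,
    plan: Callable[[str], str],
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    n: int = 3,
) -> str:
    """Generate a plan, sample n responses conditioned on it, return the top-scored one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # Step 1: an external planner turns the query into a high-level plan.
    outline = plan(query)
    # Step 2: append the plan to the prompt (this template is an assumption).
    prompt = f"{query}\n\nPlan:\n{outline}"
    # Step 3: sample n candidate responses from the reasoning model.
    candidates: List[str] = [generate(prompt) for _ in range(n)]
    # Step 4: an external verifier picks the best candidate.
    return max(candidates, key=lambda response: score(query, response))


# Toy stubs standing in for real models, for illustration only.
drafts = itertools.cycle(["x = 3", "x = 4", "x = 5"])
best = plan_then_best_of_n(
    "Solve 2x = 8.",
    plan=lambda q: "Isolate x by dividing both sides by 2.",
    generate=lambda prompt: next(drafts),
    score=lambda q, r: 1.0 if r == "x = 4" else 0.0,
)
print(best)  # x = 4
```

Keeping the three components as separate callables mirrors the description in the text, where the planner and verifier are external to the reasoning model and could be swapped independently.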

Hardware Deployment and Inference Optimization

K2-Think is deployed on the Cerebras Wafer-Scale Engine (WSE), enabling inference speeds of up to 2,000 tokens per second, an order of magnitude faster than typical GPU deployments. This speed is critical for interactive use cases, especially when multi-step reasoning and BoN sampling are required. Because the WSE holds all model weights in on-chip memory, it avoids the memory bandwidth bottleneck and supports low-latency, high-throughput inference.
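A back-of-the-envelope calculation shows why throughput matters for this workload. The response length of 32,000 tokens and the GPU rate of 200 tokens per second are illustrative assumptions, the latter chosen only to reflect the order-of-magnitude gap stated above. The sketch also assumes that BoN candidates are generated one after another on a single stream, which a real deployment may avoid by batching.

```python
def generation_seconds(
    tokens_per_response: int, tokens_per_second: float, n_samples: int = 1
) -> float:
    """Wall-clock seconds to generate n_samples responses one after another."""
    return n_samples * tokens_per_response / tokens_per_second


RESPONSE_TOKENS = 32_000  # hypothetical long chain-of-thought response

for label, speed in [("WSE (2,000 tok/s)", 2_000.0), ("GPU (assumed 200 tok/s)", 200.0)]:
    single = generation_seconds(RESPONSE_TOKENS, speed)
    best_of_3 = generation_seconds(RESPONSE_TOKENS, speed, n_samples=3)
    print(f"{label}: {single:.0f} s per response, {best_of_3:.0f} s for N=3")
```

Under these assumptions a single long response takes 16 seconds at 2,000 tokens per second versus 160 seconds at 200, and the gap widens proportionally once three candidates are sampled.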

Empirical Results and Safety Analysis

K2-Think achieves a micro-average score of 67.99 on composite math benchmarks, outperforming similarly sized and even much larger models. It is also competitive in coding and science domains, demonstrating versatility. Safety evaluations across four dimensions (high-risk content refusal, conversational robustness, cybersecurity/data protection, and jailbreak resistance) yield a macro score of 0.75, indicating a solid safety profile with specific strengths in harmful content refusal and dialogue consistency.
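The two aggregate figures above use different averaging schemes, and the distinction affects how they should be read. The sketch below uses the standard definitions: a micro-average weights each benchmark by its number of questions, while a macro average treats every category equally. The benchmark names and all numbers are hypothetical and are not results from the paper.

```python
from typing import Dict, Tuple


def micro_average(results: Dict[str, Tuple[float, int]]) -> float:
    """Mean of per-benchmark scores weighted by each benchmark's question count."""
    total_questions = sum(count for _, count in results.values())
    weighted_sum = sum(score * count for score, count in results.values())
    return weighted_sum / total_questions


def macro_average(scores: Dict[str, float]) -> float:
    """Unweighted mean over categories."""
    return sum(scores.values()) / len(scores)


# Hypothetical numbers for illustration only; not results from the paper.
toy_math = {"benchmark_a": (90.0, 30), "benchmark_b": (60.0, 270)}
toy_safety = {"category_a": 0.25, "category_b": 0.75}

print(micro_average(toy_math))    # 63.0
print(macro_average(toy_safety))  # 0.5
```

In the toy math example the large benchmark dominates: the micro-average is 63.0, whereas an unweighted mean of 90.0 and 60.0 would be 75.0.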

Component Analysis and Practical Implications

The component analysis reveals that BoN sampling is the primary contributor to test-time performance gains, with planning providing additional improvement. The reduction in response length due to planning has practical implications for cost and efficiency in deployment. The system’s parameter efficiency and inference speed make it suitable for real-world applications where resource constraints and responsiveness are critical.

Theoretical and Practical Implications

K2-Think’s results challenge the prevailing assumption that scaling model size is the only path to improved reasoning performance. The findings support the hypothesis that post-training and test-time computation can be more cost-effective and scalable. The system’s architecture and deployment strategy provide a blueprint for future open-source reasoning models, emphasizing accessibility and affordability.

Future Directions

The paper suggests several avenues for future research:

- Expanding post-training to more domains, especially those underrepresented in pre-training.

- Further optimizing test-time computation, potentially integrating more sophisticated planning and selection mechanisms.

- Enhancing safety and robustness, particularly in cybersecurity and jailbreak resistance.

- Scaling deployment strategies to support even larger models and more complex reasoning workflows.

Conclusion

K2-Think demonstrates that a 32B-parameter model, when augmented with advanced post-training and test-time computation techniques, can achieve frontier-level reasoning performance in math, code, and science domains. The system’s parameter efficiency, inference speed, and safety profile establish it as a practical and versatile open-source reasoning model. The work provides strong evidence that strategic engineering and deployment can enable smaller models to “punch above their weight,” with significant implications for the future of accessible and efficient AI reasoning systems.