- The paper introduces DeepConf, a method that leverages token-level confidence metrics to filter out low-quality reasoning traces for improved accuracy and efficiency.

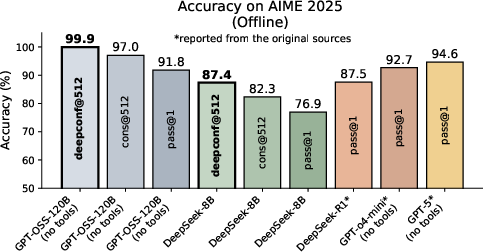

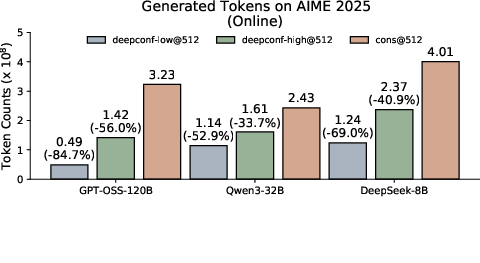

- It employs both offline confidence-weighted voting and online early-stopping methods, achieving up to 99.9% accuracy and an 84.7% reduction in token usage.

- The approach is model-agnostic and easily deployed, requiring no additional training while utilizing internal log-probabilities to assess trace quality.

Deep Think with Confidence: Confidence-Aware Test-Time Reasoning for LLMs

Introduction

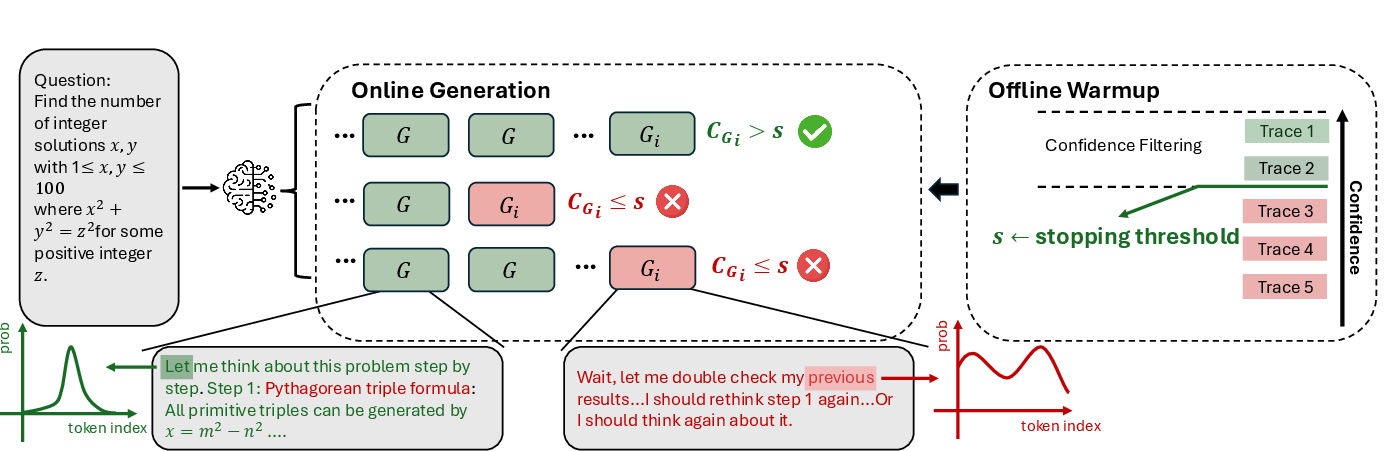

"Deep Think with Confidence" introduces DeepConf, a test-time method for improving both the efficiency and accuracy of LLMs on complex reasoning tasks. The method leverages model-internal confidence signals to filter out low-quality reasoning traces, either during (online) or after (offline) generation, without requiring any additional training or hyperparameter tuning. DeepConf is evaluated on a suite of challenging mathematical and STEM reasoning benchmarks using state-of-the-art open-source models, demonstrating substantial improvements in both accuracy and computational efficiency over standard self-consistency with majority voting.

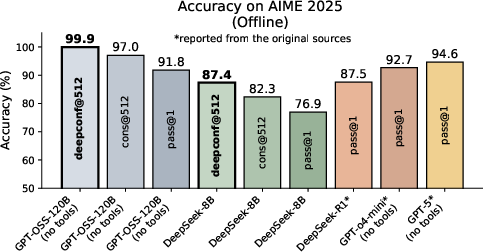

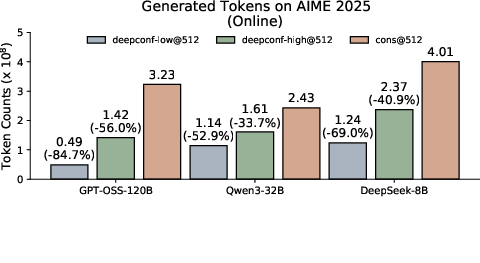

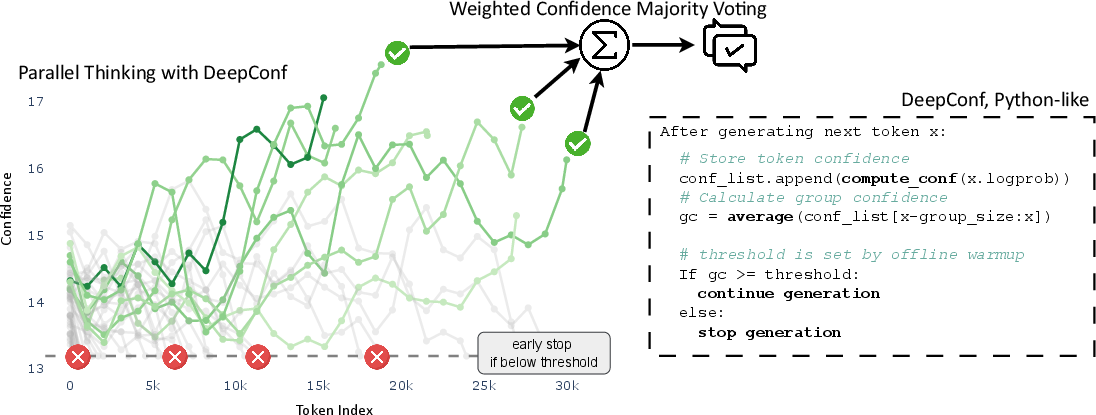

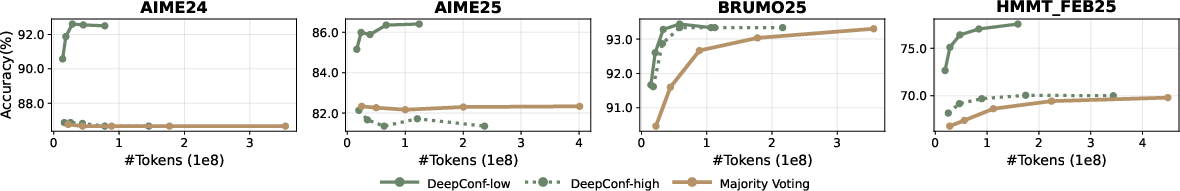

Figure 1: DeepConf on AIME 2025 (top) and parallel thinking using DeepConf (bottom).

Motivation and Background

Self-consistency with majority voting, which samples multiple reasoning paths and aggregates their answers, has become a standard approach for boosting LLM reasoning accuracy. However, this method incurs significant computational overhead, because inference cost grows linearly with the number of sampled traces. Moreover, majority voting treats all traces equally, ignoring the substantial variance in trace quality, which can lead to suboptimal or even degraded performance as the number of traces increases.

Recent work has explored using token-level statistics (e.g., entropy, confidence) to estimate the quality of reasoning traces. While global confidence measures (e.g., average trace confidence) can distinguish correct from incorrect traces, they obscure local reasoning failures and require full trace generation, precluding early termination of low-quality traces.

DeepConf: Confidence-Aware Reasoning

DeepConf addresses these limitations by introducing local, group-based confidence metrics and integrating them into both offline and online reasoning workflows.

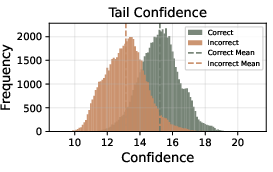

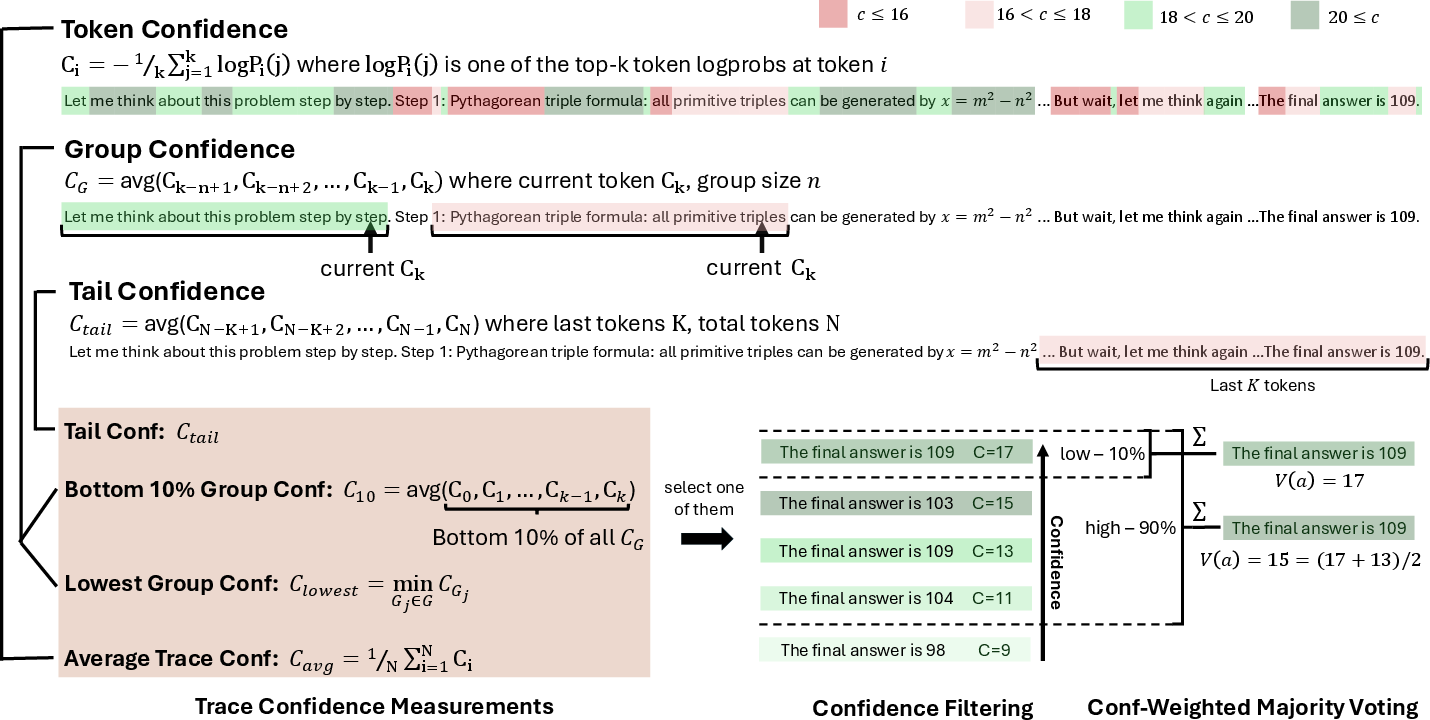

Confidence Metrics

- Token Entropy: $H_i = -\sum_j P_i(j) \log P_i(j)$, where $P_i(j)$ is the probability of the $j$-th token at position $i$.

- Token Confidence: $C_i = -\frac{1}{k} \sum_{j=1}^{k} \log P_i(j)$, the negative average log-probability of the top-$k$ tokens.

- Average Trace Confidence: $C_{\text{avg}} = \frac{1}{N} \sum_{i=1}^{N} C_i$.

- Group Confidence: Sliding window average of token confidence over n tokens.

- Bottom 10% Group Confidence: Mean of the lowest 10% group confidences in a trace.

- Lowest Group Confidence: Minimum group confidence in a trace.

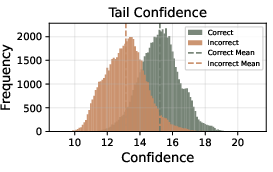

- Tail Confidence: Mean confidence over the final segment (e.g., last 2048 tokens) of a trace.
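The metrics listed above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names, the handling of traces shorter than one window, and the rounding used for the bottom fraction are assumptions made here for clarity.

```python
import math


def token_entropy(probs):
    """H_i = -sum_j P_i(j) log P_i(j) over one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def token_confidence(top_k_logprobs):
    """C_i: negative mean of the top-k log-probabilities at one position."""
    return -sum(top_k_logprobs) / len(top_k_logprobs)


def average_trace_confidence(token_confs):
    """C_avg: mean token confidence over the whole trace."""
    return sum(token_confs) / len(token_confs)


def group_confidences(token_confs, window):
    """Mean token confidence over each sliding window of `window` tokens.

    Assumption: a trace shorter than one window yields a single group.
    """
    if len(token_confs) <= window:
        return [sum(token_confs) / len(token_confs)]
    running = sum(token_confs[:window])
    groups = [running / window]
    for i in range(window, len(token_confs)):
        running += token_confs[i] - token_confs[i - window]
        groups.append(running / window)
    return groups


def bottom_fraction_group_confidence(groups, fraction=0.10):
    """Mean of the lowest `fraction` of group confidences (at least one group)."""
    count = max(1, int(len(groups) * fraction))
    return sum(sorted(groups)[:count]) / count


def lowest_group_confidence(groups):
    """Minimum group confidence in the trace."""
    return min(groups)


def tail_confidence(token_confs, tail_length=2048):
    """Mean token confidence over the final `tail_length` tokens."""
    tail = token_confs[-tail_length:]
    return sum(tail) / len(tail)
```

Because the group-based metrics only need a running window sum, they can be updated token by token, which is what makes them usable for early stopping.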

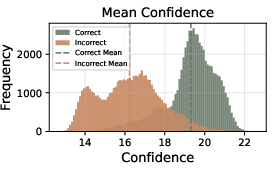

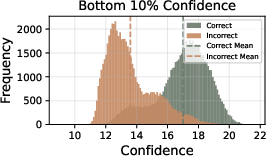

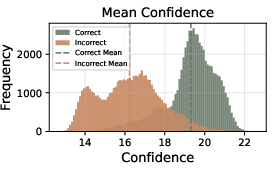

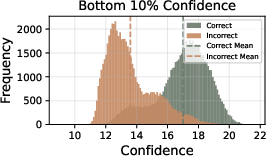

Figure 2: Confidence distributions for correct vs. incorrect reasoning traces across different metrics.

Empirically, bottom 10% and tail confidence metrics provide better separation between correct and incorrect traces than global averages, indicating their utility for trace quality estimation.

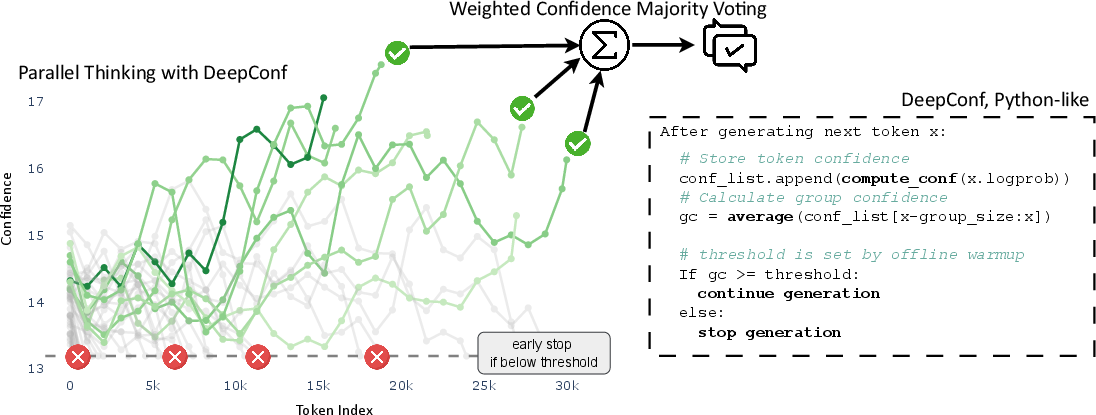

Figure 3: Visualization of confidence measurements and offline thinking with confidence.

Offline DeepConf

In the offline setting, all traces are generated before aggregation. DeepConf applies confidence-weighted majority voting, optionally filtering to retain only the top η% of traces by confidence. This approach can be instantiated with any of the above confidence metrics.
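As a rough sketch of the offline procedure, the snippet below keeps the most confident fraction of traces and lets each surviving trace vote with a weight equal to its confidence score. Using the raw confidence as the vote weight, the `keep_fraction` parameter name, and the example numbers are all assumptions for illustration.

```python
import math
from collections import Counter, defaultdict


def majority_vote(traces):
    """Baseline: every trace counts once. `traces` is a list of (answer, confidence)."""
    return Counter(answer for answer, _ in traces).most_common(1)[0][0]


def confidence_weighted_vote(traces, keep_fraction=1.0):
    """Keep the top `keep_fraction` of traces by confidence, then sum confidences per answer."""
    if not traces:
        raise ValueError("need at least one trace")
    ranked = sorted(traces, key=lambda t: t[1], reverse=True)
    keep = max(1, math.ceil(len(ranked) * keep_fraction))
    totals = defaultdict(float)
    for answer, confidence in ranked[:keep]:
        totals[answer] += confidence
    return max(totals, key=totals.get)


# Illustrative (made-up) traces: three low-confidence votes for "17",
# two high-confidence votes for "42".
example = [("42", 9.0), ("17", 3.0), ("17", 3.5), ("17", 3.2), ("42", 8.0)]
print(majority_vote(example))
print(confidence_weighted_vote(example))
print(confidence_weighted_vote(example, keep_fraction=0.4))
```

The confidence passed in for each trace could be any of the metrics above, for example bottom 10% group confidence or tail confidence.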

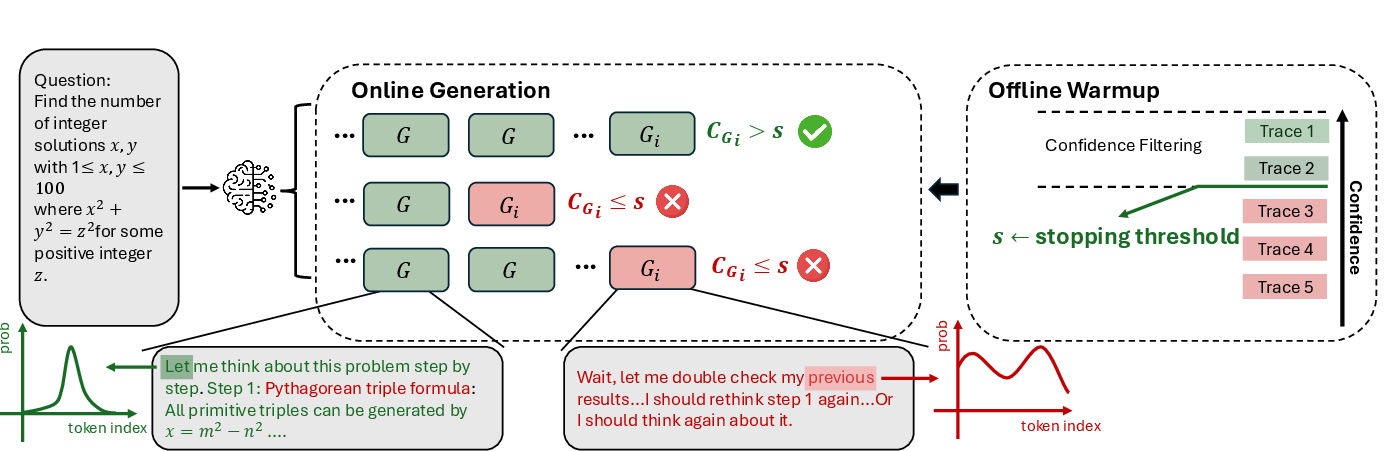

Online DeepConf

In the online setting, DeepConf enables early termination of low-confidence traces during generation, reducing unnecessary computation. The method uses a warmup phase to calibrate a confidence threshold, then halts traces whose group confidence falls below this threshold. Adaptive sampling is used to dynamically adjust the number of traces based on consensus among generated answers.

Figure 5: DeepConf during online generation.
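The online procedure can be approximated by three small pieces: calibrating a threshold from warmup traces, halting a trace once its sliding-window confidence drops below that threshold, and checking whether the answers collected so far agree strongly enough to stop sampling. The sketch below assumes the threshold is chosen so that a given fraction of warmup traces would survive, and that consensus is measured as the leading answer's share of total vote weight; both choices, and all names, are assumptions rather than details confirmed by this summary.

```python
import math


def calibrate_threshold(warmup_confidences, keep_fraction):
    """Return the confidence that the top `keep_fraction` of warmup traces meet or exceed."""
    ranked = sorted(warmup_confidences, reverse=True)
    keep = max(1, math.ceil(len(ranked) * keep_fraction))
    return ranked[keep - 1]


def tokens_before_stop(token_confs, window, threshold):
    """Number of tokens generated before the trace is halted (full length if never halted)."""
    running = 0.0
    for i, conf in enumerate(token_confs):
        running += conf
        if i >= window:
            running -= token_confs[i - window]  # drop the token that left the window
        if i + 1 >= window and running / window < threshold:
            return i + 1
    return len(token_confs)


def consensus_reached(vote_weights, target):
    """True when the leading answer holds at least `target` of the total vote weight."""
    total = sum(vote_weights.values())
    return total > 0 and max(vote_weights.values()) / total >= target


# Illustrative values only.
threshold = calibrate_threshold([5.0, 4.0, 3.0, 2.0], keep_fraction=0.5)
print(tokens_before_stop([5.0, 5.0, 2.0, 2.0, 5.0], window=2, threshold=threshold))
print(consensus_reached({"42": 9.0, "17": 1.0}, target=0.8))
```

A smaller `keep_fraction` gives a stricter threshold and more aggressive early stopping, mirroring the trade-off between aggressive and conservative filtering in the offline setting.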

Experimental Results

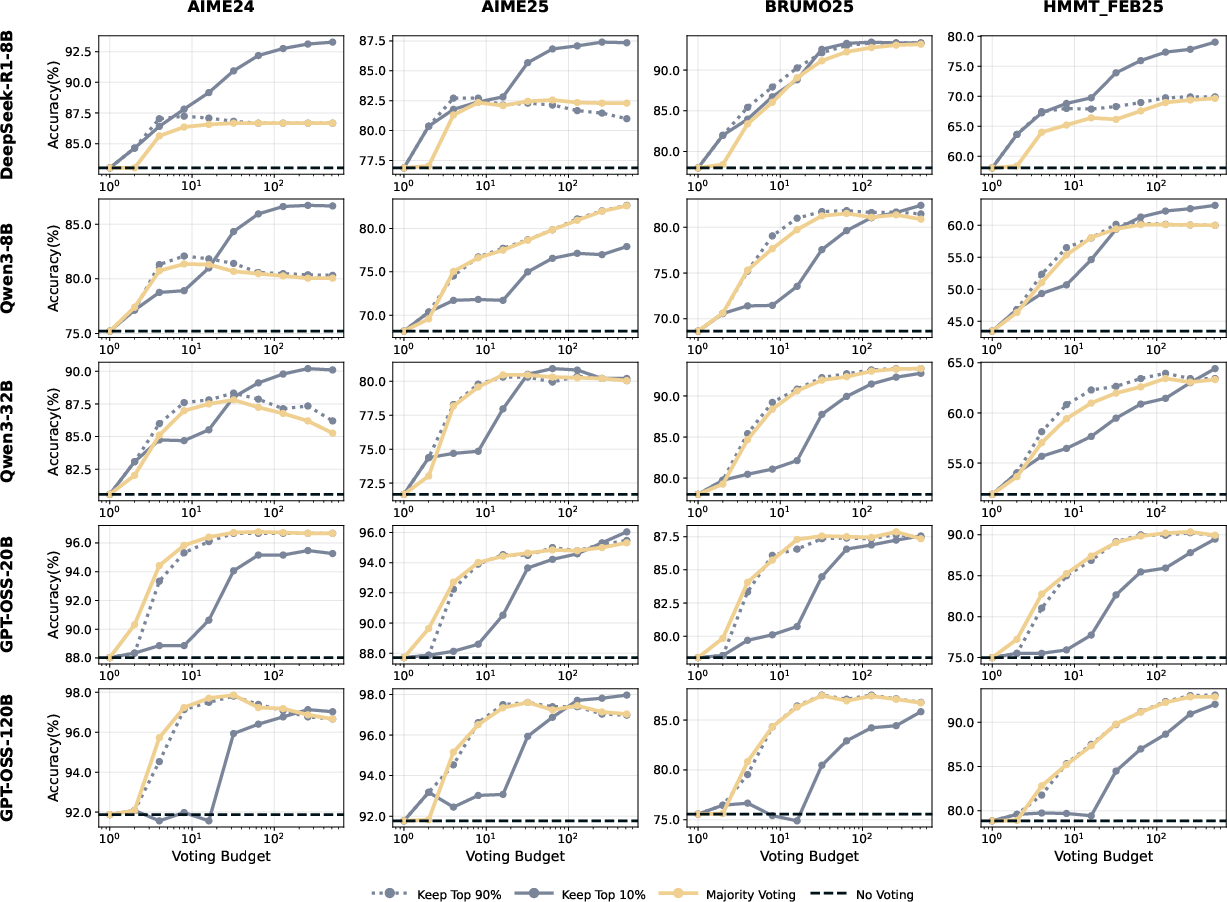

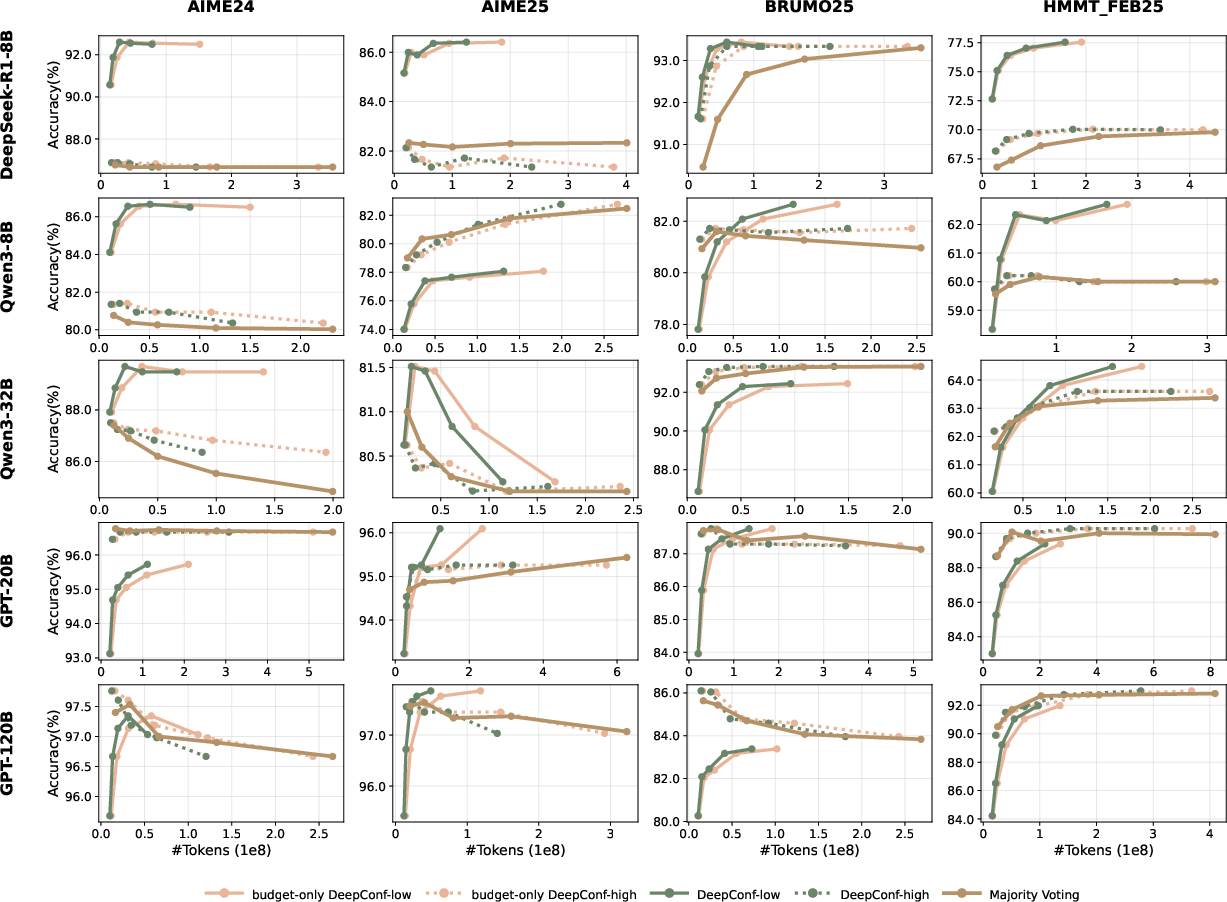

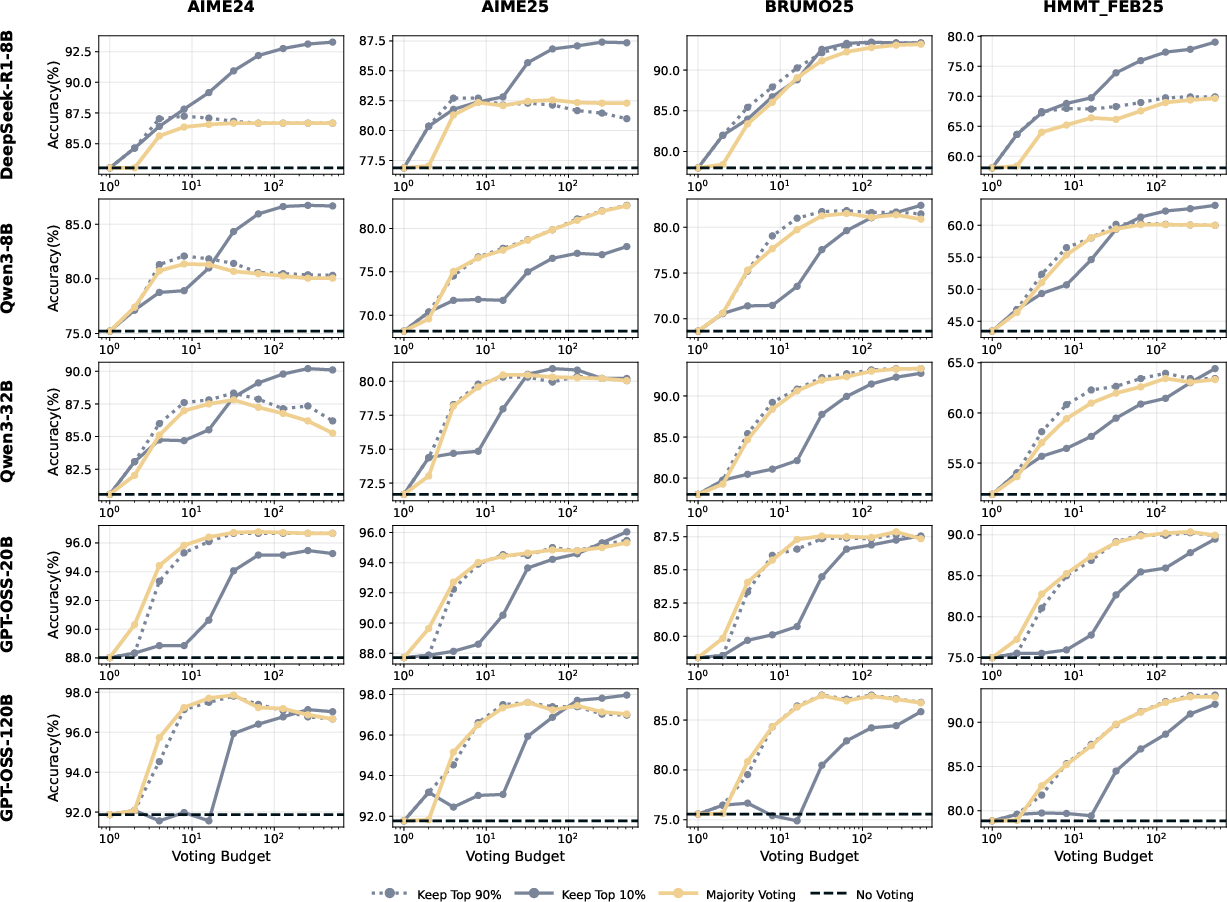

Offline Evaluations

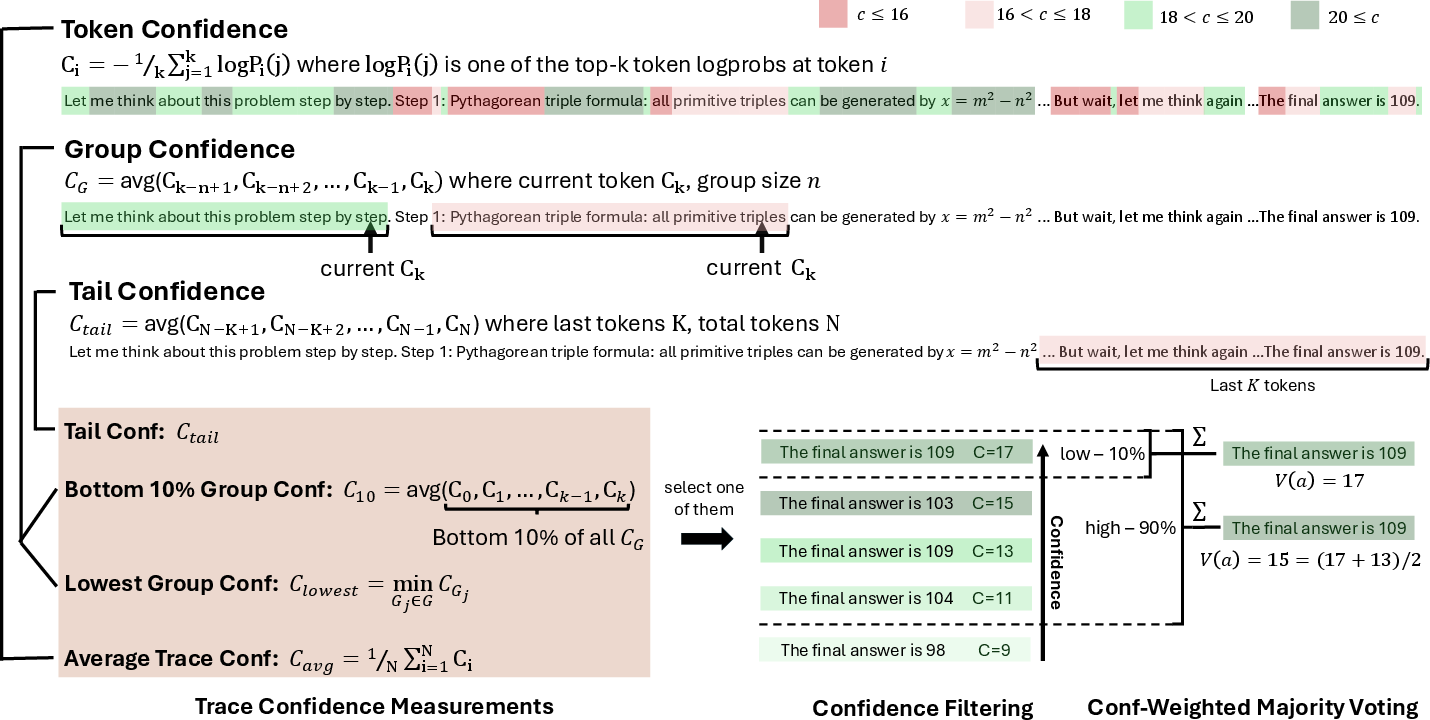

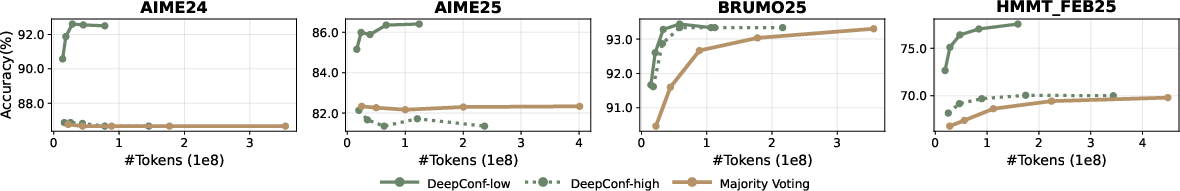

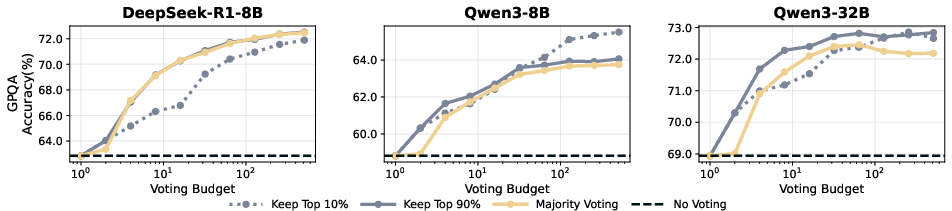

Across five open-source models and five challenging benchmarks, DeepConf consistently outperforms standard majority voting. For example, on AIME 2025 with GPT-OSS-120B, DeepConf@512 achieves 99.9% accuracy (vs. 97.0% for majority voting), and similar gains are observed for smaller models and other datasets. Aggressive filtering (top 10%) yields the largest improvements, but can occasionally hurt performance due to overconfident errors; conservative filtering (top 90%) is safer but yields smaller gains.

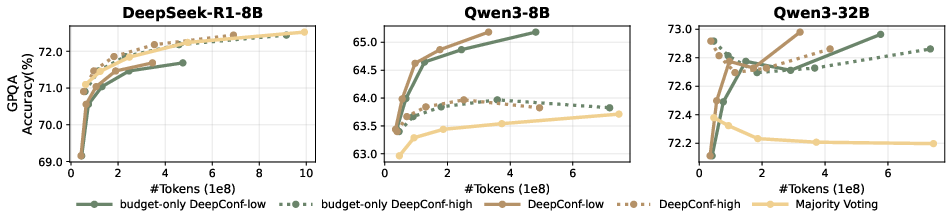

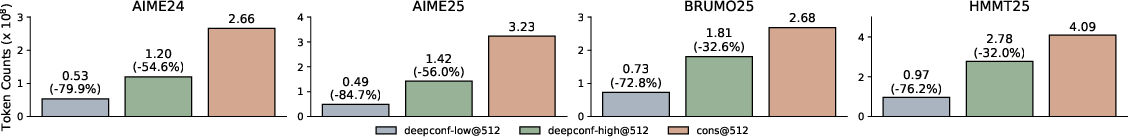

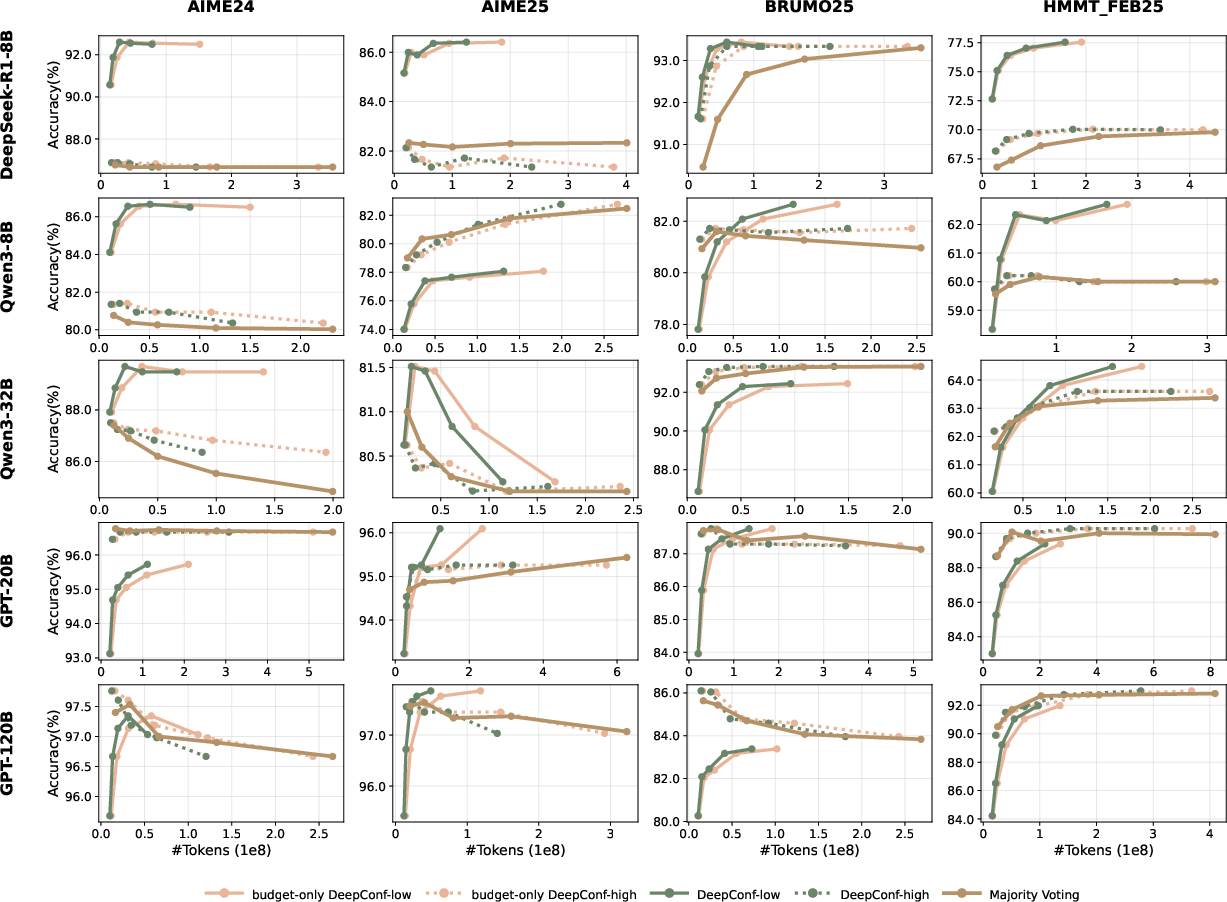

Online Evaluations

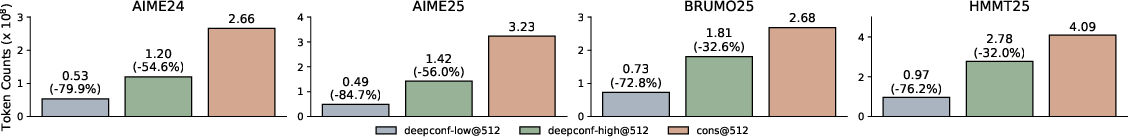

Online DeepConf achieves substantial token savings (up to an 84.7% reduction) while maintaining or improving accuracy. For instance, on AIME 2025 with GPT-OSS-120B, DeepConf-low reduces token usage from $3.23 \times 10^{8}$ to $0.49 \times 10^{8}$ with a slight accuracy gain (97.1% to 97.9%). The method is robust across model scales and datasets.
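As a quick sanity check on the AIME 2025 figures quoted above, the relative token reduction implied by the two rounded token counts is

$$
1 - \frac{0.49 \times 10^{8}}{3.23 \times 10^{8}} \approx 1 - 0.152 = 0.848,
$$

that is, roughly 85%. This agrees with the reported 84.7% once the rounding of the two token counts to two decimal places is taken into account.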

Figure 6: Generated tokens comparison across different tasks based on GPT-OSS-120B.

Figure 7: Scaling behavior: Model accuracy vs. voting size for different methods using offline DeepConf.

Figure 8: Scaling behavior: Model accuracy vs. token cost for different methods using online DeepConf.

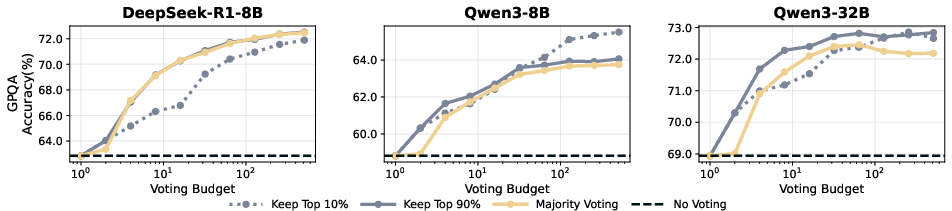

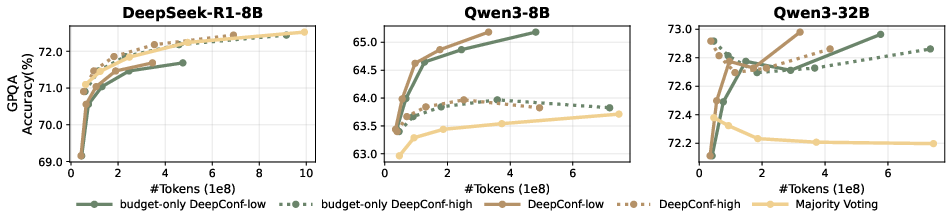

Figure 9: Scaling behavior: Model accuracy vs. budget size for different methods on GPQA-Diamond.

Figure 10: Scaling behavior: Model accuracy vs. token cost for different methods on GPQA-Diamond.

Implementation and Deployment

DeepConf is designed for minimal integration overhead. It requires only access to token-level log-probabilities and can be implemented with minor modifications to popular inference engines such as vLLM. The online variant additionally needs a sliding-window computation of group confidence and a simple early-stopping criterion. The method works with OpenAI-compatible APIs and can be toggled per request.
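To illustrate how little is needed on the client side, the following sketch turns a response that carries per-token top-k log-probabilities into token confidences. It assumes the response is a plain dictionary in the Chat Completions `logprobs` layout (`choices[0].logprobs.content[i].top_logprobs[j].logprob`), as returned when log-probabilities are requested; the helper name is hypothetical, and the exact fields exposed by a given server should be checked against its documentation.

```python
def token_confidences_from_response(response):
    """Compute C_i for each generated token from a Chat Completions style response dict.

    Assumption: each entry in `content` has a `top_logprobs` list of
    {"token": ..., "logprob": ...} alternatives.
    """
    entries = response["choices"][0]["logprobs"]["content"]
    confidences = []
    for entry in entries:
        logprobs = [alt["logprob"] for alt in entry.get("top_logprobs", [])]
        if not logprobs:
            continue  # skip positions where no alternatives were returned
        confidences.append(-sum(logprobs) / len(logprobs))
    return confidences


# Hand-written response fragment for illustration; not real model output.
fake_response = {
    "choices": [{
        "logprobs": {
            "content": [
                {"token": "4", "logprob": -1.0,
                 "top_logprobs": [{"token": "4", "logprob": -1.0},
                                  {"token": "5", "logprob": -3.0}]},
                {"token": "2", "logprob": -0.5,
                 "top_logprobs": [{"token": "2", "logprob": -0.5},
                                  {"token": "3", "logprob": -1.5}]},
            ]
        }
    }]
}
print(token_confidences_from_response(fake_response))
```

The resulting list can be fed directly into a sliding-window group confidence computation or into any of the trace-level metrics.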

Resource and Scaling Considerations

- Computational Savings: DeepConf achieves up to an 84.7% reduction in generated tokens while maintaining or improving accuracy, enabling significant cost savings in large-scale deployments.

- Model-Agnostic: The method is effective across a wide range of model sizes (8B–120B) and architectures.

- No Training Required: DeepConf operates entirely at test time, requiring no additional model training or fine-tuning.

Limitations and Future Directions

A key limitation is the potential for overconfident but incorrect traces to dominate the filtered ensemble, particularly under aggressive filtering. Future work should address confidence calibration and explore integration with uncertainty quantification methods. Extending DeepConf to reinforcement learning settings, where confidence-based early stopping could guide exploration, is a promising direction.

Conclusion

DeepConf provides a practical, scalable approach for improving both the efficiency and accuracy of LLM reasoning via confidence-aware filtering and early stopping. The method is simple to implement, model-agnostic, and delivers strong empirical gains across diverse reasoning tasks and model scales. These results underscore the value of leveraging model-internal confidence signals for test-time reasoning optimization and suggest broad applicability in real-world LLM deployments.