- The paper introduces a reinforcement learning framework that enhances autonomous single-agent reasoning for deep research tasks.

- It employs an agentic inference pipeline with memory management that reframes multi-turn tool interactions as single-turn contextual question-answering tasks.

- Experimental results show significant performance gains and efficient tool usage with models such as QwQ-32B and Qwen3, and the SFR-DR-20B variant reaches up to 28.7% on Humanity's Last Exam.

SFR-DeepResearch: Towards Effective Reinforcement Learning for Autonomously Reasoning Single Agents

Introduction

The paper "SFR-DeepResearch: Towards Effective Reinforcement Learning for Autonomously Reasoning Single Agents" proposes a framework for developing Autonomous Single-Agent models optimized for Deep Research (DR) tasks. Unlike multi-agent systems that follow predefined workflows, a single agent decides its own actions using a minimal set of tools, such as web crawling and a Python tool. The research centers on continual reinforcement learning (RL) of reasoning-optimized models, which strengthens their agentic skills while preserving their reasoning capabilities.

Methodology

Agentic Inference Pipeline

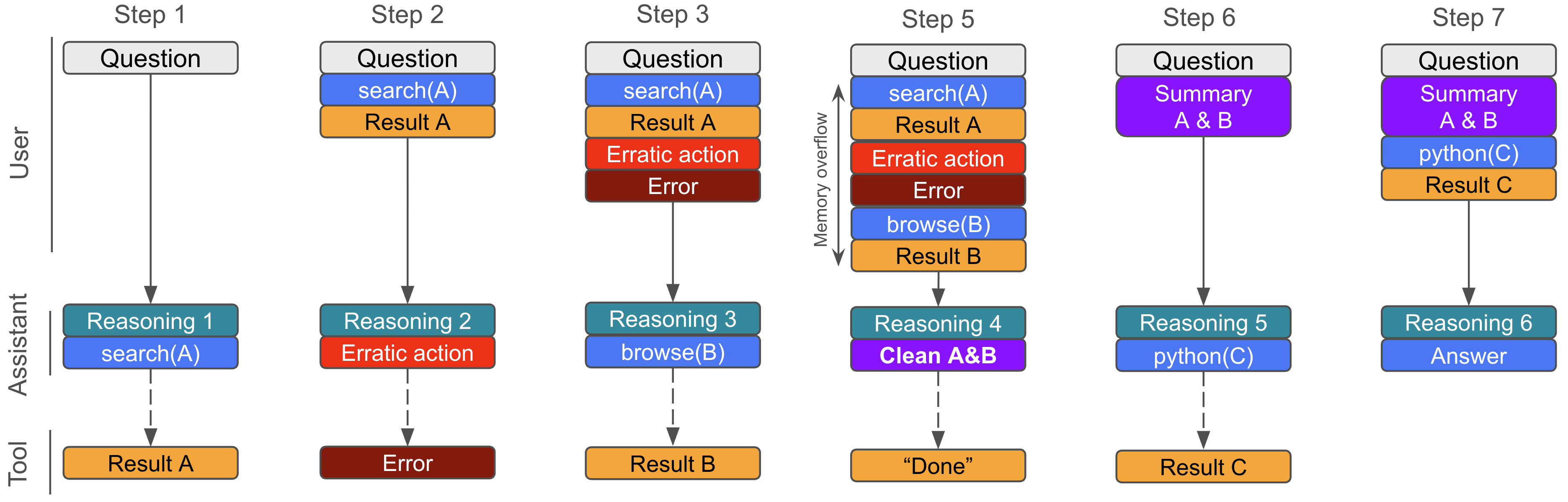

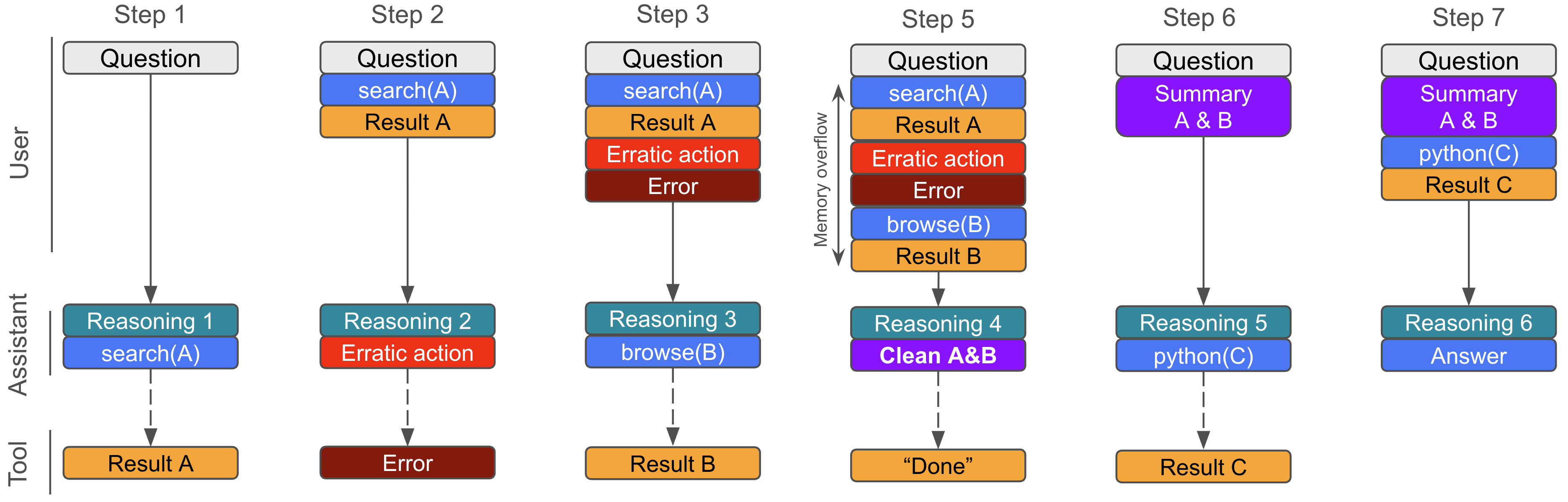

The pipeline uses agentic scaffolding that resembles a multi-turn conversation with tools, together with a memory management system that gives the agent an effectively unlimited context window. For models like QwQ-32B and Qwen3, the interaction is reframed as a single-turn contextual question-answering task: previous tool calls and their responses are packed into memory and presented together with the question (Figure 1).

Figure 1: An example tool-calling trajectory from our SFR-DR agentic workflow, tailored to QwQ-32B and Qwen3 models. The multi-turn interaction is framed as a single-turn contextual question-answering problem with only one user turn: the previous tool calls and responses are packed as memory and placed in that user turn together with the question.
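The packing shown in Figure 1 can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the SFR-DR implementation: the `ToolStep` record, the `<memory>`/`<tool_call>` tag layout, the character budget, and the drop-oldest `compact_memory` policy are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class ToolStep:
    """One past tool interaction (hypothetical record format)."""
    call: str      # serialized tool call the model emitted
    response: str  # raw output returned by the tool


def render_step(step: ToolStep) -> str:
    # Hypothetical tag layout for one call/response pair inside the memory block.
    return f"<tool_call>{step.call}</tool_call>\n<tool_response>{step.response}</tool_response>"


def compact_memory(steps: list[ToolStep], budget_chars: int) -> list[ToolStep]:
    """Drop the oldest steps until the rendered memory fits within the budget.

    Hypothetical policy for illustration only; the paper's memory
    management mechanism may work differently.
    """
    kept = list(steps)
    while kept and sum(len(render_step(s)) for s in kept) > budget_chars:
        kept.pop(0)
    return kept


def build_single_turn_messages(question: str, steps: list[ToolStep],
                               system_prompt: str, budget_chars: int = 8000) -> list[dict]:
    """Pack the question and all retained tool history into ONE user turn."""
    memory = "\n".join(render_step(s) for s in compact_memory(steps, budget_chars))
    user_content = f"Question: {question}\n\n<memory>\n{memory}\n</memory>"
    # Always exactly one system turn and one user turn, however many tool steps ran.
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]
```

After each tool call, the agent loop would append a new `ToolStep` and rebuild the prompt from scratch, so the model always sees a single "question plus context" turn. Any real memory policy, such as summarization, would take the place of the drop-oldest rule in `compact_memory`.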

Training Data Synthesis and RL Recipe

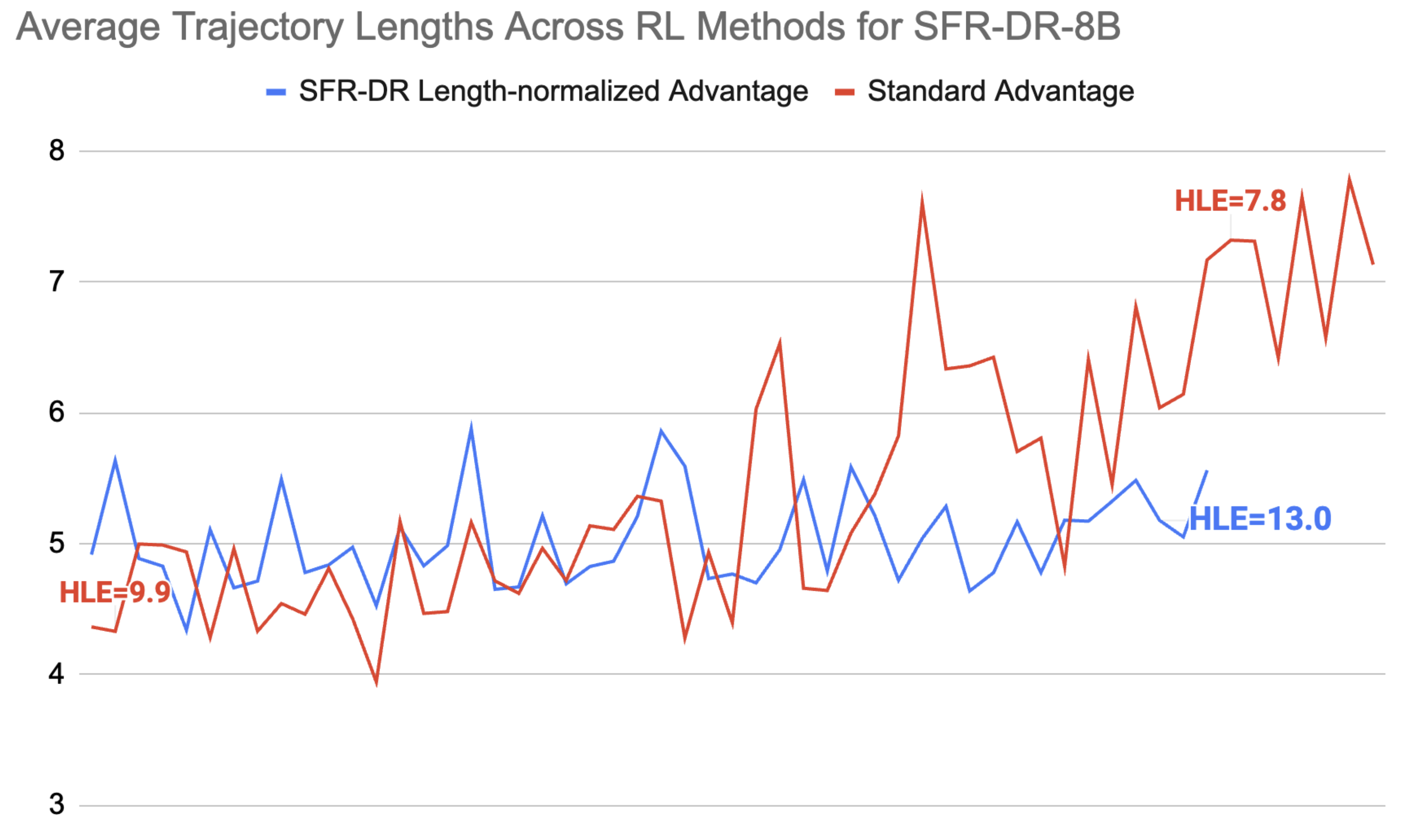

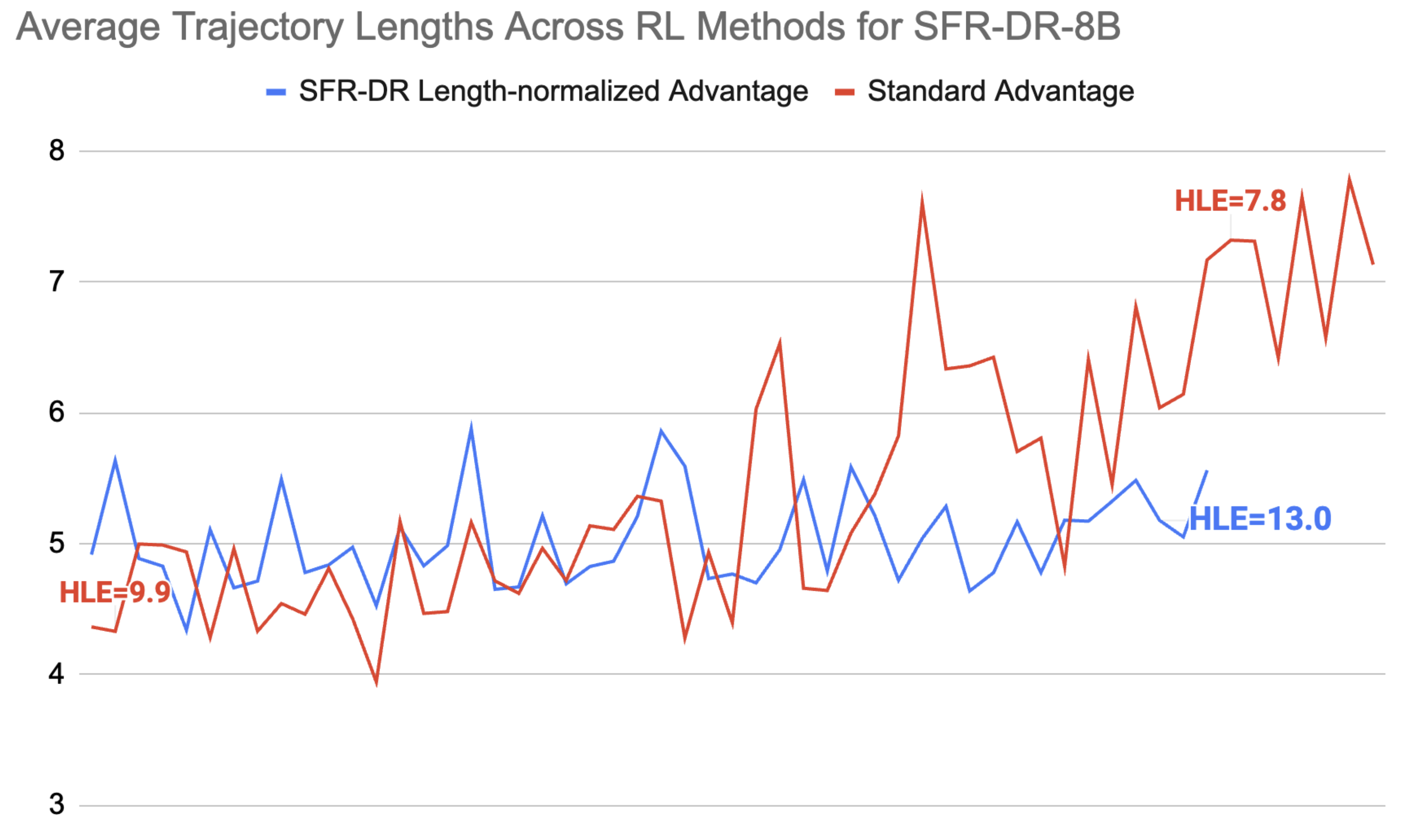

The research introduces a synthetic data pipeline for short-form QA and long-form report-writing tasks. The resulting data is challenging and requires extensive reasoning and tool use. RL training uses a length-normalized, REINFORCE-based approach, combined with trajectory filtering, partial rollouts, and reward modeling to stabilize policy optimization. Length normalization in particular mitigates unintended behaviors such as repetitive tool calls (Figure 2).

Figure 2: Average training trajectory lengths of SFR-DR-8B agents over the course of RL training with and without our proposed length normalization.
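The length-normalization idea can be sketched as a group-baselined REINFORCE update. This is a sketch under assumptions, not the paper's published formula: it assumes several rollouts are sampled per question, each rollout's advantage is its reward minus the group mean divided by the group standard deviation, and that result is further divided by the rollout's step count so long trajectories do not dominate the gradient. The `eps` constant and the zero-variance `keep_group` filter are illustrative choices, and `keep_group` is only one plausible form of trajectory filtering.

```python
from statistics import mean, pstdev


def length_normalized_advantages(rewards: list[float], num_steps: list[int],
                                 eps: float = 1e-6) -> list[float]:
    """Group-baselined REINFORCE advantages, each divided by its trajectory length.

    rewards[i]   -- scalar reward of the i-th rollout sampled for the same question
    num_steps[i] -- number of agent steps in that rollout (must be >= 1)
    Assumed form: A_i = (r_i - mean(r)) / ((std(r) + eps) * T_i).
    """
    if len(rewards) != len(num_steps):
        raise ValueError("rewards and num_steps must have the same length")
    if any(t < 1 for t in num_steps):
        raise ValueError("every trajectory needs at least one step")
    baseline = mean(rewards)
    scale = pstdev(rewards) + eps
    return [(r - baseline) / (scale * t) for r, t in zip(rewards, num_steps)]


def keep_group(rewards: list[float]) -> bool:
    """Hypothetical filtering rule: skip a group whose rollouts all received the
    same reward, since every advantage would be zero (no learning signal)."""
    return len(set(rewards)) > 1


def reinforce_loss(step_logprobs: list[list[float]], advantages: list[float]) -> float:
    """REINFORCE surrogate loss, averaged over the trajectories in a group.

    step_logprobs[i] holds log pi(a_ij | s_ij) for every step j of trajectory i.
    Each step of trajectory i is weighted by A_i; because A_i already carries the
    1 / T_i factor, this equals weighting the mean step log-prob by the
    length-independent advantage. In a real trainer these would be autograd tensors.
    """
    total = 0.0
    for logps, adv in zip(step_logprobs, advantages):
        total += -adv * sum(logps)
    return total / len(advantages)
```

With rewards `[1.0, 0.0, 1.0, 0.0]` and step counts `[2, 4, 8, 1]`, the advantages come out to roughly `[0.5, -0.25, 0.125, -1.0]`. The correct 8-step rollout is reinforced much less per step than the correct 2-step one, which is the pressure against padding trajectories with extra tool calls.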

Results

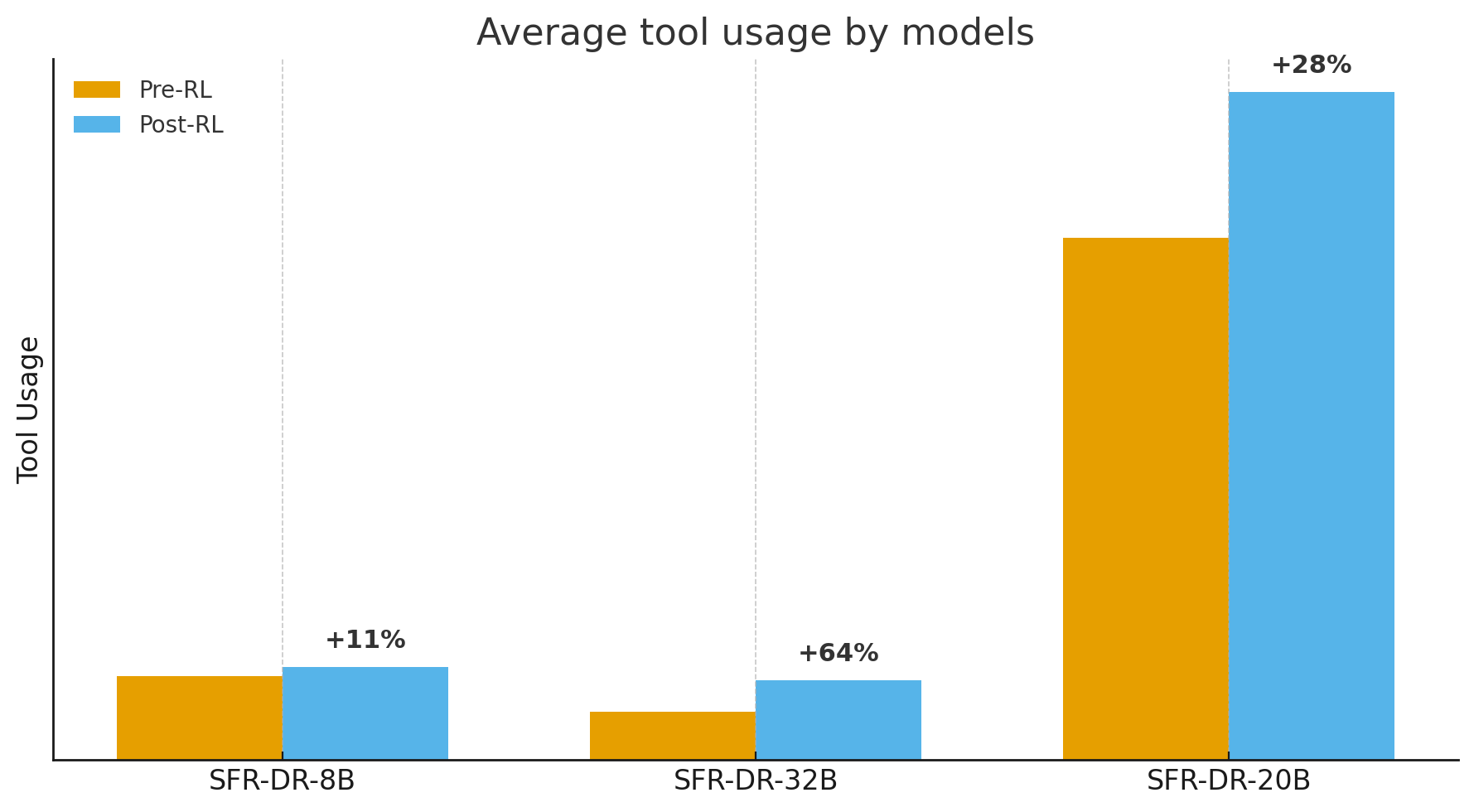

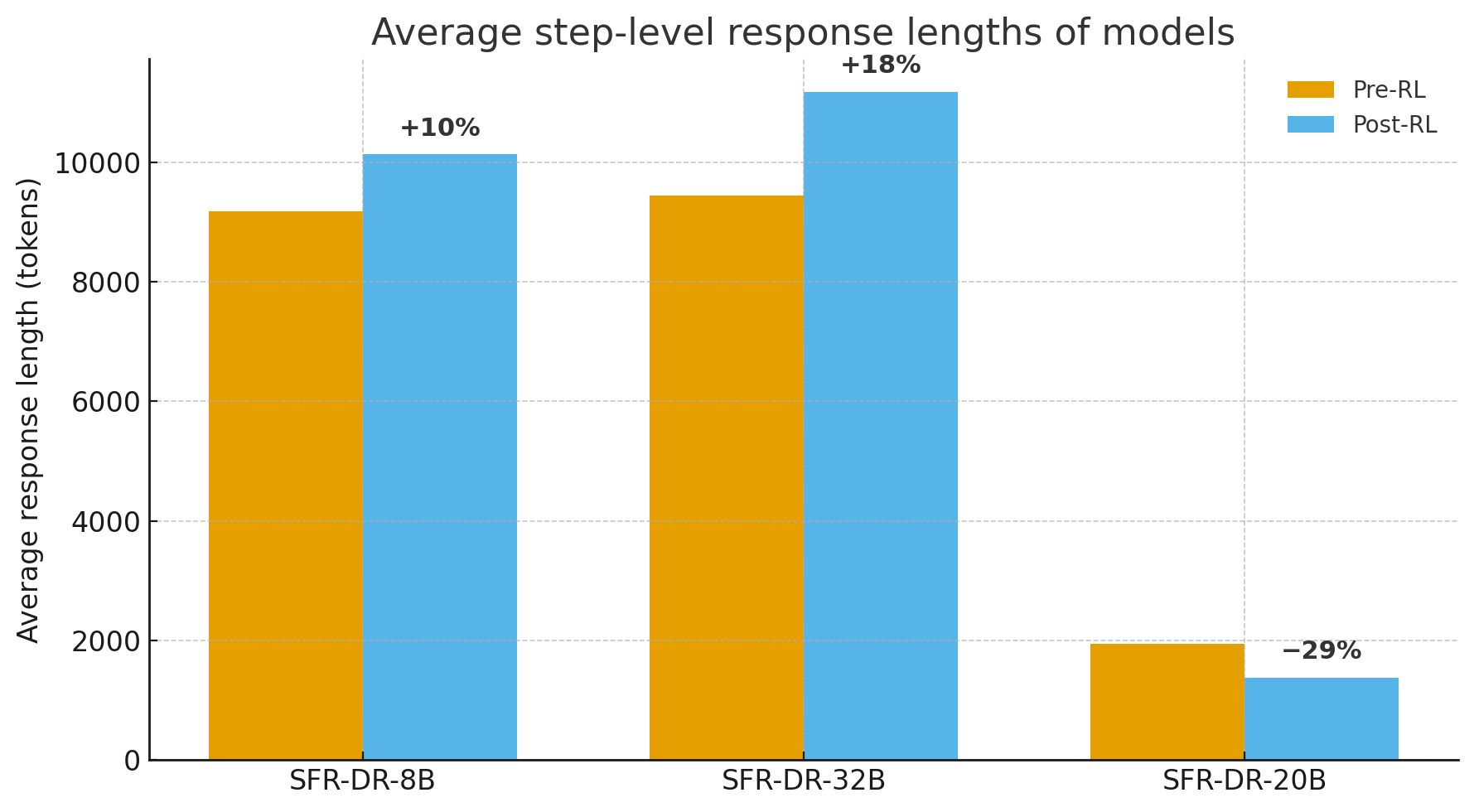

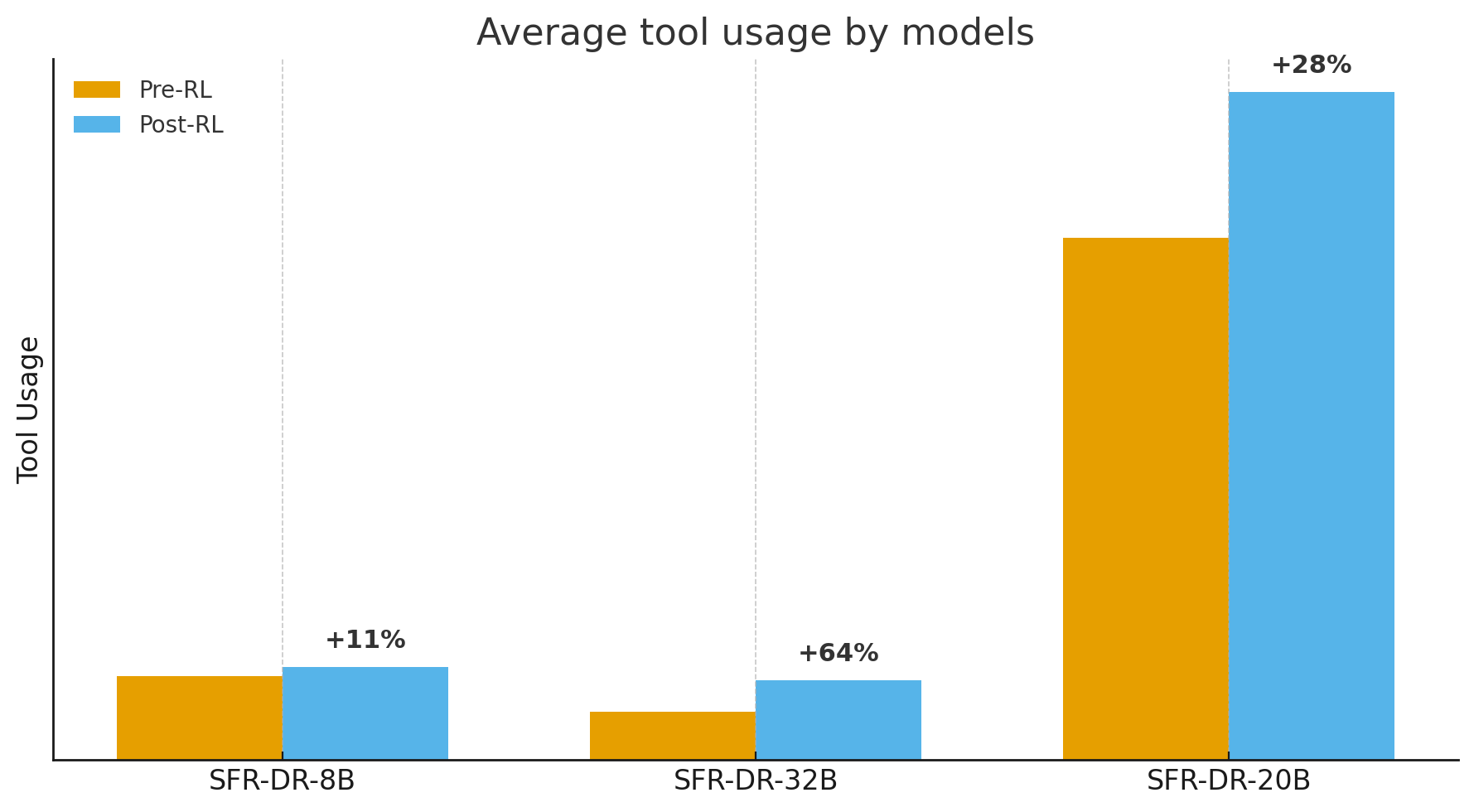

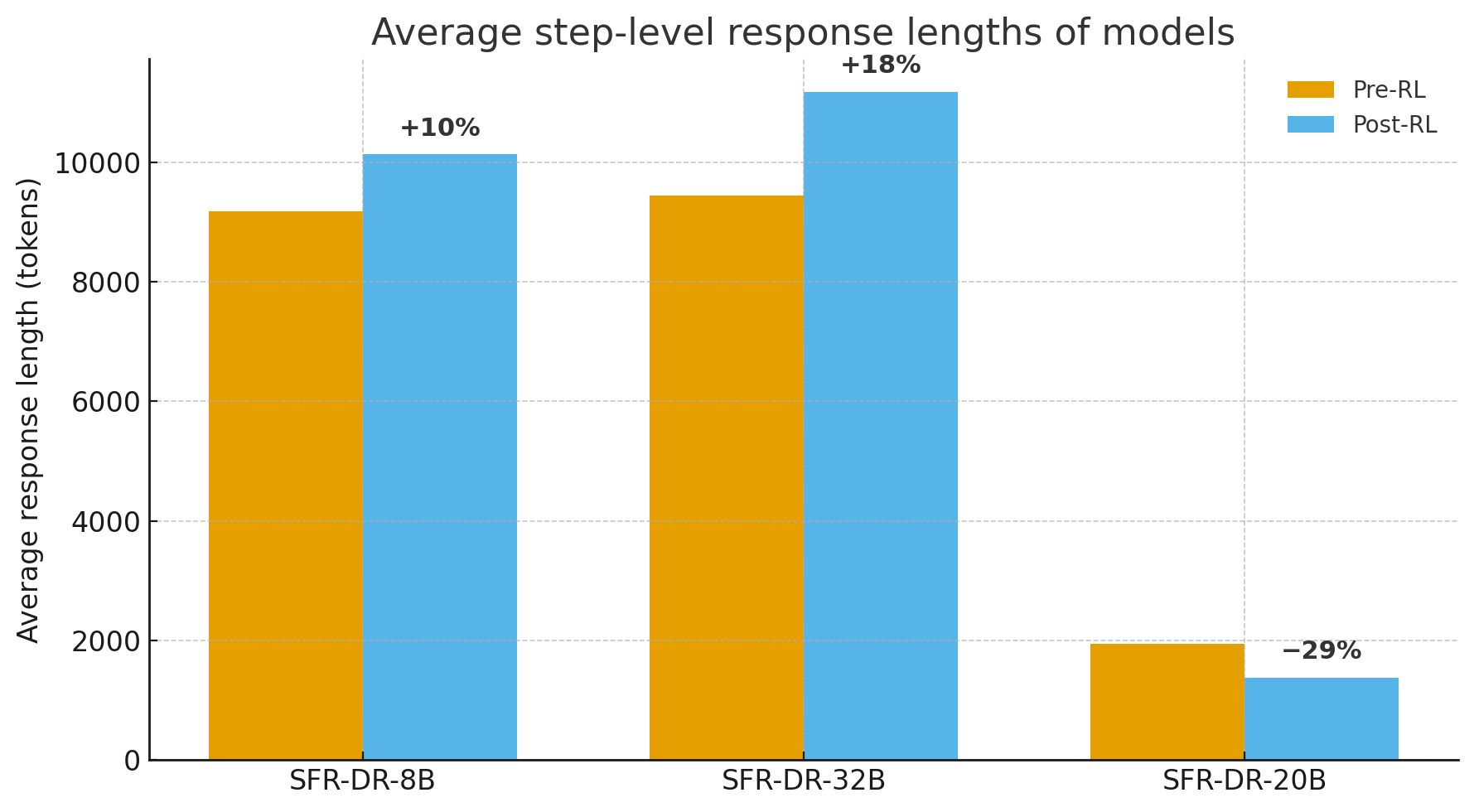

The SFR-DeepResearch framework performs strongly across several benchmarks, including Humanity's Last Exam (HLE), FRAMES, and GAIA. Notably, the SFR-DR-20B variant reaches up to 28.7% on HLE, a significant improvement over various baselines. The trained agents also use tools effectively and respond efficiently, generating fewer tokens per step (Figure 3).

Figure 3: Comparison of (a) average tool usage and (b) average step-level response lengths (tokens) on HLE across different models.
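The two statistics in Figure 3 can be computed from logged trajectories roughly as follows. This is a hedged sketch: the `Step` record is hypothetical, and counting tool calls per trajectory while pooling step lengths over all steps (rather than averaging per trajectory first) are assumptions, since the text does not say how the paper aggregates them.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One agent step from a logged trajectory (hypothetical record format)."""
    response_tokens: int  # tokens the model generated at this step
    called_tool: bool     # whether this step ended in a tool call


def avg_tool_calls(trajectories: list[list[Step]]) -> float:
    """Mean number of tool calls per trajectory (one trajectory per question)."""
    if not trajectories:
        raise ValueError("need at least one trajectory")
    return sum(sum(s.called_tool for s in t) for t in trajectories) / len(trajectories)


def avg_step_response_length(trajectories: list[list[Step]]) -> float:
    """Mean generated tokens per step, pooled over every step of every trajectory."""
    steps = [s for t in trajectories for s in t]
    if not steps:
        raise ValueError("need at least one step")
    return sum(s.response_tokens for s in steps) / len(steps)
```

For two trajectories with step lengths `[100, 300]` and `[200, 200, 200]` and three tool calls in total, these return `1.5` tool calls per question and `200.0` tokens per step.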

Analysis

The paper's ablation studies examine several methodological components:

- The agentic workflow significantly improves performance by reformulating multi-turn conversations into single-turn tasks, enabling reasoning-optimized models to operate effectively.

- Length normalization stabilizes RL training by penalizing unnecessarily long trajectories, which improves both training stability and final performance.

- A detailed analysis of tool usage and response lengths illustrates that models trained with this framework efficiently balance reasoning and tool-calling actions, leading to robust agentic behavior.

Conclusion

SFR-DeepResearch is a pragmatic RL framework for building sophisticated Autonomous Single-Agent models capable of effective deep research. By building on reasoning-optimized backbones, it achieves substantial gains on DR tasks and lays a foundation for future work on autonomously reasoning AI agents. The results support the efficacy of the proposed methods and point to further exploration of length normalization and tool-integration strategies for refining agent behavior.