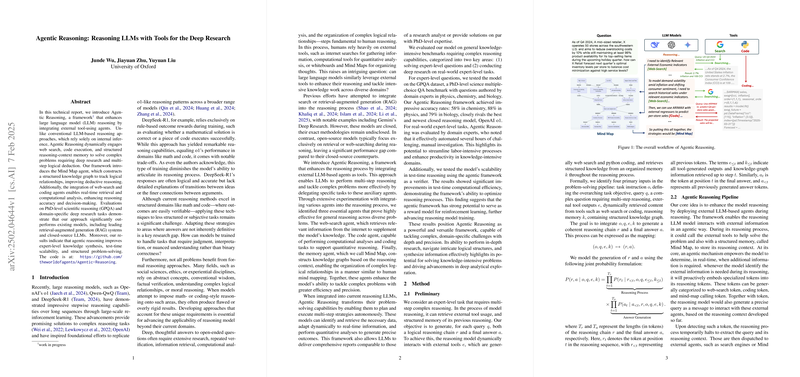

The paper introduces Agentic Reasoning, a framework designed to enhance reasoning in large language models (LLMs) by integrating external tool-using agents. This approach contrasts with conventional LLM-based reasoning methods that rely on internal inference alone. Agentic Reasoning uses web search, code execution, and a structured reasoning-context memory to address complex problems requiring deep research and multi-step logical deduction.

The framework incorporates the Mind Map agent, which constructs a structured knowledge graph to track logical relationships, thereby improving deductive reasoning. Additionally, the integration of web-search and coding agents enables real-time information retrieval and computational analysis, enhancing reasoning accuracy and decision-making.

The paper details the method used in Agentic Reasoning:

- The framework takes a task instruction $o$, a query $q$, external tool outputs $e$, and reasoning memory $k$ as inputs.

- It generates a coherent reasoning chain $r$ and a final answer $a$.

- The process can be expressed as the mapping:

$$(o, q, e, k) \to (r, a).$$

- The joint probability formulation is:

$$P(r, a \mid o, q, e, k) = \prod_{t=1}^{T_r} P\left(r_t \mid r_{<t}, o, q, e_{\le t}, k_{\le t}\right) \times \prod_{t=1}^{T_a} P\left(a_t \mid a_{<t}, r, o, q, e, k\right)$$

where:

- $T_r$ is the number of tokens of the reasoning chain

- $T_a$ is the number of tokens of the final answer

- $r_t$ denotes the token at position $t$ in the reasoning sequence

- $r_{<t}$ represents all previous tokens

- $e_{\le t}$ and $k_{\le t}$ indicate all tool-generated outputs and knowledge-graph information retrieved up to step $t$

- $a_t$ is the token at position $t$ in the final answer

- $a_{<t}$ represents all previously generated answer tokens

- The framework uses specialized tokens for web-search, coding, and Mind Map calling.
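The interplay between generation and tool calls described above can be sketched as a simple loop. This is a minimal illustration rather than the paper's implementation: the tag names (`<search>`, `<code>`, `<mindmap>`, `<result>`), the `run_reasoning` function, and the stub model and agents are all assumptions introduced here, since the summary does not give the actual special tokens or interfaces.

```python
import re
from typing import Callable, Dict

# Hypothetical tool-call markup; the paper's real special tokens are not given here.
TOOL_CALL = re.compile(r"<(search|code|mindmap)>(.*?)</\1>", re.DOTALL)


def run_reasoning(
    generate: Callable[[str], str],
    agents: Dict[str, Callable[[str], str]],
    prompt: str,
    max_steps: int = 8,
) -> str:
    """Alternate between model generation and tool calls.

    `generate` maps the current context to the next text segment.
    When a segment contains a tool call, the matching agent is run and
    its output is appended to the context before generation resumes.
    """
    context = prompt
    for _ in range(max_steps):
        segment = generate(context)
        context += segment
        match = TOOL_CALL.search(segment)
        if match is None:
            # No tool requested: treat the segment as the final answer.
            return context
        tool, query = match.group(1), match.group(2).strip()
        result = agents[tool](query)
        context += f"\n<result>{result}</result>\n"
    return context


# Stub model and agents, used only to exercise the loop.
def scripted_model(context: str) -> str:
    if "<result>" not in context:
        return "I need a fact. <search>boiling point of water</search>"
    return "Final answer: 100 C"


stub_agents = {
    "search": lambda q: f"stub summary for: {q}",
    "code": lambda q: "stub code output",
    "mindmap": lambda q: "stub graph answer",
}

transcript = run_reasoning(scripted_model, stub_agents, "Q: At what temperature does water boil?\n")
print(transcript)
```

Appending each tool result to the context mirrors the idea that later reasoning tokens are conditioned on all tool outputs retrieved so far.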

The Mind Map agent transforms raw reasoning chains into a structured knowledge graph, extracting entities and identifying semantic relationships between them, similar to GraphRAG. It clusters reasoning contexts, summarizes themes using community clustering, and supports querying with specific questions.
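To make the graph idea concrete, here is a small sketch of a triple store with lookup and grouping. It rests on several assumptions: the `MindMap` class and its methods are hypothetical names, the triples are hand-written rather than extracted by an LLM, and connected components stand in for the community clustering the paper describes.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


class MindMap:
    """Toy knowledge graph; a stand-in for the paper's Mind Map agent."""

    def __init__(self) -> None:
        self.edges: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
        self.neighbors: Dict[str, Set[str]] = defaultdict(set)

    def add(self, head: str, relation: str, tail: str) -> None:
        self.edges[head].append((relation, tail))
        # Undirected adjacency is kept separately for clustering.
        self.neighbors[head].add(tail)
        self.neighbors[tail].add(head)

    def facts_about(self, entity: str) -> List[Triple]:
        """Return every stored triple in which the entity is head or tail."""
        outgoing = [(entity, r, t) for r, t in self.edges.get(entity, [])]
        incoming = [
            (h, r, t)
            for h, pairs in self.edges.items()
            for r, t in pairs
            if t == entity and h != entity
        ]
        return outgoing + incoming

    def clusters(self) -> List[Set[str]]:
        """Connected components, a crude substitute for community clustering."""
        seen: Set[str] = set()
        groups: List[Set[str]] = []
        for start in self.neighbors:
            if start in seen:
                continue
            group: Set[str] = set()
            stack = [start]
            while stack:
                node = stack.pop()
                if node in group:
                    continue
                group.add(node)
                stack.extend(self.neighbors[node] - group)
            seen |= group
            groups.append(group)
        return groups


# Illustrative, hand-written triples.
mind_map = MindMap()
mind_map.add("surgeon", "is_father_of", "boy")
mind_map.add("boy", "needs", "operation")
mind_map.add("werewolf", "is_a", "social deduction game")

print(mind_map.facts_about("boy"))
print(mind_map.clusters())
```

Storing relations explicitly lets a later query such as "who is related to the boy?" be answered by lookup instead of rereading the whole reasoning chain.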

The web-search agent retrieves relevant documents from the web and uses an LLM to extract concise summaries relevant to the ongoing reasoning context. The coding agent delegates coding tasks to a specialized coding LLM, which writes and executes code, returning the results in natural language.

The key findings of the paper are:

- The paper suggests that two tools, web search and coding, are sufficient for most tasks.

- The paper suggests that delegating tasks to LLM-based agents improves efficiency.

- The paper suggests that tool usage can be leveraged as a test-time reasoning verifier.

Evaluations on the GPQA dataset, a Ph.D.-level science multiple-choice question answering benchmark, show that Agentic Reasoning reaches accuracies of 58% in chemistry, 88% in physics, and 79% in biology. These results closely rival the best closed reasoning model, OpenAI o1 (OpenAI et al., 21 Dec 2024). Further evaluations by domain experts indicate that Agentic Reasoning effectively automates several hours of manual investigation, highlighting its potential to streamline labor-intensive processes.

The paper also examines the scalability of the model in test-time reasoning, using the agentic framework as a verifier. The results indicate significant improvements in test-time computational efficiency, demonstrating the framework’s ability to optimize reasoning processes. This suggests the agentic framework's potential as a reward model for reinforcement learning, further advancing reasoning model training.

In a comparison with human experts on the GPQA Extended Set, Agentic Reasoning surpasses the corresponding specialists in physics and biology but, by the figures listed here, trails chemists in chemistry. The reported accuracies are:

- 75.2% in physics, compared to 57.9% by physicists

- 53.1% in chemistry, compared to 72.6% by chemists

- 72.8% in biology, compared to 68.9% by biologists

For deep research in open-ended question answering tasks, Agentic Reasoning outperforms Gemini Deep Research across finance, medicine, and law, demonstrating the effectiveness of structured reasoning and tool-augmented frameworks. The paper also finds that increased tool usage improves performance on the same question, suggesting that the frequency of tool calls can be used as a heuristic to select better responses during inference time.
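The selection heuristic mentioned above can be sketched as a best-of-N rule that picks the sampled response with the most tool calls. The `Candidate` type, the `select_by_tool_usage` function, and the sample data are hypothetical; the summary does not specify how the paper counts calls or breaks ties.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    answer: str
    tool_calls: int  # number of tool invocations made while producing the answer


def select_by_tool_usage(candidates: List[Candidate]) -> Candidate:
    """Pick the candidate with the most tool calls.

    Ties go to the earliest candidate, which is an arbitrary choice
    made for this sketch.
    """
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=lambda c: c.tool_calls)


# Made-up samples for the same question.
samples = [
    Candidate(answer="A", tool_calls=1),
    Candidate(answer="B", tool_calls=4),
    Candidate(answer="C", tool_calls=2),
]
best = select_by_tool_usage(samples)
print(best.answer)
```

A rule like this needs no extra model, which is what makes call frequency attractive as a cheap inference-time signal; whether it holds beyond the reported tasks is not established in this summary.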

The Mind Map component is shown to be effective in clarifying complex logical relationships. For example, Agentic Reasoning correctly answers the riddle about the surgeon who is the boy's father, while other models like DeepSeek-R1 and those from the GPT and Gemini series fail. Additionally, in a social deduction game called Werewolf, the model achieved a 72% win rate against experienced players, demonstrating Mind Mapping as a powerful strategy enhancer in dynamic reasoning environments.