- The paper demonstrates that current graph-language benchmarks inadequately assess multimodal reasoning, as unimodal approaches often match GLM performance.

- The paper introduces CLeGR, a synthetic benchmark with over 1,000 graphs and 54,000 questions designed to enforce true joint reasoning over graph structure and textual semantics.

- The empirical analysis, which includes a high Pearson correlation (r = 0.9643) between linear-probe and GLM accuracy and a CKA representation analysis, shows that complex graph encoders offer limited advantages over text-only baselines.

Rethinking Evaluation Paradigms for Graph-LLMs: Insights from CLeGR

Introduction

The integration of graph-structured data with natural language processing has led to the emergence of Graph-LLMs (GLMs). These models aim to combine the structural reasoning capabilities of Graph Neural Networks (GNNs) with the semantic understanding of large language models (LLMs). Despite rapid progress in model architectures, GLMs have largely been evaluated on repurposed node-classification datasets, which may not adequately assess multimodal reasoning. This paper systematically analyzes the limitations of current benchmarks and introduces the CLeGR (Compositional Language-Graph Reasoning) benchmark to rigorously evaluate the joint reasoning capabilities of GLMs.

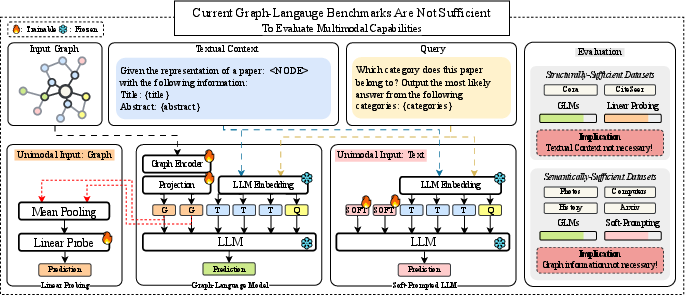

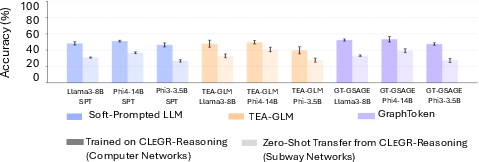

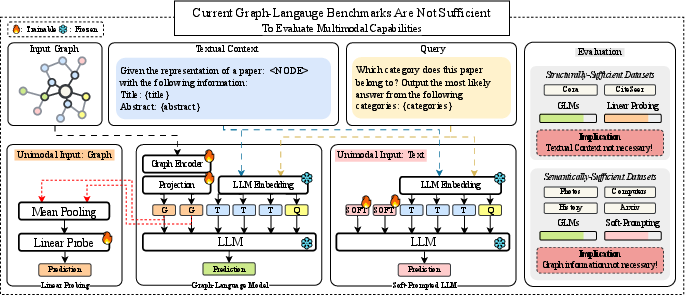

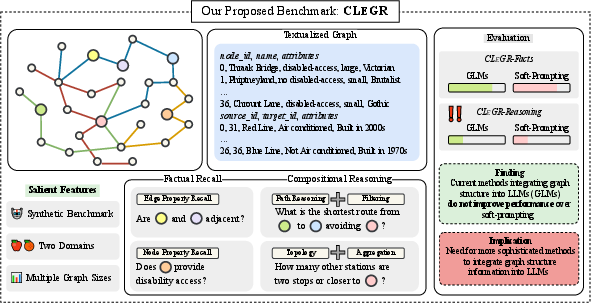

Figure 1: Current graph-language benchmarks are insufficient for evaluating multimodal reasoning; unimodal approaches suffice for strong performance.

Limitations of Existing Benchmarks

The paper demonstrates that current graph-language benchmarks are fundamentally insufficient for evaluating multimodal reasoning. The authors run extensive experiments on six widely used Text-Attributed Graph (TAG) datasets (Cora, CiteSeer, Computers, Photo, History, Arxiv) and show that unimodal information alone can achieve strong performance. On structurally-sufficient datasets, a linear probe trained on the graph tokens alone matches GLM performance. On semantically-sufficient datasets, soft-prompted LLMs that see only the text attributes achieve comparable results.
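A linear probe is simply a linear classifier trained on frozen representations. If it matches the full model, the representations already contain the task-relevant information. The sketch below illustrates this under some assumptions. It fits a multinomial logistic-regression probe with plain gradient descent. Synthetic 2-D clusters stand in for precomputed graph-token embeddings. The function names, learning rate, and epoch count are hypothetical. The paper's actual probe setup (optimizer, regularization, data splits) is not described here.

```python
import numpy as np

def train_linear_probe(features, labels, num_classes, lr=0.1, epochs=500):
    """Fit a softmax (multinomial logistic-regression) probe on frozen features.

    features: (n, d) frozen embeddings (assumed precomputed; the encoder is not trained).
    labels:   (n,) integer class labels.
    """
    n, d = features.shape
    weights = np.zeros((d, num_classes))
    bias = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = features @ weights + bias
        logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs = probs / probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        weights -= lr * (features.T @ grad)
        bias -= lr * grad.sum(axis=0)
    return weights, bias

def probe_accuracy(weights, bias, features, labels):
    """Fraction of examples whose arg-max logit equals the true label."""
    preds = np.argmax(features @ weights + bias, axis=1)
    return float((preds == labels).mean())

# Hypothetical stand-in for graph-token embeddings: three well-separated clusters.
rng = np.random.default_rng(0)
centers = np.array([[3.0, 0.0], [-3.0, 0.0], [0.0, 3.0]])
labels = rng.integers(0, 3, size=300)
features = centers[labels] + rng.normal(scale=0.5, size=(300, 2))
weights, bias = train_linear_probe(features, labels, num_classes=3)
acc = probe_accuracy(weights, bias, features, labels)
```

For brevity, the example scores the probe on its training data. A real comparison against a GLM would use the same held-out split as the GLM.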

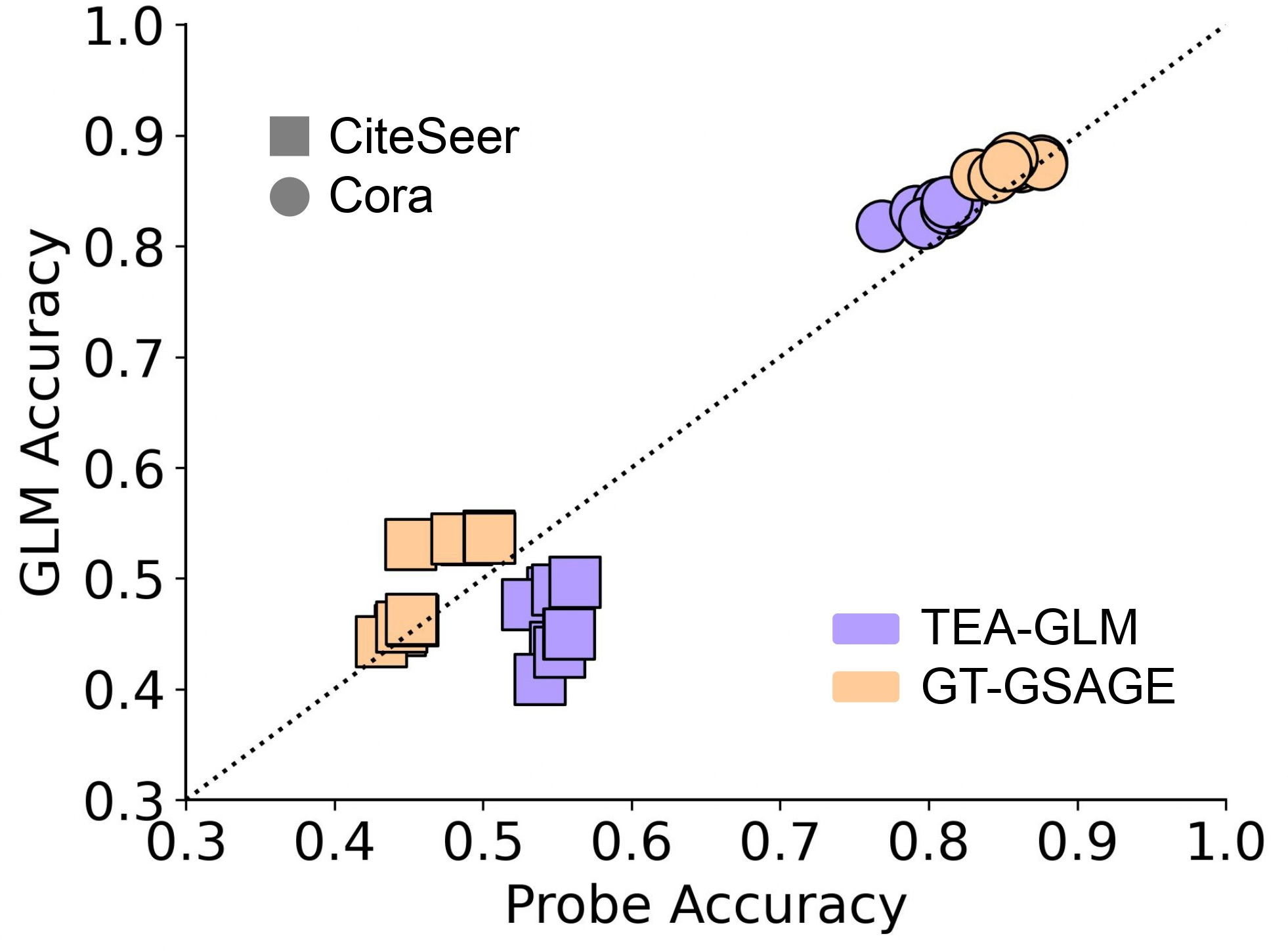

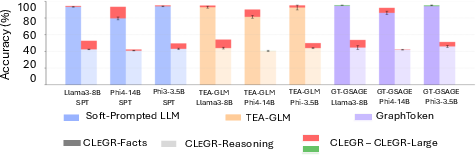

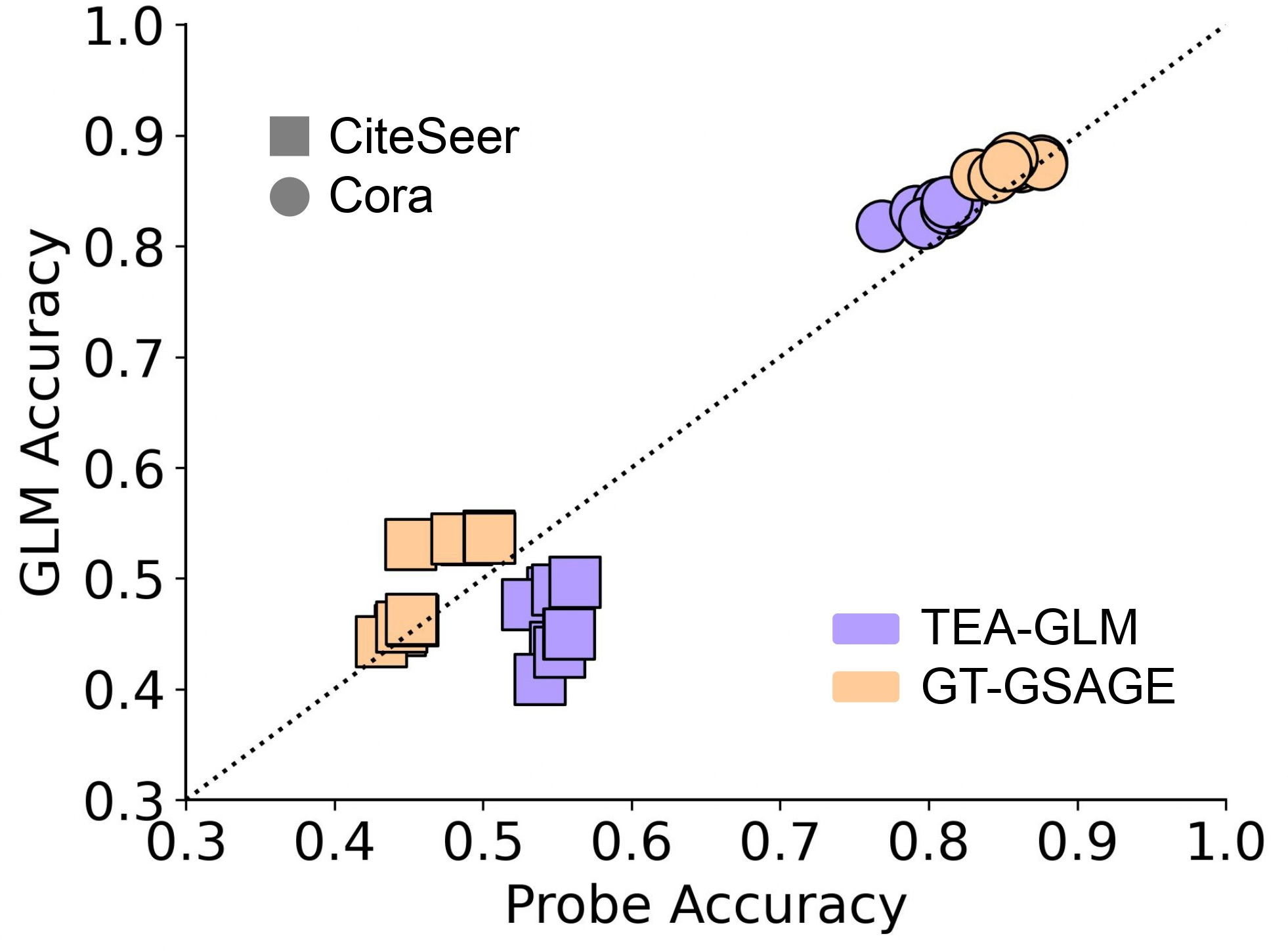

Figure 2: Linear probe accuracy closely matches full GLM performance on structurally-sufficient datasets, indicating the graph encoder captures all task-relevant information.

This finding is supported by a high Pearson correlation (r=0.9643) between linear probe and GLM accuracy, suggesting that the LLM component in GLMs often acts as an expensive decoder head rather than contributing to multimodal integration. The analysis categorizes datasets into semantically-sufficient (where text alone suffices) and structurally-sufficient (where graph structure dominates), revealing a lack of benchmarks that require true integration of both modalities.
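The sketch below makes the two quantities in this argument concrete. The first is the Pearson correlation between per-dataset linear-probe and GLM accuracies. The second is a way to label a dataset by which unimodal baseline keeps up with the GLM. The `sufficiency_label` rule and its `tol` threshold are hypothetical illustrations, not the paper's stated criterion.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def sufficiency_label(glm_acc, probe_acc, text_only_acc, tol=0.02):
    """Hypothetical rule: a unimodal baseline 'suffices' if within tol of the GLM."""
    labels = []
    if glm_acc - probe_acc <= tol:
        labels.append("structurally-sufficient")
    if glm_acc - text_only_acc <= tol:
        labels.append("semantically-sufficient")
    # A dataset where neither baseline keeps up is the kind that requires integration.
    return labels or ["neither"]
```

To compute `pearson_r`, pass one list of linear-probe accuracies and one list of GLM accuracies, one entry per dataset in the same order. A value near 1 means the probe tracks the GLM closely across datasets.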

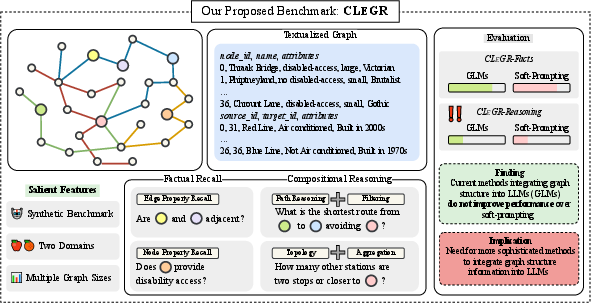

The CLeGR Benchmark: Design and Motivation

To address the evaluation gap, the paper introduces CLeGR, a synthetic benchmark explicitly constructed to require joint reasoning over graph structure and textual semantics. CLeGR comprises over 1,000 diverse graphs and 54,000 questions, spanning factual recall (CLeGR-Facts) and compositional reasoning (CLeGR-Reasoning) tasks. The benchmark is designed to preclude unimodal solutions by enforcing structural dependency, semantic grounding, and compositional complexity.

Figure 3: CLeGR evaluation framework and benchmark structure, covering factual and compositional reasoning tasks across multiple reasoning types and scopes.

CLeGR-Reasoning systematically covers filtering, aggregation, path reasoning, and topology tasks, each requiring multi-step inference that blends property lookup with logical graph traversal. The synthetic nature of the graphs eliminates pre-training confounds, ensuring that models cannot rely on memorized knowledge.
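To show how the four task families combine property lookup with traversal, here is a minimal sketch on a hypothetical toy "subway" graph. The station names, attributes, and helper functions are invented for illustration. This is not CLeGR's generator or question format. The final example is compositional: it asks for the shortest route that uses only accessible stations. Answering it requires both the node attributes (semantics) and the edges (structure).

```python
from collections import deque

# Hypothetical toy graph: station -> attributes, plus undirected edges.
STATIONS = {
    "A": {"line": "red", "accessible": True, "daily_riders": 1200},
    "B": {"line": "red", "accessible": True, "daily_riders": 800},
    "C": {"line": "blue", "accessible": True, "daily_riders": 1500},
    "D": {"line": "blue", "accessible": True, "daily_riders": 400},
    "E": {"line": "green", "accessible": False, "daily_riders": 300},
    "F": {"line": "green", "accessible": False, "daily_riders": 100},
}
EDGES = [("A", "B"), ("B", "C"), ("C", "D"), ("A", "E"), ("E", "D")]

def adjacency(nodes, edges):
    adj = {n: set() for n in nodes}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj

ADJ = adjacency(STATIONS, EDGES)

def filtering(attr, value):
    """Filtering: which stations have attr == value?"""
    return {n for n, props in STATIONS.items() if props[attr] == value}

def aggregation(attr, start):
    """Aggregation: sum a numeric attribute over the neighbours of start."""
    return sum(STATIONS[n][attr] for n in ADJ[start])

def shortest_path(src, dst, allowed=lambda n: True):
    """Path reasoning: BFS shortest path through nodes satisfying `allowed`."""
    if not (allowed(src) and allowed(dst)):
        return None
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in sorted(ADJ[node]):  # sorted for deterministic tie-breaking
            if nxt not in prev and allowed(nxt):
                prev[nxt] = node
                queue.append(nxt)
    return None

def is_isolated(node):
    """Topology: does the station have no connections?"""
    return len(ADJ[node]) == 0

# Compositional question: shortest route from A to D via accessible stations only.
accessible_route = shortest_path("A", "D", allowed=lambda n: STATIONS[n]["accessible"])
```

The unrestricted shortest path from A to D runs through E. The accessibility constraint forces the longer route through B and C. A model that reads only the text attributes or only the topology cannot answer this question reliably.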

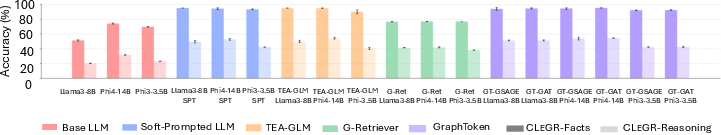

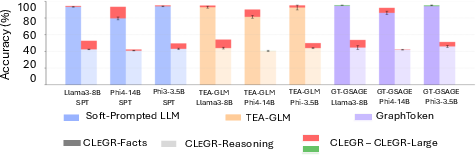

Empirical Evaluation of GLMs on CLeGR

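Evaluating representative GLM architectures (TEA-GLM, GraphToken, G-Retriever) against soft-prompted LLM baselines on CLeGR shows that the graph encoders offer limited advantages over text-only soft prompting. It also shows that GLMs gain no transfer benefit when generalizing zero-shot to a new domain.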

Figure 5: Zero-shot generalization from subway to computer network domains shows no transfer benefit for GLMs over soft-prompted approaches.

These results challenge the architectural necessity of incorporating graph structure into LLMs for multimodal reasoning, as current GLMs revert to unimodal textual processing even when provided with explicit structural information.
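The comparison comes down to what prefix each system feeds to the LLM. The sketch below assumes a GraphToken-style design built on one GraphSAGE-style mean-aggregation layer, since the paper lists GSAGE as one GraphToken encoder. It contrasts a graph-derived prefix with a learned soft prompt that ignores the graph. All dimensions and weights are hypothetical random stand-ins for trained parameters. The LLM itself is not modeled. TEA-GLM and G-Retriever construct their inputs differently.

```python
import numpy as np

rng = np.random.default_rng(0)
D_NODE, D_LLM = 16, 32  # hypothetical node-feature and LLM embedding sizes

def sage_mean_layer(x, adj, w_self, w_neigh):
    """One GraphSAGE-style layer: self term plus mean of neighbours, then ReLU."""
    deg = adj.sum(axis=1, keepdims=True)
    neigh_mean = (adj @ x) / np.maximum(deg, 1)  # guard against isolated nodes
    return np.maximum(0.0, x @ w_self + neigh_mean @ w_neigh)

def graph_tokens(x, adj, params):
    """Encode the graph, mean-pool nodes, and project to one LLM-space token."""
    h = sage_mean_layer(x, adj, params["w_self"], params["w_neigh"])
    return (h.mean(axis=0) @ params["proj"])[None, :]  # shape (1, D_LLM)

def build_inputs(text_embeds, prefix):
    """Prepend prefix vectors (graph tokens or a soft prompt) to text embeddings."""
    return np.concatenate([prefix, text_embeds], axis=0)

params = {
    "w_self": rng.normal(size=(D_NODE, D_NODE)) * 0.1,
    "w_neigh": rng.normal(size=(D_NODE, D_NODE)) * 0.1,
    "proj": rng.normal(size=(D_NODE, D_LLM)) * 0.1,
}
x = rng.normal(size=(5, D_NODE))  # 5 nodes with text-derived features
adj = np.zeros((5, 5))
for u, v in [(0, 1), (1, 2), (2, 3), (3, 4)]:
    adj[u, v] = adj[v, u] = 1.0
text_embeds = rng.normal(size=(7, D_LLM))       # 7 text tokens
soft_prompt = rng.normal(size=(4, D_LLM)) * 0.01  # learned, graph-independent

glm_in = build_inputs(text_embeds, graph_tokens(x, adj, params))  # (8, D_LLM)
baseline_in = build_inputs(text_embeds, soft_prompt)              # (11, D_LLM)
```

The frozen LLM consumes both prefixes through the same interface, so nothing here forces it to use the graph tokens. This offers one way to read the finding that GLMs revert to textual processing.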

Representation Analysis via CKA

To investigate the cause of this performance parity, the paper uses Centered Kernel Alignment (CKA) to measure representational similarity between GLMs and soft-prompted LLMs. On semantically-sufficient datasets and CLeGR tasks, CKA stays high across all layers, which indicates near-identical internal representations. On structurally-sufficient datasets, the representations diverge in the middle layers. This is consistent with the failure of soft-prompted baselines on those datasets.

Figure 6: CKA analysis shows strong alignment of representations when performance is similar; divergence occurs only in structurally-sufficient datasets.

This suggests that GLMs learn distinct representations only when the dataset is structurally-sufficient and the LLM's semantic reasoning is underutilized.
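For readers unfamiliar with CKA, here is a minimal sketch of linear CKA (Kornblith et al., 2019) applied layer by layer. It assumes each model's activations have been extracted as (examples × features) matrices on the same inputs. The paper may use a different kernel or estimator, such as a minibatch or unbiased HSIC variant. The function names are hypothetical.

```python
import numpy as np

def linear_cka(x, y):
    """Linear CKA between activation matrices x (n, d1) and y (n, d2).

    Rows must correspond to the same n inputs. Returns a value in [0, 1];
    it is invariant to orthogonal transforms and isotropic scaling.
    """
    x = x - x.mean(axis=0, keepdims=True)  # center each feature
    y = y - y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(y.T @ x, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y))

def layerwise_cka(acts_a, acts_b):
    """CKA per layer for two models' activation lists on the same inputs."""
    return [linear_cka(a, b) for a, b in zip(acts_a, acts_b)]
```

Because of its invariances, linear CKA stays near 1 when two models encode the same information in rotated or rescaled coordinates. That property is what makes high CKA evidence of near-identical representations.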

Implementation and Experimental Considerations

The paper provides detailed implementation protocols for GLMs and baselines, including:

- Model architectures: TEA-GLM, GraphToken (GSAGE/GAT), G-Retriever, and soft-prompted LLMs (Llama3-8B, Phi3-3.5B, Phi4-14B).

- Training and inference setup: Consistent hardware (NVIDIA A100 80GB), batch sizes, and learning rates, with greedy decoding at inference.

- Evaluation metrics: Overall accuracy, F1-score, MCC, MAE, RMSE, and set-based precision/recall for different answer types.

- Prompt engineering: Structured prompts for both node classification and graph QA tasks, with explicit output format suffixes.
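The answer-type-specific metrics listed above can be sketched as follows. The definitions are the standard ones. Returning 0.0 for MCC when its denominator is zero, and treating empty answer sets as described in the comments, are assumptions, since the paper's exact edge-case conventions are not given here.

```python
import math

def mae(preds, golds):
    """Mean absolute error for numeric answers."""
    return sum(abs(p - g) for p, g in zip(preds, golds)) / len(golds)

def rmse(preds, golds):
    """Root-mean-square error for numeric answers."""
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(preds, golds)) / len(golds))

def binary_mcc(preds, golds):
    """Matthews correlation coefficient for yes/no answers.

    Assumption: returns 0.0 when the denominator is zero (e.g. one class absent).
    """
    pairs = list(zip(preds, golds))
    tp = sum(1 for p, g in pairs if p and g)
    tn = sum(1 for p, g in pairs if not p and not g)
    fp = sum(1 for p, g in pairs if p and not g)
    fn = sum(1 for p, g in pairs if not p and g)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

def set_precision_recall(predicted, gold):
    """Set-based precision/recall for list-valued answers.

    Assumption: an empty prediction scores 1.0 precision only if gold is also
    empty, and an empty gold set scores 1.0 recall only if nothing is predicted.
    """
    predicted, gold = set(predicted), set(gold)
    hits = len(predicted & gold)
    precision = hits / len(predicted) if predicted else float(not gold)
    recall = hits / len(gold) if gold else float(not predicted)
    return precision, recall
```

For example, `set_precision_recall({"A", "B"}, {"B", "C"})` gives one correct item out of two predicted and one out of two expected, so precision and recall are both 0.5.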

The CLeGR dataset is publicly available, enabling reproducibility and further research.

Implications and Future Directions

The findings have significant implications for the development and evaluation of GLMs:

- Benchmark design: There is a critical need for benchmarks that require genuine multimodal integration, as current datasets are insufficient.

- Model architecture: The results question the utility of complex graph encoders in GLMs, suggesting that architectural innovation should focus on mechanisms that enforce cross-modal interaction.

- Generalization claims: The lack of zero-shot transfer benefits undermines claims of superior generalization for GLMs, highlighting the need for more robust evaluation protocols.

- Representation learning: Future work should explore methods that explicitly align and fuse graph and language representations, potentially leveraging cross-modal attention or joint training objectives.
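Cross-modal attention is one mechanism the paper suggests for future work. It is not a method the paper evaluates. The sketch below shows a generic single-head version in which text-token states (queries) attend over graph-node states (keys and values), and the result is added back to the text stream through a residual connection. The dimensions, random weights, and residual design are hypothetical choices made for illustration.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_attention(text_h, graph_h, w_q, w_k, w_v):
    """Single-head cross-attention from text tokens to graph nodes.

    text_h: (t, d_text), graph_h: (m, d_graph).
    w_v maps graph states back to d_text so the residual sum is well-defined.
    """
    q = text_h @ w_q
    k = graph_h @ w_k
    v = graph_h @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # scaled dot-product, shape (t, m)
    weights = softmax(scores, axis=-1)       # each text token's weights sum to 1
    return text_h + weights @ v, weights     # residual fusion into the text stream

rng = np.random.default_rng(0)
d_text, d_graph, d_attn = 32, 16, 8
text_h = rng.normal(size=(7, d_text))
graph_h = rng.normal(size=(5, d_graph))
fused, attn = cross_modal_attention(
    text_h, graph_h,
    w_q=rng.normal(size=(d_text, d_attn)),
    w_k=rng.normal(size=(d_graph, d_attn)),
    w_v=rng.normal(size=(d_graph, d_text)),
)
```

Prepending graph tokens lets the LLM ignore them. Here, by contrast, every text position explicitly mixes in graph information, which is the kind of enforced interaction the recommendations call for.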

The CLeGR benchmark provides a foundation for advancing research in explicit multimodal reasoning involving graph structure and language.

Conclusion

This paper presents a rigorous analysis of the limitations of current graph-language benchmarks and introduces the CLeGR benchmark to evaluate multimodal reasoning. The empirical results demonstrate that existing GLMs do not effectively integrate graph and language modalities, as unimodal baselines suffice for strong performance. The paper calls for a paradigm shift in both benchmark design and model architecture, emphasizing the need for explicit multimodal integration to realize the full potential of graph-LLMs.