Analysis of "GraphGPT: Graph Instruction Tuning for LLMs"

Overview

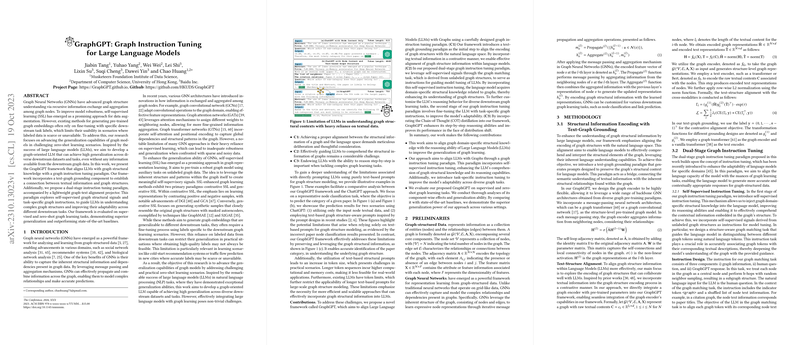

The paper introduces GraphGPT, a framework that equips large language models (LLMs) with graph structural knowledge through a graph instruction tuning paradigm. It targets a weakness of current graph neural networks (GNNs): limited generalization in zero-shot settings, where labeled data is scarce or unavailable. By aligning the language capabilities of LLMs with graph structure, GraphGPT is a step toward integrating LLMs with graph data.

Methodology

GraphGPT's primary innovation lies in its dual-stage instruction tuning paradigm, which includes:

- Self-Supervised Instruction Tuning: This stage involves aligning graph tokens with textual descriptions using a contrastive method to incorporate graph structural information into LLMs. By implementing a text-graph grounding technique, the framework preserves the structural context, allowing LLMs to understand graph representations effectively.

- Task-Specific Instruction Tuning: This stage adapts the model with task-specific instructions for different graph learning tasks, such as node classification and link prediction. A lightweight graph-text alignment projector carries the adaptation, so the LLM does not need extensive retraining.
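
The contrastive alignment in the first stage can be illustrated with a small sketch. This summary does not give the paper's exact loss, so the code below assumes a symmetric InfoNCE-style objective over paired graph and text embeddings; the function names, the temperature value, and the toy vectors are all hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy_on_diagonal(logits):
    """Mean of -log softmax(row_i)[i]: each row's positive pair sits on the diagonal."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)  # subtract the max for numerical stability
        log_norm = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_norm - row[i]
    return total / len(logits)


def contrastive_alignment_loss(graph_embs, text_embs, temperature=0.1):
    """Symmetric InfoNCE-style loss (an assumption); graph_embs[i] pairs with text_embs[i]."""
    g = [normalize(v) for v in graph_embs]
    t = [normalize(v) for v in text_embs]
    # logits[i][j] = temperature-scaled cosine similarity between graph i and text j
    logits = [[sum(a * b for a, b in zip(gi, tj)) / temperature for tj in t] for gi in g]
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_on_diagonal(logits) + cross_entropy_on_diagonal(transposed))


# Toy data: three nodes whose graph and text embeddings roughly agree.
graph_embs = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]
text_embs = [[0.9, 0.0, 0.1], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]

aligned = contrastive_alignment_loss(graph_embs, text_embs)
# Pairing each graph embedding with the wrong text should give a higher loss.
mismatched = contrastive_alignment_loss(graph_embs, text_embs[1:] + text_embs[:1])
print(f"aligned={aligned:.3f} mismatched={mismatched:.3f}")
```

Minimizing a loss of this kind pulls each node's graph embedding toward its own textual description and away from the descriptions of other nodes, which is the sense in which structural context is tied to text.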

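To make the role of the lightweight projector concrete, here is a minimal sketch that assumes it is a single linear layer mapping graph-encoder outputs into the LLM's token-embedding space. The class name, dimensions, initialization, and splice position are illustrative assumptions, not details taken from the paper.

```python
import random


class LinearProjector:
    """Hypothetical trainable map from graph-encoder space to LLM embedding space."""

    def __init__(self, graph_dim, llm_dim, seed=0):
        rng = random.Random(seed)
        # weight[o][i] connects input feature i to output feature o
        self.weight = [[rng.gauss(0.0, 0.02) for _ in range(graph_dim)] for _ in range(llm_dim)]
        self.bias = [0.0] * llm_dim

    def __call__(self, node_embedding):
        return [
            sum(w * x for w, x in zip(row, node_embedding)) + b
            for row, b in zip(self.weight, self.bias)
        ]

    def num_parameters(self):
        return len(self.weight) * len(self.weight[0]) + len(self.bias)


def splice_graph_tokens(text_token_embs, graph_token_embs, position):
    """Insert projected graph tokens into the text embedding sequence at `position`."""
    return text_token_embs[:position] + graph_token_embs + text_token_embs[position:]


GRAPH_DIM, LLM_DIM = 4, 8  # toy sizes; real models use far larger dimensions
projector = LinearProjector(GRAPH_DIM, LLM_DIM)

node_embs = [[0.5, -0.2, 0.1, 0.9], [0.0, 0.3, -0.7, 0.4]]  # stand-in graph encoder output
graph_tokens = [projector(e) for e in node_embs]

text_embs = [[0.0] * LLM_DIM for _ in range(5)]  # stand-in for embedded prompt tokens
sequence = splice_graph_tokens(text_embs, graph_tokens, position=2)
print(len(sequence), "tokens,", projector.num_parameters(), "trainable projector parameters")
```

Under this assumption the only trainable weights are the projector's, which is why such a component stays cheap relative to retraining the language model.
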
Moreover, the framework incorporates Chain-of-Thought (CoT) distillation to enhance step-by-step reasoning abilities, particularly benefiting complex graph learning tasks.
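
One way to picture CoT distillation is as a data-construction step: a stronger teacher model writes a step-by-step rationale, and that rationale plus the final answer becomes the training target for the student. The sketch below assumes this setup; the prompt wording, the `Answer:` convention, and the example content are hypothetical and not the paper's actual templates.

```python
from dataclasses import dataclass


@dataclass
class DistilledExample:
    instruction: str
    target: str


def build_cot_example(question, teacher_rationale, answer):
    """Pack a teacher's step-by-step rationale and final answer into one training target."""
    instruction = f"{question}\nPlease think step by step before giving the final answer."
    target = f"{teacher_rationale.strip()}\nAnswer: {answer}"
    return DistilledExample(instruction=instruction, target=target)


def extract_answer(generated_text):
    """Recover the final answer from text that follows the 'Answer:' convention."""
    marker = "Answer:"
    if marker not in generated_text:
        return None
    return generated_text.rsplit(marker, 1)[1].strip()


# Illustrative record; the rationale text is made up for this sketch.
example = build_cot_example(
    question="Which topic does the paper at the center of this citation graph cover?",
    teacher_rationale=(
        "The abstract discusses message passing.\n"
        "Most cited neighbors are graph learning papers."
    ),
    answer="graph learning",
)
print(example.target)
```

Training on targets that contain the reasoning, and not only the label, is what lets a smaller model imitate the step-by-step behavior.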

Performance and Results

The paper reports that GraphGPT outperforms state-of-the-art models in both supervised and zero-shot settings. On standard node classification tasks it achieves higher accuracy than existing methods, with reported improvements of two to ten times in zero-shot scenarios. Empirical evaluations across several graph learning applications indicate that the design generalizes well across datasets and tasks.

CoT distillation and self-supervised signals strengthen the model's reasoning and its adaptability to different graph structures, which supports GraphGPT's robustness on complex and unseen datasets.

Implications and Future Directions

GraphGPT is a notable advance in combining graph learning with natural language processing. Practically, it can support better insights in fields such as social network analysis and bioinformatics, where structured and unstructured data often overlap. Theoretically, the framework opens new avenues for studying the intersection of language and structure in machine learning models.

Future research may include more parameter-efficient use of the LLM and pruning techniques that improve computational efficiency without degrading performance. Other application domains, such as dynamic graphs or real-time data processing, could also build on the foundation that GraphGPT provides.

Overall, this paper marks a pivotal contribution to the development of more adaptable and comprehensive artificial intelligence models capable of operating in the multifaceted landscapes of real-world data systems.