Understanding LLMs for Graphs

Introduction

Graphs are everywhere. Social networks, molecular structures, and recommendation systems can all be represented effectively as graphs. With their nodes and connecting edges, graphs give us a flexible way to capture relationships and interdependencies in real-world data.

Now, if you've been following the AI scene, you've probably heard of Graph Neural Networks (GNNs) and large language models (LLMs). Each has its own strengths: GNNs excel at graph tasks such as node classification and link prediction, while LLMs shine at natural language processing tasks. But what happens when we combine the two? In the paper "A Survey of LLMs for Graphs," the authors explore exactly this: integrating LLMs with graph learning to push the boundaries of what we can achieve in graph-centric tasks.

Taxonomy of Models

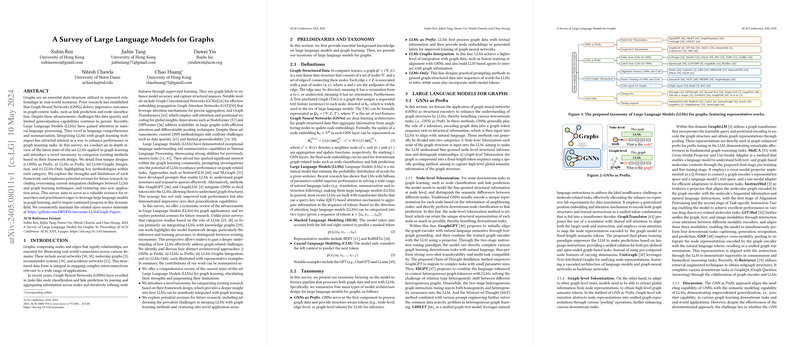

The paper introduces a novel taxonomy for categorizing existing methods into four distinct designs:

- GNNs as Prefix

- LLMs as Prefix

- LLMs-Graphs Integration

- LLMs-Only

Let's break these down one by one.

GNNs as Prefix

In this approach, GNNs serve as a preliminary step, converting individual nodes or the entire graph into tokens for the LLM. The idea is that the GNN captures structural information, which the LLM can then process for higher-level tasks. These methods fall into two categories:

- Node-level Tokenization: Each node is encoded as a distinct structural token.

- Graph-level Tokenization: Pooling methods compress the whole graph into tokens that capture its global semantics.
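To make the two tokenization styles concrete, here is a minimal, dependency-free sketch. It assumes a toy graph stored as an adjacency list, a single round of mean-aggregation message passing standing in for a trained GNN, and a hypothetical linear projector `project` that maps GNN outputs into the LLM's token-embedding space. Systems such as GraphGPT use trained encoders and learned projectors; every name, weight, and dimension below is illustrative only.

```python
from typing import Dict, List

Vector = List[float]


def gnn_layer(features: Dict[int, Vector], adj: Dict[int, List[int]]) -> Dict[int, Vector]:
    """One round of mean-aggregation message passing (stand-in for a trained GNN)."""
    out: Dict[int, Vector] = {}
    for node, vec in features.items():
        # Average the node's own vector with its neighbors' vectors.
        group = [vec] + [features[n] for n in adj.get(node, [])]
        out[node] = [sum(col) / len(group) for col in zip(*group)]
    return out


def project(vec: Vector, weight: List[Vector]) -> Vector:
    """Hypothetical linear projector into the LLM token space; weight has shape (d_llm, d_gnn)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]


def node_level_tokens(features, adj, weight) -> List[Vector]:
    """Node-level tokenization: one graph token per node, ordered by node id."""
    hidden = gnn_layer(features, adj)
    return [project(hidden[n], weight) for n in sorted(hidden)]


def graph_level_token(features, adj, weight) -> Vector:
    """Graph-level tokenization: mean-pool all node states into a single token."""
    hidden = list(gnn_layer(features, adj).values())
    pooled = [sum(col) / len(hidden) for col in zip(*hidden)]
    return project(pooled, weight)


# Toy path graph 0 - 1 - 2 with 2-dimensional node features.
adj = {0: [1], 1: [0, 2], 2: [1]}
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical weights: d_llm = 3, d_gnn = 2

node_tokens = node_level_tokens(features, adj, W)  # three tokens, one per node
graph_token = graph_level_token(features, adj, W)  # a single token for the whole graph
```

In a real pipeline, these vectors would be spliced into the LLM's input embedding sequence alongside ordinary text tokens. Mean pooling is just the simplest choice of pooling method; attention-based or hierarchical pooling are common alternatives.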

Representative Works:

- GraphGPT: Aligns graph encoders with natural language semantics.

- HiGPT: Combines a language-enhanced, in-context heterogeneous graph tokenizer with an LLM.

- GIMLET: Uses instructions to address challenges in molecule-related tasks.

Pros:

- Strong zero-shot transferability.

- Effective in downstream graph tasks.

Cons:

- Limited effectiveness for non-text-attributed graphs.

- Challenges in optimizing coordination between GNNs and LLMs.

LLMs as Prefix

Here, LLMs first process the graph data, generating embeddings or labels used to improve GNN performance. This category is split into:

- Embeddings from LLMs for GNNs: LLM-generated embeddings are used for GNN training.

- Labels from LLMs for GNNs: LLMs generate supervision labels to guide GNNs.
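The two-stage nature of this design is easier to see in code. The sketch below is a rough illustration, not any specific paper's pipeline. To keep it runnable offline, `embed_text` is a hypothetical stand-in for an LLM text encoder (a normalized hashed bag of words), and `llm_pseudo_label` is a hypothetical stand-in for prompting an LLM to annotate a node. In practice, both would be calls to a real model.

```python
import zlib
from typing import Dict, List

DIM = 8  # hypothetical embedding size


def embed_text(text: str, dim: int = DIM) -> List[float]:
    """Hypothetical stand-in for an LLM text encoder: an L2-normalized hashed bag of words."""
    vec = [0.0] * dim
    for token in text.lower().split():
        vec[zlib.crc32(token.encode()) % dim] += 1.0
    norm = sum(v * v for v in vec) ** 0.5 or 1.0
    return [v / norm for v in vec]


def llm_pseudo_label(text: str) -> str:
    """Hypothetical stand-in for prompting an LLM to annotate a node with a class label."""
    lowered = text.lower()
    return "ml" if "neural" in lowered or "learning" in lowered else "other"


def propagate(feats: Dict[int, List[float]], adj: Dict[int, List[int]]) -> Dict[int, List[float]]:
    """One mean-aggregation GNN step over the frozen, precomputed features."""
    out = {}
    for n, v in feats.items():
        group = [v] + [feats[m] for m in adj[n]]
        out[n] = [sum(col) / len(group) for col in zip(*group)]
    return out


node_text = {
    0: "graph neural networks for molecules",
    1: "deep learning on graphs",
    2: "an anthology of medieval poetry",
}
adj = {0: [1], 1: [0], 2: []}

# Stage 1: the "LLM" runs once, offline, over every node's text.
features = {n: embed_text(t) for n, t in node_text.items()}
pseudo_labels = {n: llm_pseudo_label(t) for n, t in node_text.items()}

# Stage 2: the GNN consumes the stored features; pseudo_labels would serve as training targets.
hidden = propagate(features, adj)
```

Because stage 1 finishes before stage 2 begins, any weakness in the stored embeddings or labels is baked into everything the GNN learns afterwards.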

Representative Works:

- G-Prompt: Adds a graph adapter to a language model to generate task-specific node embeddings.

- OpenGraph: Employs LLMs to generate nodes and edges for training graph foundation models.

Pros:

- Utilizes rich textual profiles to improve models.

- Enhances generalization capabilities.

Cons:

- Because the LLM and GNN are decoupled, training requires a two-stage learning process.

- Performance heavily depends on pre-generated embeddings/labels.

LLMs-Graphs Integration

For deeper integration, some approaches co-train GNNs and LLMs or align their feature spaces:

- Alignment between GNNs and LLMs: Aligns the two feature spaces using contrastive learning or iterative expectation-maximization (EM) training.

- Fusion Training of GNNs and LLMs: Combines modules, allowing bidirectional information flow.

- LLMs Agent for Graphs: Builds autonomous agents based on LLMs to interact with graph data.
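Contrastive alignment typically pulls each graph embedding toward the embedding of its paired text and pushes it away from the other texts in the batch. Below is a minimal sketch of a symmetric InfoNCE-style loss (the CLIP-style objective). It assumes the embeddings have already been produced by some GNN and some LLM, and the temperature `tau = 0.1` is an arbitrary choice. Individual papers such as MoMu may use different variants of this objective.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def similarity_matrix(a: List[Vector], b: List[Vector]) -> List[Vector]:
    """Cosine similarity between every graph embedding (rows) and every text embedding (columns)."""
    a_n = [normalize(v) for v in a]
    b_n = [normalize(v) for v in b]
    return [[sum(x * y for x, y in zip(u, w)) for w in b_n] for u in a_n]


def diagonal_cross_entropy(logits: List[Vector]) -> float:
    """Mean cross-entropy where the correct 'class' for row i is column i (its paired item)."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def info_nce(graph_emb: List[Vector], text_emb: List[Vector], tau: float = 0.1) -> float:
    """Symmetric InfoNCE: graph-to-text and text-to-graph directions averaged."""
    logits = [[s / tau for s in row] for row in similarity_matrix(graph_emb, text_emb)]
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (diagonal_cross_entropy(logits) + diagonal_cross_entropy(transposed))


graphs = [[1.0, 0.0], [0.0, 1.0]]           # e.g. outputs of a GNN
matched_texts = [[0.9, 0.1], [0.1, 0.9]]    # e.g. LLM embeddings of each graph's description
mismatched_texts = [[0.1, 0.9], [0.9, 0.1]]

loss_matched = info_nce(graphs, matched_texts)
loss_mismatched = info_nce(graphs, mismatched_texts)
```

Training would backpropagate this loss into both encoders, so a matched pair ends up with a much lower loss than a mismatched one.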

Representative Works:

- MoMu: Uses contrastive learning to align molecular graphs with text, supporting tasks such as text-to-molecule generation.

- GreaseLM: Integrates transformer layers and GNN layers.

- Graph Agent: Converts graphs into textual descriptions for LLMs to understand.
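Bidirectional fusion can be illustrated with a modality-interaction step roughly following GreaseLM's design. After each paired transformer/GNN layer, a special interaction token from the language model and a special interaction node from the GNN are concatenated, mixed by a small MLP, and split back, so each side sees the other's state. The sketch below shows only that mixing step. The transformer and GNN layers themselves are omitted, the ReLU activation is chosen for simplicity, and the fixed weights are hypothetical. A real model learns these weights and repeats the step across many stacked layers.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def interaction_mlp(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Two-layer MLP with a ReLU in between (activation chosen for simplicity)."""
    hidden = [max(0.0, h) for h in matvec(w1, x)]
    return matvec(w2, hidden)


def fuse(lm_token: Vector, graph_node: Vector, w1: Matrix, w2: Matrix) -> Tuple[Vector, Vector]:
    """Concatenate the LM interaction token and the GNN interaction node, mix them, split back."""
    mixed = interaction_mlp(lm_token + graph_node, w1, w2)
    d = len(lm_token)
    return mixed[:d], mixed[d:]


# Hypothetical weights for d = 2: w1 is the identity, and w2 averages each slot with the
# corresponding slot of the other modality, so information flows in both directions.
size = 4
w1 = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
w2 = [[0.5 if j in (i, (i + 2) % size) else 0.0 for j in range(size)] for i in range(size)]

new_lm_token, new_graph_node = fuse([1.0, 0.0], [0.0, 1.0], w1, w2)
```

After one step, the language-side token carries a component that originated on the graph side, and vice versa. This is the information exchange that lets both modules be optimized together.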

Pros:

- Minimizes the modality gap between structured data and text.

- Co-optimization of GNNs and LLMs.

Cons:

- Scalability issues with larger models and datasets.

- Agents that execute their operations in a single run have limited adaptability.

LLMs-Only

This method relies purely on LLMs to interpret and reason over graph data:

- Tuning-free: Describes graphs in natural language so that an unmodified LLM can understand them.

- Tuning-required: Aligns graph token sequences with natural language, followed by fine-tuning.
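In the tuning-free route, the graph must be written out as text before it reaches the LLM. The following minimal sketch shows one possible verbalization template: each node's attributes and each edge become a sentence, followed by the question. The template wording and the function name `verbalize_graph` are hypothetical, and systems such as GraphText use their own, often richer, formats. The resulting string would be sent to an LLM unchanged; no model is called here.

```python
from typing import Dict, List, Tuple


def verbalize_graph(
    node_attrs: Dict[str, str],
    edges: List[Tuple[str, str, str]],
    question: str,
) -> str:
    """Turn nodes, (source, relation, target) edges, and a question into a plain-text prompt."""
    lines = ["You are given a graph."]
    for node, desc in node_attrs.items():
        lines.append(f"Node {node}: {desc}.")
    for src, rel, dst in edges:
        lines.append(f"Node {src} {rel} node {dst}.")
    lines.append(f"Question: {question}")
    lines.append("Answer:")
    return "\n".join(lines)


prompt = verbalize_graph(
    {"A": "a paper on graph neural networks", "B": "a paper on large language models", "C": "a survey"},
    [("A", "cites", "B"), ("C", "cites", "A")],
    "Which nodes can node C reach by following citations?",
)
print(prompt)
```

Because every edge becomes its own sentence, the prompt grows linearly with the number of edges. This is one concrete reason large-scale graphs are hard to express purely as text.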

Representative Works:

- GraphText: Translates graphs into natural language.

- InstructGraph: Uses structured format verbalization for graph reasoning.

Pros:

- Leverages the pre-existing capabilities of LLMs for new tasks.

- Potential for multi-modal integration.

Cons:

- Difficulty in expressing large-scale graphs purely in text format.

- Challenges in preserving structural integrity without a graph encoder.

Future Directions

The paper also speculates on several future directions, such as:

- Developing multi-modal LLMs to handle diverse graph data.

- Improving efficiency to bring down computational costs.

- Exploring new graph tasks like graph generation and question answering.

- Building user-centric agents capable of handling open-ended questions from users.

Conclusion

In summary, integrating LLMs with GNNs offers exciting possibilities in graph-based tasks, from node classification to graph-based question answering. While each approach has its strengths and weaknesses, the paper provides a comprehensive overview that serves as a valuable resource for researchers in this dynamic field. Future research should aim to address existing challenges and explore new opportunities to further unlock the potential of LLMs in graph learning.