- The paper presents a formal proof that tool integration expands LLMs’ empirical and feasible support beyond what pure-text models can achieve.

- It introduces the ASPO algorithm to stabilize and control early tool invocation, enhancing efficiency on complex mathematical benchmarks.

- Empirical results show that TIR models consistently outperform pure-text counterparts, establishing a new framework for advanced AI reasoning.

Introduction

This paper presents a rigorous theoretical and empirical analysis of Tool-Integrated Reasoning (TIR) in LLMs, focusing on the integration of external computational tools such as Python interpreters. The authors provide the first formal proof that TIR strictly expands both the empirical and feasible support of LLMs, breaking the capability ceiling imposed by pure-text models. The work further introduces Advantage Shaping Policy Optimization (ASPO), a novel algorithm for stable and controllable behavioral guidance in TIR models, and demonstrates its efficacy through comprehensive experiments on challenging mathematical benchmarks.

The central theoretical contribution is a formal proof that tool integration enables LLMs to generate solution trajectories that are impossible or intractably improbable for pure-text models. The analysis builds on the "invisible leash" theory, which states that RL-based fine-tuning in pure-text environments cannot discover fundamentally new reasoning paths outside the base model's support. By introducing deterministic, non-linguistic state transitions through external tools, TIR models can access a strictly larger set of generative trajectories.
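To make "deterministic, non-linguistic state transitions" concrete, the sketch below runs a toy TIR rollout. Whenever the policy emits a code span, the span is executed and its output is appended to the context verbatim rather than sampled. The `<python>`/`<output>` delimiters and the functions `toy_policy`, `run_python`, and `tir_rollout` are hypothetical names chosen for illustration. A real system would sample from an LLM and execute code in a sandbox, which this sketch does not do.

```python
import contextlib
import io
import re

# Hypothetical delimiters; real TIR systems use their own tool-call format.
CODE_RE = re.compile(r"<python>(.*?)</python>", re.DOTALL)


def run_python(code: str) -> str:
    """Execute a snippet and capture its stdout (no sandboxing: illustration only)."""
    buf = io.StringIO()
    with contextlib.redirect_stdout(buf):
        exec(code, {})
    return buf.getvalue().strip()


def toy_policy(context: str) -> str:
    """Hypothetical stand-in for an LLM: calls the tool once, then answers from its output."""
    if "<output>" not in context:
        return "Compute the sum directly. <python>print(sum(i * i for i in range(1, 101)))</python>"
    result = context.rsplit("<output>", 1)[1].split("</output>", 1)[0]
    return f"The answer is {result}."


def tir_rollout(question: str, max_turns: int = 4) -> str:
    context = question
    for _ in range(max_turns):
        step = toy_policy(context)
        context += "\n" + step
        match = CODE_RE.search(step)
        if match is None:  # no tool call: the trajectory ends in plain text
            break
        # Deterministic, non-linguistic transition: the interpreter output is
        # appended verbatim instead of being sampled token by token.
        context += "\n<output>" + run_python(match.group(1)) + "</output>"
    return context


print(tir_rollout("What is 1^2 + 2^2 + ... + 100^2?").splitlines()[-1])
```

In this sketch, the text appended after `run_python(...)` is fully determined by the code, however unlikely the model would have been to produce that text on its own.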

The proof leverages the concept of a random oracle to show that, for certain problem instances, the probability of a pure-text model generating a correct solution is exponentially small, while a tool-integrated model can deterministically obtain the solution via a single tool call. This establishes that the empirical support of a pure-text model is a strict subset of that of a TIR model.
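The toy experiment below mirrors the shape of the random-oracle argument under stated assumptions. SHA-256 truncated to `n_bits` stands in for the random oracle (the usual heuristic, not the paper's construction), and a text-only model that cannot evaluate the oracle is modeled as a uniform guesser, which succeeds with probability 2^-n per attempt. A tool call returns the exact value every time. All function names are hypothetical.

```python
import hashlib
import random


def oracle(x: str, n_bits: int) -> int:
    """Random-oracle stand-in (assumption): SHA-256 of x, truncated to n_bits."""
    digest = int.from_bytes(hashlib.sha256(x.encode()).digest(), "big")
    return digest >> (256 - n_bits)


def text_only_guess(n_bits: int, rng: random.Random) -> int:
    """Without the tool, the best strategy against a random oracle is to guess."""
    return rng.getrandbits(n_bits)


def tool_call(x: str, n_bits: int) -> int:
    """With the tool, one deterministic call returns the exact value."""
    return oracle(x, n_bits)


def success_rates(x: str, n_bits: int, trials: int = 10_000, seed: int = 0) -> tuple[float, float]:
    rng = random.Random(seed)
    target = oracle(x, n_bits)
    text_hits = sum(text_only_guess(n_bits, rng) == target for _ in range(trials))
    tool_hits = sum(tool_call(x, n_bits) == target for _ in range(trials))
    return text_hits / trials, tool_hits / trials


for n in (4, 16, 32):
    text_rate, tool_rate = success_rates("instance-42", n)
    print(f"n={n:2d}  text-only={text_rate:.4f} (theory {2**-n:.2e})  tool={tool_rate:.1f}")
```

As `n_bits` grows, the guessing rate collapses toward 2^-n while the tool rate stays at 1.0, which is the gap the proof formalizes.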

Token Efficiency and Feasible Support

Beyond theoretical reachability, the paper introduces the concept of token efficiency to argue that tool integration is a practical necessity. Programmatic representations of algorithms (e.g., iteration, dynamic programming, graph search) have a token cost that stays constant as the problem grows, whereas natural-language simulations of the same algorithms scale linearly or superlinearly with problem size and quickly exceed any feasible context window.
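A rough way to see the scaling gap is to count tokens for a fixed program versus a step-by-step natural-language trace of the same loop. This sketch assumes whitespace-separated pieces as a crude token proxy (real tokenizers differ) and uses summation only for brevity; summation has a closed form, so treat it as a stand-in for algorithms such as dynamic programming or graph search that lack one. The names `PROGRAM`, `approx_tokens`, and `natural_language_trace` are hypothetical.

```python
# The program text is the same for every n: n is just a variable.
PROGRAM = "total = 0\nfor i in range(1, n + 1):\n    total += i\nprint(total)"


def approx_tokens(text: str) -> int:
    """Crude token proxy: whitespace-separated pieces (real tokenizers differ)."""
    return len(text.split())


def natural_language_trace(n: int) -> str:
    """Spell out every step, as a pure-text model simulating the loop would."""
    lines, total = [], 0
    for i in range(1, n + 1):
        new_total = total + i
        lines.append(f"Step {i}: add {i} to {total} to get {new_total}.")
        total = new_total
    lines.append(f"The total is {total}.")
    return "\n".join(lines)


for n in (10, 100, 1000):
    print(f"n={n:5d}  program={approx_tokens(PROGRAM):3d}  trace={approx_tokens(natural_language_trace(n)):6d}")
```

Under this proxy the program stays at 14 pieces while the trace costs 9n + 4, so any fixed budget is eventually exceeded by the trace.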

For any finite token budget B, there exist algorithmic strategies whose programmatic representations are concise, while their natural-language simulations are intractably verbose. The authors formalize this with the notion of feasible support under a token budget, proving that for sufficiently large problem instances, the feasible support of pure-text models is a strict subset of that of tool-integrated models.
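One way to state this precisely, using notation assumed here for illustration (the paper's own symbols and threshold conventions may differ): let $\pi(y \mid x)$ be the probability that a model generates trajectory $y$ on problem $x$, $|y|$ the trajectory's length in tokens, and $\epsilon > 0$ a probability threshold below which a trajectory is treated as unreachable in practice. The feasible support under budget $B$ is then

$$
\operatorname{supp}_B(\pi \mid x) = \{\, y : \pi(y \mid x) > \epsilon \ \text{and}\ |y| \le B \,\},
$$

and the claim is that for every finite $B$ there is a size threshold $n_0$ such that, for problem instances $x$ of size $n \ge n_0$,

$$
\operatorname{supp}_B(\pi_{\text{text}} \mid x) \subsetneq \operatorname{supp}_B(\pi_{\text{TIR}} \mid x).
$$

A simple sufficient condition shows why: if simulating the algorithm in natural language costs at least $c\,n$ tokens while the program costs at most a constant $k \le B$ tokens, then every $n > B / c$ pushes the text-only trajectory past the budget while the programmatic one stays inside it.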

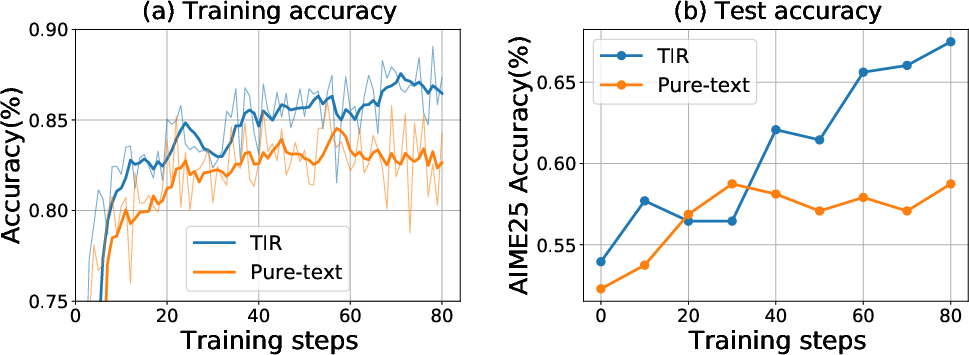

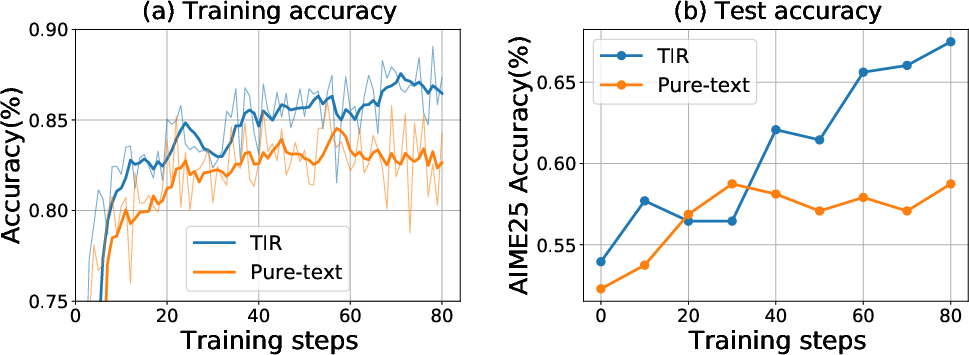

Figure 1: Training and testing accuracy curves for TIR and pure-text RL on Qwen3-8B, demonstrating superior performance of TIR across epochs.

Advantage Shaping Policy Optimization (ASPO)

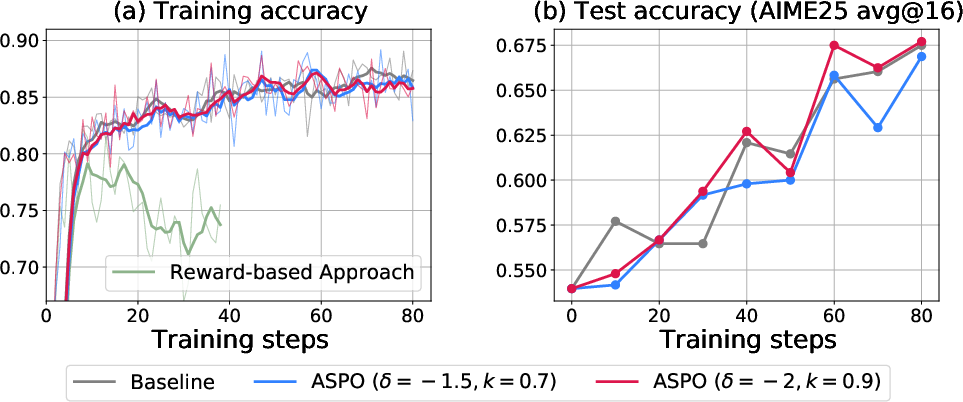

The paper identifies a critical challenge in guiding TIR model behavior: adding a reward bonus for early tool invocation destabilizes training in GRPO-like algorithms. Because these algorithms normalize rewards within each group of sampled responses, the bonus shifts the group mean, so a correct answer that invokes the tool late (or not at all) can receive a negative advantage and be penalized. ASPO circumvents this by directly modifying the advantage function, applying a clipped bias to encourage desired behaviors (e.g., earlier code invocation) while preserving the primary correctness signal.
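The normalization pitfall can be reproduced in a few lines. The sketch below applies GRPO-style group standardization (subtract the group mean, divide by the group standard deviation) to one prompt's four sampled responses. The 0.5 early-call bonus and the group composition are hypothetical values chosen to expose the effect.

```python
from statistics import mean, pstdev


def grpo_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Group-relative advantages: standardize rewards within one prompt's group of samples."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# One prompt, four sampled responses: (is_correct, called_code_early)
group = [(True, True), (True, True), (True, False), (False, True)]

BONUS = 0.5  # hypothetical early-call bonus folded into the reward
plain = [1.0 if ok else 0.0 for ok, _ in group]
shaped = [r + (BONUS if early else 0.0) for r, (_, early) in zip(plain, group)]

for name, rewards in (("plain", plain), ("shaped", shaped)):
    print(name, [round(a, 3) for a in grpo_advantages(rewards)])
```

With plain correctness rewards, the third response (correct, no early call) gets a positive advantage. Once the bonus is folded into the reward, the group mean rises to 1.125, above that response's reward of 1.0, so the same correct answer ends up with a negative advantage.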

ASPO ensures that the incentive for early tool use is a stable adjustment, subordinate to correctness, and avoids the volatility introduced by reward normalization. The method is generalizable to other behavioral guidance scenarios in TIR systems.
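Below is a minimal sketch of advantage-level shaping in the spirit of ASPO, under assumptions that are ours rather than the paper's. The correctness advantage comes from GRPO-style standardization of 0/1 rewards. The behavioral signal is how early the first code call occurs (relative position in [0, 1], `None` if there is no call). The bias is centered within the group and clipped to at most `k * |A_i|`. With `k < 1` the bias can never flip the sign of a nonzero correctness advantage, which is one way to keep the incentive subordinate to correctness. The function name `shaped_advantages` and the parameters `delta` and `k` are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Optional


def grpo_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Correctness advantage: standardize 0/1 rewards within one prompt's group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def shaped_advantages(
    correct: List[bool],
    first_call_pos: List[Optional[float]],
    delta: float = 0.5,
    k: float = 0.5,
) -> List[float]:
    """Hypothetical ASPO-style shaping: a clipped behavioral bias added to the advantage.

    first_call_pos holds the relative position in [0, 1] of each response's first
    code call (None if it never calls code). delta scales the bias; k caps it at
    k * |A_i|, so for k < 1 the correctness advantage keeps its sign.
    """
    base = grpo_advantages([1.0 if c else 0.0 for c in correct])
    # Earlier first calls score higher; responses without a call score 0.
    scores = [0.0 if p is None else 1.0 - p for p in first_call_pos]
    avg = mean(scores)
    shaped = []
    for a, s in zip(base, scores):
        bound = k * abs(a)
        bias = max(-bound, min(bound, delta * (s - avg)))  # clip to [-k|A|, k|A|]
        shaped.append(a + bias)
    return shaped


adv = shaped_advantages([True, True, True, False], [0.1, 0.2, None, 0.05])
print([round(a, 3) for a in adv])
```

One consequence of clipping to `k * |A_i|` in this particular form is that a group in which every response is equally correct (all advantages zero) receives no behavioral bias at all; the paper's actual clipping rule may treat that case differently.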

Empirical Validation: Mathematical Reasoning Benchmarks

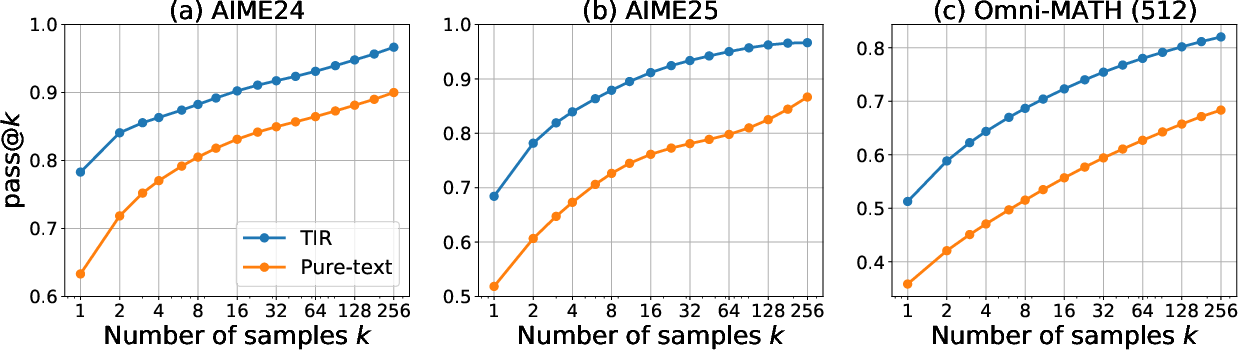

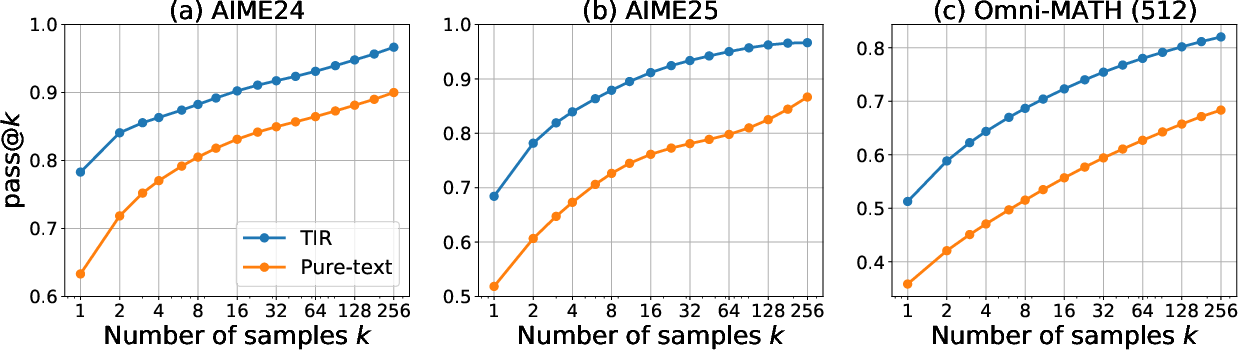

Experiments are conducted on the Qwen3-8B model using AIME24, AIME25, and Omni-MATH-512 benchmarks. The TIR model, equipped with a Python interpreter, decisively outperforms the pure-text baseline across all metrics, including pass@k for k up to 256.

Figure 2: Pass@k curves for TIR and pure-text models across AIME24, AIME25, and Omni-MATH-512, showing consistent superiority of TIR at all k.
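For reference, pass@k curves like these are commonly computed with the unbiased estimator introduced with the HumanEval benchmark (Chen et al., 2021): draw n >= k samples per problem, count the c correct ones, and average 1 - C(n-c, k)/C(n, k) over problems. The source does not state which estimator the paper uses, so treat this as an assumption; the function names are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of them correct."""
    if k > n:
        raise ValueError("need at least k samples per problem")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average per-problem pass@k over (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Toy usage: 256 samples each for three problems, with 0, 3, and 200 correct.
toy = [(256, 0), (256, 3), (256, 200)]
for k in (1, 16, 256):
    print(k, round(benchmark_pass_at_k(toy, k), 3))
```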

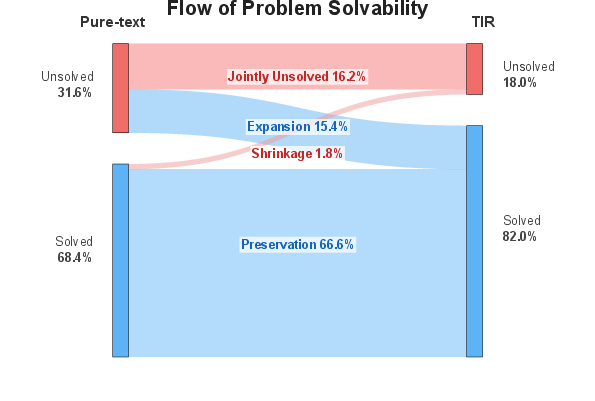

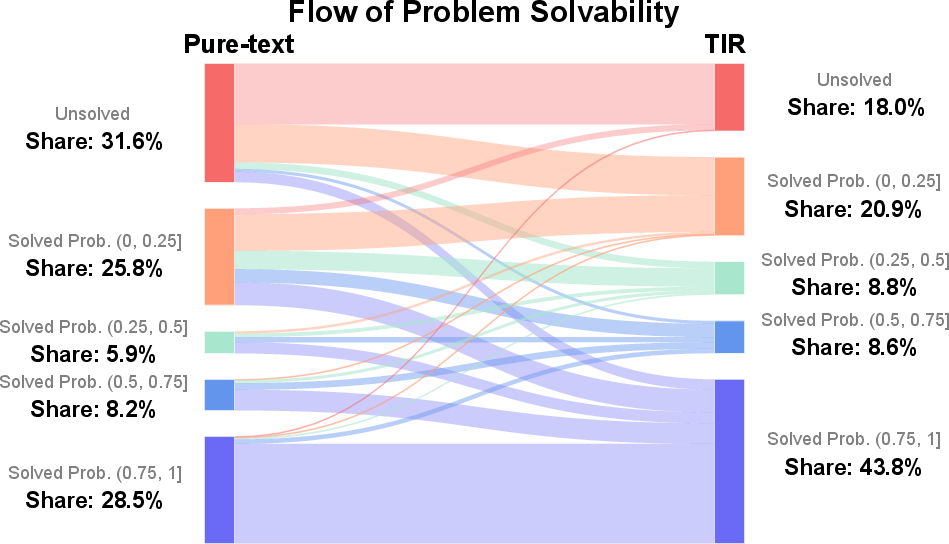

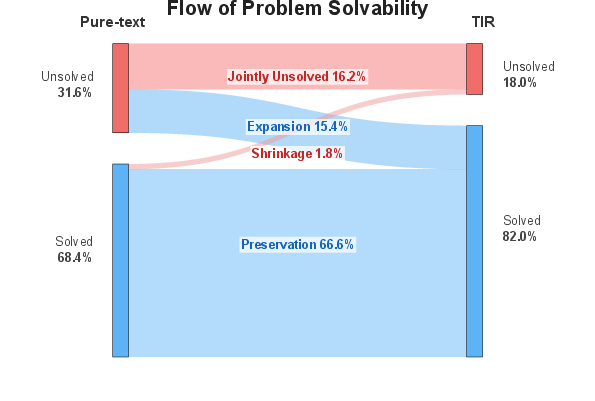

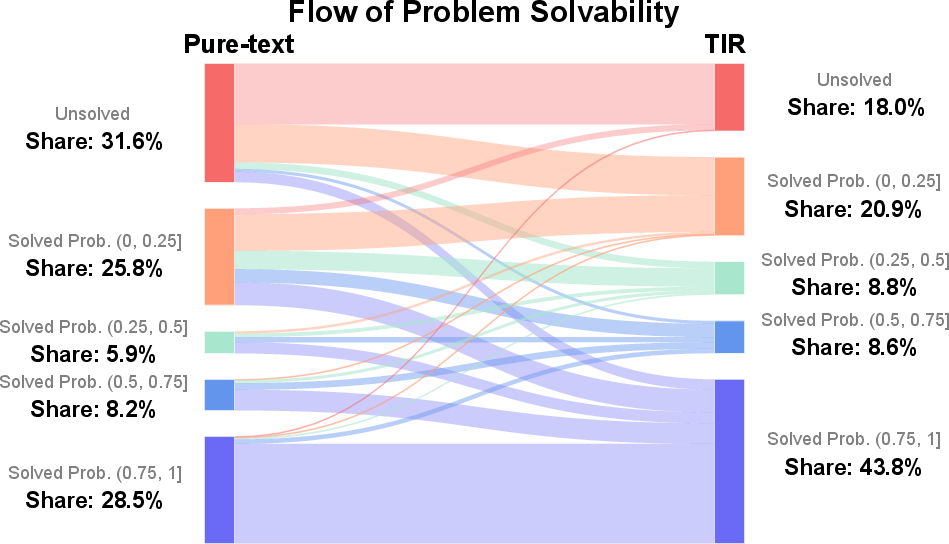

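A Sankey diagram traces how problem solvability changes from the pure-text model to the TIR model, revealing substantial capability expansion (problems solved only with TIR) and minimal capability shrinkage (problems solved only by the pure-text model), for a large net gain.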

Figure 3: Sankey diagram of problem solvability transitions on Omni-MATH-512, highlighting the expansion in solvable problems due to TIR.
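The flows in a solvability Sankey diagram reduce to set operations over the problems each model solves (for example, at a fixed k). The sketch below computes the four categories; the problem IDs are made-up placeholders, not data from the paper, and treating "solved" as "pass@k > 0" is an assumption.

```python
def solvability_flow(text_solved: set[str], tir_solved: set[str], all_problems: set[str]) -> dict[str, int]:
    """Count problems in each pure-text -> TIR solvability transition."""
    return {
        "solved by both": len(text_solved & tir_solved),
        "expansion (TIR only)": len(tir_solved - text_solved),
        "shrinkage (text only)": len(text_solved - tir_solved),
        "solved by neither": len(all_problems - text_solved - tir_solved),
    }


# Placeholder IDs for illustration only.
problems = {f"p{i}" for i in range(1, 7)}
print(solvability_flow({"p1", "p2", "p3"}, {"p1", "p2", "p4", "p5"}, problems))
```

The net gain is the expansion count minus the shrinkage count.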

Algorithmic Friendliness and Universality of TIR Benefits

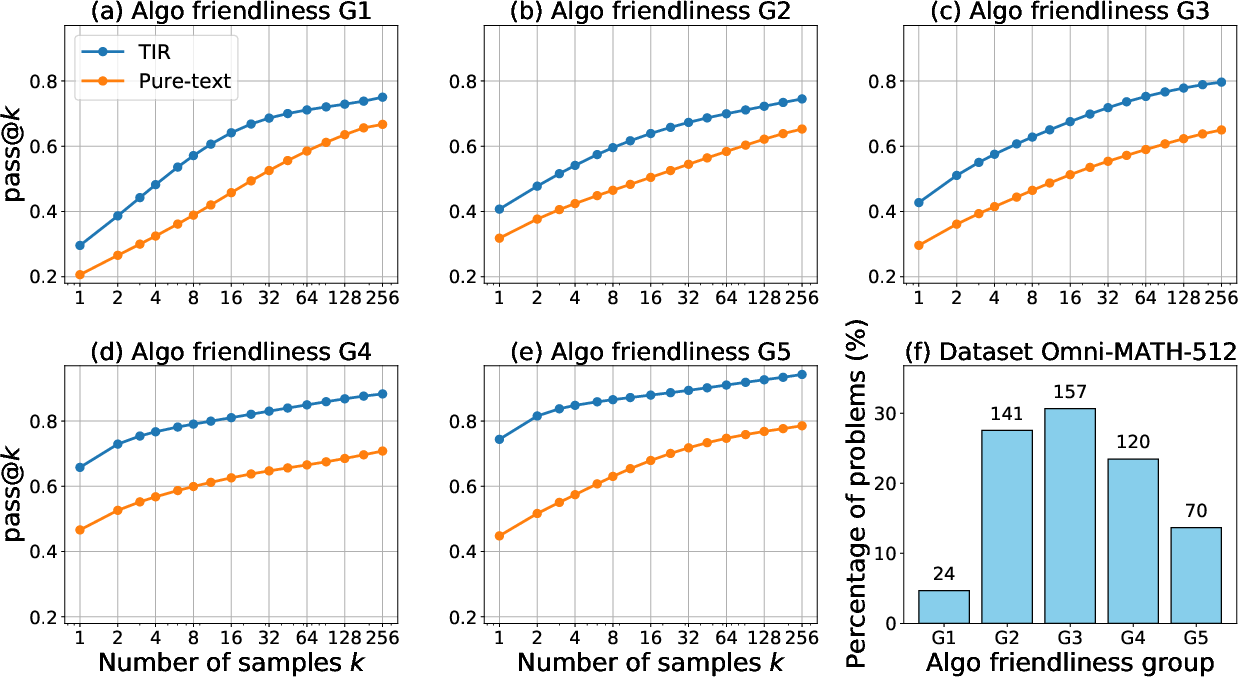

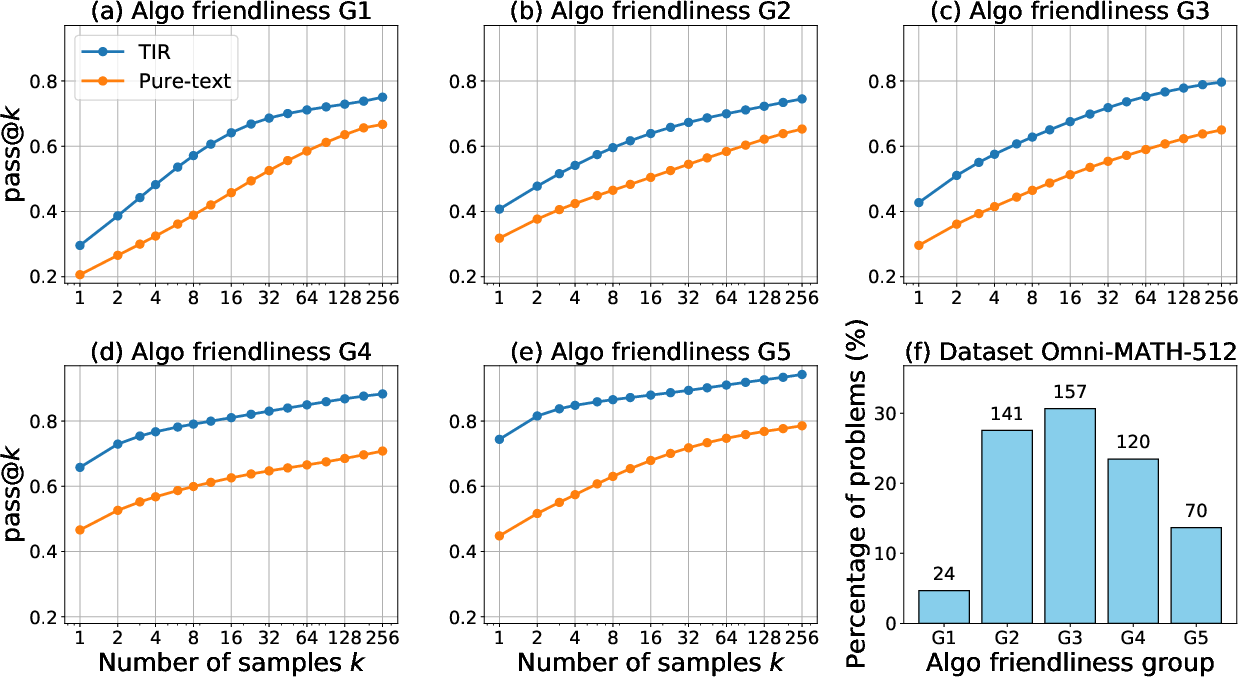

To test whether TIR's advantage is confined to computationally intensive problems, the authors introduce an "algorithmic friendliness" rubric that classifies problems by their amenability to algorithmic solutions. The analysis shows that TIR's benefits extend to problems requiring significant abstract insight, not just those suited to direct computation.

Figure 4: Pass@k curves grouped by algorithmic friendliness, demonstrating TIR's advantage even on low-friendliness (abstract) problems.
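Grouping results by a rubric score is straightforward once each problem has one. The sketch below assumes a hypothetical 1-to-5 friendliness score and a three-way bucketing (the source does not specify the rubric's scale), then averages per-problem pass@k within each bucket so the two models can be compared bucket by bucket. The toy numbers are placeholders.

```python
from collections import defaultdict
from statistics import mean


def bucket(score: int) -> str:
    """Hypothetical mapping of a 1-5 friendliness score to three buckets."""
    if score <= 2:
        return "low"
    if score == 3:
        return "medium"
    return "high"


def grouped_means(scores: dict[str, int], per_problem: dict[str, float]) -> dict[str, float]:
    """Average per-problem pass@k within each friendliness bucket."""
    groups: dict[str, list[float]] = defaultdict(list)
    for pid, s in scores.items():
        groups[bucket(s)].append(per_problem[pid])
    return {b: mean(v) for b, v in groups.items()}


# Placeholder data for illustration only.
scores = {"a": 1, "b": 2, "c": 3, "d": 5}
text = {"a": 0.25, "b": 0.25, "c": 0.5, "d": 0.5}
tir = {"a": 0.5, "b": 0.75, "c": 0.75, "d": 1.0}
print("text:", grouped_means(scores, text))
print("TIR: ", grouped_means(scores, tir))
```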

Qualitative analysis identifies three emergent patterns in TIR model behavior:

- Insight-to-computation transformation: The model uses abstract reasoning to reformulate problems into states amenable to programmatic solutions, then leverages the interpreter for efficient computation.

- Exploration and verification via code: The model employs the interpreter as an interactive sandbox for hypothesis testing and iterative refinement, especially on abstract problems.

- Offloading complex calculation: The model delegates intricate or error-prone computations to the interpreter, preserving reasoning integrity.

These patterns represent new computational equivalence classes, inaccessible to pure-text models within practical token budgets.
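As a concrete picture of the "exploration and verification via code" pattern (a hypothetical illustration, not a transcript from the paper), a model might test a conjecture on many small cases before committing to a proof. Here the conjecture is that 30 divides n^5 - n for every integer n. The empirical check is evidence, not a proof; the model would still need the abstract argument (e.g., Fermat's little theorem modulo 2, 3, and 5) to close the problem. The function name `common_divisor` is illustrative.

```python
from functools import reduce
from math import gcd


def common_divisor(limit: int) -> int:
    """Greatest common divisor of n**5 - n over 2 <= n < limit."""
    return reduce(gcd, (n**5 - n for n in range(2, limit)))


# Conjecture suggested by small cases: 30 divides n^5 - n for every integer n.
print("gcd of n^5 - n for 2 <= n < 200:", common_divisor(200))
print("30 divides all of them:", all((n**5 - n) % 30 == 0 for n in range(-200, 200)))
```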

ASPO: Behavioral Shaping and Stability

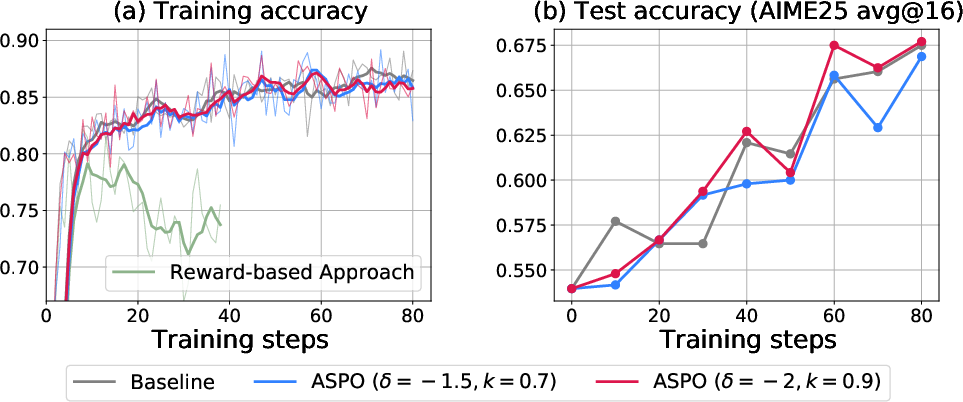

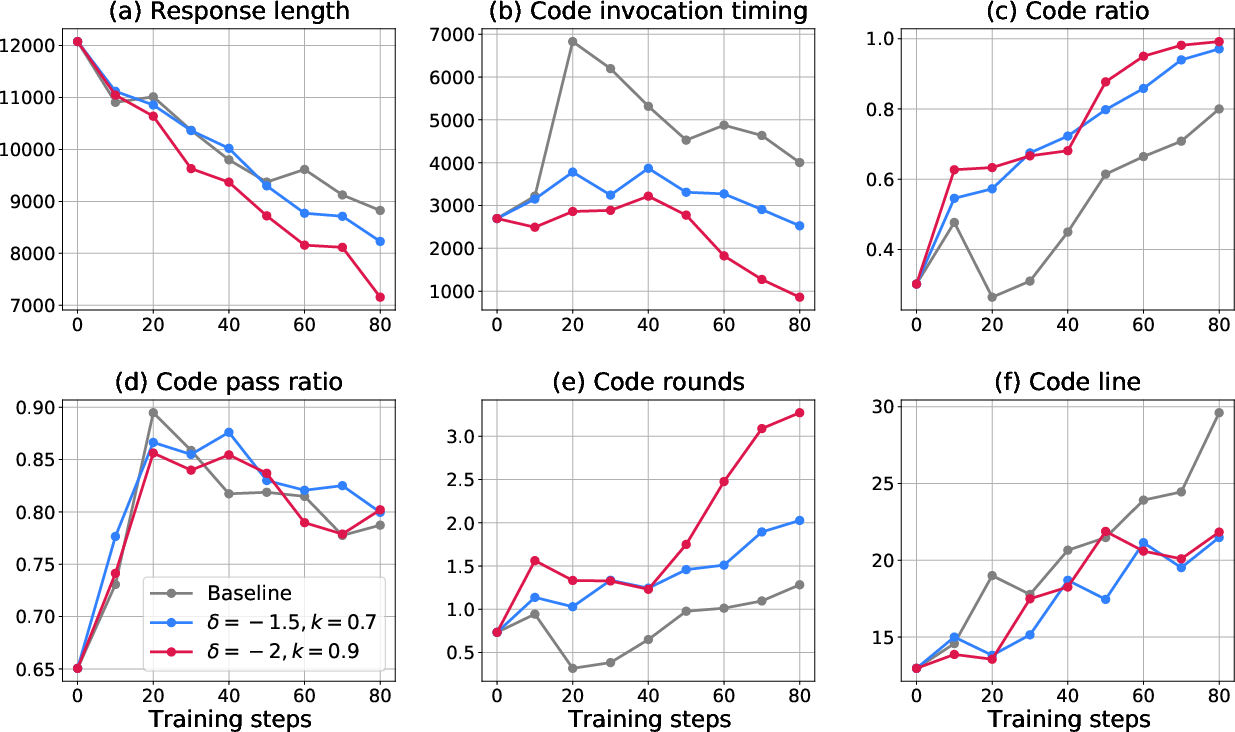

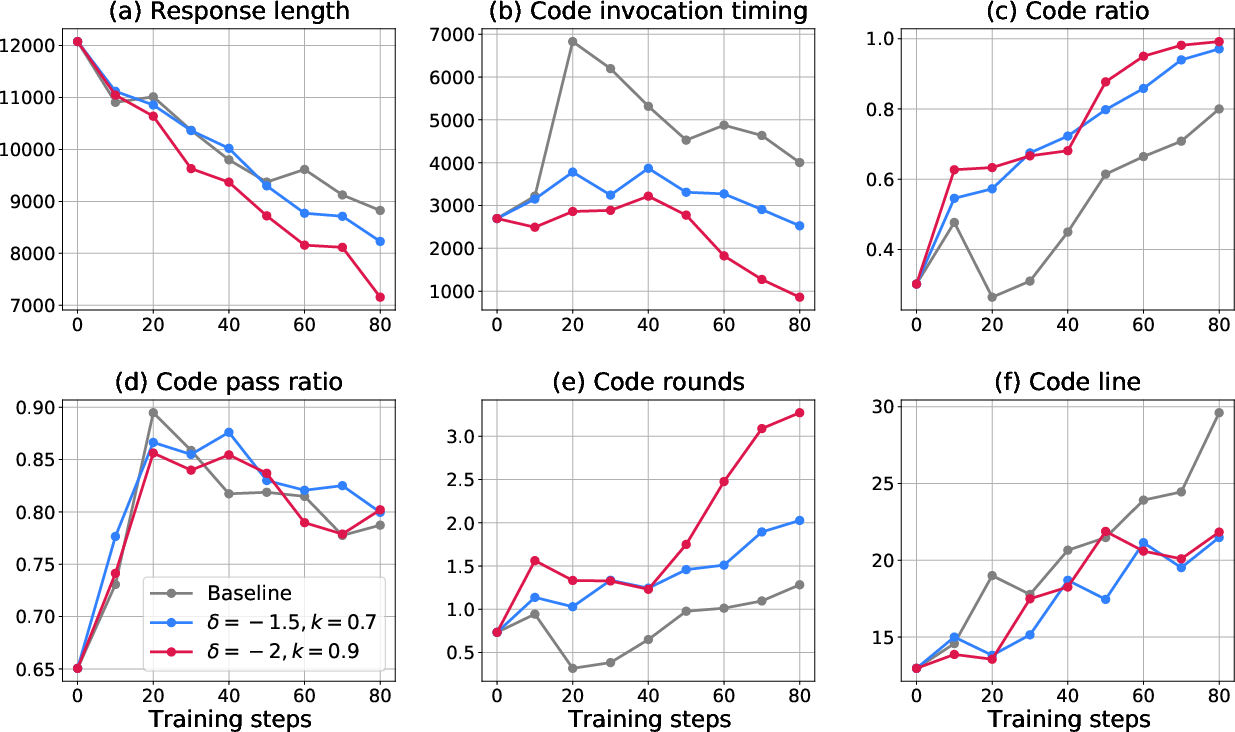

Empirical analysis of ASPO demonstrates that it maintains training stability and final task performance, unlike naive reward-based approaches. ASPO-trained models exhibit earlier and more frequent tool invocation, with controllable behavioral shifts and no evidence of reward hacking.

Figure 5: Training and testing accuracy for baseline and ASPO variants, confirming stability and performance preservation.

Figure 6: Evaluation of code-use behavior on AIME25, showing earlier code invocation and increased tool usage with ASPO.
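Behavioral metrics like those in Figure 6 can be computed per response along these lines. The sketch assumes a hypothetical `<python>...</python>` tool-call delimiter and measures position in characters rather than tokens; both are simplifications, and the paper's exact metric definitions may differ. The function names are illustrative.

```python
import re
from typing import List, Optional

CALL_RE = re.compile(r"<python>.*?</python>", re.DOTALL)  # hypothetical tool-call delimiter


def code_use_stats(response: str) -> dict:
    """Number of code calls and relative position (0 = start) of the first one."""
    calls = list(CALL_RE.finditer(response))
    first: Optional[float] = calls[0].start() / len(response) if calls else None
    return {"num_calls": len(calls), "first_call_rel_pos": first}


def summarize(responses: List[str]) -> dict:
    """Aggregate code-use behavior over a set of sampled responses."""
    stats = [code_use_stats(r) for r in responses]
    positions = [s["first_call_rel_pos"] for s in stats if s["first_call_rel_pos"] is not None]
    return {
        "frac_with_code": sum(s["num_calls"] > 0 for s in stats) / len(stats),
        "mean_calls": sum(s["num_calls"] for s in stats) / len(stats),
        "mean_first_call_rel_pos": sum(positions) / len(positions) if positions else None,
    }


print(summarize(["Plan first. <python>print(1)</python> Done.", "No tool use here."]))
```

Lower `mean_first_call_rel_pos` and higher `mean_calls` would correspond to the "earlier and more frequent" invocation described above.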

Implications and Future Directions

The findings advocate for a paradigm shift in LLM design: treating LLMs as core reasoning engines that delegate computational tasks to specialized tools. The formal framework and ASPO algorithm provide principled methods for expanding and controlling LLM capabilities in tool-integrated settings. Extensions to other tools (e.g., search engines, verifiers, external memory) are discussed, with the analytical framework generalizing beyond Python interpreters.

Figure 7: Detailed flow of problem solvability on Omni-MATH-512, further illustrating the expansion enabled by TIR.

Conclusion

This work establishes a formal and empirical foundation for the superiority of Tool-Integrated Reasoning in LLMs. By proving strict support expansion and demonstrating practical necessity via token efficiency, the paper shifts the focus from empirical success to principled understanding. The introduction of ASPO enables stable and controllable behavioral guidance in TIR models. The results have broad implications for the design and deployment of advanced AI agents, suggesting that future systems should be architected for synergistic reasoning with external tools, and that behavioral shaping should be performed at the advantage level for stability and efficacy.