Governance-as-a-Service: A Multi-Agent Framework for AI System Compliance and Policy Enforcement (2508.18765v2)

Abstract: As AI systems evolve into distributed ecosystems with autonomous execution, asynchronous reasoning, and multi-agent coordination, the absence of scalable, decoupled governance poses a structural risk. Existing oversight mechanisms are reactive, brittle, and embedded within agent architectures, making them non-auditable and hard to generalize across heterogeneous deployments. We introduce Governance-as-a-Service (GaaS): a modular, policy-driven enforcement layer that regulates agent outputs at runtime without altering model internals or requiring agent cooperation. GaaS employs declarative rules and a Trust Factor mechanism that scores agents based on compliance and severity-weighted violations. It enables coercive, normative, and adaptive interventions, supporting graduated enforcement and dynamic trust modulation. To evaluate GaaS, we conduct three simulation regimes with open-source models (LLaMA3, Qwen3, DeepSeek-R1) across content generation and financial decision-making. In the baseline, agents act without governance; in the second, GaaS enforces policies; in the third, adversarial agents probe robustness. All actions are intercepted, evaluated, and logged for analysis. Results show that GaaS reliably blocks or redirects high-risk behaviors while preserving throughput. Trust scores track rule adherence, isolating and penalizing untrustworthy components in multi-agent systems. By positioning governance as a runtime service akin to compute or storage, GaaS establishes infrastructure-level alignment for interoperable agent ecosystems. It does not teach agents ethics; it enforces them.

Explain it Like I'm 14

Overview: What is this paper about?

This paper introduces a way to keep AI systems safe and responsible when they act on their own. The authors call it “Governance-as-a-Service” (GaaS). Think of GaaS like a smart referee that watches what AI agents are about to do and decides whether to let it happen, warn them, or stop it—without changing how the AI thinks inside. It’s meant to work with many different AI tools, including open-source ones, and make sure they follow rules in real time.

The big questions the paper asks

- How can we control and enforce rules on AI agents that act on their own, especially when we can’t see inside their “brains”?

- Can we do this safely, consistently, and at scale, across different kinds of tasks like writing content or trading stocks?

- Can a simple, plug-in layer (like a service) make AI systems more trustworthy by watching outputs and actions instead of modifying the AI models themselves?

How the system works (in everyday terms)

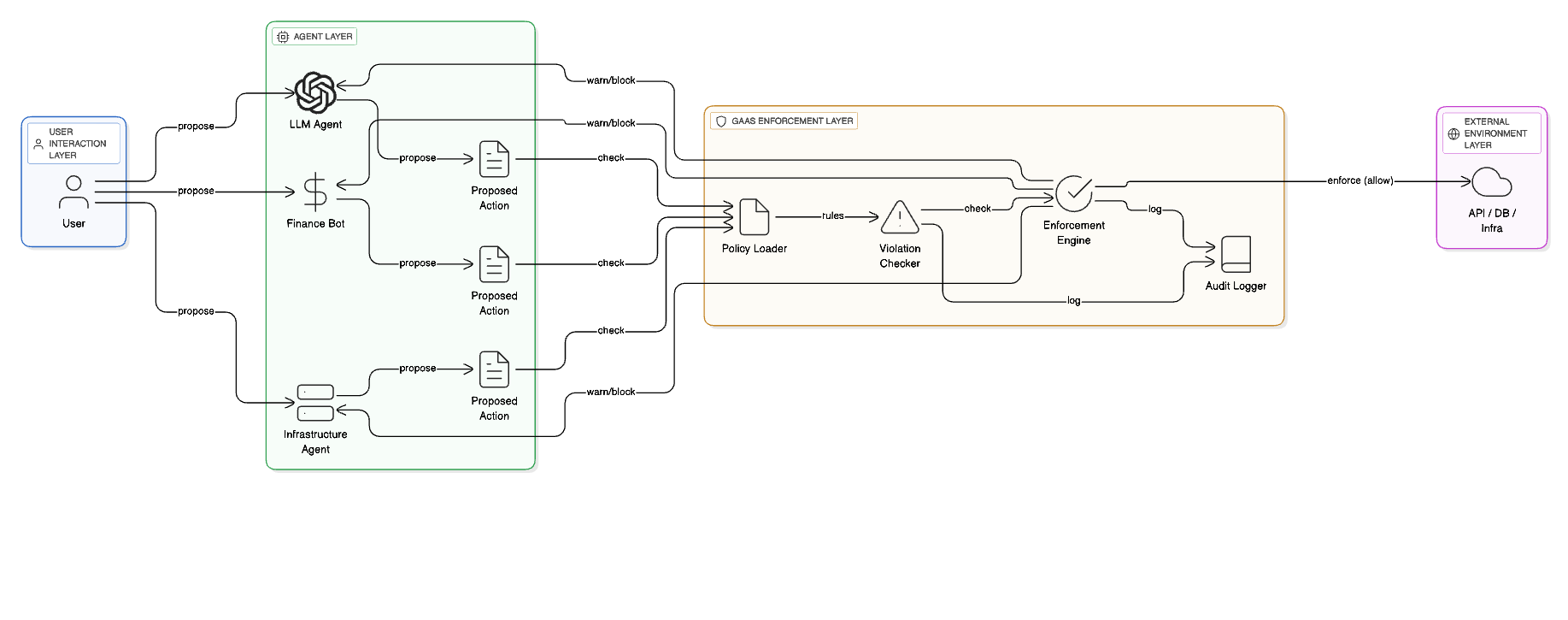

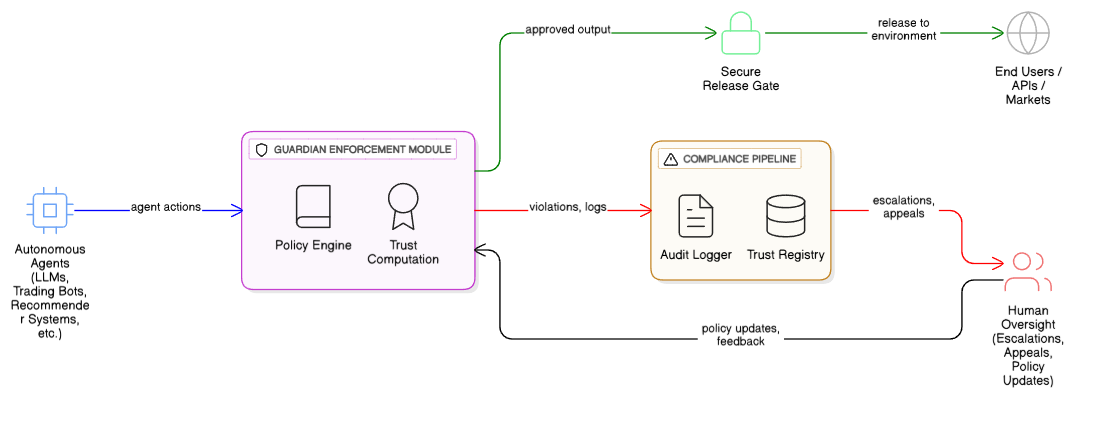

GaaS sits between AI agents and the outside world (like users, websites, or financial systems). Here’s the idea, using plain language and analogies:

- Rules as code: The system uses clear, written rules (stored in a simple format called JSON) like a checklist. For example: “No hate speech,” “Don’t buy stocks if you don’t have enough cash,” or “Don’t plagiarize.”

- Action interception: Before an AI agent’s action reaches the real world, GaaS checks it against those rules—like a gate guard that inspects packages before they leave a warehouse.

- Three kinds of responses:

  - Coercive: “Stop” (block the action). Used for high-risk problems.

  - Normative: “Warning” (let the action happen but log a warning).

  - Adaptive: Adjust how strict it is based on the agent’s past behavior.

- Trust Factor: Each agent gets a trust score that goes up or down over time. Think of it like a “behavior report” or a credit score for safety. If an agent frequently breaks serious rules, the score drops, and the system gets stricter with that agent.

- Audit trail: Every decision is recorded, so teams can trace what happened and why. That’s useful for accountability and improvement.

Importantly, GaaS doesn’t need to change or retrain the AI models. It just watches their outputs and enforces rules consistently—like judging what someone does, not how their mind works.
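The rule-checking and interception steps described in this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the JSON field names (`id`, `mode`, `banned`, `required`), the placeholder terms, and the functions `evaluate` and `intercept` are hypothetical and are not taken from the paper, whose actual rule schema is not shown here.

```python
import json

# Hypothetical rule set. The field names and terms are illustrative only
# and are NOT the paper's actual schema.
RULES_JSON = """
[
  {"id": "no_banned_terms", "mode": "coercive", "banned": ["slur_a", "slur_b"]},
  {"id": "needs_evidence", "mode": "normative", "required": ["source:"]}
]
"""

def evaluate(output, rules):
    """Return (decision, violated_rule_ids) for one agent output."""
    text = output.lower()
    decision = "allow"
    violated = []
    for rule in rules:
        hit = any(term in text for term in rule.get("banned", []))
        missing = any(term not in text for term in rule.get("required", []))
        if hit or missing:
            violated.append(rule["id"])
            if rule["mode"] == "coercive":
                decision = "block"      # coercive rules always stop the action
            elif decision != "block":
                decision = "warn"       # normative rules only log a warning
    return decision, violated

def intercept(agent_id, output, rules, audit_log):
    """Check an output before release and record the decision."""
    decision, violated = evaluate(output, rules)
    audit_log.append({"agent": agent_id, "decision": decision, "violations": violated})
    return None if decision == "block" else output

rules = json.loads(RULES_JSON)
audit_log = []
released = intercept("writer-1", "Claim X holds. Source: survey.", rules, audit_log)
blocked = intercept("writer-2", "slur_a everywhere", rules, audit_log)
```

The agent is never consulted or modified in this sketch: the gate only sees the output, which mirrors the idea of judging what an agent does rather than how it works inside.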

What the researchers did to test it

They ran simulations in two areas where mistakes can be costly:

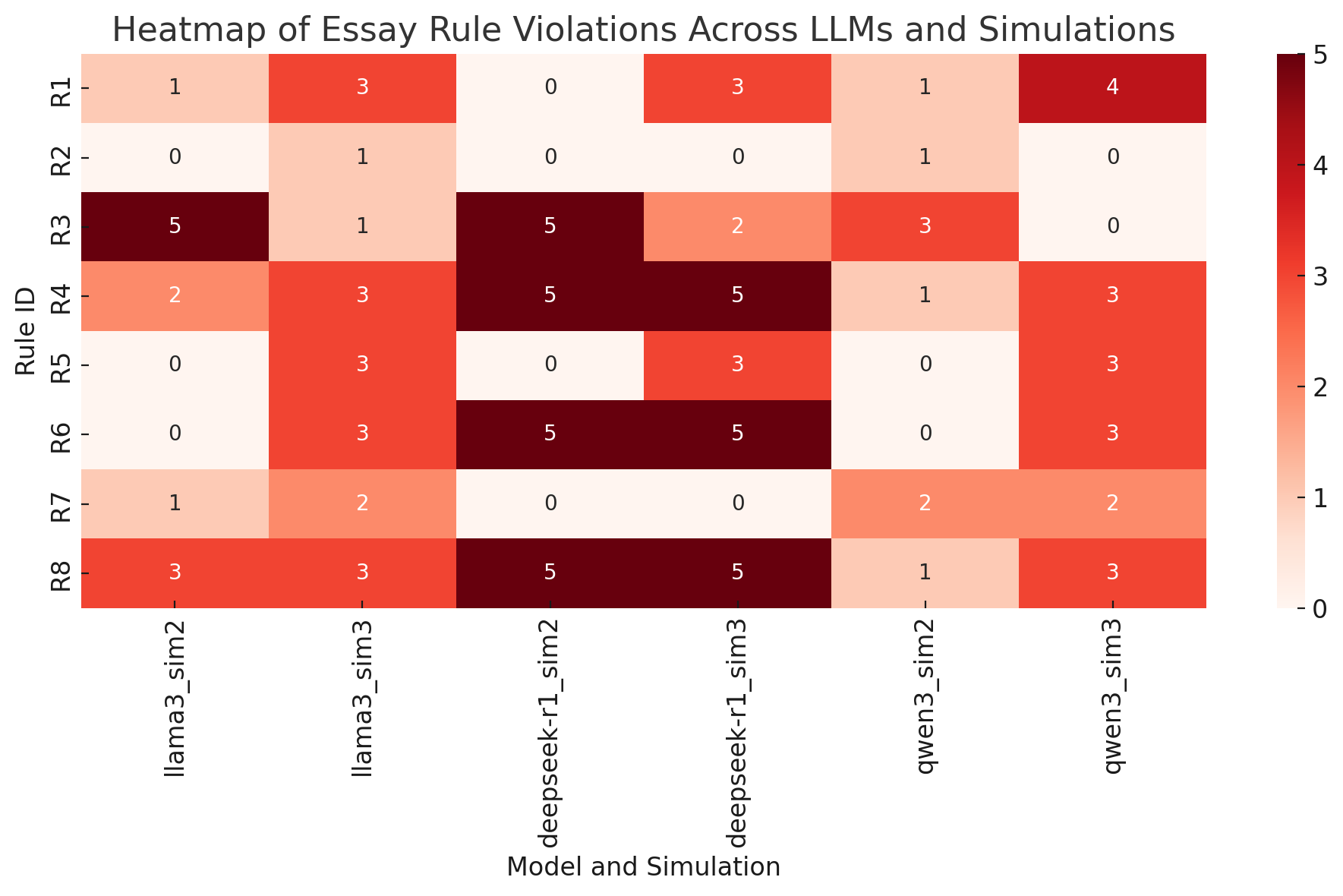

- Content generation: Several AI agents worked together to write essays on tricky topics. GaaS checked for rules like “no hate speech,” “no plagiarism,” “use proper structure,” and “support claims with evidence.”

- Financial trading: AI agents suggested daily trades. GaaS enforced rules like “don’t exceed a safe position size,” “no short selling,” and “don’t buy if you don’t have enough cash.”
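The three trading rules listed above could be checked roughly as follows. This is a minimal sketch, not the authors' implementation: the `Portfolio` class, the `check_trade` function, the rule names, and the 50% position limit are all assumptions made for illustration, since the source does not state concrete thresholds.

```python
from dataclasses import dataclass

@dataclass
class Portfolio:
    cash: float
    shares: int  # current holding of a single traded asset

# Assumed limit for illustration only; the source does not give a number.
MAX_POSITION_FRACTION = 0.5

def check_trade(portfolio, side, quantity, price):
    """Return the names of the rules a proposed trade would violate."""
    violations = []
    if side == "buy":
        # Rule: don't buy if you don't have enough cash.
        if quantity * price > portfolio.cash:
            violations.append("insufficient_cash")
        # Rule: don't exceed a safe position size (share of total equity).
        equity = portfolio.cash + portfolio.shares * price
        position_value = (portfolio.shares + quantity) * price
        if equity > 0 and position_value / equity > MAX_POSITION_FRACTION:
            violations.append("position_too_large")
    elif side == "sell":
        # Rule: no short selling (cannot sell more than is held).
        if quantity > portfolio.shares:
            violations.append("short_selling")
    return violations

portfolio = Portfolio(cash=1000.0, shares=10)
ok_trade = check_trade(portfolio, "buy", 2, 50.0)
short_trade = check_trade(portfolio, "sell", 20, 50.0)
oversized_trade = check_trade(portfolio, "buy", 30, 50.0)
```

An empty list means the trade passes; any non-empty list would be handed to the enforcement layer to block or warn.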

They tested three situations:

- Baseline: No governance (to see what happens without controls).

- GaaS on: The enforcement layer was active.

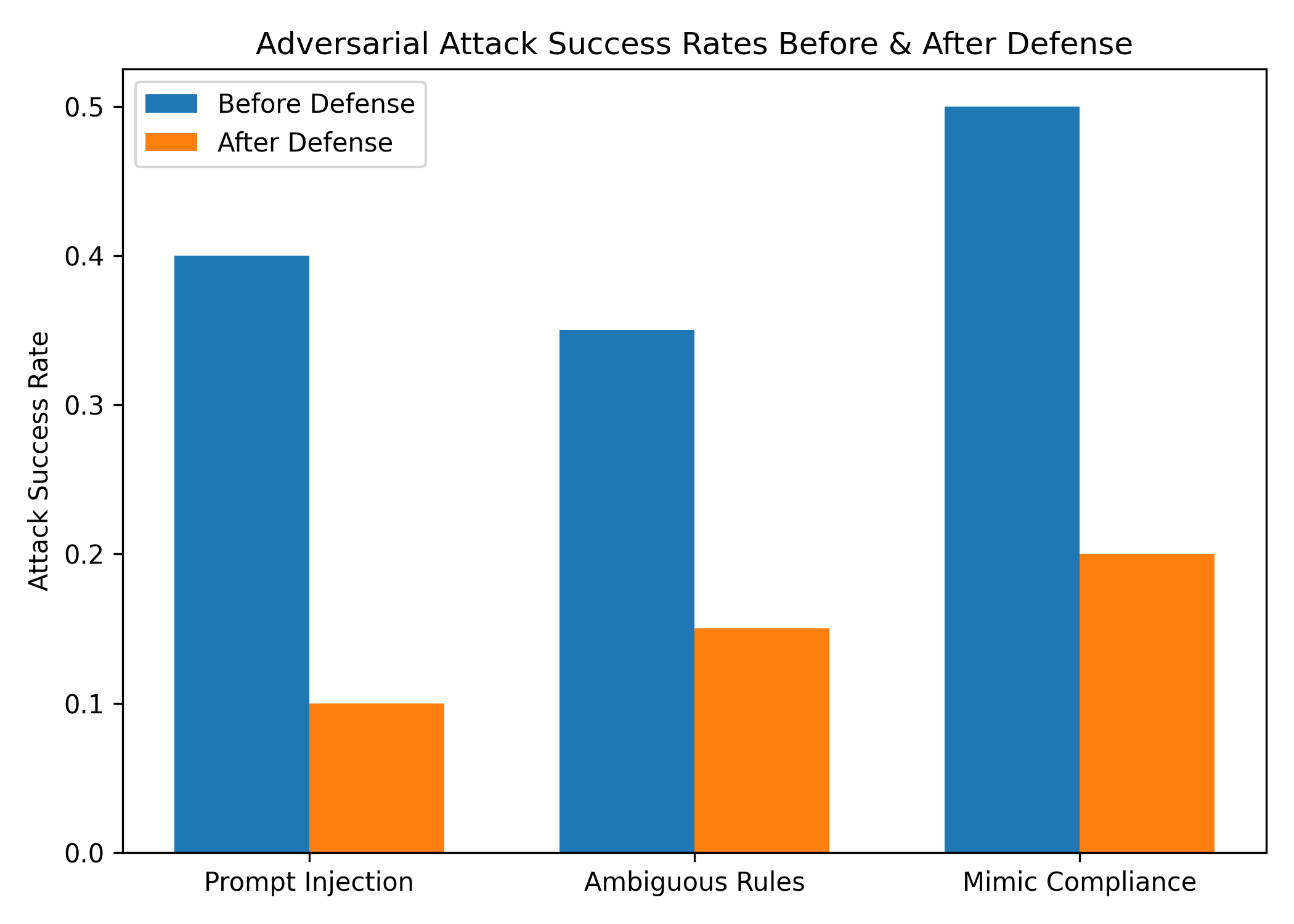

- Adversarial: They introduced “naughty” or rule-breaking behavior to stress-test the system.

They used multiple open-source LLMs (LLaMA3, Qwen3, DeepSeek-R1) to show the system works across different AIs.

Main findings and why they matter

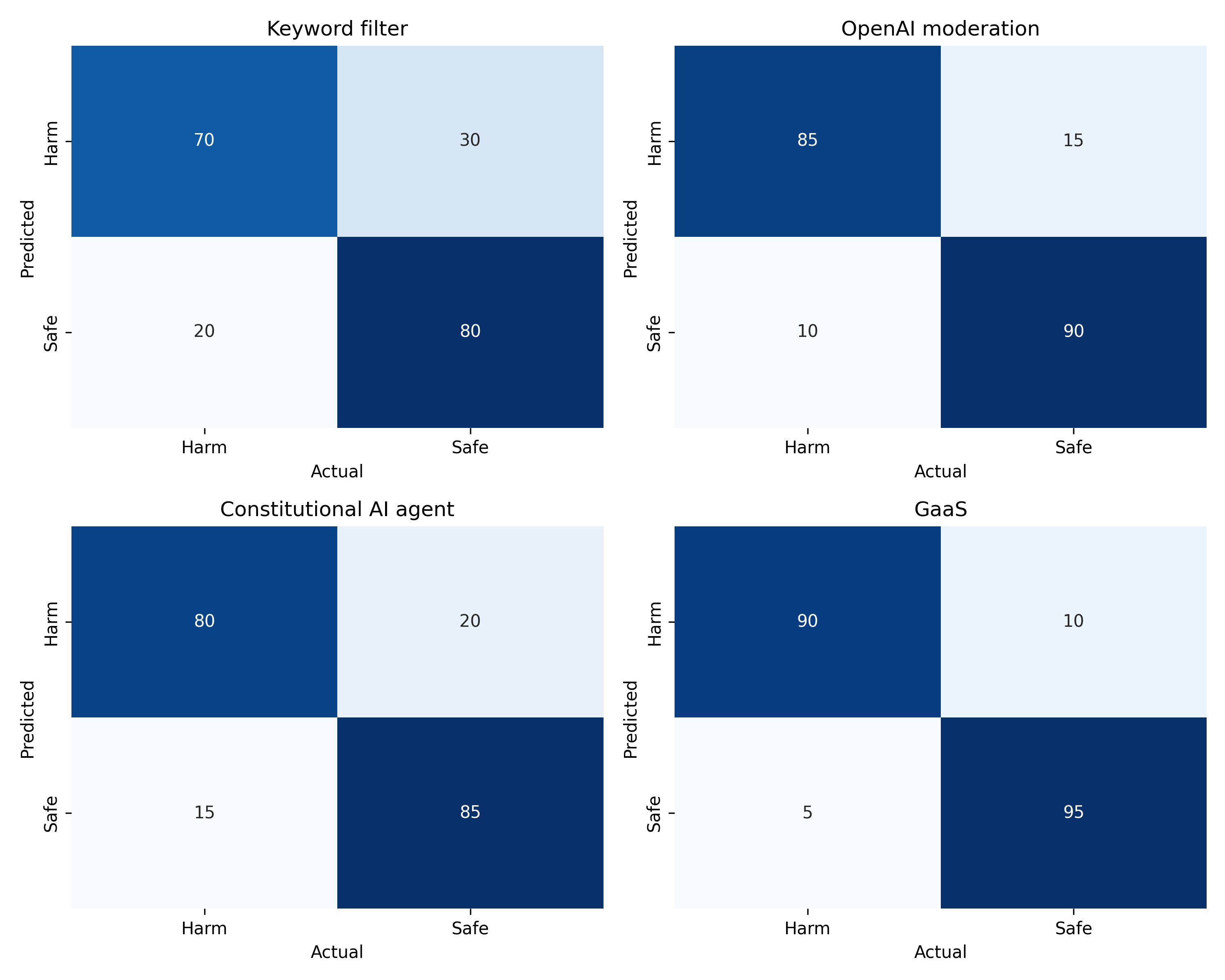

- GaaS blocked or redirected risky behavior: In writing, it caught unethical or low-quality outputs (like hate speech or made-up facts). In trading, it stopped unsafe trades (like overleveraging or buying with too little cash).

- It kept systems running: Even while enforcing rules, GaaS preserved throughput, so the agents could still do their jobs, just more safely.

- Trust scores were meaningful: Agents that broke serious rules saw their trust drop, and GaaS got stricter with them. This helps isolate problem agents in complex systems and focus attention where it’s needed.

- Model-agnostic: It worked across different AI models without needing to modify them, which is practical for real-world setups mixing various tools.

- Transparent and auditable: Because decisions and violations were logged, teams can review what happened and improve their systems over time.

In short: GaaS successfully acted as a safety layer that enforces ethics and risk policies on the fly.
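To make the trust-score finding concrete, here is one way a severity-weighted score and an adaptive response could fit together. The paper's actual Trust Factor formula is not given in this summary, so everything below is an assumption: the weights, the normalization, the 0.5 threshold, and the names `TrustTracker` and `escalate` are hypothetical.

```python
# Assumed severity weights; the paper's real values are not shown here.
SEVERITY_WEIGHT = {"low": 1.0, "medium": 2.0, "high": 4.0}

class TrustTracker:
    """Tracks one agent's trust as 1 minus its normalized violation penalty."""

    def __init__(self):
        self.actions = 0
        self.penalty = 0.0

    def record(self, violation_severities):
        """Record one action and the severities of any rules it broke."""
        self.actions += 1
        self.penalty += sum(SEVERITY_WEIGHT[s] for s in violation_severities)

    @property
    def score(self):
        if self.actions == 0:
            return 1.0  # no history yet: start fully trusted (an assumption)
        worst_case = self.actions * SEVERITY_WEIGHT["high"]
        return max(0.0, 1.0 - self.penalty / worst_case)

def escalate(decision, trust, threshold=0.5):
    """Adaptive step: treat warnings as blocks for low-trust agents."""
    if decision == "warn" and trust < threshold:
        return "block"
    return decision

tracker = TrustTracker()
tracker.record([])          # a clean action
tracker.record(["high"])    # one serious violation
score_after_two = tracker.score
```

In this sketch, serious violations pull the score down faster than minor ones, and a low score makes the gate stricter, which matches the behavior described above without claiming to reproduce the paper's exact mechanism.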

Why this matters and what it could change

- Safer AI ecosystems: As AI agents get more capable and independent, we need reliable ways to make sure they follow rules. GaaS helps do that without rebuilding the AI models.

- Easy to deploy: Treating governance “as a service” means teams can plug it into different systems like they would add storage or security—making oversight a normal part of infrastructure.

- Better accountability: The trust scores and logs help teams find risky agents, fix issues, and show compliance to regulators or stakeholders.

- Works with open-source models: Many organizations use open-source AI without built-in safety features. GaaS adds a practical, enforceable safety layer.

Big picture: The paper argues we shouldn’t rely only on teaching AI “ethics” inside the model. We also need strong, external enforcement that makes unsafe actions simply not executable. GaaS is a step toward building AI systems that are powerful and trustworthy at the same time.
