- The paper introduces a multipartite network framework that maps cognitive skills, datasets, and LLM modules, uncovering distributed skill encoding.

- It employs community detection and spectral analysis to reveal that module communities do not strictly align with predefined cognitive functions.

- Fine-tuning experiments show that global updates outperform community-based strategies, emphasizing distributed learning dynamics in LLMs.

Introduction

The paper "Unraveling the cognitive patterns of LLMs through module communities" (2508.18192) presents a network-theoretic framework for interpreting the internal organization of LLMs by mapping cognitive skills, datasets, and architectural modules into a multipartite network. Drawing on analogies from neuroscience and cognitive science, the authors analyze three things: how cognitive skills are distributed across LLM modules, what community structures emerge within these networks, and what those structures imply for fine-tuning and interpretability. The paper combines structural pruning, community detection, and spectral analysis to identify both parallels and divergences between artificial and biological cognition.

Multipartite Network Construction: Skills, Datasets, and Modules

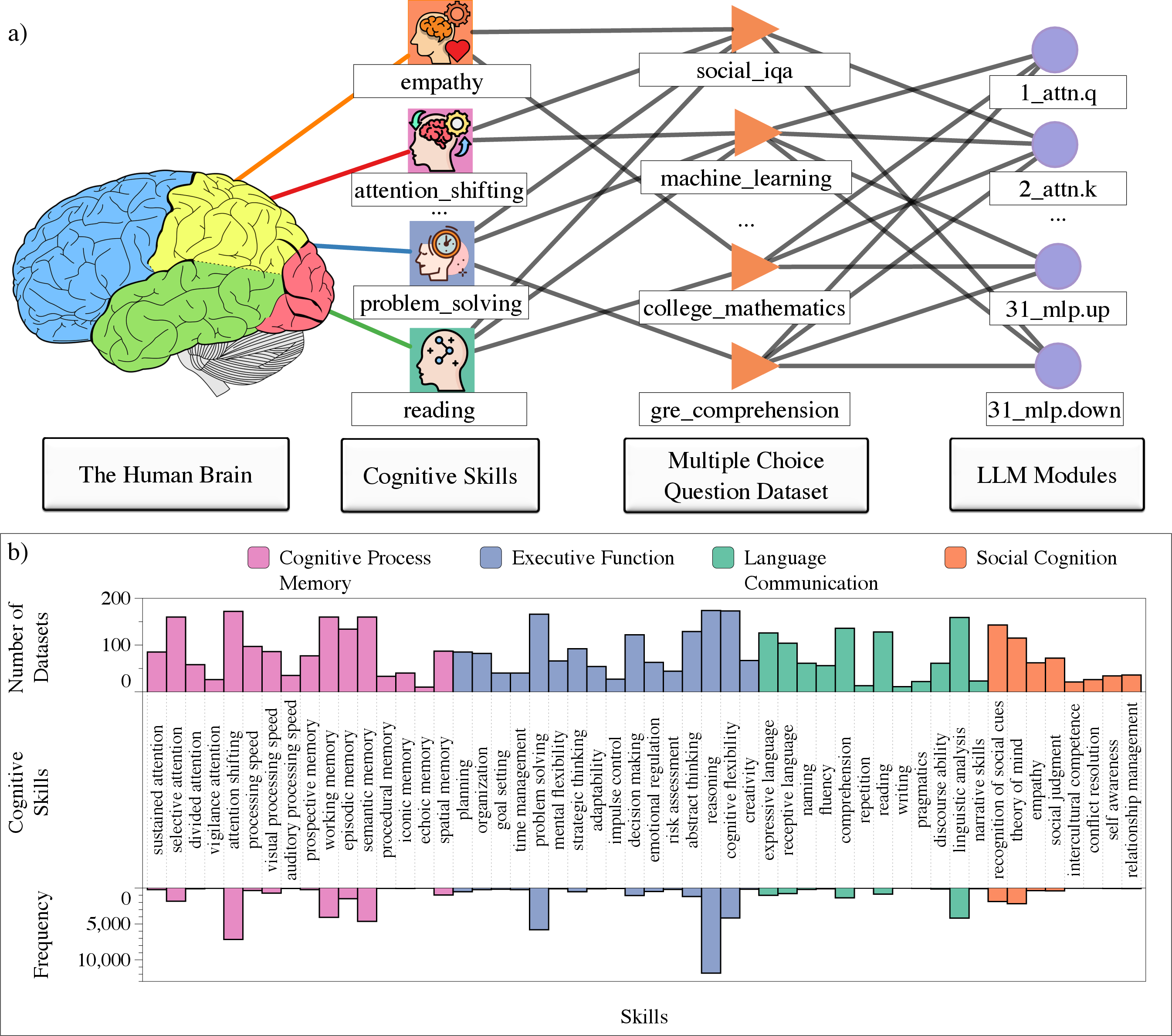

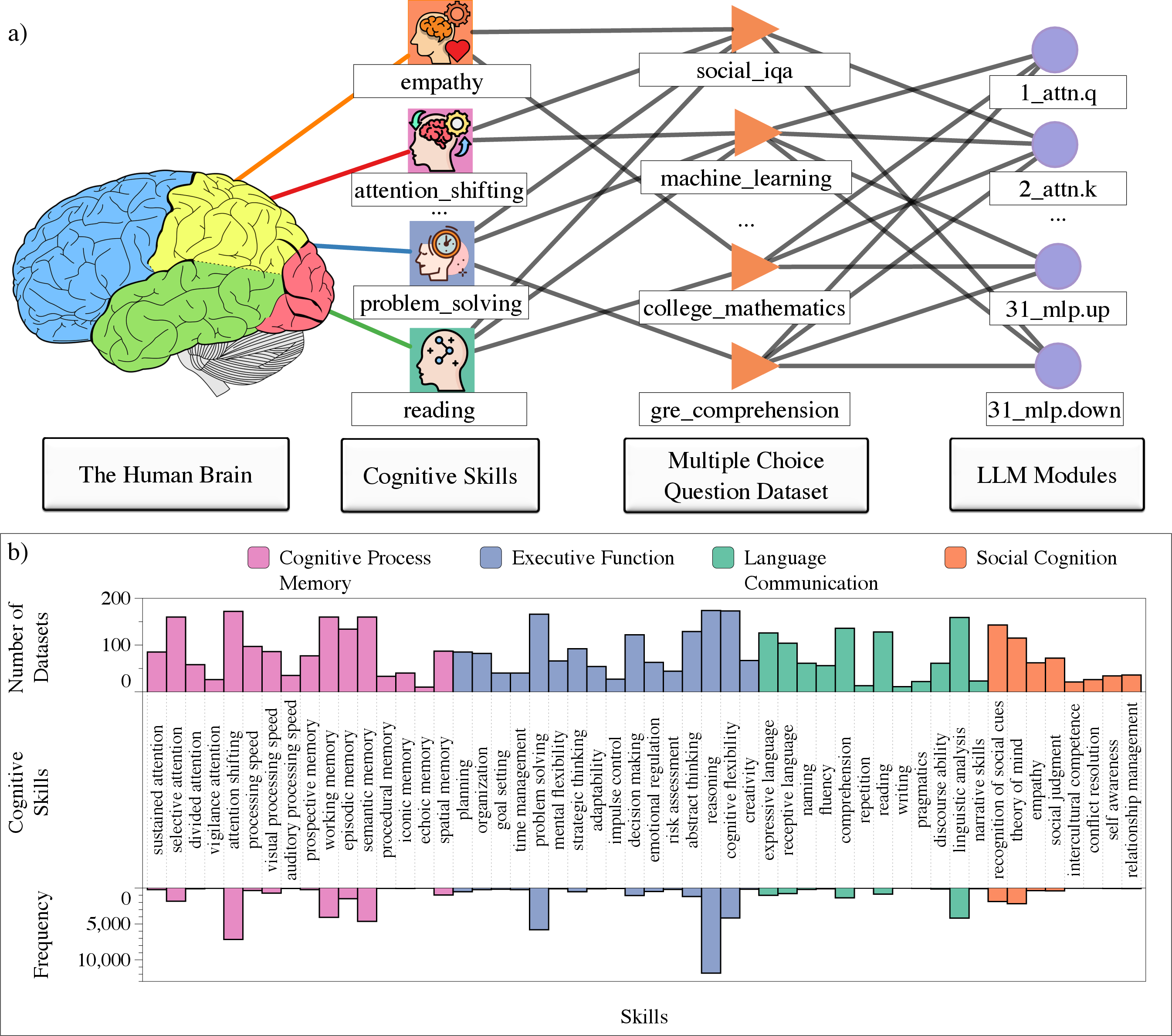

The core methodological innovation is the construction of a multipartite network linking cognitive skills, datasets, and LLM modules. Cognitive skills are defined based on established cognitive science taxonomies and mapped to multiple-choice question datasets using LLM-based annotation. Datasets are then associated with LLM modules via structural pruning (LLM-Pruner), quantifying the impact of each dataset on specific modules.

Figure 1: Schematic of the multipartite network connecting cognitive skills, datasets, and LLM modules, with bar plots showing the frequency of cognitive functions across datasets.
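LLM-Pruner ranks structures by how much the loss is expected to change when they are removed. The sketch below assumes an element-wise first-order Taylor score, |w · ∂L/∂w| summed over a module's parameters, with gradients already collected on each dataset. The dict layout, the module names, and the row normalization are illustrative assumptions, not the paper's exact pipeline.

```python
import numpy as np


def taylor_importance(weight: np.ndarray, grad: np.ndarray) -> float:
    """Element-wise first-order Taylor score |w * dL/dw|, summed over one module."""
    return float(np.sum(np.abs(weight * grad)))


def dataset_module_matrix(grads_by_dataset, weights, modules):
    """Build a (datasets x modules) importance matrix.

    grads_by_dataset: hypothetical layout {dataset: {module: gradient array}}
    weights:          {module: weight array}, same shapes as the gradients
    """
    datasets = sorted(grads_by_dataset)
    W = np.zeros((len(datasets), len(modules)))
    for i, d in enumerate(datasets):
        for j, m in enumerate(modules):
            W[i, j] = taylor_importance(weights[m], grads_by_dataset[d][m])
    # Assumption: normalize each row so a dataset's module scores sum to 1.
    row_sums = W.sum(axis=1, keepdims=True)
    W = W / np.where(row_sums == 0, 1.0, row_sums)
    return datasets, W
```

Each row of the result can then serve as the dataset-module edge weights of the bipartite graph. In a real model the gradients would come from backpropagating each dataset's loss through the unpruned network.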

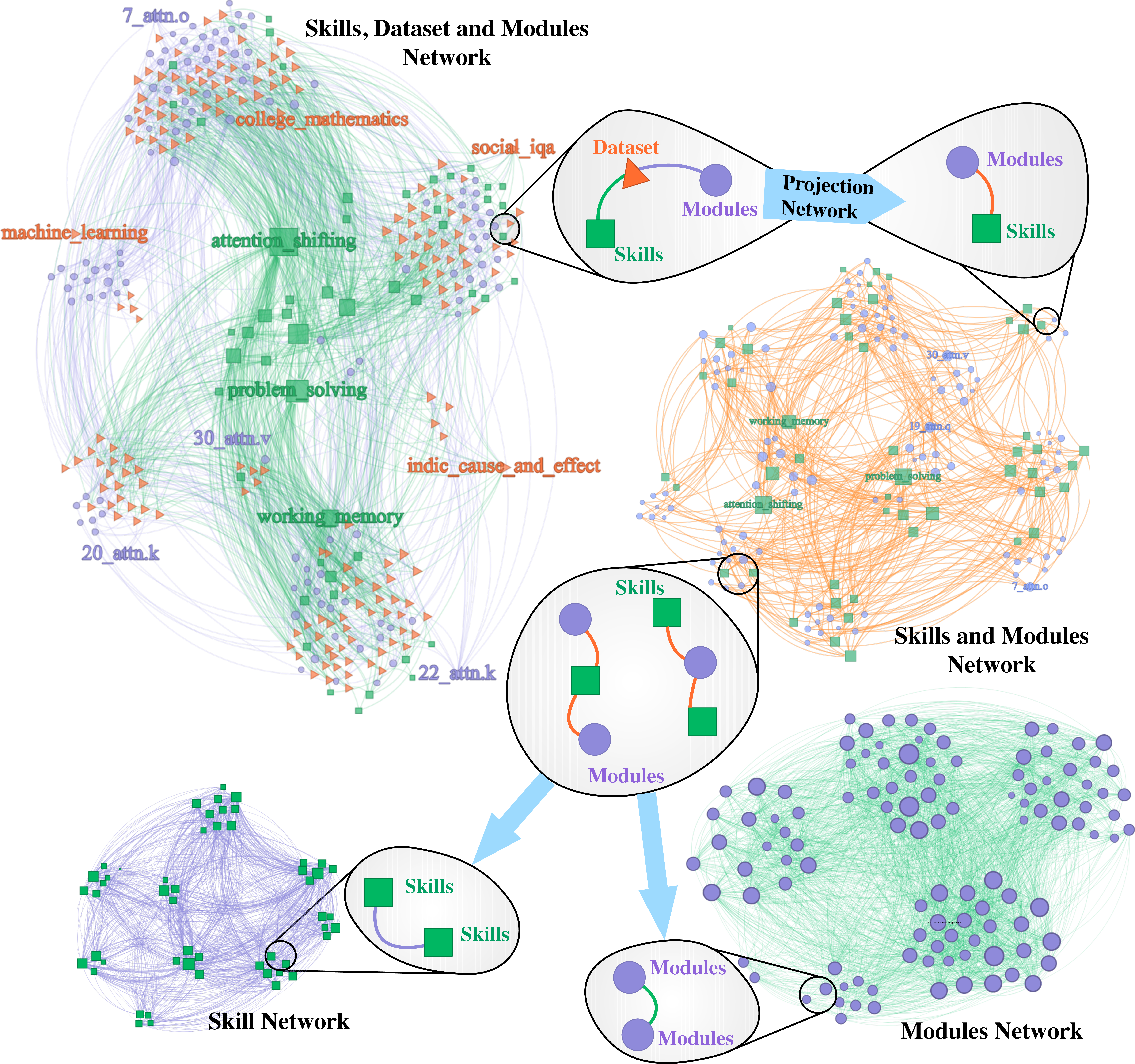

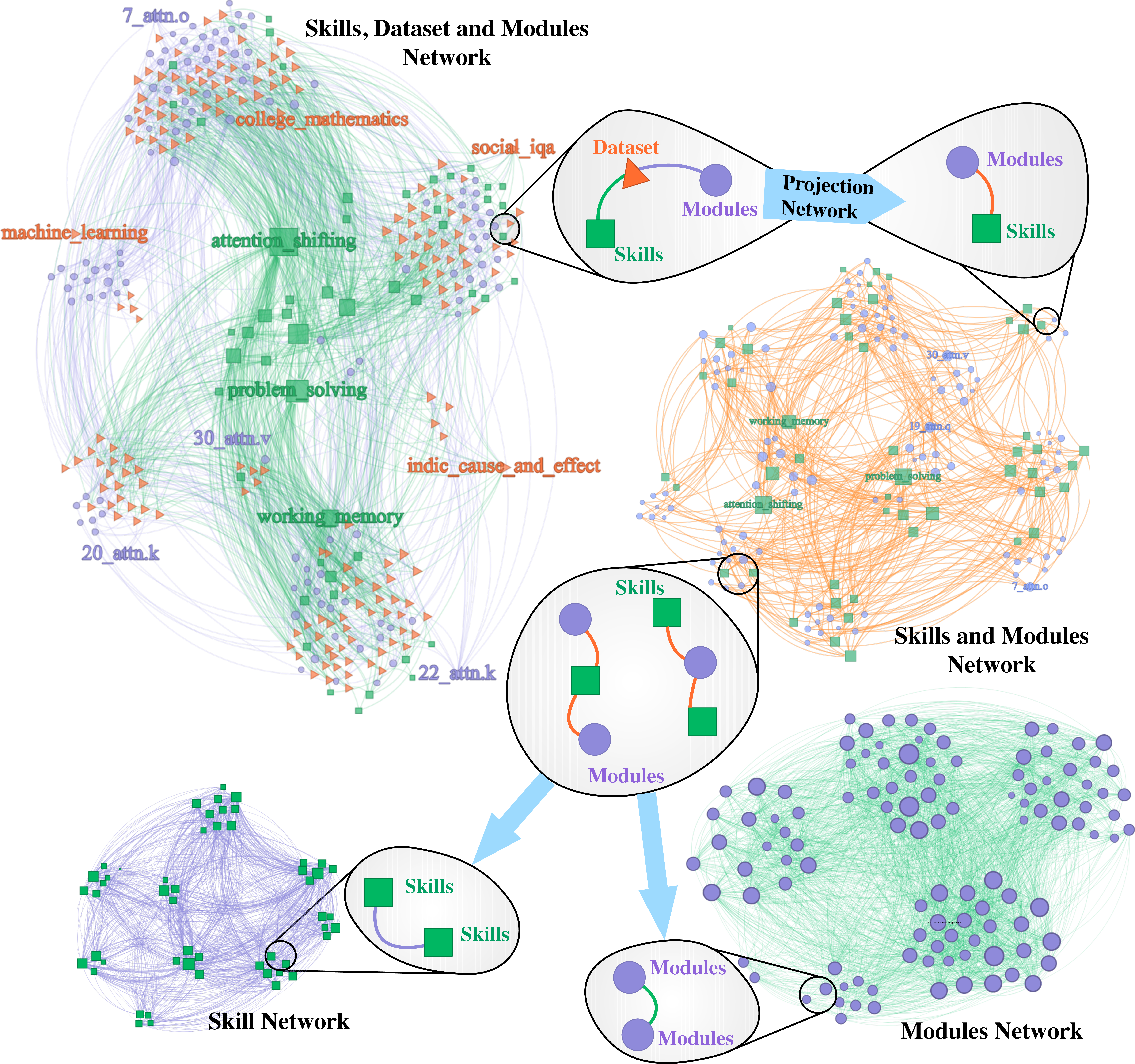

This approach enables the projection of skill-dataset and dataset-module bipartite graphs into a direct skill-module association, facilitating downstream analyses of skill localization and module community structure.

Figure 2: Visualization of the multipartite network for Llama2, with modules, datasets, and skills as nodes; the projection network highlights direct skill-module dependencies after collapsing intermediary datasets.
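Collapsing the dataset layer amounts to a matrix product. If A holds skill-dataset weights and W holds dataset-module weights, then P = A W scores each skill-module pair by summing over the datasets that connect them. The sketch below assumes both bipartite graphs are stored as dense NumPy matrices. As an additional assumption, it builds a module-module network from the cosine similarity of the modules' skill profiles; the paper's exact edge weighting may differ.

```python
import numpy as np


def project_skill_module(A: np.ndarray, W: np.ndarray) -> np.ndarray:
    """A: skills x datasets, W: datasets x modules -> skills x modules.

    Summing over the shared dataset index collapses the intermediary layer.
    """
    return A @ W


def module_similarity(P: np.ndarray) -> np.ndarray:
    """Cosine similarity between module skill profiles (columns of P).

    Assumption: modules sharing skill distributions get strong edges;
    self-loops are removed by zeroing the diagonal.
    """
    norms = np.linalg.norm(P, axis=0)
    norms[norms == 0] = 1.0  # avoid dividing by zero for unused modules
    X = P / norms
    S = X.T @ X
    np.fill_diagonal(S, 0.0)
    return S
```

The resulting similarity matrix is one way to obtain the weighted module network on which community detection runs.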

Community Structure and Skill Localization

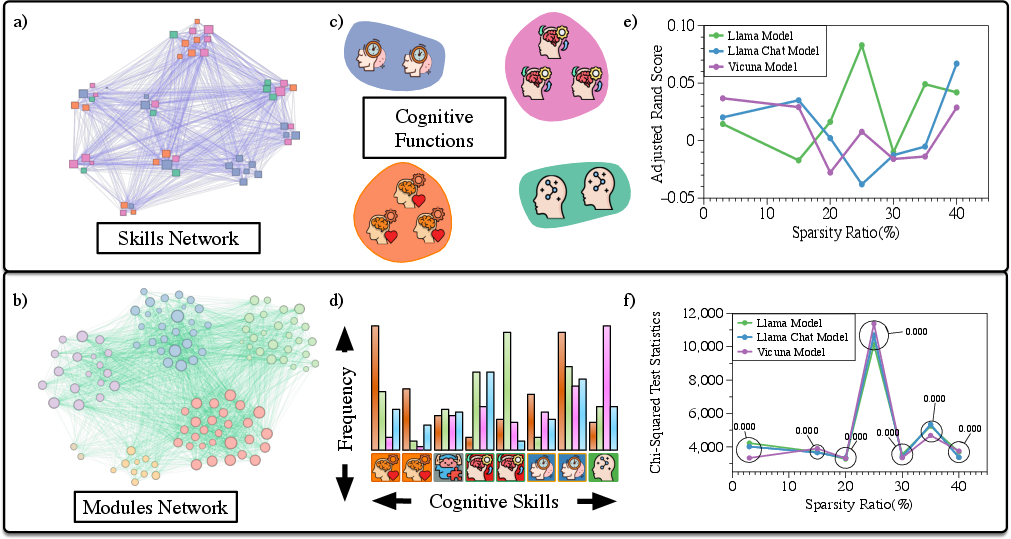

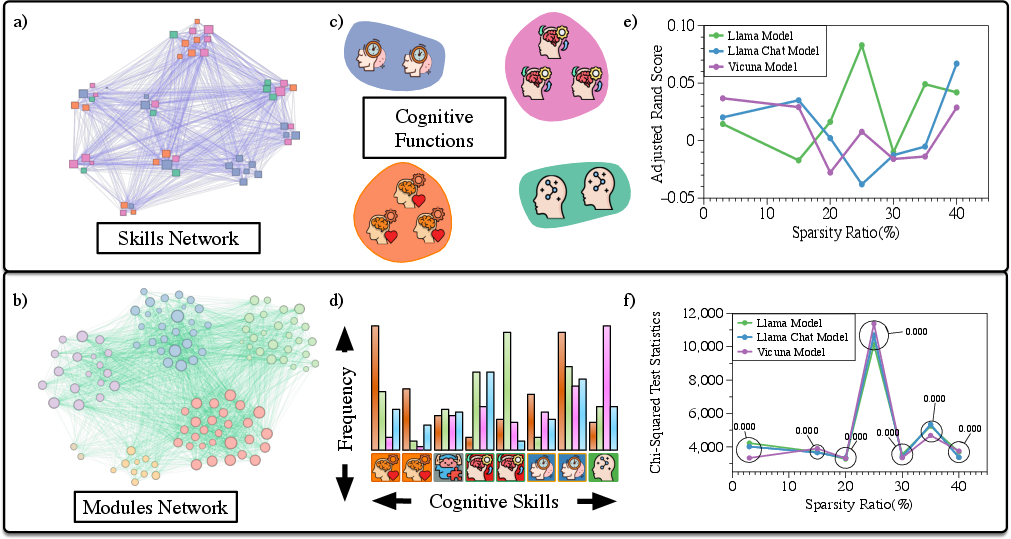

Community detection (Louvain algorithm) is applied to the projected skill and module networks to uncover latent organizational patterns. The analysis reveals that, while LLM modules form tightly interconnected communities based on shared skill distributions, these communities show no significant alignment with predefined cognitive function categories. Adjusted Rand Index (ARI) and chi-squared tests find no significant association between skill allocation and cognitive function labels, a result that holds across pruning strategies and across different LLM architectures (Llama, Llama-Chat, Vicuna).
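The Louvain algorithm greedily moves nodes between communities to maximize Newman modularity, Q = (1/2m) · Σ_ij [A_ij - k_i·k_j/(2m)] · δ(c_i, c_j), where k_i is the weighted degree of node i and 2m is the total edge weight. A minimal NumPy sketch of that objective, assuming a symmetric weighted adjacency matrix; it scores a given partition and does not implement the Louvain search itself.

```python
import numpy as np


def modularity(S, communities) -> float:
    """Newman modularity Q of a partition of a weighted, undirected graph.

    S: symmetric adjacency matrix (assumed to have non-zero total weight).
    communities: one community label per node.
    """
    S = np.asarray(S, dtype=float)
    labels = np.asarray(communities)
    k = S.sum(axis=1)            # weighted degree of each node
    two_m = k.sum()              # total weight counted in both directions
    same = labels[:, None] == labels[None, :]   # delta(c_i, c_j)
    B = S - np.outer(k, k) / two_m              # modularity matrix
    return float((B * same).sum() / two_m)
```

In practice, recent networkx releases provide `nx.community.louvain_communities(G, weight="weight", seed=...)` to search for a high-modularity partition and `nx.community.modularity` to score it.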

Figure 3: Community structure comparison between skills and modules; skills are grouped by detected communities and colored by cognitive function, with ARI and chi-squared tests quantifying alignment.
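Both alignment checks reduce to a contingency table between two labelings of the same skills: the detected community and the predefined cognitive function. A stdlib-only sketch, assuming each skill carries exactly one label of each kind. An ARI near 0 indicates chance-level agreement, and the chi-squared statistic measures departure from independence. For a p-value, the same table can be passed to `scipy.stats.chi2_contingency` with `correction=False` to match the uncorrected statistic computed here.

```python
from collections import Counter
from math import comb


def contingency(labels_a, labels_b):
    """Counts of items sharing each (label_a, label_b) pair."""
    rows, cols = sorted(set(labels_a)), sorted(set(labels_b))
    counts = Counter(zip(labels_a, labels_b))
    return [[counts[(r, c)] for c in cols] for r in rows]


def adjusted_rand_index(labels_a, labels_b) -> float:
    """Pair-counting agreement between two partitions, corrected for chance."""
    n = len(labels_a)
    if n < 2:
        return 1.0
    table = contingency(labels_a, labels_b)
    index = sum(comb(x, 2) for row in table for x in row)
    sum_a = sum(comb(sum(row), 2) for row in table)
    sum_b = sum(comb(sum(col), 2) for col in zip(*table))
    expected = sum_a * sum_b / comb(n, 2)
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:  # degenerate partitions (all singletons / one block)
        return 1.0
    return (index - expected) / (max_index - expected)


def chi_squared_statistic(table):
    """Pearson chi-squared statistic and degrees of freedom (no continuity correction)."""
    n = sum(map(sum, table))
    row_tot = [sum(r) for r in table]
    col_tot = [sum(c) for c in zip(*table)]
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            exp = row_tot[i] * col_tot[j] / n  # expected count under independence
            stat += (obs - exp) ** 2 / exp
    dof = (len(row_tot) - 1) * (len(col_tot) - 1)
    return stat, dof
```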

This result contrasts with the human brain, where cognitive skills tend to localize within distinct anatomical regions. In LLMs, skills are spread more broadly: each module community has a unique but functionally heterogeneous skill profile.

Spectral and Topological Properties of Module Networks

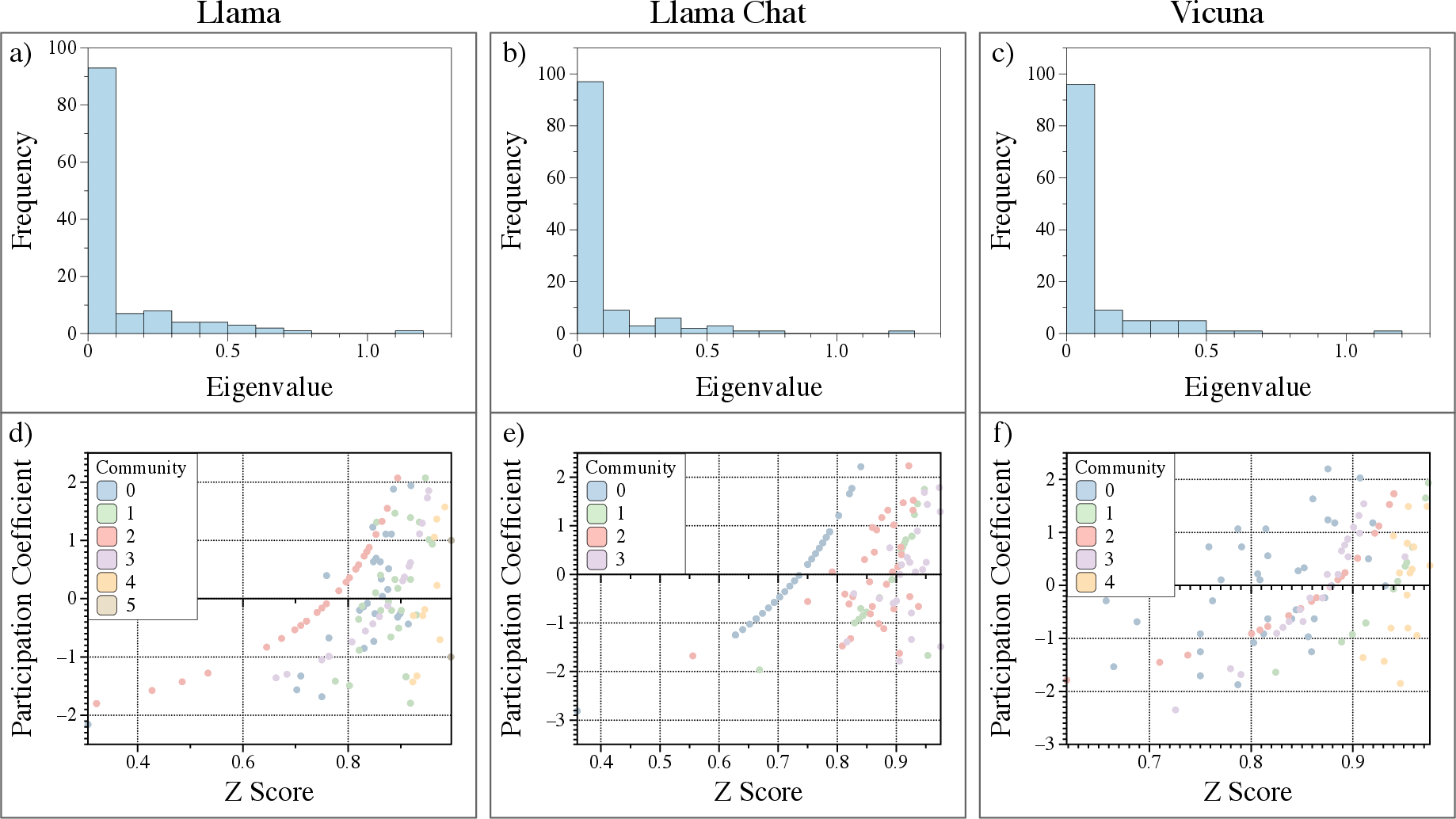

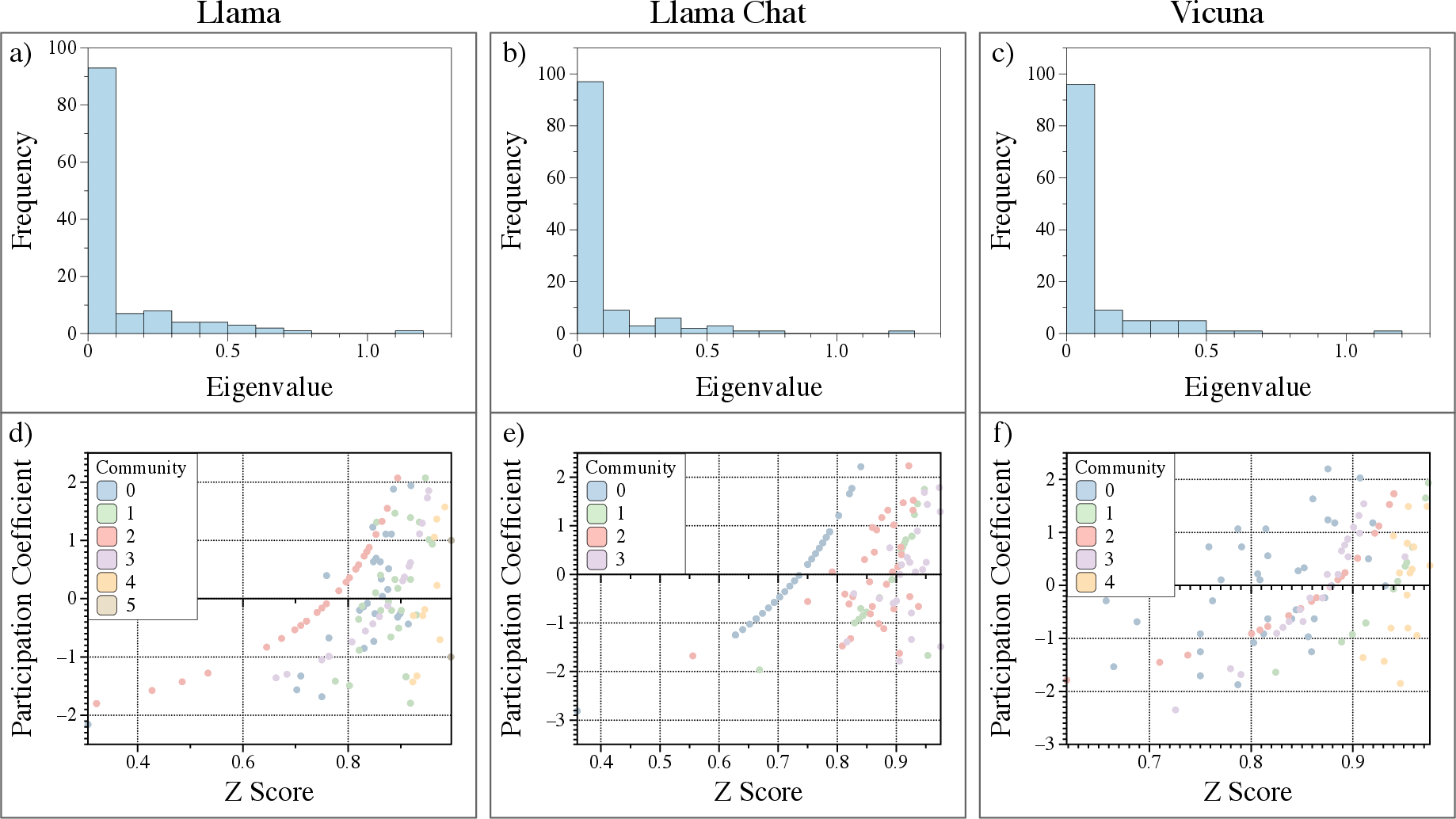

Spectral analysis of the module connectivity networks reveals well-defined community structures, as evidenced by distinct eigenvalue gaps. Participation coefficients and within-module degree Z-scores are computed to quantify the roles of individual modules within and across communities. High participation coefficients indicate that many modules act as bridges between communities, while Z-scores identify both influential hubs and peripheral modules.

Figure 4: Eigenvalue distributions, participation coefficients, and Z-scores for module networks, highlighting community structure and the integrative roles of specific modules.
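The eigengap heuristic and the Guimerà-Amaral node roles can be sketched in a few lines of NumPy. Assumptions: the module network is a symmetric, non-negative weighted adjacency matrix; community labels come from a prior detection step; and the gap is read off the normalized Laplacian L = I - D^(-1/2) S D^(-1/2). The participation coefficient is P_i = 1 - Σ_s (k_is / k_i)^2, and the within-module Z-score standardizes each node's within-community strength against the other members of its community.

```python
import numpy as np


def eigengap_communities(S, max_k=10):
    """Estimate the number of communities from the largest gap among the
    smallest eigenvalues of the normalized Laplacian."""
    S = np.asarray(S, dtype=float)
    deg = S.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    d_inv_sqrt[deg > 0] = deg[deg > 0] ** -0.5
    L = np.eye(len(S)) - d_inv_sqrt[:, None] * S * d_inv_sqrt[None, :]
    eigs = np.linalg.eigvalsh(L)  # ascending order
    gaps = np.diff(eigs[: max_k + 1])
    return int(np.argmax(gaps)) + 1, eigs


def participation_and_z(S, communities):
    """Participation coefficient and within-module degree Z-score per node."""
    S = np.asarray(S, dtype=float)
    labels = np.asarray(communities)
    uniq = np.unique(labels)
    k = S.sum(axis=1)
    # k_is[i, s]: strength of node i toward community s.
    k_is = np.stack([S[:, labels == c].sum(axis=1) for c in uniq], axis=1)
    safe_k = np.where(k > 0, k, 1.0)
    P = 1.0 - ((k_is / safe_k[:, None]) ** 2).sum(axis=1)
    P[k == 0] = 0.0  # isolated nodes get P = 0 by convention
    # Strength of each node toward its own community.
    own = k_is[np.arange(len(S)), np.searchsorted(uniq, labels)]
    z = np.zeros(len(S))
    for c in uniq:
        members = labels == c
        sd = own[members].std()
        if sd > 0:
            z[members] = (own[members] - own[members].mean()) / sd
    return P, z
```

A node whose edges all stay inside its community gets P = 0; a bridge node whose strength is split evenly across many communities approaches P = 1.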

These findings suggest that, similar to biological neural systems, LLMs balance modular specialization with global integration. However, the degree of functional localization is weaker, with extensive cross-community interactions supporting distributed processing.

Fine-Tuning and Functional Specialization

To probe the functional relevance of module communities, the authors conduct targeted fine-tuning experiments. Models are fine-tuned on datasets aligned with specific module communities, on random module subsets, on all modules, or not at all. The magnitude of weight changes is greatest for community-based fine-tuning, but accuracy improvements are highest when all modules are fine-tuned. Community-based and random-module fine-tuning yield comparable performance, indicating that skill-relevant modules do not confer a clear advantage in task adaptation.
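The four conditions differ only in which modules receive gradient updates. In the hedged sketch below, the strategy names are hypothetical, and the random control is assumed to be size-matched to the community (the summary does not state how large the random subsets were). Relative weight change is measured as ||W_after - W_before||_F / ||W_before||_F per module, which is one plausible reading of "magnitude of weight changes".

```python
import random

import numpy as np


def select_trainable(all_modules, strategy, community=None, seed=0):
    """Return the set of module names to unfreeze under a hypothetical strategy label."""
    if strategy == "none":
        return set()
    if strategy == "all":
        return set(all_modules)
    if strategy == "community":
        return set(community)
    if strategy == "random":
        # Assumption: size-matched random control with the same module count as the community.
        rng = random.Random(seed)
        return set(rng.sample(sorted(all_modules), len(community)))
    raise ValueError(f"unknown strategy: {strategy}")


def relative_weight_change(before, after):
    """Per-module Frobenius norm of the update relative to the original weights.

    Assumes every original weight tensor has a non-zero norm.
    """
    return {
        m: float(np.linalg.norm(after[m] - before[m]) / np.linalg.norm(before[m]))
        for m in before
    }
```

In PyTorch, the returned set could be applied by calling `param.requires_grad_(module_name in trainable)` on each parameter before building the optimizer.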

This result is consistent with the weak-localization architecture observed in avian and small mammalian brains, where cognitive functions are distributed and supported by dynamic cross-regional interactions, rather than the strong localization seen in the human cortex.

Implications and Future Directions

The paper provides several key insights:

- Distributed Skill Encoding: LLMs encode cognitive skills in a distributed manner across module communities, lacking the strict functional localization characteristic of the human brain.

- Community Structure without Functional Alignment: Emergent module communities are not aligned with cognitive function categories, challenging the assumption that architectural modularity in LLMs mirrors biological specialization.

- Fine-Tuning Strategies: Targeted fine-tuning of skill-relevant modules performs no better than fine-tuning a random module subset and falls short of fine-tuning all modules, underscoring the importance of distributed learning dynamics.

- Interpretability and Model Design: The network-based framework offers a principled approach for interpreting LLM internals and suggests that future fine-tuning and pruning strategies should leverage global dependencies rather than rigid modular interventions.

The findings motivate further research into more granular definitions of cognitive skills, the scalability of network-based interpretability to larger models, and the development of optimization strategies that exploit the distributed nature of knowledge representation in LLMs.

Conclusion

This work advances the interpretability of LLMs by integrating cognitive science, network theory, and machine learning. The multipartite network framework reveals that, unlike the human brain, LLMs exhibit a distributed, community-based organization of cognitive skills without strict functional localization. These results have significant implications for model interpretability, fine-tuning, and the design of future AI systems, highlighting the need to move beyond modular interventions toward strategies that harness the full complexity of distributed representations.