- The paper introduces UQ, a benchmark that curates 500 unsolved real-world questions from Stack Exchange to challenge LLMs.

- It employs a three-stage pipeline (rule-based filtering, LLM-based filtering, and human review) to ensure question quality and difficulty.

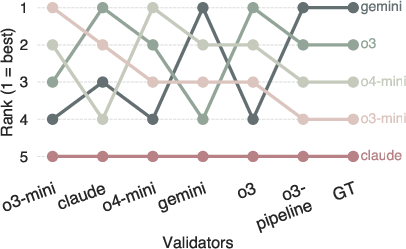

- The study reveals a generator-validator gap and highlights challenges in oracle-free evaluation, including unstable model rankings across validators.

UQ: Assessing LLMs on Unsolved Questions

Motivation and Benchmark Paradigm Shift

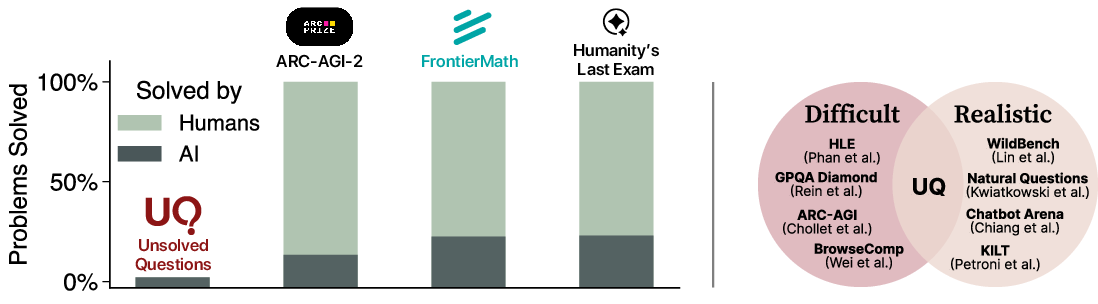

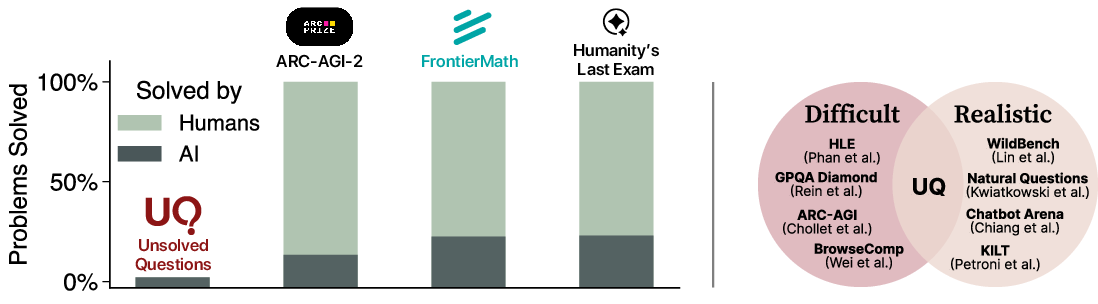

The paper introduces UQ, a benchmark and evaluation framework that fundamentally departs from traditional exam-style or user-query-based benchmarks by focusing on unsolved, real-world questions. The motivation is twofold: (1) existing benchmarks are rapidly saturated by frontier LLMs, and (2) there is a persistent tension between constructing benchmarks that are both difficult and realistic. Exam-based benchmarks can be made arbitrarily hard but often lack real-world relevance, while user-query-based benchmarks are realistic but tend to be too easy and quickly saturated.

UQ addresses this by curating a dataset of 500 unsolved questions from Stack Exchange, targeting open problems that have resisted solution despite significant community engagement. This approach ensures both high difficulty and real-world relevance, as these questions are naturally posed by humans with genuine information needs.

Figure 1: UQ focuses on hard, open-ended problems not already solved by humans, addressing the difficulty-realism tradeoff in prior benchmarks.

Dataset Construction and Analysis

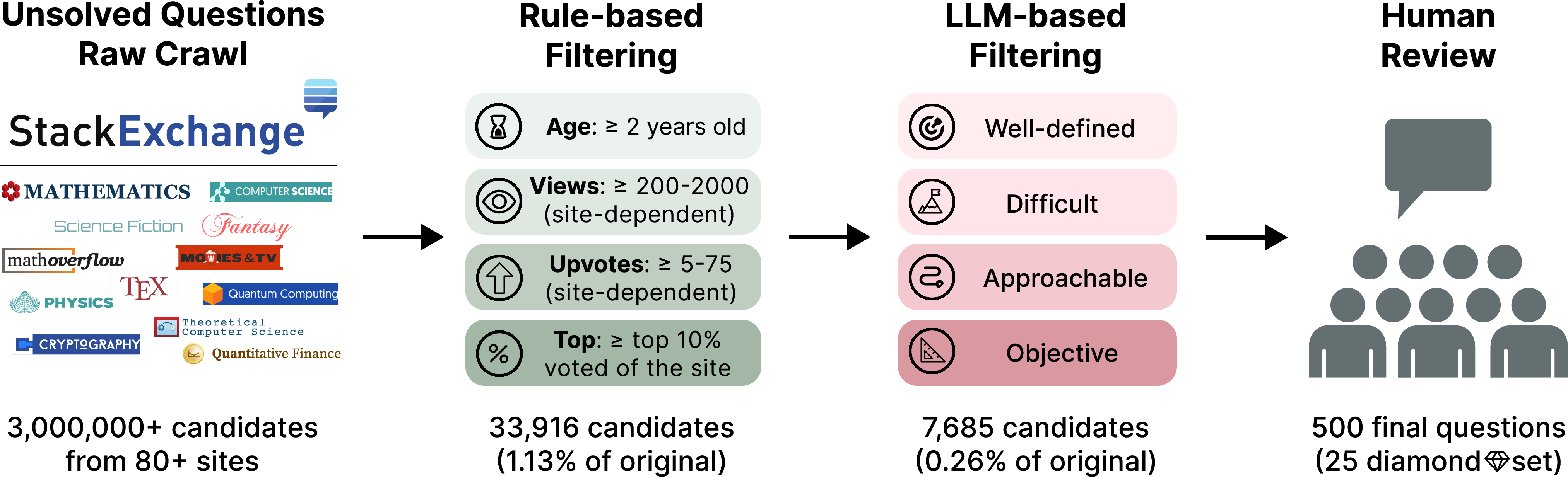

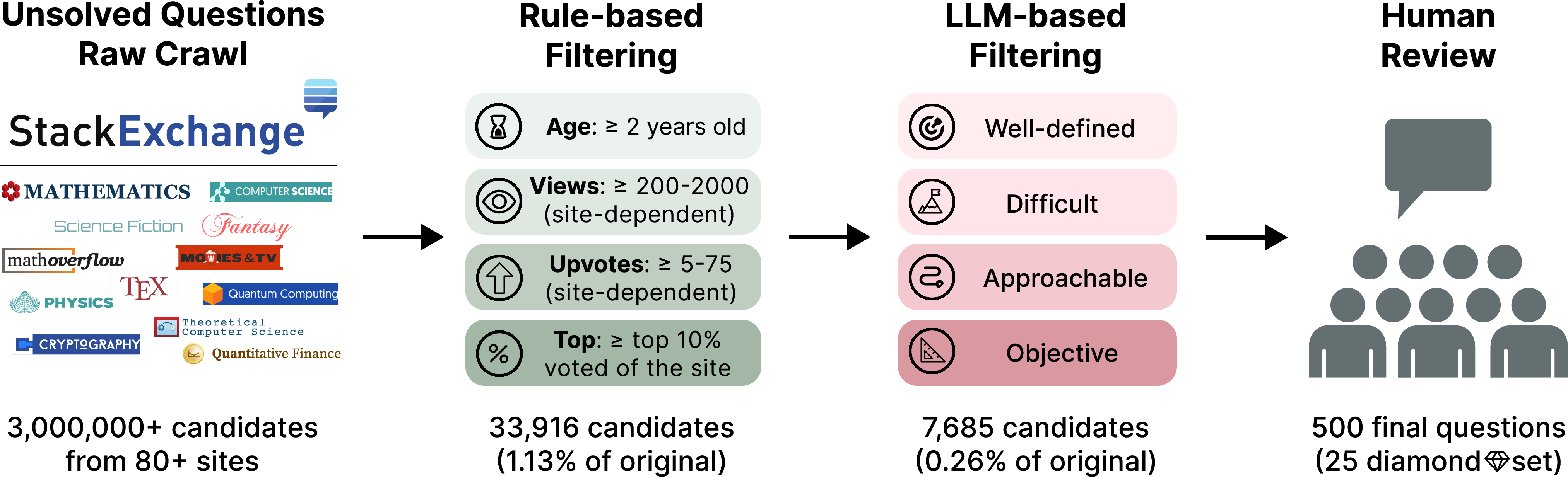

The UQ dataset is constructed via a three-stage pipeline:

- Rule-Based Filtering: From over 3 million unanswered questions across 80 Stack Exchange sites, heuristic rules filter for age, engagement (views, upvotes), and absence of answers, reducing the pool to ~34,000 candidates.

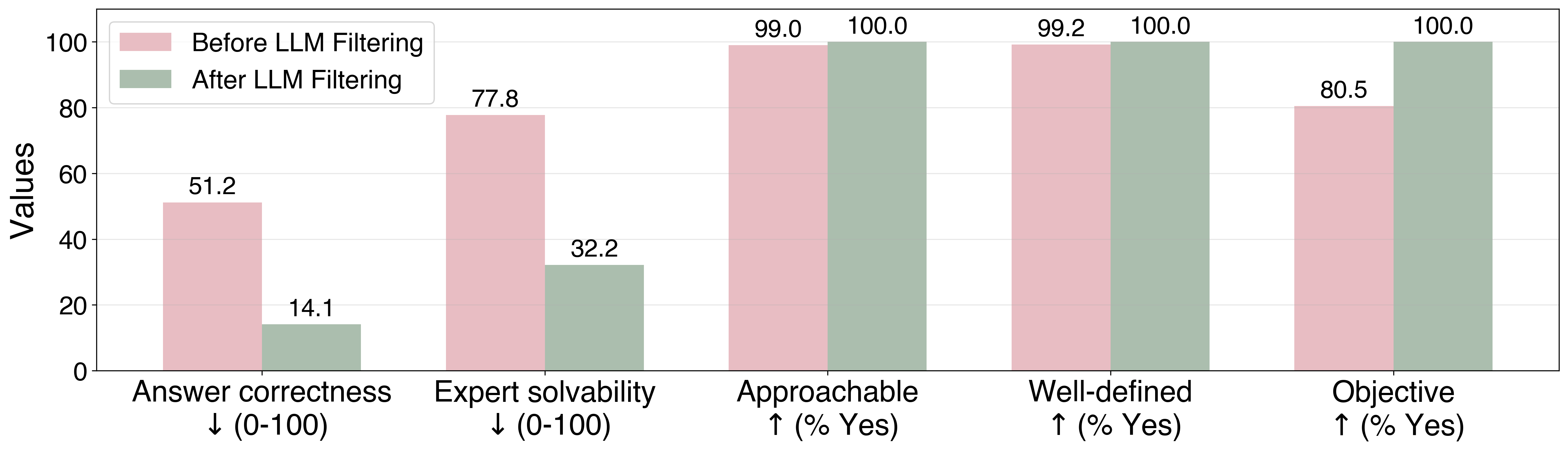

- LLM-Based Filtering: Candidate questions are further filtered using LLMs to assess well-definedness, difficulty (by both model answer correctness and expert solvability), approachability, and objectivity. Only questions that meet the binary quality criteria while scoring low on both model answer correctness and expert solvability are retained.

- Human Review: PhD-level annotators review the remaining questions, considering both the question and model-generated answers, to ensure high quality and diversity.

Figure 2: The dataset creation pipeline combines rule-based filters, LLM judges, and human review to ensure question quality and difficulty.
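The first two stages can be sketched as plain predicates. This is a minimal illustration, not the paper's implementation: the `Question` and `LLMJudgement` fields mirror the criteria named above, but every threshold (`MIN_AGE_DAYS`, `MIN_VIEWS`, `MIN_UPVOTES`, the 0.5 cut-offs) and the `judge` callable standing in for the LLM are hypothetical. Human review, the third stage, is deliberately left out.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical thresholds: the criteria (age, views, upvotes, no answers)
# come from the description above; the numbers are made up for illustration.
MIN_AGE_DAYS = 730
MIN_VIEWS = 500
MIN_UPVOTES = 3


@dataclass
class Question:
    site: str
    age_days: int
    views: int
    upvotes: int
    num_answers: int


@dataclass
class LLMJudgement:
    # Binary quality criteria, as judged by an LLM.
    well_defined: bool
    approachable: bool
    objective: bool
    # Difficulty estimates in [0, 1], as judged by an LLM.
    model_answer_correctness: float
    expert_solvability: float


def passes_rules(q: Question) -> bool:
    """Stage 1: cheap heuristics on question metadata only."""
    return (
        q.age_days >= MIN_AGE_DAYS
        and q.views >= MIN_VIEWS
        and q.upvotes >= MIN_UPVOTES
        and q.num_answers == 0
    )


def passes_llm_filter(j: LLMJudgement, max_correctness: float = 0.5,
                      max_solvability: float = 0.5) -> bool:
    """Stage 2: keep well-posed questions that look hard for models and experts."""
    quality_ok = j.well_defined and j.approachable and j.objective
    hard = (j.model_answer_correctness < max_correctness
            and j.expert_solvability < max_solvability)
    return quality_ok and hard


def candidate_pool(questions: Iterable[Question],
                   judge: Callable[[Question], LLMJudgement]) -> List[Question]:
    """Run stage 1, then stage 2; `judge` is a hypothetical LLM wrapper.
    Stage 3 (human review) happens outside this code."""
    survivors = [q for q in questions if passes_rules(q)]
    return [q for q in survivors if passes_llm_filter(judge(q))]
```

Running the cheap rule checks before the judge means the expensive LLM call only sees questions that already survived stage 1, which is the main reason to stage the filters in this order.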

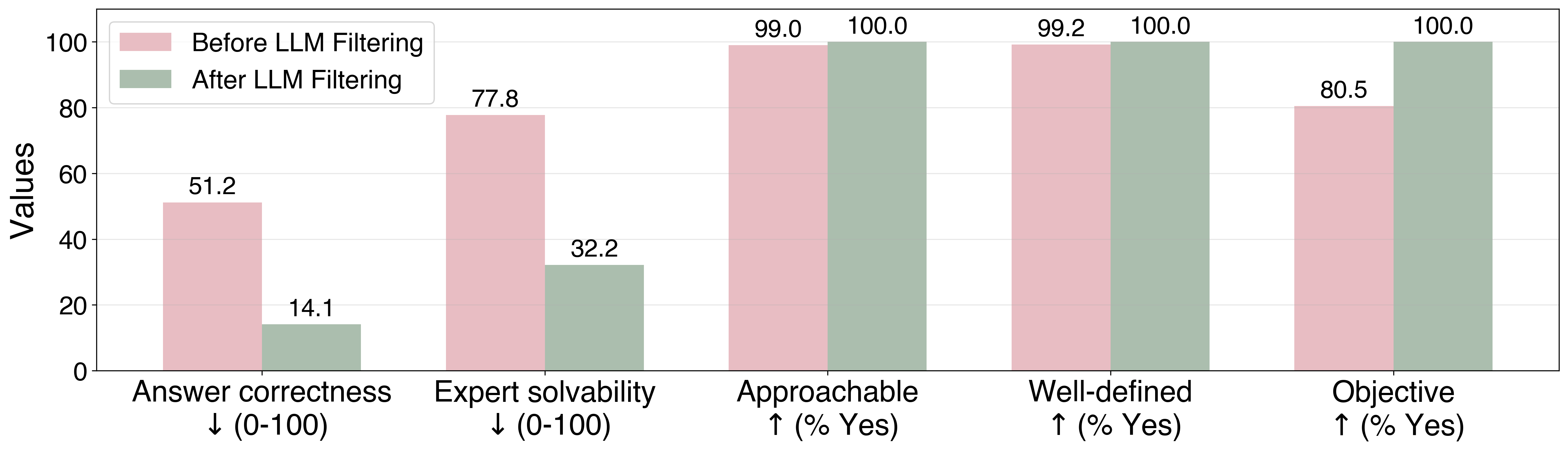

LLM-based filtering is shown to significantly increase question difficulty, as measured by both model answer correctness and expert solvability, while the quality metrics saturate at 100%.

Figure 3: LLM-based filters reduce the candidate pool and increase difficulty, with quality metrics saturating as non-conforming questions are discarded.

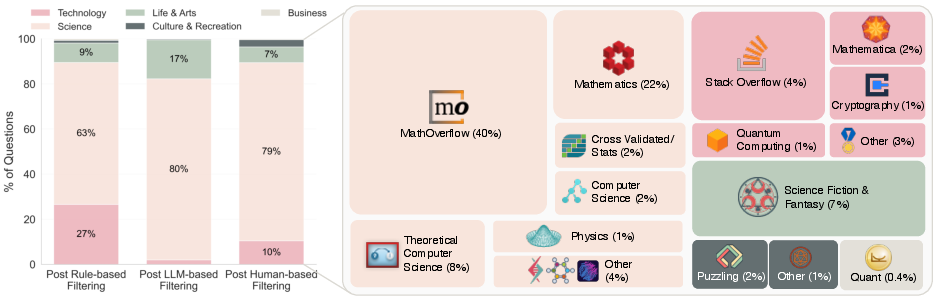

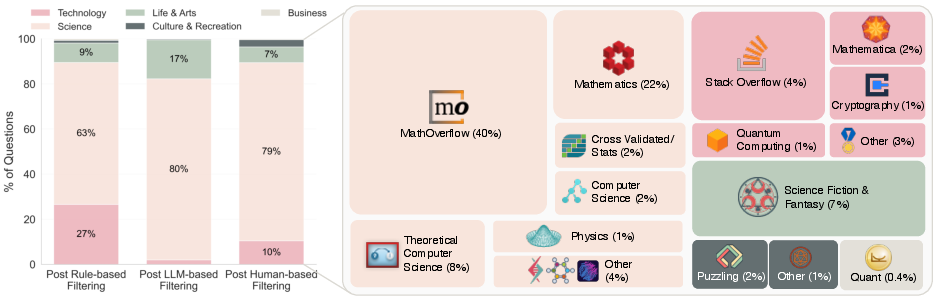

The final dataset is dominated by STEM topics, reflecting both Stack Exchange usage and the filtering criteria, but also includes questions from history, linguistics, and science fiction.

Figure 4: Question composition across filtering stages and Stack Exchange domains, with a majority in science and technology.

Oracle-Free Validation: Generator-Validator Gap and Strategies

A central challenge in evaluating model answers to unsolved questions is the absence of ground-truth answers. UQ addresses this by developing compound LLM-based validation strategies, referred to as "validators", that aim to rule out false answers and provide useful signals for downstream human review.

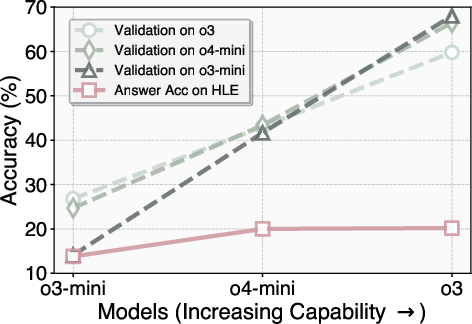

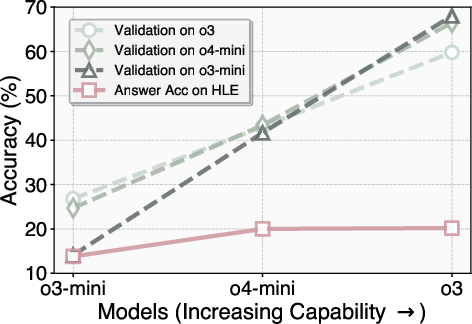

A key empirical finding is the generator-validator gap: as model capability increases, validation accuracy (i.e., the ability to judge whether an answer is correct) improves faster than answer generation accuracy. This gap is robust and transfers across datasets.

Figure 5: A model's ability to validate answers grows faster than its ability to generate them, supporting the use of LLM validators.
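The gap can be made concrete on a surrogate dataset that does have ground truth. The sketch below assumes one simple definition: generation accuracy is the fraction of a model's own answers that are correct, and validation accuracy is the fraction of its accept/reject verdicts on candidate answers that agree with the ground-truth labels. The function names are illustrative, and the paper's exact metric may differ.

```python
from typing import Sequence


def generation_accuracy(answer_is_correct: Sequence[bool]) -> float:
    """Fraction of the model's own answers that match ground truth."""
    return sum(answer_is_correct) / len(answer_is_correct)


def validation_accuracy(verdicts: Sequence[bool], labels: Sequence[bool]) -> float:
    """Fraction of accept/reject verdicts that agree with the ground-truth labels."""
    if len(verdicts) != len(labels):
        raise ValueError("verdicts and labels must have the same length")
    return sum(v == y for v, y in zip(verdicts, labels)) / len(labels)


def generator_validator_gap(answer_is_correct: Sequence[bool],
                            verdicts: Sequence[bool],
                            labels: Sequence[bool]) -> float:
    """Positive when the model judges answers better than it produces them."""
    return validation_accuracy(verdicts, labels) - generation_accuracy(answer_is_correct)
```

One caveat this definition makes visible: when most candidate answers are wrong, a validator that rejects everything already scores high accuracy, which is why precision and recall are worth tracking alongside it.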

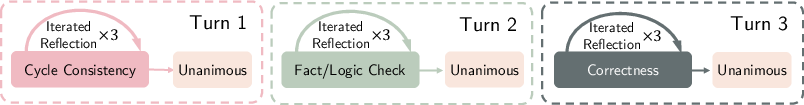

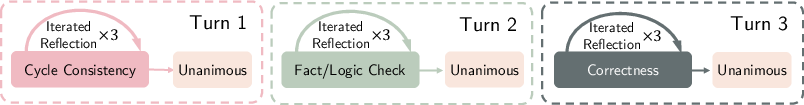

The validator design space is hierarchical:

- Low-level: Prompting for correctness, fact/logic check, and cycle consistency.

- Mid-level: Repeated sampling and iterated reflection.

- High-level: Majority/unanimous voting and sequential pipeline verification.

The default performant pipeline combines these strategies in a multi-stage process.

Figure 6: The default, performant validation pipeline used in UQ experiments.
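The hierarchy composes naturally as higher-order functions over a basic validator that maps a (question, answer) pair to accept or reject. In this sketch the low-level checks are any callables of that shape (in practice, prompted LLM calls); `repeated_sampling`, `iterated_reflection`, `majority`, `unanimous`, and `pipeline` are hypothetical names, and the composition in the usage note is illustrative rather than the paper's exact default configuration.

```python
from typing import Callable, List, Sequence

# A validator maps (question, answer) to True (accept) or False (reject).
Validator = Callable[[str, str], bool]


def majority(votes: List[bool]) -> bool:
    return sum(votes) > len(votes) / 2


def unanimous(votes: List[bool]) -> bool:
    return all(votes)


def repeated_sampling(base: Validator, k: int,
                      aggregate: Callable[[List[bool]], bool]) -> Validator:
    """Mid-level: query a (stochastic) validator k times, then vote."""
    def validator(question: str, answer: str) -> bool:
        return aggregate([base(question, answer) for _ in range(k)])
    return validator


def iterated_reflection(base: Validator,
                        reflect: Callable[[str, str, bool], bool],
                        rounds: int) -> Validator:
    """Mid-level: let the judge revisit its own previous verdict `rounds` times."""
    def validator(question: str, answer: str) -> bool:
        verdict = base(question, answer)
        for _ in range(rounds):
            verdict = reflect(question, answer, verdict)
        return verdict
    return validator


def pipeline(stages: Sequence[Validator]) -> Validator:
    """High-level: accept only if every stage accepts, checked in order."""
    def validator(question: str, answer: str) -> bool:
        # all() stops at the first rejecting stage, so later stages are skipped.
        return all(stage(question, answer) for stage in stages)
    return validator
```

For example, `pipeline([repeated_sampling(cycle_check, 3, unanimous), repeated_sampling(fact_check, 3, unanimous), correctness_check])`, where the three checks are hypothetical prompted validators, accepts an answer only if it survives every stage. Because `all()` short-circuits, later and often costlier stages run only on answers that passed the earlier ones.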

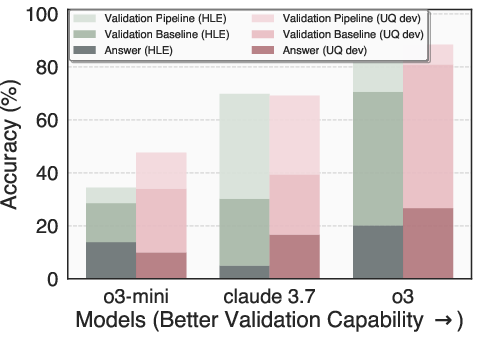

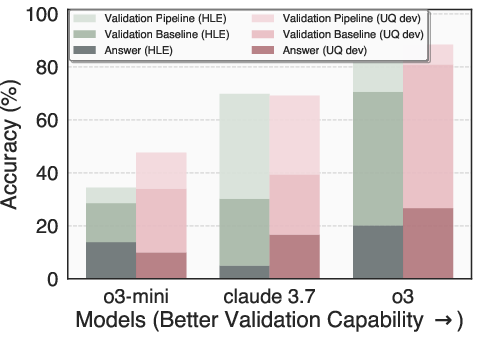

Empirical results on surrogate datasets (e.g., Humanity's Last Exam) show that compound validators outperform simple prompting baselines, with accuracy improvements from ~20% to over 80% depending on the model and strategy. However, high precision remains difficult to achieve, and there is a sharp tradeoff between precision and recall. Notably, stricter validators (e.g., more iterations or unanimity) do not always yield higher precision, indicating that validator strictness is not analogous to confidence thresholding.
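Precision and recall here are measured against surrogate ground truth: precision is the fraction of accepted answers that are actually correct, and recall is the fraction of correct answers that the validator accepts. A minimal sketch, assuming verdicts and labels are parallel lists of booleans; returning 0.0 when a denominator is empty is a convention chosen for this sketch.

```python
from typing import Sequence, Tuple


def precision_recall(verdicts: Sequence[bool],
                     labels: Sequence[bool]) -> Tuple[float, float]:
    """verdicts[i]: validator accepted answer i; labels[i]: answer i is actually correct."""
    if len(verdicts) != len(labels):
        raise ValueError("verdicts and labels must have the same length")
    pairs = list(zip(verdicts, labels))
    tp = sum(1 for v, y in pairs if v and y)        # correct and accepted
    fp = sum(1 for v, y in pairs if v and not y)    # wrong but accepted
    fn = sum(1 for v, y in pairs if not v and y)    # correct but rejected
    # Convention for this sketch: 0.0 when nothing is accepted or nothing is correct.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Computing this pair for validators of increasing strictness (more iterations, or unanimous instead of majority voting) is how one would check whether precision actually rises with strictness; the finding above is that it does not always.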

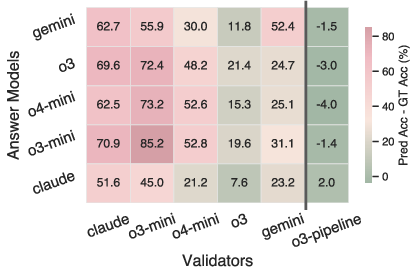

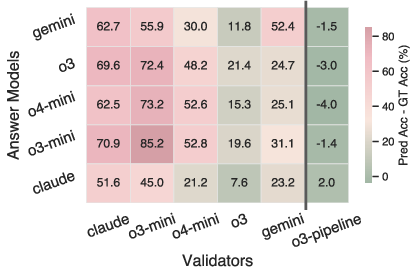

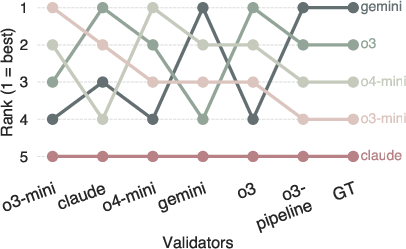

Validators also exhibit significant self- and sibling-model bias when naively applied, overrating answers from the same model family. Compound validation pipelines substantially mitigate this bias.

Figure 7: Left: LLM validators overrate answers from themselves and from sibling models. Right: model rankings are unstable across validators of varying strength, with only the strongest validator agreeing with the ground-truth ranking.
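One simple way to quantify self- and sibling-preference is to compare how much a validator overrates answers from its own family against how much it overrates everyone else's. The sketch below assumes each record is `(answer_family, verdict, label)` from a surrogate dataset with ground truth, and that `own_families` holds the validator's own family plus any siblings; the metric is an illustrative choice, not necessarily the one used in the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# (family that wrote the answer, validator accepted it?, answer actually correct?)
Record = Tuple[str, bool, bool]


def overrating_by_family(records: Iterable[Record]) -> Dict[str, float]:
    """Per answer family: acceptance rate minus true accuracy (positive = overrated)."""
    accepted: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for family, verdict, label in records:
        accepted[family] += verdict
        correct[family] += label
        total[family] += 1
    return {f: (accepted[f] - correct[f]) / total[f] for f in total}


def self_bias(records: Iterable[Record], own_families: Set[str]) -> float:
    """Mean overrating of own/sibling families minus mean overrating of the rest."""
    over = overrating_by_family(records)
    own = [v for f, v in over.items() if f in own_families]
    others = [v for f, v in over.items() if f not in own_families]
    if not own or not others:
        raise ValueError("need answers from both own and other families")
    return sum(own) / len(own) - sum(others) / len(others)
```

A value near zero would mean the validator is no more lenient with its own family than with others; the observation above is that naive validators land clearly above zero, and compound pipelines shrink that margin.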

Model rankings are highly unstable across validators of varying strength, cautioning against the use of oracle-free validators for automated leaderboards without human verification.
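Ranking instability can be quantified by ranking models by pass rate under each validator and comparing the rankings with Kendall's tau, which is 1.0 for identical orderings and -1.0 for reversed ones. A stdlib-only sketch; the pass-rate dictionaries are hypothetical inputs, and ties are broken alphabetically so that each ranking is a strict order.

```python
from itertools import combinations
from typing import Dict, List


def ranking(pass_rates: Dict[str, float]) -> List[str]:
    """Models ordered best-first; ties broken by name so the order is strict."""
    return sorted(pass_rates, key=lambda m: (-pass_rates[m], m))


def kendall_tau(rank_a: List[str], rank_b: List[str]) -> float:
    """Kendall's tau-a between two strict rankings of the same models."""
    if len(rank_a) < 2 or len(set(rank_a)) != len(rank_a) or sorted(rank_a) != sorted(rank_b):
        raise ValueError("need two strict rankings of the same >= 2 models")
    pos_a = {m: i for i, m in enumerate(rank_a)}
    pos_b = {m: i for i, m in enumerate(rank_b)}
    concordant = discordant = 0
    for x, y in combinations(rank_a, 2):
        # Positive product: both rankings order x and y the same way.
        if (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y]) > 0:
            concordant += 1
        else:
            discordant += 1
    n_pairs = len(rank_a) * (len(rank_a) - 1) // 2
    return (concordant - discordant) / n_pairs
```

Comparing each validator's ranking against the ground-truth ranking on a surrogate dataset yields one number per validator; values well below 1.0 for weaker validators would reflect the instability described above.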

UQ is complemented by an open platform (https://uq.stanford.edu) that hosts the dataset, candidate model answers, validation results, and full provenance for reproducibility. The platform enables:

- Browsing and sorting of questions and answers.

- Submission of new answers and reviews by model developers and users.

- Human reviews with correctness and confidence ratings.

- Display of validation results and additional AI reviews.

- Resolution statistics and model ranking based on verified solutions.

This infrastructure supports ongoing, asynchronous, and community-driven evaluation, with the goal of continuously updating the dataset as questions are solved and new unsolved questions are added.
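The platform's internals are not described here, so the following is only a hypothetical data model for how verified solutions could feed resolution statistics and a leaderboard. The `HumanReview` fields follow the review attributes listed above (correctness and confidence), while the 1 to 5 confidence scale, the `min_confidence` cut-off, and the rule that a solution counts as verified when at least one confident reviewer accepts it and no confident reviewer rejects it are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class HumanReview:
    question_id: str
    model: str        # who produced the reviewed answer
    correct: bool     # reviewer's correctness rating
    confidence: int   # hypothetical 1-5 scale


def verified_solutions(reviews: Iterable[HumanReview],
                       min_confidence: int = 4) -> Set[Tuple[str, str]]:
    """(model, question_id) pairs with >= 1 confident 'correct' and no confident 'incorrect'."""
    votes: Dict[Tuple[str, str], List[bool]] = defaultdict(list)
    for r in reviews:
        if r.confidence >= min_confidence:  # ignore low-confidence reviews
            votes[(r.model, r.question_id)].append(r.correct)
    return {key for key, vs in votes.items() if all(vs)}


def leaderboard(reviews: Iterable[HumanReview],
                min_confidence: int = 4) -> List[Tuple[str, int]]:
    """Models ranked by number of verified solutions (ties broken by name)."""
    counts: Dict[str, int] = defaultdict(int)
    for model, _ in verified_solutions(reviews, min_confidence):
        counts[model] += 1
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


def resolution_rate(reviews: Iterable[HumanReview], n_questions: int,
                    min_confidence: int = 4) -> float:
    """Fraction of benchmark questions with at least one verified solution."""
    solved = {q for _, q in verified_solutions(reviews, min_confidence)}
    return len(solved) / n_questions
```

Keying verification on (model, question) pairs lets the same reviews drive both per-model rankings and per-question resolution counts.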

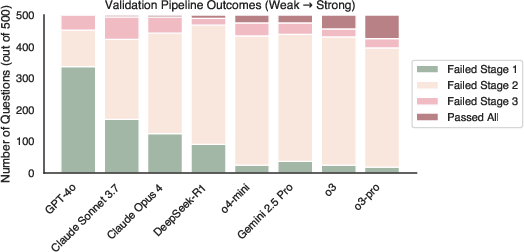

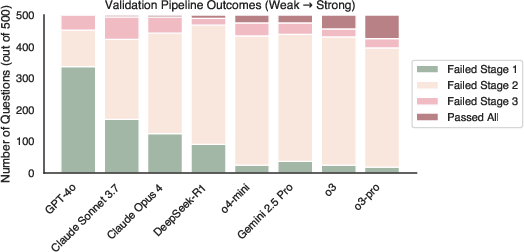

Frontier models are evaluated on the UQ dataset using the 3-iter pipeline validator. Pass rates are low: the top-performing model (o3-pro) passes validation on only 15% of questions, and most models pass on fewer than 5%. Human verification of validated answers reveals that only a small fraction are actually correct, with common failure modes including hallucinated references and incomplete solutions.

Figure 8: Validation outcomes across models show that stronger models fail less frequently in early stages and are more robust to multi-stage validation.
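Per-model results from a multi-stage validator reduce to a pass rate plus a breakdown of where answers were rejected, which is the kind of summary behind Figure 8. The sketch assumes each answer's outcome is recorded as the index of the stage that rejected it, or `None` if it passed every stage; that encoding is hypothetical.

```python
from collections import Counter
from typing import List, Optional, Sequence, Tuple


def summarize_outcomes(outcomes: Sequence[Optional[int]],
                       n_stages: int) -> Tuple[float, List[float]]:
    """outcomes[i]: index of the stage that rejected answer i, or None if it passed.
    Returns (pass rate, fraction of all answers rejected at each stage)."""
    n = len(outcomes)
    counts = Counter(outcomes)
    pass_rate = counts[None] / n
    rejected_at = [counts[s] / n for s in range(n_stages)]
    return pass_rate, rejected_at
```

For scale, a 15% pass rate over 500 questions corresponds to 75 validated answers, each of which still needs human verification given how few validated answers turn out to be correct.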

This highlights both the difficulty of the benchmark and the limitations of current models and validators. The platform is designed to facilitate ongoing human verification and dataset refreshes as models improve.

Implications, Limitations, and Future Directions

Practical Implications:

UQ provides a testbed for evaluating LLMs on genuinely hard, real-world problems where progress has direct value. The generator-validator gap supports the use of LLMs as triage tools for human reviewers, reducing the burden of expert verification. The open platform enables transparent, reproducible, and community-driven evaluation.

Theoretical Implications:

The instability of model rankings across validators and the difficulty of achieving high precision without ground truth highlight fundamental challenges in oracle-free evaluation. The generator-validator gap suggests that validation may be a distinct capability from generation, with implications for model architecture and training.

Limitations:

- The dataset is currently sourced entirely from Stack Exchange, with a STEM skew.

- Validator evaluation relies on surrogate datasets due to limited expert annotation budget.

- Oracle-free validation remains an open research problem, especially for domains requiring reference verification or formal proof checking.

- Early platform engagement may be biased toward LLM researchers rather than domain experts.

Future Work:

- Expanding the dataset to include more diverse sources and domains.

- Developing domain-specific validators (e.g., proof assistants, code execution).

- Exploring generator-validator interaction and co-training.

- Improving validator precision and controllability.

- Studying the dynamics of community-driven evaluation and incentives.

Conclusion

UQ establishes a new paradigm for LLM evaluation by focusing on unsolved, real-world questions and leveraging compound LLM-based validators and community-driven human verification. The benchmark exposes the limitations of current models and evaluation strategies, provides a scalable path for future research on oracle-free evaluation, and offers a dynamic, evolving testbed for measuring genuine progress in AI capabilities. As models improve and more questions are solved, UQ is positioned to serve as a foundation for research in hard-to-verify domains and the development of robust, trustworthy AI systems.