This paper explores the use of LLMs as judges to evaluate other LLM-based chat assistants, and introduces two benchmarks, MT-bench and Chatbot Arena, to verify the agreement between LLM judges and human preferences.

Here's a detailed breakdown:

Introduction

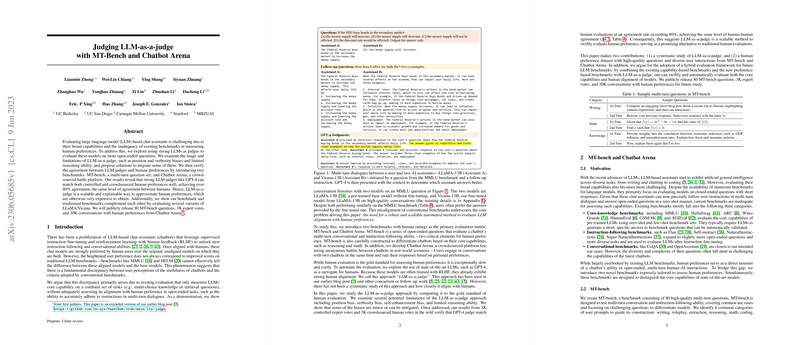

The paper addresses the difficulty of evaluating LLM-based chat assistants: their capabilities are broad, and existing benchmarks do not capture them well. It highlights a discrepancy between users' clear preference for aligned models and those models' scores on traditional LLM benchmarks such as MMLU and HELM. The core problem is the need for a robust, scalable, automated way to measure how well LLMs align with human preferences. To address this, the authors introduce MT-bench and Chatbot Arena and explore using state-of-the-art LLMs as judges.

MT-Bench and Chatbot Arena

The authors introduce two benchmarks with human ratings as the primary evaluation metric:

- MT-bench: A benchmark of 80 high-quality multi-turn questions designed to test multi-turn conversation and instruction-following ability. The questions cover common use cases and are deliberately challenging so that they differentiate models. They span eight categories with 10 questions each: writing, roleplay, extraction, reasoning, math, coding, knowledge I (STEM), and knowledge II (humanities/social science).

- Chatbot Arena: A crowdsourced platform featuring anonymous battles between chatbots in real-world scenarios. Users interact with two chatbots simultaneously and rate their responses based on personal preferences.
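To make the MT-bench layout concrete, here is a minimal Python sketch of how its questions might be represented and checked. The record type `MTBenchQuestion`, the function `check_benchmark`, and the category strings are hypothetical names chosen for illustration, not the benchmark's actual data format; the sketch only encodes the shape described above (eight categories, two turns per question) plus a 10-per-category split, which gives 8 × 10 = 80 questions.

```python
from collections import Counter
from dataclasses import dataclass

# The eight MT-bench categories named in the text (label strings chosen for this sketch).
CATEGORIES = (
    "writing",
    "roleplay",
    "extraction",
    "reasoning",
    "math",
    "coding",
    "knowledge I (STEM)",
    "knowledge II (humanities/social science)",
)


@dataclass(frozen=True)
class MTBenchQuestion:
    """Hypothetical record for one MT-bench question: a first turn plus a follow-up turn."""

    question_id: int
    category: str
    turns: tuple[str, str]  # (first-turn prompt, second-turn follow-up)

    def __post_init__(self) -> None:
        # Reject categories outside the eight listed above.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")


def check_benchmark(questions: list[MTBenchQuestion], per_category: int = 10) -> None:
    """Raise ValueError unless every category holds exactly `per_category` questions."""
    counts = Counter(q.category for q in questions)
    for category in CATEGORIES:
        if counts[category] != per_category:
            raise ValueError(
                f"{category}: expected {per_category} questions, got {counts[category]}"
            )
```

Keeping both turns in one record reflects that the second turn is a follow-up to the first and is only meaningful alongside it.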

LLM as a Judge

The paper explores the use of state-of-the-art LLMs, such as GPT-4, as a surrogate for humans in evaluating chat assistants. This approach, termed "LLM-as-a-judge," leverages the inherent human alignment exhibited by models trained with Reinforcement Learning from Human Feedback (RLHF).

Three variations of the LLM-as-a-judge approach are proposed:

- Pairwise comparison: An LLM judge is presented with a question and two answers and is asked to determine which one is better or to declare a tie.

- Single answer grading: An LLM judge is asked to directly assign a score to a single answer.

- Reference-guided grading: In certain cases, such as math problems, the LLM judge is also given a reference solution to grade against.
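The three variants differ mainly in what goes into the judge's prompt and what comes back out. The Python sketch below builds a pairwise prompt and a single-answer prompt (with an optional reference solution for reference-guided grading) and parses the verdict. The template wording, the function names, and the `[[A]]`/`[[B]]`/`[[C]]` and `[[rating]]` output conventions are assumptions made for this example, not the paper's exact prompts.

```python
import re
from typing import Optional

# Illustrative templates; the wording and output formats are assumptions, not the paper's prompts.
PAIRWISE_TEMPLATE = (
    "Compare the two AI assistant answers to the user question and decide which is better.\n"
    "Output your final verdict strictly as [[A]], [[B]], or [[C]] for a tie.\n\n"
    "[Question]\n{question}\n\n[Answer A]\n{answer_a}\n\n[Answer B]\n{answer_b}\n"
)
SINGLE_TEMPLATE = (
    "Rate the AI assistant answer to the user question on a scale of 1 to 10.\n"
    "Output your rating strictly as [[rating]], for example [[5]].\n\n"
    "{reference_block}[Question]\n{question}\n\n[Answer]\n{answer}\n"
)


def pairwise_prompt(question: str, answer_a: str, answer_b: str) -> str:
    """Pairwise comparison: one question, two answers."""
    return PAIRWISE_TEMPLATE.format(question=question, answer_a=answer_a, answer_b=answer_b)


def single_prompt(question: str, answer: str, reference: Optional[str] = None) -> str:
    """Single answer grading; passing `reference` turns it into reference-guided grading."""
    reference_block = "" if reference is None else f"[Reference Answer]\n{reference}\n\n"
    return SINGLE_TEMPLATE.format(reference_block=reference_block, question=question, answer=answer)


def parse_pairwise(judgment: str) -> Optional[str]:
    """Return 'A', 'B', or 'tie' from the last [[X]] verdict, or None if there is none."""
    verdicts = re.findall(r"\[\[([ABC])\]\]", judgment)
    if not verdicts:
        return None
    return {"A": "A", "B": "B", "C": "tie"}[verdicts[-1]]


def parse_rating(judgment: str) -> Optional[float]:
    """Return the last [[n]] score in the judgment as a float, or None if there is none."""
    scores = re.findall(r"\[\[(\d+(?:\.\d+)?)\]\]", judgment)
    return float(scores[-1]) if scores else None
```

Taking the last bracketed match rather than the first guards against a judge that restates the output format before giving its verdict.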

The advantages of LLM-as-a-judge are scalability and explainability. However, the paper also identifies limitations and biases of LLM judges:

- Position bias: An LLM judge tends to favor certain positions over others. Experiments with GPT-3.5 and Claude-v1 revealed strong position bias, with the models often favoring the answer shown first.

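- Verbosity bias: An LLM judge favors longer, more verbose responses, even when they are not as clear, high-quality, or accurate as shorter alternatives. To examine this bias, the authors designed a "repetitive list" attack, which makes answers containing a numbered list unnecessarily long by rephrasing the list without adding new information and inserting the rephrased copy before the original.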

- Self-enhancement bias: LLM judges may favor the answers generated by themselves. Win rates of six models under different LLM judges and humans show that some judges favor certain models. For example, GPT-4 favors itself with a 10% higher win rate, and Claude-v1 favors itself with a 25% higher win rate.

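- Limited capability in grading math and reasoning questions: LLMs are known to have limited math and reasoning capability, and a judge can be misled by the answers it is grading even on questions it could solve correctly on its own.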
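Position bias can be quantified by judging each pair twice, once in each order, and checking whether the two verdicts refer to the same underlying answer. The sketch below assumes each verdict is recorded as the position the judge preferred (`"first"`, `"second"`, or `"tie"`); the function name `position_bias_report` and the catch-all `"other"` bucket are illustrative choices, not definitions from the paper.

```python
from collections import Counter

FIRST, SECOND, TIE = "first", "second", "tie"
# The position that refers to the same underlying answer once the order is swapped.
_SWAP = {FIRST: SECOND, SECOND: FIRST, TIE: TIE}


def position_bias_report(pairs: list[tuple[str, str]]) -> dict[str, float]:
    """Summarize (original-order verdict, swapped-order verdict) pairs as fractions.

    Each verdict names the position the judge preferred: 'first', 'second', or 'tie'.
    """
    if not pairs:
        raise ValueError("need at least one pair of verdicts")
    counts = Counter()
    for original, swapped in pairs:
        if original == _SWAP[swapped]:
            counts["consistent"] += 1      # same answer (or a tie) in both orders
        elif original == swapped == FIRST:
            counts["biased_first"] += 1    # whichever answer is shown first wins
        elif original == swapped == SECOND:
            counts["biased_second"] += 1   # whichever answer is shown second wins
        else:
            counts["other"] += 1           # e.g. a tie in one order, a win in the other
    keys = ("consistent", "biased_first", "biased_second", "other")
    return {key: counts[key] / len(pairs) for key in keys}
```

A judge with strong position bias shows a low `consistent` fraction and a large `biased_first` fraction.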

To address these limitations, the paper presents several methods:

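- Swapping positions: The judge is called twice with the order of the two answers swapped. An answer is declared the winner only if it is preferred in both orders; inconsistent results are treated as a tie.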

- Few-shot judge: Using few-shot examples can significantly increase the consistency of GPT-4.

- Chain-of-thought and reference-guided judge: With a chain-of-thought prompt, the LLM judge first answers the question independently and then grades the answers. With a reference-guided prompt, the judge is instead given a reference answer to grade against.

- Fine-tuning a judge model: Vicuna-13B is fine-tuned on Chatbot Arena data to act as a judge.
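The position-swapping mitigation can be sketched as a wrapper around any pairwise judge. Here `judge` is a hypothetical callable that returns the preferred position (`"first"`, `"second"`, or `"tie"`); in practice it would wrap an LLM call. Following the conservative rule of declaring a winner only when both orders agree, any inconsistency is recorded as a tie.

```python
from typing import Callable

# Hypothetical judge interface: (question, first_answer, second_answer) -> 'first' | 'second' | 'tie'
Judge = Callable[[str, str, str], str]


def judge_with_swap(judge: Judge, question: str, answer_a: str, answer_b: str) -> str:
    """Return 'A' or 'B' only if the judge prefers that answer in both orders; else 'tie'."""
    verdict_ab = judge(question, answer_a, answer_b)  # A shown first
    verdict_ba = judge(question, answer_b, answer_a)  # B shown first
    winner_ab = {"first": "A", "second": "B", "tie": "tie"}[verdict_ab]
    winner_ba = {"first": "B", "second": "A", "tie": "tie"}[verdict_ba]
    # Inconsistent verdicts across the two orders are conservatively treated as a tie.
    return winner_ab if winner_ab == winner_ba else "tie"
```

For example, a judge that always answers `"first"` yields `"tie"` for every pair, while a judge that always prefers the longer answer still yields a winner: swapping neutralizes position bias but not verbosity bias.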

The paper also compares two designs for the multi-turn judge: (1) splitting the two turns into two separate prompts, or (2) displaying the complete conversations in a single prompt. It finds that the first design can leave the LLM judge struggling to locate the assistant's previous response precisely.
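A minimal sketch of design (2), assuming a simple text layout: both assistants' complete conversations are rendered into one prompt so the judge can see each earlier response in context. The marker strings, the instruction sentence, and the function names are hypothetical.

```python
def render_conversation(label: str, user_turns: list[str], assistant_turns: list[str]) -> str:
    """Render one assistant's full multi-turn conversation as a labeled text block."""
    if len(user_turns) != len(assistant_turns):
        raise ValueError("each user turn needs exactly one assistant response")
    parts = [f"<|The Start of Assistant {label}'s Conversation with User|>"]
    for turn, (user_msg, reply) in enumerate(zip(user_turns, assistant_turns), start=1):
        parts.append(f"### User (turn {turn}):\n{user_msg}")
        parts.append(f"### Assistant {label} (turn {turn}):\n{reply}")
    parts.append(f"<|The End of Assistant {label}'s Conversation with User|>")
    return "\n\n".join(parts)


def multi_turn_pairwise_prompt(
    user_turns: list[str], answers_a: list[str], answers_b: list[str]
) -> str:
    """Design (2): put both complete conversations in a single judge prompt."""
    instruction = (
        "Compare the two assistants' conversations below, focusing on their answers "
        "to the final user question."
    )  # hypothetical instruction text
    return "\n\n".join(
        [
            instruction,
            render_conversation("A", user_turns, answers_a),
            render_conversation("B", user_turns, answers_b),
        ]
    )
```

Labeling every turn explicitly is what lets the judge point to "Assistant A's first answer" instead of guessing which earlier response a follow-up refers to.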

Agreement Evaluation

The paper studies the agreement between different LLM judges and humans on the MT-bench and Chatbot Arena datasets. The agreement between two types of judges is defined as the probability that randomly selected individuals of each type (but not the same individual) agree on a randomly selected question. On MT-bench, GPT-4 shows very high agreement with human experts under both pairwise comparison and single answer grading. Under setup S2, which excludes tie votes, the agreement between GPT-4 and humans reaches 85%, higher even than the agreement among humans (81%). The Chatbot Arena data shows a similar trend.
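The agreement definition translates directly into code: for each question, compare every vote from one judge type with every vote from the other type (skipping pairs cast by the same individual), average the matches, then average over questions. The sketch below assumes votes are stored as `(judge_id, vote)` tuples with votes `"A"`, `"B"`, or `"tie"`; setting `include_ties=False` drops tie votes, in the spirit of setup S2. The data layout and names are assumptions for illustration.

```python
from itertools import product

# Hypothetical layout: question_id -> list of (judge_id, vote), vote in {'A', 'B', 'tie'}.
Votes = dict[str, list[tuple[str, str]]]


def agreement(votes_x: Votes, votes_y: Votes, include_ties: bool = True) -> float:
    """Probability that a random judge of type X and a different random judge of type Y
    agree on a random question (averaged over questions with at least one valid pair)."""
    per_question = []
    for qid in votes_x.keys() & votes_y.keys():
        xs = [(j, v) for j, v in votes_x[qid] if include_ties or v != "tie"]
        ys = [(j, v) for j, v in votes_y[qid] if include_ties or v != "tie"]
        # Pair every X vote with every Y vote, skipping pairs cast by the same individual.
        pairs = [(vx, vy) for (jx, vx), (jy, vy) in product(xs, ys) if jx != jy]
        if pairs:
            matches = sum(vx == vy for vx, vy in pairs)
            per_question.append(matches / len(pairs))
    if not per_question:
        raise ValueError("no question has comparable votes from both judge types")
    return sum(per_question) / len(per_question)
```

Passing the same votes as both arguments gives a within-type (for example, human-human) agreement, since same-individual pairs are skipped.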

The win-rate curves produced by LLM judges closely match those produced by humans. On the second turn of MT-bench, humans prefer proprietary models such as Claude and GPT-3.5 more strongly than on the first turn, suggesting that a multi-turn benchmark can better differentiate some advanced model abilities.
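Win-rate curves like these can be computed from the same battles judged by an LLM and by humans. The sketch below assumes each battle is a `(model_a, model_b, winner)` tuple and, as an explicit assumption rather than the paper's stated convention, counts a tie as half a win; `max_gap` is a hypothetical helper giving one simple measure of how far two judges' win rates diverge. Running it separately on first-turn and second-turn votes would expose the turn-level differences described above.

```python
from collections import defaultdict


def win_rates(battles: list[tuple[str, str, str]]) -> dict[str, float]:
    """Fraction of battles each model wins; winner is 'A', 'B', or 'tie'.

    Assumption for this sketch: a tie counts as half a win for each side.
    """
    wins = defaultdict(float)
    games = defaultdict(int)
    for model_a, model_b, winner in battles:
        games[model_a] += 1
        games[model_b] += 1
        if winner == "A":
            wins[model_a] += 1.0
        elif winner == "B":
            wins[model_b] += 1.0
        elif winner == "tie":
            wins[model_a] += 0.5
            wins[model_b] += 0.5
        else:
            raise ValueError(f"unexpected winner label: {winner!r}")
    return {model: wins[model] / games[model] for model in games}


def max_gap(rates_x: dict[str, float], rates_y: dict[str, float]) -> float:
    """Largest absolute win-rate difference between two judges over shared models."""
    return max(abs(rates_x[m] - rates_y[m]) for m in rates_x.keys() & rates_y.keys())
```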

Human Preference Benchmark and Standardized Benchmark

Human preference benchmarks such as MT-bench and Chatbot Arena are valuable complements to current standardized LLM benchmarks. The two kinds of benchmark measure different aspects of a model, so the recommended approach is to evaluate models comprehensively with both. The paper evaluates several model variants derived from LLaMA on MMLU, TruthfulQA (MC1), and MT-bench (GPT-4 judge) and finds that no single benchmark determines model quality, so a comprehensive evaluation is needed.

Discussion

The paper acknowledges limitations, such as neglecting safety and combining multiple dimensions into a single metric, and emphasizes the importance of addressing the biases in these methods. Future directions include benchmarking chatbots at scale with a broader set of categories, open-sourcing LLM judges aligned with human preferences, and enhancing the math and reasoning capability of open models.

Conclusion

The paper concludes that strong LLMs can achieve an agreement rate of over 80% with human experts, on par with the level of agreement among humans, establishing a foundation for an LLM-based evaluation framework.