- The paper presents a PostNAS pipeline that leverages pre-trained full-attention models and frozen MLPs to reduce pre-training costs while improving efficiency.

- It systematically selects linear attention blocks and introduces JetBlock, a dynamic convolution module that streamlines computation and enhances accuracy.

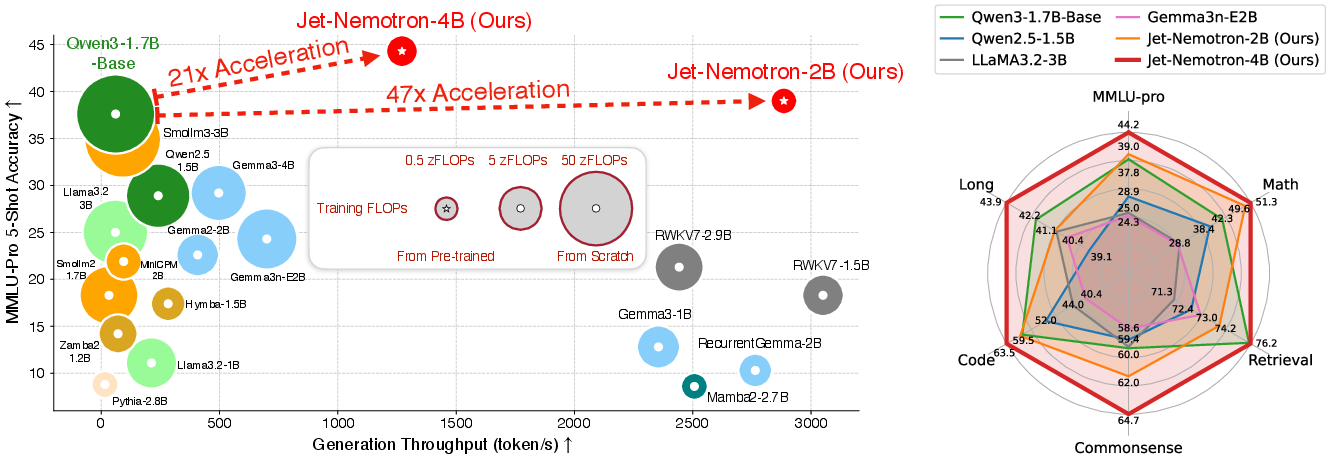

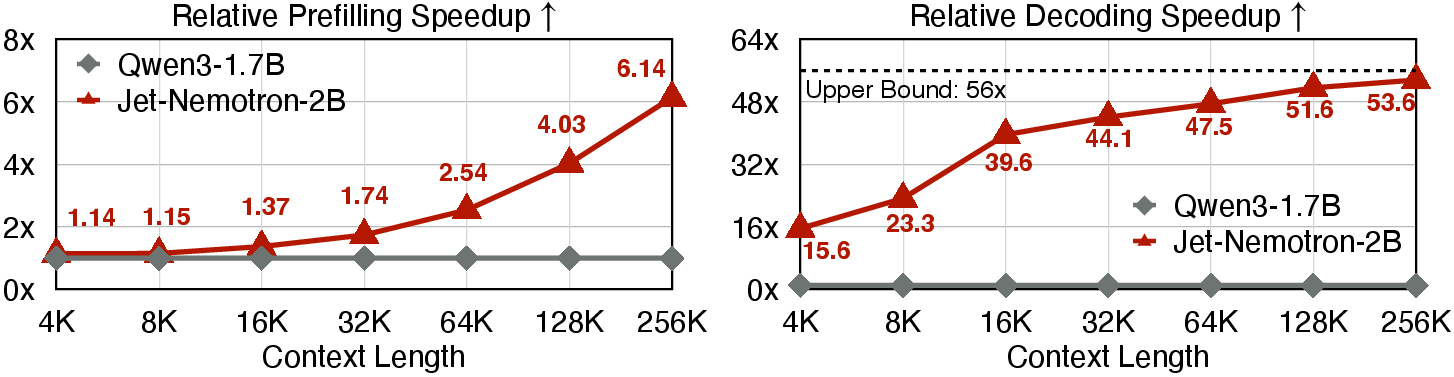

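- Empirical results show Jet-Nemotron-2B delivering a 47× generation-throughput gain over Qwen3-1.7B-Base (up to a 53.6× decoding speedup at a 256K context length) while matching or outperforming baselines on benchmarks such as MMLU-Pro, math, and retrieval tasks.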

Jet-Nemotron: Efficient Language Model with Post Neural Architecture Search

Introduction and Motivation

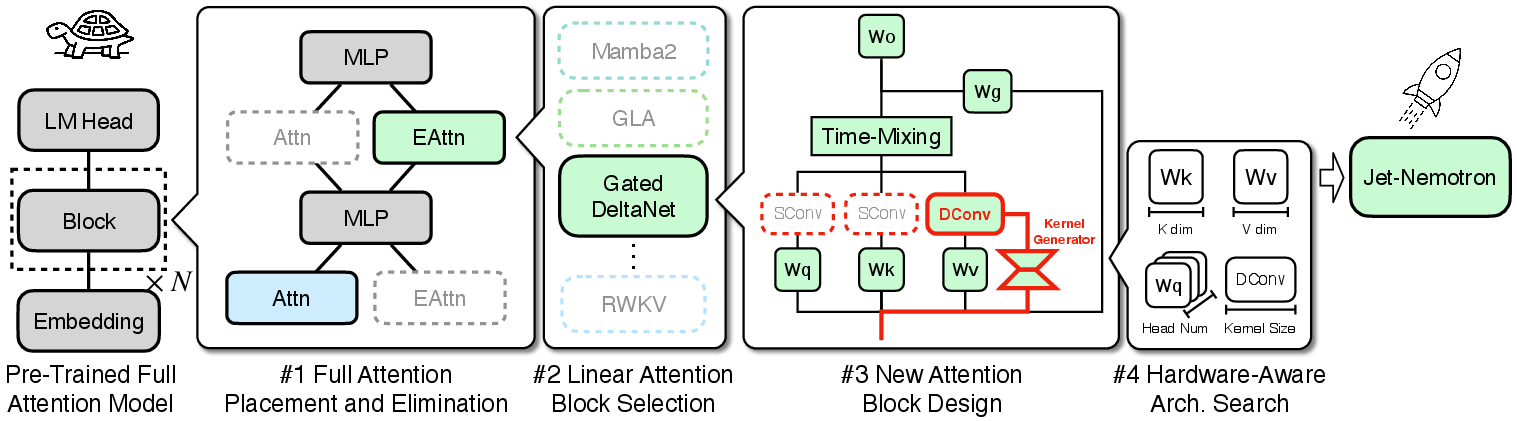

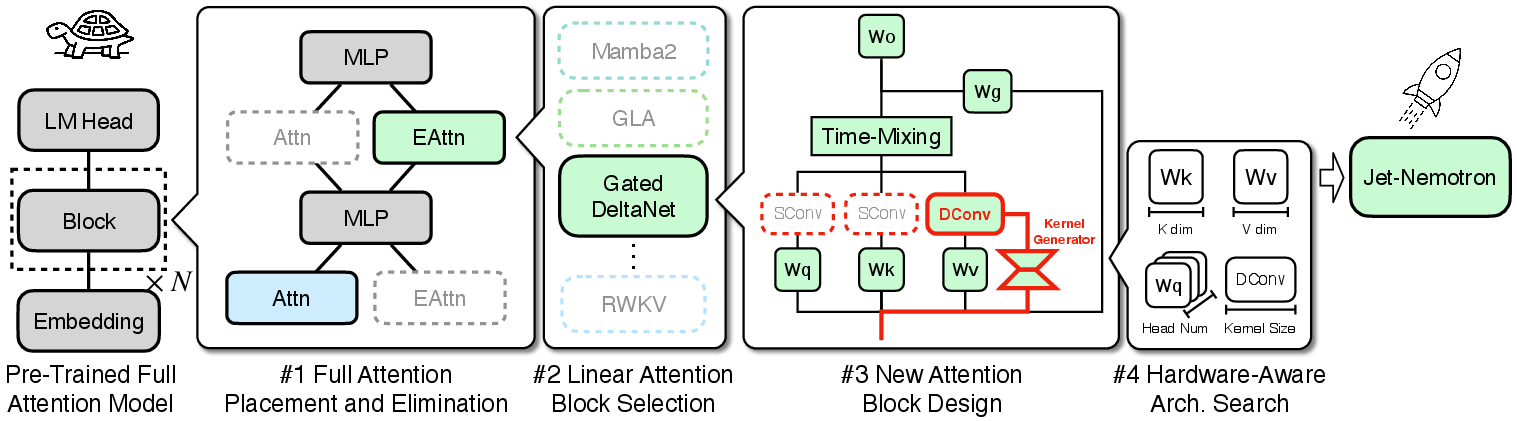

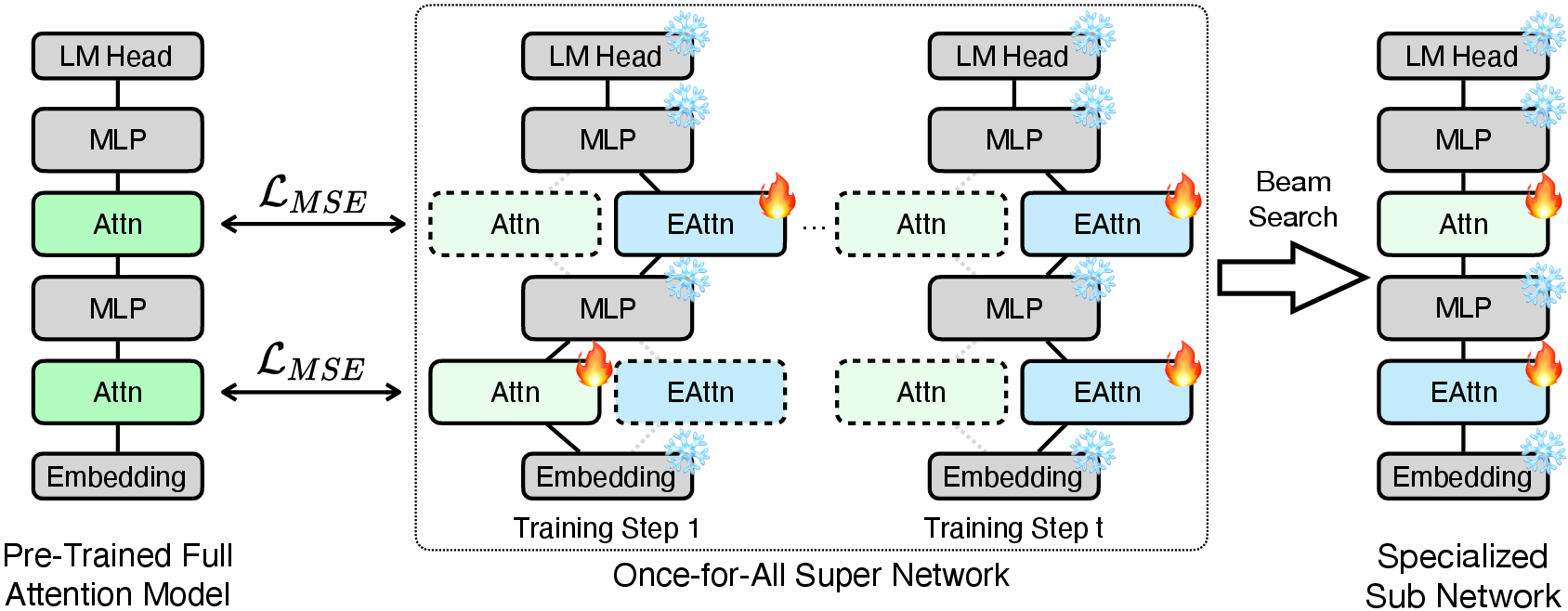

Jet-Nemotron introduces a hybrid-architecture LLM family that achieves state-of-the-art accuracy while delivering substantial efficiency improvements over full-attention models. The central innovation is the Post Neural Architecture Search (PostNAS) pipeline, which enables rapid and cost-effective architecture exploration by leveraging pre-trained full-attention models and freezing their MLP weights. This approach circumvents the prohibitive cost of pre-training from scratch and allows for systematic, hardware-aware optimization of attention mechanisms.

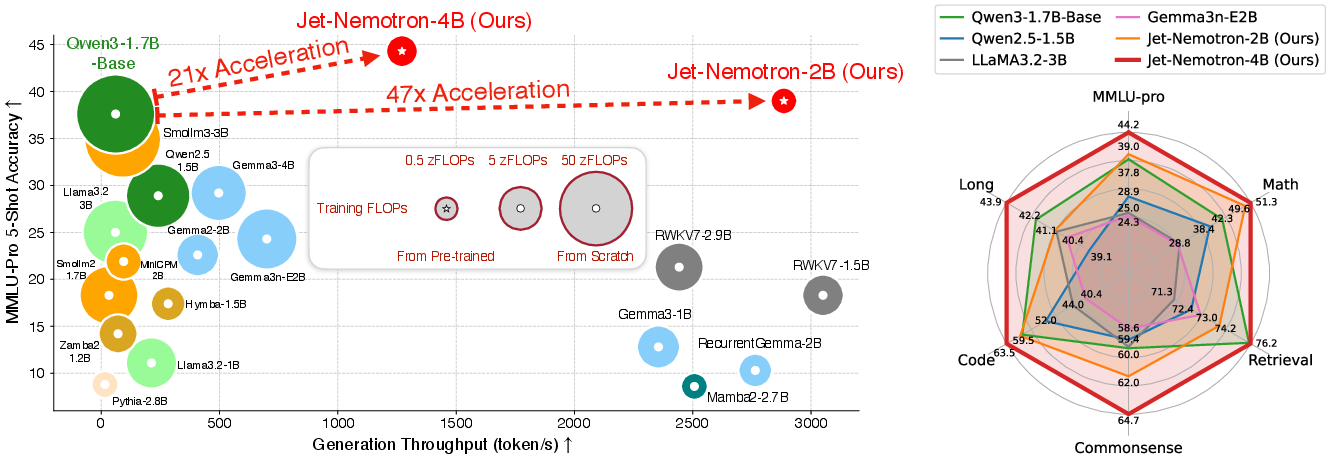

Figure 1: Comparison between Jet-Nemotron and state-of-the-art efficient LLMs, showing superior accuracy and throughput for Jet-Nemotron-2B and Jet-Nemotron-4B.

PostNAS Pipeline and Architectural Innovations

The PostNAS pipeline comprises four key stages:

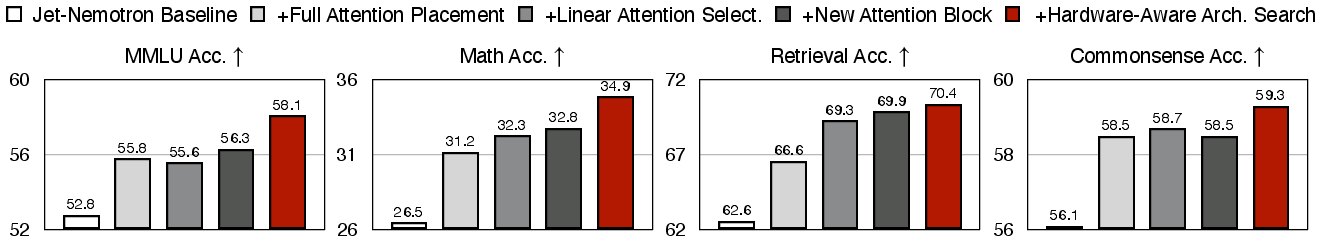

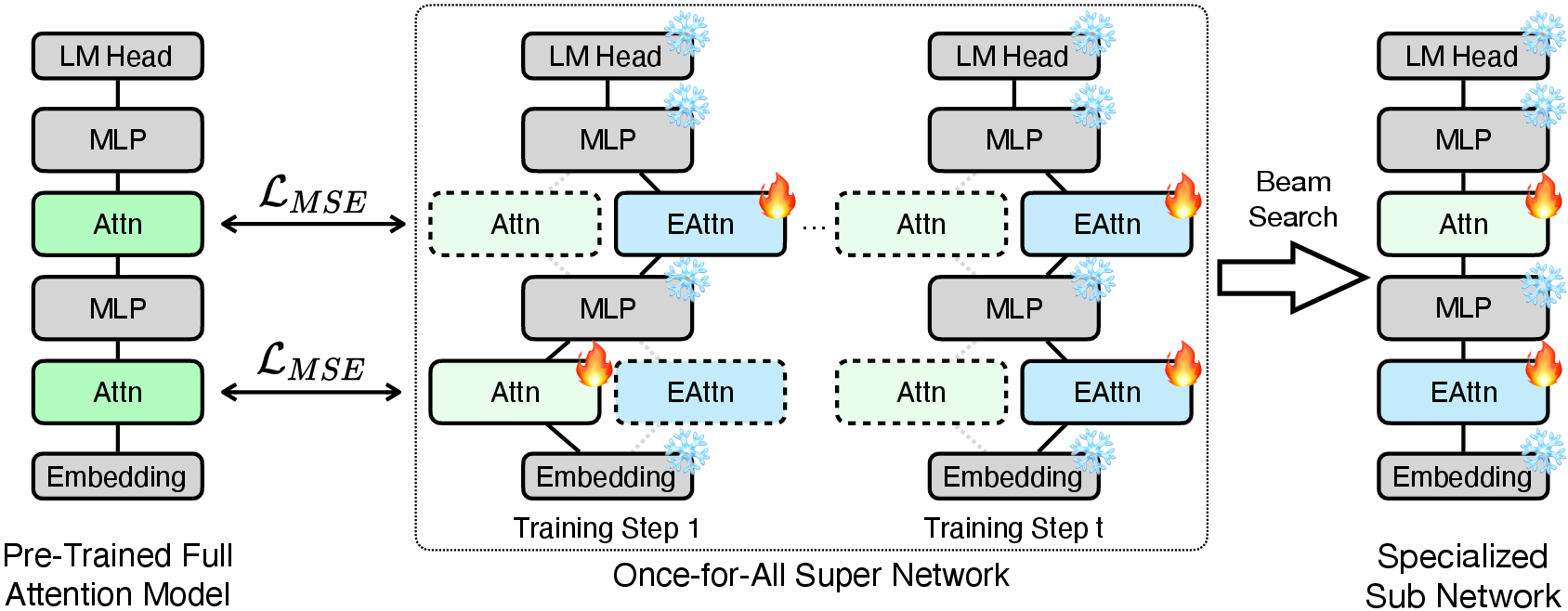

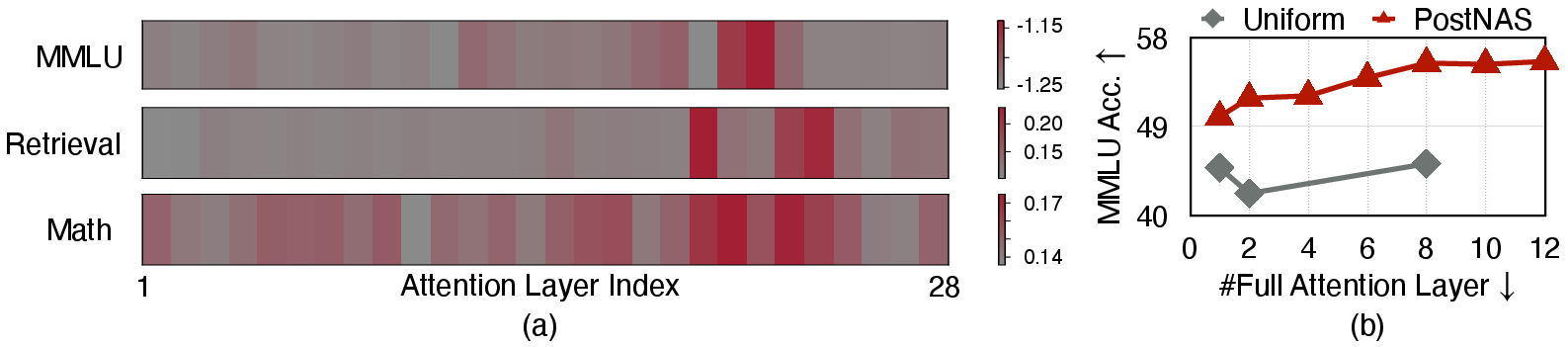

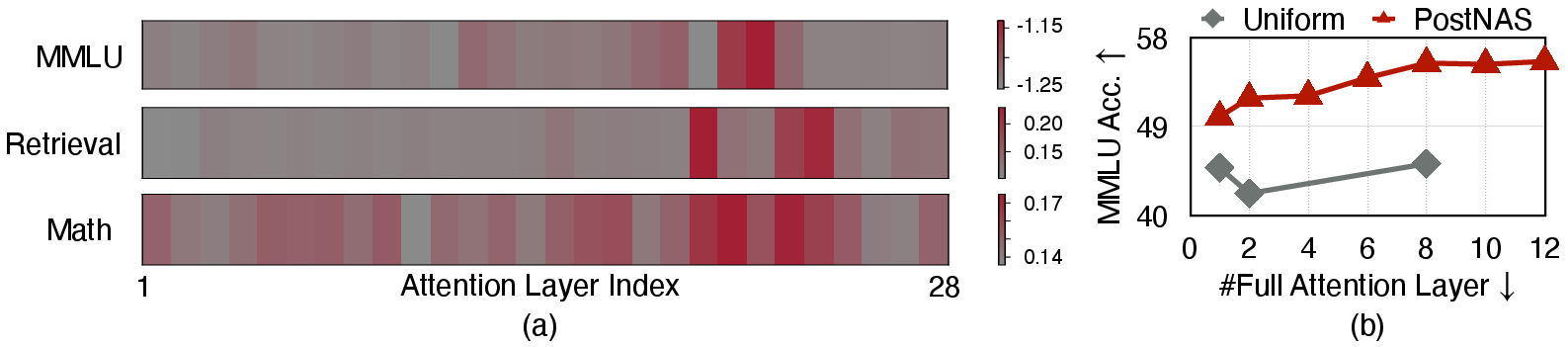

- Full Attention Placement and Elimination: Rather than uniformly distributing full-attention layers, PostNAS employs a once-for-all super network and beam search to learn optimal layer placement, maximizing accuracy for specific tasks such as retrieval and MMLU.

Figure 2: PostNAS roadmap illustrating the coarse-to-fine search for efficient attention block designs, starting from a pre-trained model with frozen MLPs.

- Linear Attention Block Selection: The pipeline systematically evaluates existing linear attention blocks (RWKV7, RetNet, Mamba2, GLA, DeltaNet, Gated DeltaNet) for accuracy, training efficiency, and inference speed. Gated DeltaNet is selected for its superior accuracy, attributed to its data-dependent gating and delta rule mechanisms.

- New Attention Block Design (JetBlock): JetBlock introduces dynamic causal convolution kernels generated conditionally on input features, applied to value tokens. This design removes redundant static convolutions on queries and keys, streamlining computation and improving accuracy with minimal overhead.

- Hardware-Aware Architecture Search: Generation throughput, rather than parameter count, is the primary optimization target. With the KV cache size held fixed, the search explores key and value dimensions and the number of attention heads, yielding configurations that preserve throughput while adding parameters for higher accuracy.
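The JetBlock idea of input-conditioned causal convolution kernels applied to value tokens can be illustrated with a small NumPy sketch. This is a simplified illustration, not the paper's implementation: the kernel generator is reduced to a single linear map `w_gen`, and the function name, tensor shapes, and loop-based formulation are all assumptions made for readability.

```python
import numpy as np

def dynamic_causal_conv(values, features, w_gen, kernel_size):
    """Apply a per-token, per-channel causal convolution to value tokens.

    values:   (T, D) value tokens
    features: (T, F) input features that condition the kernels
    w_gen:    (F, D * kernel_size) hypothetical kernel-generator weights
    """
    T, D = values.shape
    # One kernel per token and channel, generated from the input features.
    kernels = (features @ w_gen).reshape(T, D, kernel_size)
    # Left-pad with zeros so position t only sees positions t-K+1 .. t.
    padded = np.concatenate([np.zeros((kernel_size - 1, D)), values], axis=0)
    out = np.zeros((T, D))
    for t in range(T):
        window = padded[t:t + kernel_size]      # (K, D), oldest token first
        out[t] = np.sum(window * kernels[t].T, axis=0)
    return out

# Illustrative shapes only: 6 tokens, 4 value channels, 8 input features.
rng = np.random.default_rng(0)
vals = rng.normal(size=(6, 4))
feats = rng.normal(size=(6, 8))
w = rng.normal(size=(8, 4 * 3))
print(dynamic_causal_conv(vals, feats, w, kernel_size=3).shape)
```

Because the kernels depend on the features at each position, two tokens with identical values can be mixed with their history differently, which is what distinguishes this from a static convolution with one shared kernel.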

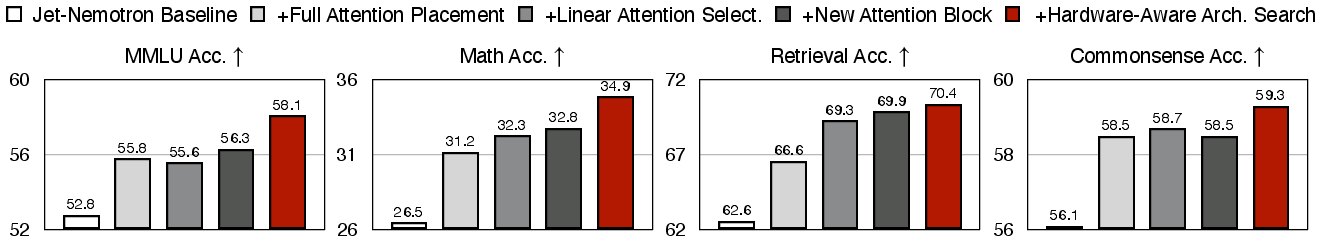

Figure 3: Breakdown of accuracy improvements across benchmarks achieved by applying PostNAS to the baseline model.

Empirical Results and Benchmarking

Jet-Nemotron models are evaluated on a comprehensive suite of benchmarks, including MMLU(-Pro), mathematical reasoning, commonsense reasoning, retrieval, coding, and long-context tasks. The results demonstrate:

- Jet-Nemotron-2B achieves higher accuracy than Qwen3-1.7B-Base on MMLU-Pro, with a 47× increase in generation throughput and a 47× reduction in KV cache size.

- Jet-Nemotron-4B maintains a 21× throughput advantage over Qwen3-1.7B-Base, even as model size increases.

- On math tasks, Jet-Nemotron-2B surpasses Qwen3-1.7B-Base by 6.3 points in accuracy while being 47× faster.

- On commonsense reasoning, Jet-Nemotron-2B achieves the highest average accuracy among all baselines.

- On retrieval and coding tasks, Jet-Nemotron models consistently outperform or match the best baselines, with substantial efficiency gains.

Figure 4: Once-for-all super network and beam search used to learn optimal full-attention layer placement.

Figure 5: (a) Layer placement search results on Qwen2.5-1.5B, showing task-dependent importance of attention layers. (b) PostNAS placement yields higher accuracy than uniform placement.
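The placement search described in Figures 4 and 5 can be sketched as a beam search over which layers keep full attention. In this sketch, `score` is a hypothetical stand-in for evaluating a sub-network sampled from the once-for-all super network on a target task; the function name, the toy importance values, and the grow-one-layer-at-a-time strategy are assumptions, not details confirmed by the paper.

```python
from typing import Callable, FrozenSet

def beam_search_placement(
    num_layers: int,
    num_full: int,
    beam_width: int,
    score: Callable[[FrozenSet[int]], float],
) -> FrozenSet[int]:
    """Pick `num_full` layer indices to keep as full attention."""
    beam = [frozenset()]
    for _ in range(num_full):
        # Expand every partial placement by one additional layer.
        candidates = set()
        for placement in beam:
            for layer in range(num_layers):
                if layer not in placement:
                    candidates.add(placement | {layer})
        # Keep the best `beam_width` placements; ties break on layer indices.
        ranked = sorted(candidates, key=lambda p: (-score(p), sorted(p)))
        beam = ranked[:beam_width]
    return beam[0]

# Toy, made-up per-layer importance; a real score would be task accuracy or loss.
toy_importance = [0.1, 0.9, 0.2, 0.8, 0.05, 0.7]

def toy_score(placement: FrozenSet[int]) -> float:
    return sum(toy_importance[i] for i in placement)

print(sorted(beam_search_placement(6, 2, 3, toy_score)))
```

Swapping in a different `score` for each task is what would produce the task-dependent placements the figure describes.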

Efficiency Analysis

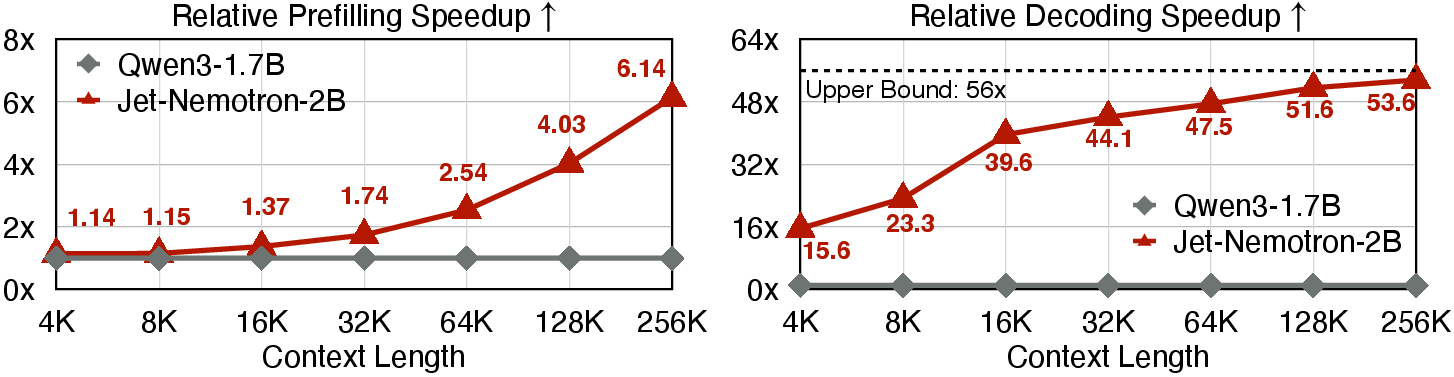

Jet-Nemotron's efficiency is most pronounced in long-context scenarios. The model achieves up to 6.14× speedup in prefilling and 53.6× speedup in decoding compared to Qwen3-1.7B-Base at a 256K context length. The throughput improvements are attributed to the reduced number of full-attention layers and smaller KV cache size, which are critical for memory-bandwidth-bound inference.

Figure 6: Jet-Nemotron-2B achieves up to 6.14× speedup in prefilling and 53.6× speedup in decoding across different context lengths.
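The link between the number of full-attention layers and KV cache size can be made concrete with a back-of-the-envelope calculation. The configuration values below are hypothetical and chosen only for illustration, and the sketch ignores the small, context-independent state kept by linear attention layers.

```python
def kv_cache_bytes(num_attn_layers, num_kv_heads, head_dim, seq_len, bytes_per_elem=2):
    """Per-sequence KV cache size for the full-attention layers only."""
    # The factor 2 accounts for storing both keys and values.
    return 2 * num_attn_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem

MIB = 1024 ** 2

# Hypothetical configuration: 8 KV heads of dimension 128, 64K context, 16-bit cache.
all_full = kv_cache_bytes(num_attn_layers=28, num_kv_heads=8, head_dim=128, seq_len=65536)
few_full = kv_cache_bytes(num_attn_layers=2, num_kv_heads=8, head_dim=128, seq_len=65536)

print(all_full // MIB, "MiB with 28 full-attention layers")
print(few_full // MIB, "MiB with 2 full-attention layers")
print(all_full // few_full, "x smaller cache")
```

Since decoding must read the whole cache for every generated token, shrinking it reduces memory traffic in proportion, which is why the gain grows with context length in a memory-bandwidth-bound setting.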

Theoretical and Practical Implications

Jet-Nemotron demonstrates that hybrid architectures, when optimized via post-training architecture search, can match or exceed the accuracy of full-attention models while delivering dramatic efficiency improvements. The findings challenge the conventional reliance on parameter count as a proxy for efficiency, highlighting the importance of KV cache size and hardware-aware design. The PostNAS framework provides a scalable, low-risk testbed for architectural innovation, enabling rapid evaluation and deployment of new attention mechanisms.

The JetBlock design, with dynamic convolution kernels, sets a new standard for linear attention blocks, outperforming prior designs such as Mamba2, GLA, and Gated DeltaNet. The empirical results suggest that the combination of learned full-attention placement and advanced linear attention can close the accuracy gap with full-attention models, even in challenging domains like mathematical reasoning and long-context understanding.
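For readers unfamiliar with the data-dependent gating and delta rule that motivated selecting Gated DeltaNet, the sketch below shows a naive recurrent form of a gated delta rule as it is commonly written: the state is decayed by a gate `alpha`, the old association for the current key is erased, and the new value is written with strength `beta`. The function name, argument layout, and unoptimized loop are assumptions for illustration; practical implementations use chunked, hardware-efficient kernels.

```python
import numpy as np

def gated_delta_rule(q, k, v, alpha, beta):
    """Naive recurrence: S_t = alpha_t * S_{t-1} (I - beta_t k_t k_t^T) + beta_t v_t k_t^T.

    q, k: (T, d_k) queries and keys (keys assumed unit-norm)
    v:    (T, d_v) values
    alpha, beta: (T,) gate and write strength, each in [0, 1]
    """
    T, d_k = k.shape
    d_v = v.shape[1]
    state = np.zeros((d_v, d_k))    # fixed-size memory, independent of T
    out = np.zeros((T, d_v))
    for t in range(T):
        # Erase what the state currently stores under key k_t (delta rule).
        erased = state - beta[t] * np.outer(state @ k[t], k[t])
        # Decay the old memory (gating), then write the new key/value pair.
        state = alpha[t] * erased + beta[t] * np.outer(v[t], k[t])
        out[t] = state @ q[t]
    return out
```

The state has a fixed size of `d_v × d_k` regardless of sequence length, which is the property that lets linear attention layers avoid a growing KV cache.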

Future Directions

The PostNAS paradigm opens avenues for further research in hardware-aware neural architecture search, especially for LLMs. Future work may explore:

- Automated adaptation of architecture for diverse hardware platforms and deployment scenarios.

- Extension of PostNAS to multimodal and multilingual models.

- Integration with sparse attention and MoE architectures for further efficiency gains.

- Exploration of dynamic attention mechanisms beyond convolution, such as adaptive memory or routing.

Conclusion

Jet-Nemotron establishes a new benchmark for efficient language modeling by combining post-training architecture search with advanced linear attention design. The model family achieves state-of-the-art accuracy and unprecedented generation throughput, demonstrating the practical viability of hybrid architectures for large-scale language modeling. The PostNAS pipeline and JetBlock attention module provide robust tools for future research and deployment of efficient LLMs, with significant implications for both theoretical understanding and real-world applications.