- The paper demonstrates how integrating symbolic AI with neural networks improves the reasoning processes of large language models.

- It introduces methodologies to generate logical reasoning data using symbolic techniques in order to augment LLM performance.

- The research outlines future directions for developing hybrid neuro-symbolic systems capable of tackling multi-modal and complex reasoning tasks.

Neuro-Symbolic Artificial Intelligence: Towards Improving the Reasoning Abilities of LLMs

Introduction

The evolution of artificial intelligence has been marked by shifts between paradigms, reflecting a continual search for better methods and capabilities. The reasoning abilities of large language models (LLMs) have become a central focus in the pursuit of artificial general intelligence (AGI). Despite significant progress, LLMs still struggle with complex reasoning tasks, often mimicking reasoning steps seen in training data rather than genuinely reasoning. This paper reviews neuro-symbolic approaches as a promising way to strengthen LLM reasoning, examining how integrating symbolic AI with neural methods can address current limitations.

Neuro-Symbolic AI Paradigm

Neuro-symbolic AI combines symbolic AI, which excels at complex logical reasoning, with neural networks, which excel at learning from large datasets. This approach aligns with the Dual Process Theory from cognitive science, which posits two systems of human cognition: a fast, intuitive, neural-like system and a slower, analytical, symbolic-like system. In the combined approach, neural components help overcome symbolic AI's difficulties with scalability and representation, while symbolic components give neural architectures rigorous logical reasoning.

Reasoning tasks are formally characterized as recursive processes, where each new reasoning step builds on prior ones. The challenge lies in mapping input problems and background knowledge onto solutions, with intermediate reasoning steps defined by their specific reasoning functions. The paper identifies deductive, inductive, and abductive reasoning as frameworks for understanding how reasoning functions operate within symbolic and neural systems.
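The recursive view can be made concrete with a small sketch. The code below is only an illustration, not the paper's formalism: the names `deductive_step` and `reason`, the rule encoding, and the toy facts are all assumptions. It treats a reasoning function as something that takes the background knowledge plus all prior steps and returns the next step, using deduction (modus ponens) as the example.

```python
from typing import FrozenSet, List, Optional, Set, Tuple

Fact = str
Rule = Tuple[FrozenSet[Fact], Fact]  # (premises, conclusion); hypothetical encoding


def deductive_step(facts: Set[Fact], rules: List[Rule]) -> Optional[Fact]:
    """Return one new fact derivable by modus ponens, or None if nothing new follows."""
    for premises, conclusion in rules:
        if premises <= facts and conclusion not in facts:
            return conclusion
    return None


def reason(question: Fact, knowledge: Set[Fact], rules: List[Rule],
           max_steps: int = 10) -> Tuple[List[Fact], bool]:
    """Apply the step function repeatedly; each step sees everything derived so far."""
    facts = set(knowledge)
    steps: List[Fact] = []
    for _ in range(max_steps):
        if question in facts:
            break
        new_fact = deductive_step(facts, rules)
        if new_fact is None:
            break
        steps.append(new_fact)
        facts.add(new_fact)
    return steps, question in facts


rules = [
    (frozenset({"socrates_is_human"}), "socrates_is_mortal"),
    (frozenset({"socrates_is_mortal"}), "socrates_will_die"),
]
steps, solved = reason("socrates_will_die", {"socrates_is_human"}, rules)
print(steps, solved)
```

Swapping `deductive_step` for an inductive or abductive step function would change what each step produces while leaving the recursive loop unchanged.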

Symbolic → LLM: Enhancing Data Availability

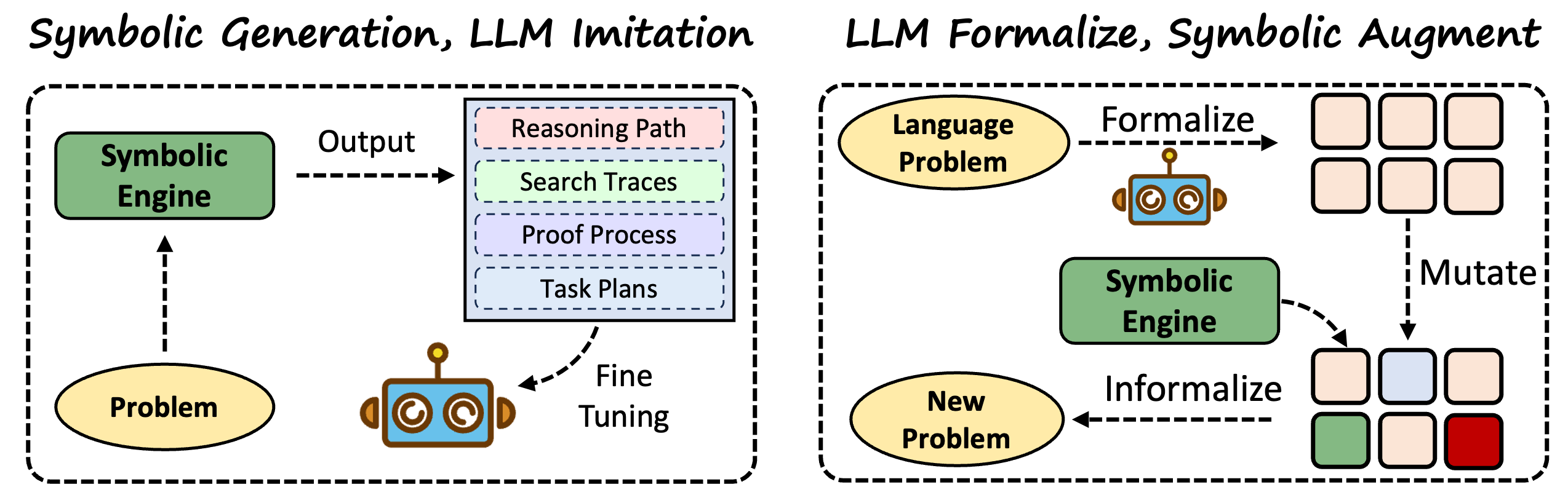

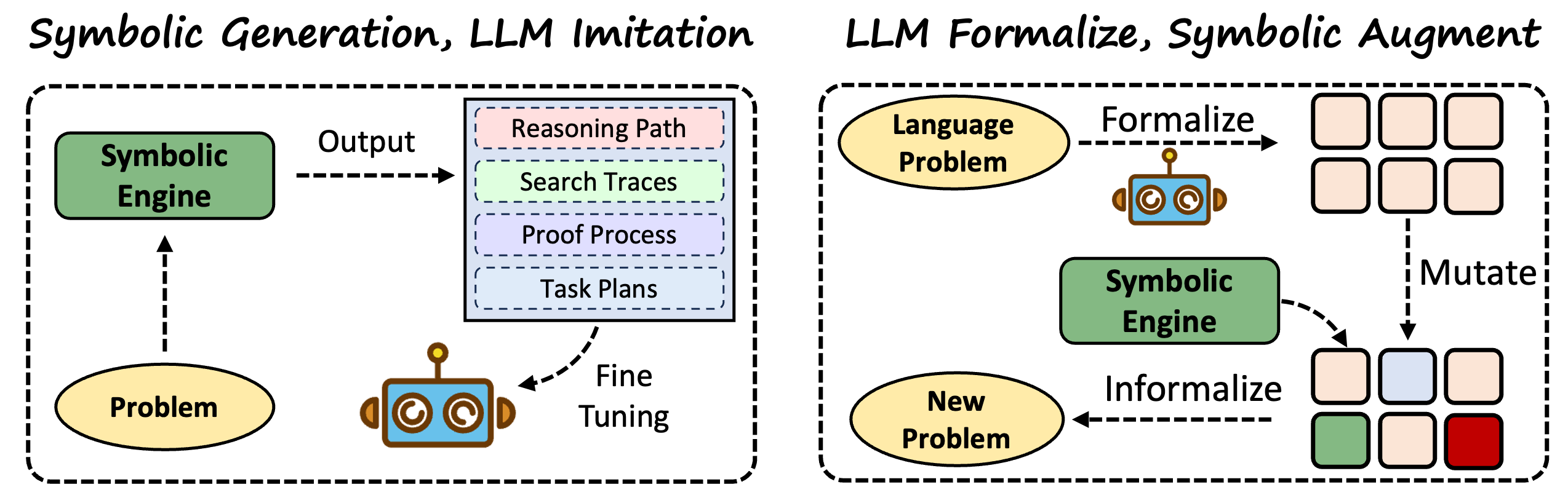

Symbolic methods can address data scarcity in LLM reasoning by generating and augmenting the reasoning datasets that LLMs learn to imitate. Because the reasoning paths come from symbolic procedures, they are logically coherent and provide valuable training data for LLM fine-tuning. The approach covers both symbolic generation of reasoning processes and LLM formalization, in which problems are first expressed in symbolic form so that rigorous symbolic augmentation can be applied.

Figure 1: Illustration of how symbolic methods can be exploited to provide reasoning data for LLMs.
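As a rough illustration of symbolic data generation, the sketch below builds toy deduction problems whose reasoning paths are correct by construction. Everything here is an assumption made for illustration (the function name `make_example`, the implication-chain task, the text templates); the methods surveyed in the paper are considerably richer.

```python
import random
from typing import Dict

LETTERS = list("ABCDEFGHJKLMNPQR")


def make_example(rng: random.Random, depth: int) -> Dict[str, object]:
    """Generate one implication-chain problem with a step-by-step derivation."""
    # depth + 1 chain symbols plus one distractor that no rule mentions
    *chain, distractor = rng.sample(LETTERS, depth + 2)
    rules = list(zip(chain, chain[1:]))  # chain[i] implies chain[i + 1]
    shown = rules[:]
    rng.shuffle(shown)  # hide the proof order in the prompt
    query = rng.choice([chain[-1], distractor])

    # Forward chaining from the starting fact yields the reasoning path.
    implies = dict(rules)
    current = chain[0]
    reasoning = []
    while current in implies:
        nxt = implies[current]
        reasoning.append(f"{current} holds and {current} implies {nxt}, so {nxt} holds.")
        current = nxt

    answer = "yes" if query in chain else "no"
    verdict = "holds" if answer == "yes" else "cannot be derived"
    reasoning.append(f"Therefore {query} {verdict}.")

    rule_text = " ".join(f"{a} implies {b}." for a, b in shown)
    prompt = f"{chain[0]} holds. {rule_text} Does {query} hold?"
    return {"prompt": prompt, "reasoning": reasoning, "query": query, "answer": answer}


example = make_example(random.Random(0), depth=3)
print(example["prompt"])
for line in example["reasoning"]:
    print(line)
```

Each generated record pairs a natural-language prompt with a verified derivation, which is the kind of sample a fine-tuning set could draw on.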

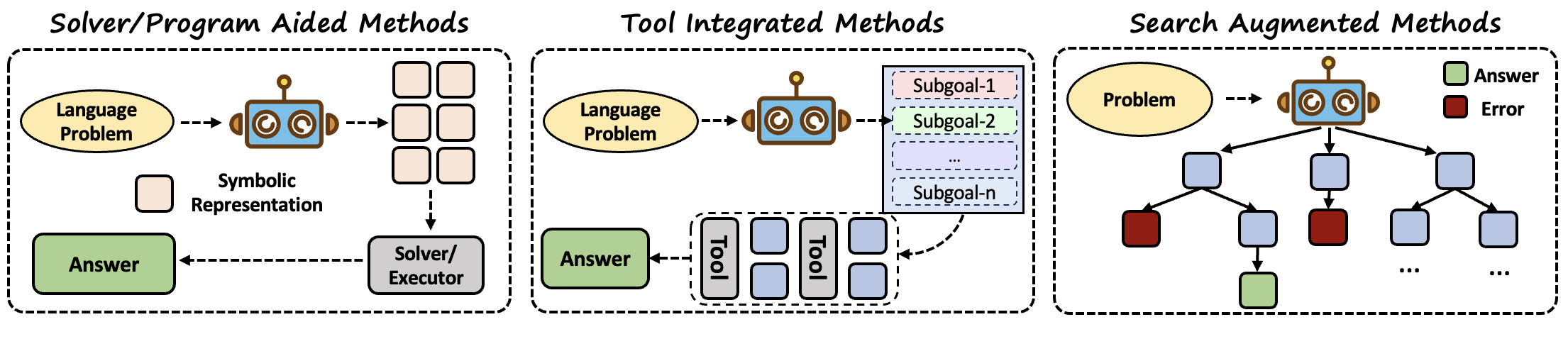

LLM → Symbolic: Enhancing Reasoning Functions

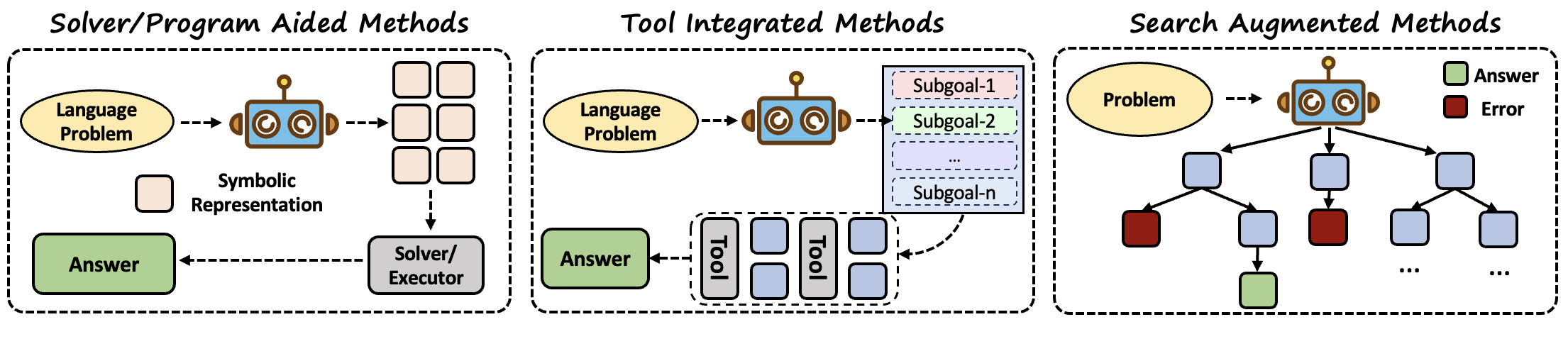

Symbolic solvers, program interpreters, and external tools enhance LLM reasoning by carrying out intermediate steps with logical rigor. The LLM translates a problem into a symbolic format that an external module can resolve, so the module's output replaces error-prone autoregressive reasoning functions. The same strategy extends to tool-aided methods, in which LLMs invoke specific external tools to improve reasoning accuracy across modalities.

Figure 2: Illustration of how LLMs integrate symbolic solvers, programs, tools, or search algorithms to facilitate the reasoning process.
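A minimal sketch of this division of labor follows, assuming the LLM's only job is to translate a word problem into an arithmetic expression. The function `fake_llm_translate` is a hypothetical stand-in that returns a canned string rather than calling a model, and the restricted evaluator built on Python's `ast` module plays the role of the external symbolic module.

```python
import ast
import operator

# Only these binary operators are allowed in the expression.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(expr: str) -> float:
    """Evaluate a plain arithmetic expression; reject anything else."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported syntax: {type(node).__name__}")

    return walk(ast.parse(expr, mode="eval"))


def fake_llm_translate(question: str) -> str:
    """Hypothetical stand-in for an LLM call; returns a canned formalization."""
    return "(23 - 5) * 4"


question = "A shop has 23 crates, sells 5, and each remaining crate holds 4 jars. How many jars?"
expression = fake_llm_translate(question)
print(expression, "=", evaluate(expression))
```

The arithmetic is done by the interpreter rather than by token-by-token generation, so any remaining error can only come from the translation step.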

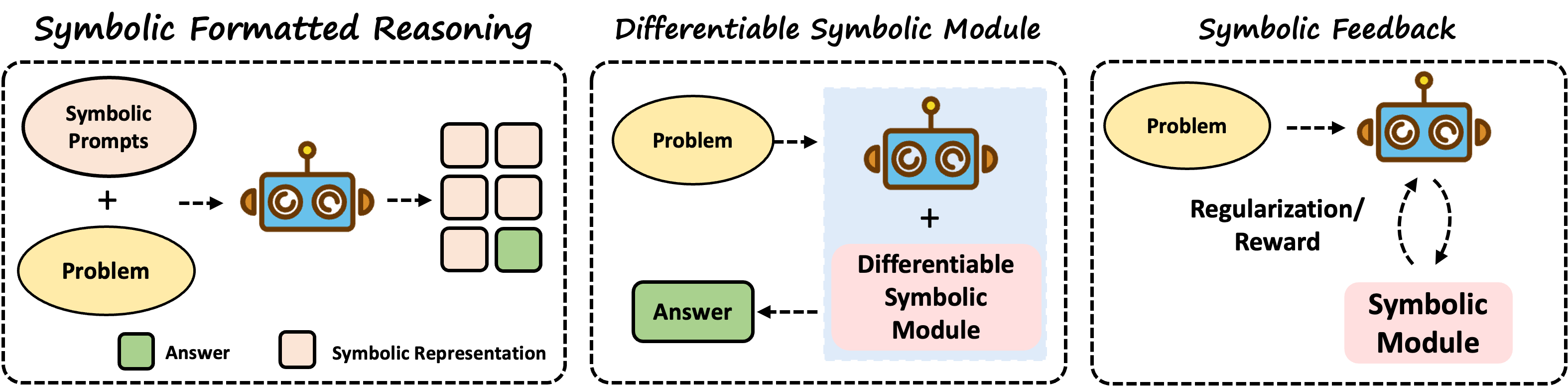

Symbolic + LLMs: Towards End-to-End Reasoning

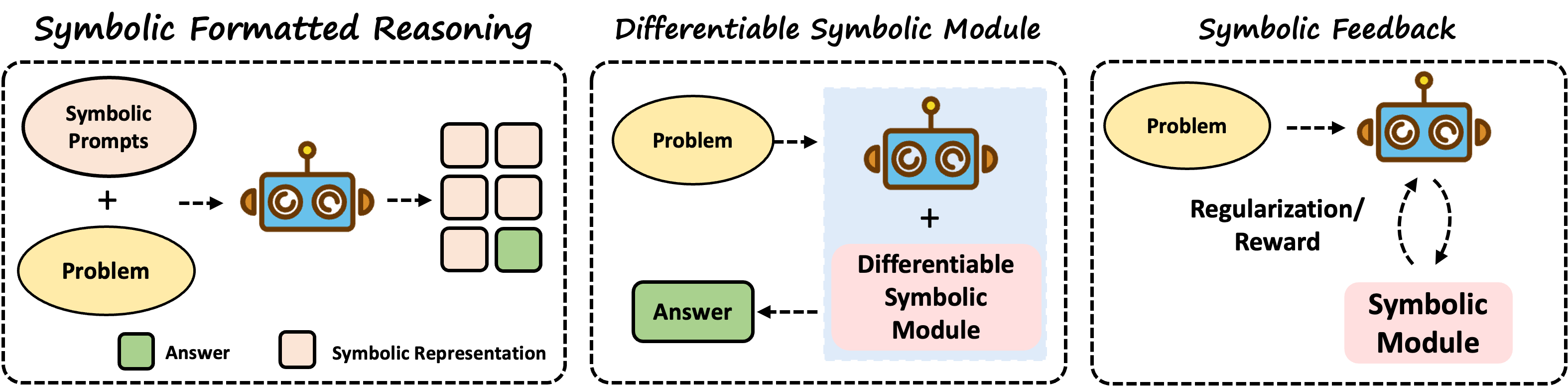

Hybrid neuro-symbolic systems that integrate symbolic and neural components end-to-end remain a long-term goal. Three strategies represent initial steps towards this integration: symbolic formatted reasoning, which uses symbolic representations during reasoning; differentiable symbolic modules, which make symbolic reasoning mechanisms differentiable; and symbolic feedback, which supplies symbolic signals during learning.

Figure 3: Illustration of the main ideas of end-to-end LLM+symbolic reasoning, including symbolic formatted reasoning, differentiable symbolic modules, and symbolic feedback.
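Of the three strategies, symbolic feedback is the easiest to sketch in a few lines. The example below is an assumption-laden illustration, not a method from the paper: `symbolic_reward`, the step format, and the toy rules are hypothetical. A symbolic checker validates every step of a candidate reasoning chain and returns a scalar that a learning procedure could use as a reward; how that reward would be fed back into training is outside the sketch.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

Step = Tuple[FrozenSet[str], str]  # (premises used, conclusion claimed)


def symbolic_reward(steps: List[Step], facts: Iterable[str],
                    rules: Set[Step], goal: str) -> float:
    """Return 1.0 only if every step applies a known rule to known facts and the goal is reached."""
    known = set(facts)
    for premises, conclusion in steps:
        if (premises, conclusion) not in rules:
            return 0.0  # the step uses a rule that does not exist
        if not premises <= known:
            return 0.0  # the step relies on a fact not yet established
        known.add(conclusion)
    return 1.0 if goal in known else 0.0


rules = {
    (frozenset({"rain"}), "wet_ground"),
    (frozenset({"wet_ground"}), "slippery"),
}
valid_chain = [
    (frozenset({"rain"}), "wet_ground"),
    (frozenset({"wet_ground"}), "slippery"),
]
shortcut_chain = [(frozenset({"rain"}), "slippery")]  # skips a step; no such rule

print(symbolic_reward(valid_chain, {"rain"}, rules, "slippery"))
print(symbolic_reward(shortcut_chain, {"rain"}, rules, "slippery"))
```

Because the checker scores the whole chain rather than just the final answer, a candidate that reaches the right conclusion through an unsupported jump earns no reward.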

Challenges and Future Directions

Three challenges stand out: multi-modal reasoning, more advanced hybrid architectures, and robust theoretical foundations. Current research focuses predominantly on linguistic reasoning, whereas reasoning that spans other modalities, such as visual and spatial information, remains underexplored. A better theoretical understanding of neuro-symbolic systems is also needed to guide future model architectures and learning methodologies.

Conclusion

Neuro-symbolic methods hold promise for enhancing LLMs' reasoning capabilities, yielding systems that can both learn from large datasets and carry out complex reasoning tasks. Through a systematic review of Symbolic → LLM, LLM → Symbolic, and Symbolic + LLM approaches, the paper highlights potential pathways and challenges in developing robust AI models with strong reasoning abilities. Continued efforts to combine symbolic rigor with neural flexibility will be crucial for progress towards AGI.