Understanding the Limitations of Mathematical Reasoning in LLMs: An Analysis of the GSM-Symbolic Benchmark

The paper "GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in LLMs" presents a critical analysis of the mathematical reasoning capabilities of contemporary large language models (LLMs). It extends existing evaluations by introducing GSM-Symbolic, a benchmark built from symbolic templates and designed to test the robustness and reliability of LLMs on mathematical reasoning tasks.

Key Contributions

The authors make several significant contributions:

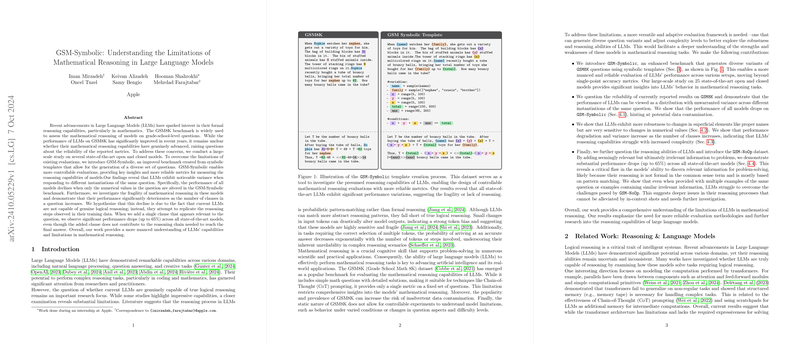

- GSM-Symbolic Benchmark: This enhanced benchmark uses symbolic templates to generate diverse variants of mathematical reasoning questions. The design allows for controlled evaluation scenarios, so that LLM capabilities can be characterized by more than a single accuracy figure.

- Performance Variability Analysis: The paper reveals that reported metrics on the GSM8K benchmark may be unreliable. By assessing performance distributions across different instantiations of the same questions, the authors show substantial variance, highlighting potential limitations in previous evaluations.

- Robustness Exploration: The research demonstrates that LLMs exhibit a degree of robustness to changes in superficial elements like proper names; however, they are notably sensitive to variations in numerical values. Additionally, an increase in question complexity, such as the number of clauses, exacerbates performance degradation.

- GSM-NoOp Dataset: The introduction of this dataset challenges LLMs further by adding irrelevant yet seemingly significant information to mathematical questions. The pervasive performance drops observed (up to 65%) across models suggest deficiencies in distinguishing pertinent information, underscoring the models' reliance on pattern matching rather than formal reasoning.
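The template idea behind GSM-Symbolic and the distractor idea behind GSM-NoOp can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the template wording, the name list, the value ranges, the `NOOP_CLAUSE` text, and the `make_variant` helper are hypothetical and are not taken from the paper's own templates or tooling.

```python
import random

# Hypothetical template: wording, names, and value ranges are illustrative only.
TEMPLATE = (
    "{name} picks {x} apples on Monday and {y} apples on Tuesday. "
    "{name} gives away {z} apples. How many apples does {name} have left?"
)
NAMES = ["Sophie", "Liam", "Mia", "Noah"]

# Hypothetical NoOp-style clause: it mentions a number but does not affect the answer.
NOOP_CLAUSE = "Five of the apples are slightly smaller than average. "


def make_variant(rng, with_noop=False):
    """Sample one question and its ground-truth answer from the template."""
    name = rng.choice(NAMES)
    x = rng.randint(5, 50)
    y = rng.randint(5, 50)
    # Condition on the variables: the answer must stay non-negative.
    z = rng.randint(1, x + y)
    question = TEMPLATE.format(name=name, x=x, y=y, z=z)
    if with_noop:
        # Insert the irrelevant clause just before the final question sentence.
        head, sep, tail = question.rpartition("How many")
        question = head + NOOP_CLAUSE + sep + tail
    return question, x + y - z


rng = random.Random(0)
question, answer = make_variant(rng, with_noop=True)
print(question)
print(answer)
```

Changing only `NAMES` varies the superficial elements, changing the ranges varies the numerical values, and setting `with_noop=True` adds the irrelevant statement, so each kind of perturbation can be evaluated separately.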

Implications and Theoretical Considerations

This work provides critical insights into the inherent limitations in the reasoning processes of LLMs. Although these models perform impressively across a wide range of tasks, their mathematical reasoning capabilities appear fragile. The notable sensitivity to input variations and question complexity suggests a reliance on probabilistic pattern matching rather than genuine logical reasoning.
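One way to make the sensitivity to input variations concrete is to report a distribution of accuracies over several variant sets rather than a single number. The sketch below assumes a hypothetical `toy_solver` standing in for a model call, and the set count and set size are arbitrary; it is not the authors' evaluation code.

```python
import random
import statistics


def accuracy(solver, dataset):
    """Fraction of (question, answer) pairs the solver gets right."""
    correct = sum(1 for question, answer in dataset if solver(question) == answer)
    return correct / len(dataset)


def accuracy_distribution(solver, datasets):
    """Accuracy on each variant set, plus summary statistics across sets."""
    scores = [accuracy(solver, d) for d in datasets]
    return {
        "scores": scores,
        "mean": statistics.mean(scores),
        "stdev": statistics.stdev(scores) if len(scores) > 1 else 0.0,
        "min": min(scores),
        "max": max(scores),
    }


def toy_solver(question):
    # Hypothetical stand-in for a model: adds correctly only for small operands,
    # mimicking brittleness to numerical values.
    a, b = (int(t) for t in question.split(" + "))
    return a + b if max(a, b) < 60 else -1


rng = random.Random(0)
datasets = []
for _ in range(50):  # number of variant sets (illustrative)
    pairs = [(rng.randint(1, 99), rng.randint(1, 99)) for _ in range(100)]
    datasets.append([(f"{a} + {b}", a + b) for a, b in pairs])

report = accuracy_distribution(toy_solver, datasets)
print(report["mean"], report["stdev"], report["min"], report["max"])
```

Reporting the spread (`stdev`, `min`, `max`) alongside the mean shows how far a single benchmark score could sit from typical performance on equivalent questions.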

From a theoretical perspective, these findings align with emerging evidence suggesting that transformer-based architectures, while capable within certain bounds, may lack the inherent expressiveness required for complex logical tasks without significant architectural adaptations or the inclusion of additional memory mechanisms.

Practical and Future Implications

The practical implications are clear: while LLMs have a broad range of potential applications, caution is necessary when applying them to domains requiring precise logical reasoning, such as mathematical problem-solving and formal logic tasks. The GSM-Symbolic benchmark offers a more dependable foundation for evaluating such capabilities and could spur further research into refining model architectures to address these limitations.

Future theoretical work could focus on model architectures that handle abstract reasoning more effectively. Incorporating advanced memory retrieval systems or hybrid computing strategies might offer ways to overcome current limitations.

Conclusion

Overall, this paper underscores significant challenges facing LLMs in genuinely understanding and performing mathematical reasoning. Through GSM-Symbolic and GSM-NoOp, the authors expose model fragility and highlight the need for research on moving AI systems toward true logical and mathematical reasoning, a pursuit foundational to more robust and human-like cognitive modeling.