- The paper proposes a novel evaluation framework for multi-hop personalized reasoning, comparing explicit, implicit, and hybrid memory systems.

- It employs Retrieval-Augmented Generation and supervised fine-tuning to analyze memory performance and reasoning efficiency.

- Experimental results highlight that optimal multi-hop performance is achieved through sequential reasoning and dynamically selected hybrid memory models.

This paper focuses on the development and evaluation of multi-hop personalized reasoning (MPR) tasks, employing both explicit and implicit memory mechanisms. These tasks pose significant challenges for LLM agents, requiring complex reasoning over extensive personalized user information. The paper proposes a novel dataset and evaluation framework to analyze the performance of different memory systems, along with an exploration of hybrid approaches to optimize memory usage for complex reasoning.

Background and Motivation

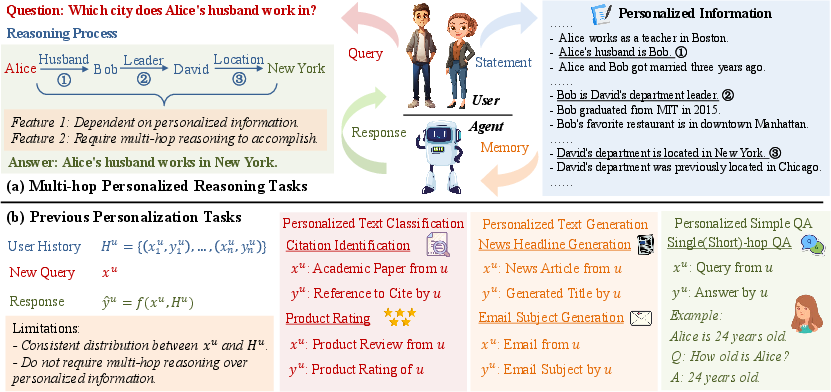

In the context of LLM-based agents, personalization relies heavily on effective memory systems that store and utilize user-specific information. Previous studies primarily addressed simpler personalization tasks such as preference alignment or direct question-answering, which do not necessitate detailed reasoning. MPR tasks, however, require extensive reasoning across multiple pieces of personalized data. The difficulty is that no single piece of data contains the answer; it must be derived through multi-step reasoning over several pieces.

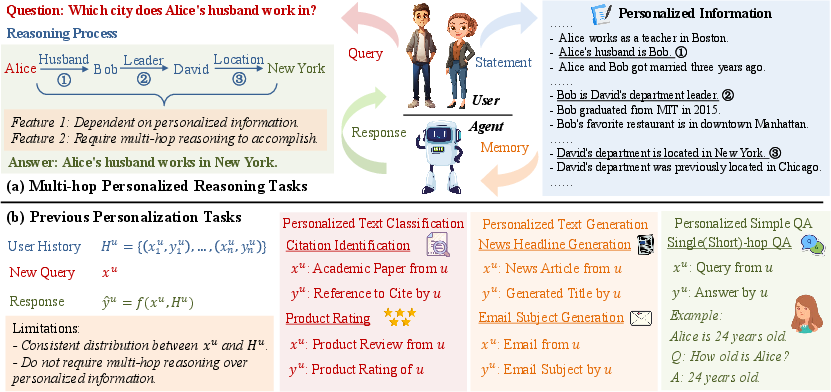

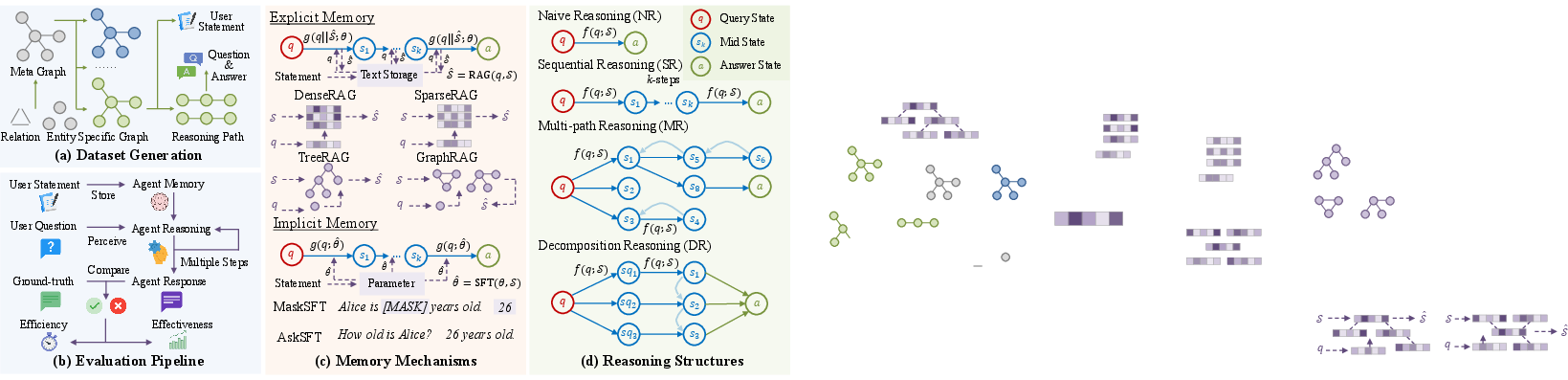

The authors argue that current approaches inadequately address the intricacies of reasoning over large amounts of personalized data, stressing the need for methods that can chain multiple reasoning hops. Figure 1 contrasts the complexity of MPR tasks with that of simpler personalization tasks.

Figure 1: Demonstration of multi-hop personalized reasoning tasks and previous personalization tasks.

Methodology

Definition of MPR Tasks

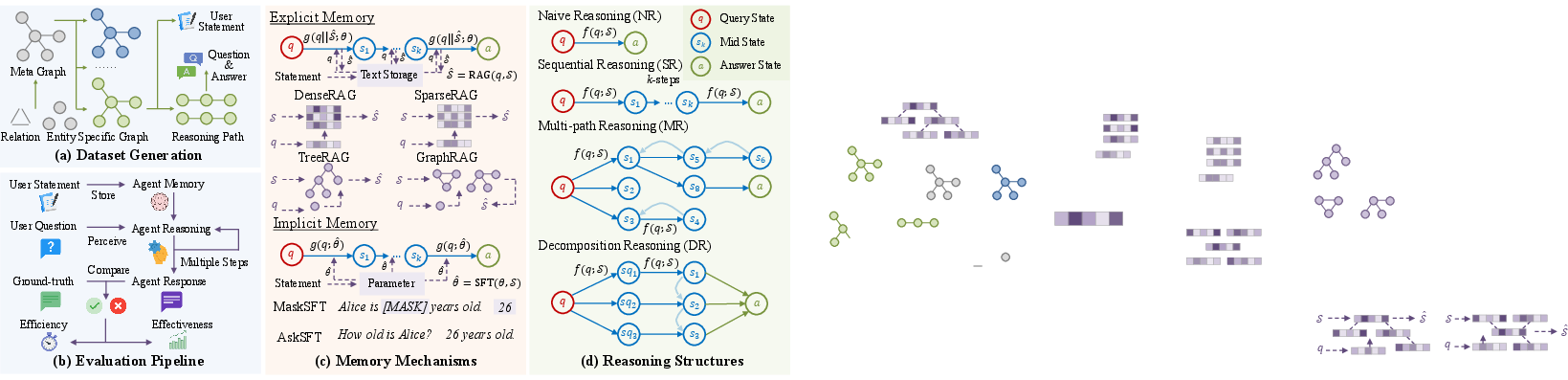

The paper defines MPR tasks as requiring reasoning over multiple pieces of user-specific information, each of which is necessary yet insufficient on its own for task completion. These tasks differ markedly from those built on general public datasets like Wikipedia, because they depend on personal data specific to the user. Figure 2 gives an overview of the paper's exploration of these tasks.

Figure 2: Overview of exploration on multi-hop personalized reasoning tasks.

Memory Mechanisms

Explicit Memory: Utilizes Retrieval-Augmented Generation (RAG), wherein user information is stored as text and retrieved according to its relevance to the query.

Implicit Memory: Involves supervised fine-tuning (SFT) to incorporate personalized data directly into model parameters, eliminating the need for retrieval during task execution.
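To make the explicit-memory idea concrete, the following is a minimal sketch of relevance-based retrieval over stored user statements. It assumes a simple bag-of-words cosine similarity as the relevance score; the paper's actual retriever is not described here, and the class name `ExplicitMemory`, its methods, and the example statements are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ExplicitMemory:
    """Hypothetical text store: statements are kept verbatim and ranked per query."""

    def __init__(self):
        self.statements = []

    def add(self, statement):
        self.statements.append(statement)

    def retrieve(self, query, k=2):
        """Return up to k statements with non-zero similarity to the query."""
        q = Counter(tokenize(query))
        scored = [(cosine(q, Counter(tokenize(s))), s) for s in self.statements]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [s for score, s in scored[:k] if score > 0]


# Illustrative user statements (invented for this sketch).
memory = ExplicitMemory()
memory.add("Alice's favorite restaurant is Luigi's Trattoria.")
memory.add("Luigi's Trattoria is closed on Mondays.")
memory.add("Alice owns a blue bicycle.")

top = memory.retrieve("Which restaurant does Alice like?", k=1)
```

In a RAG setup the retrieved statements would be placed in the model's prompt. Note that the second statement, which a multi-hop question about opening days would need, shares no words with this query; this is one reason a single retrieval pass can be insufficient for MPR tasks.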

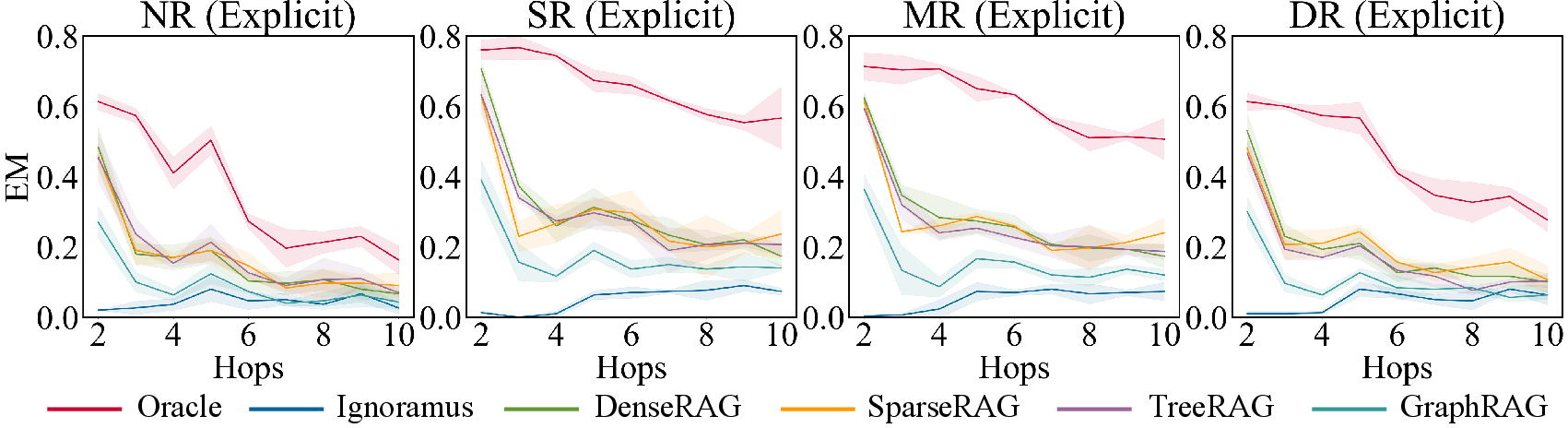

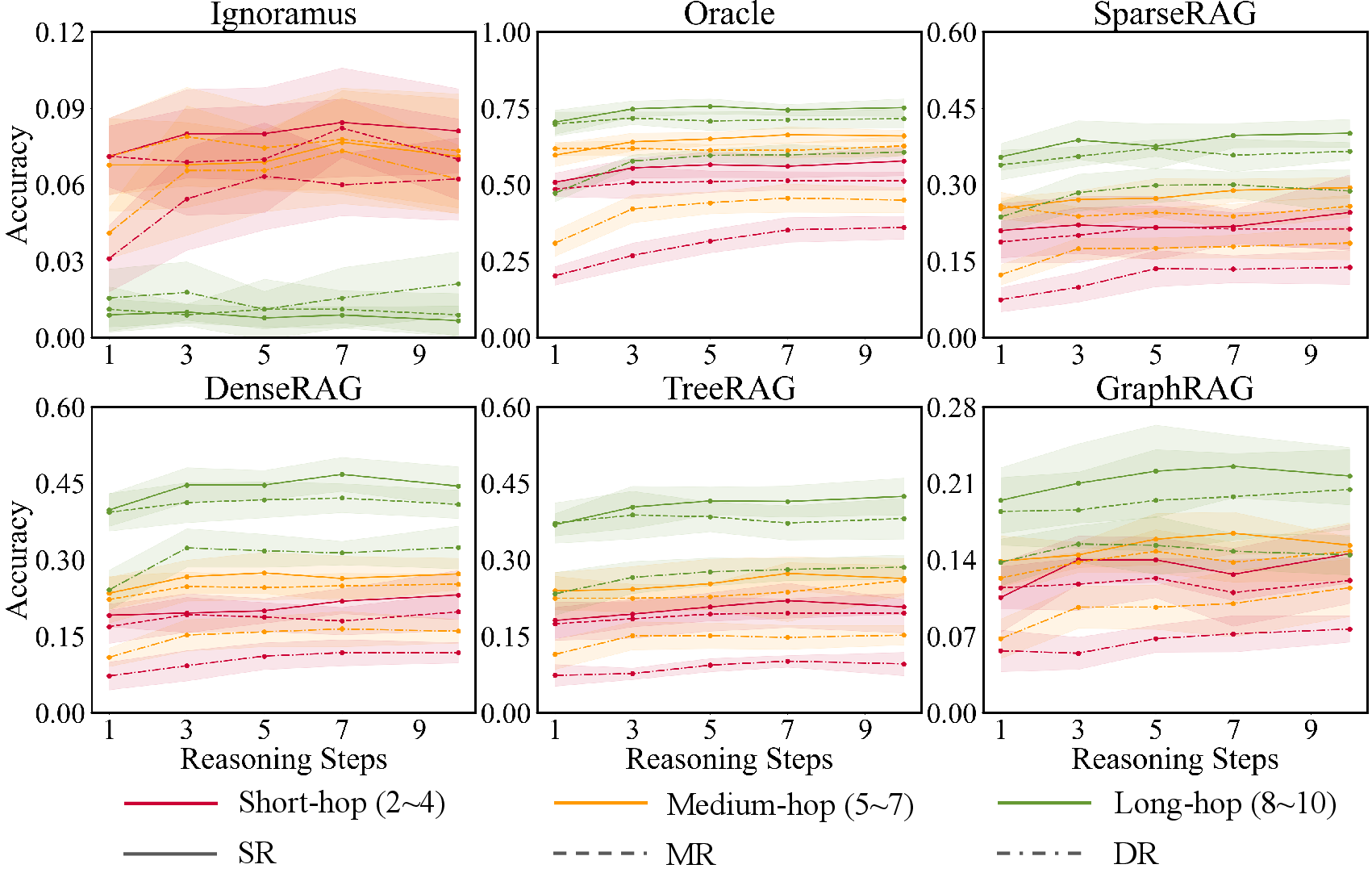

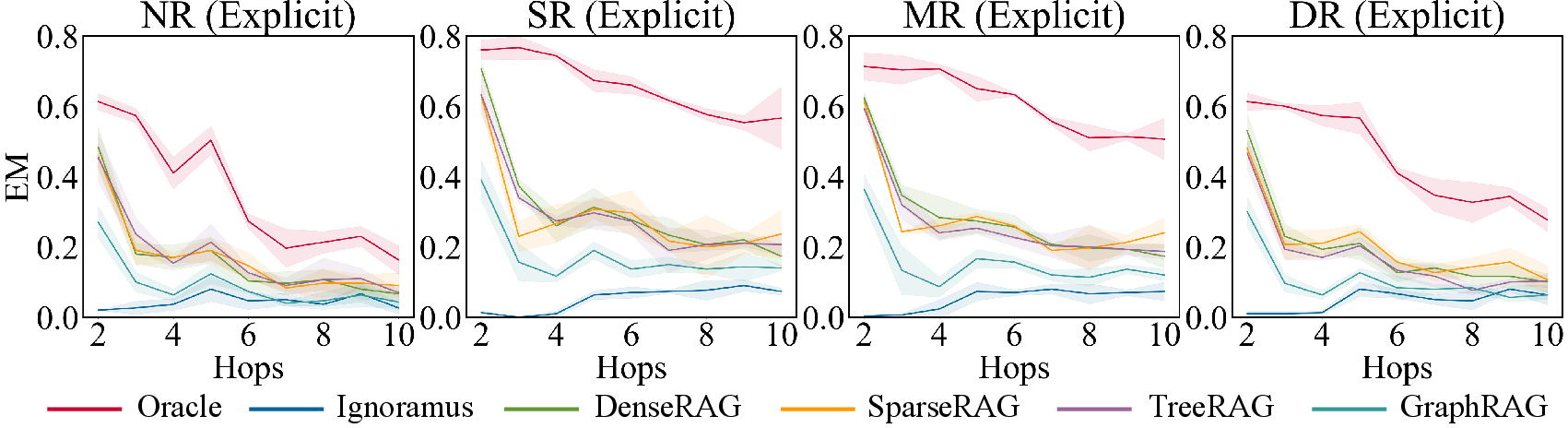

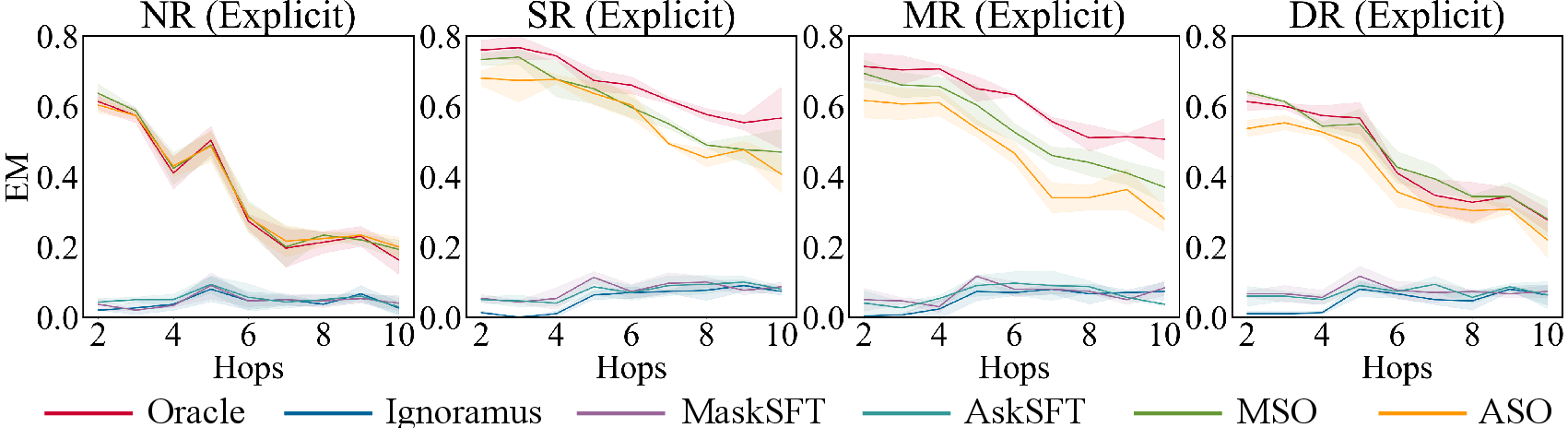

A series of experiments compares these mechanisms, analyzing their impact on reasoning efficiency, retrieval effectiveness, and overall task performance. Figure 3 depicts the performance of explicit memory under varied reasoning conditions.

Figure 3: Overall performances of explicit memory, with mean values (line) and standard deviation values (shading).

Results and Analysis

Explicit Memory

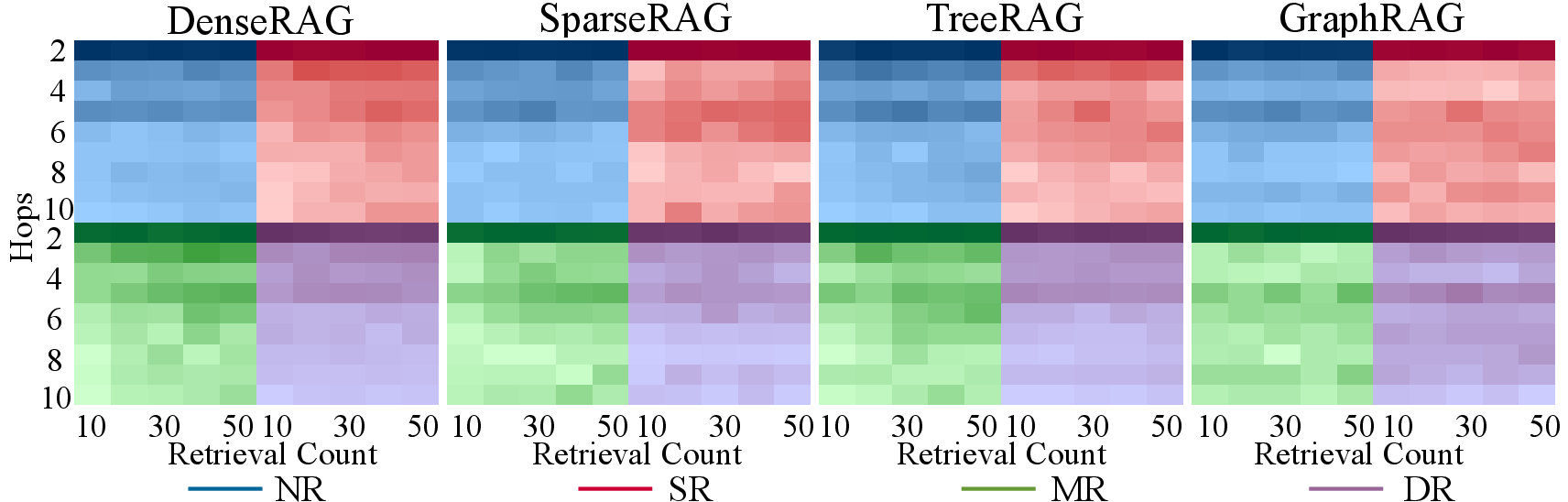

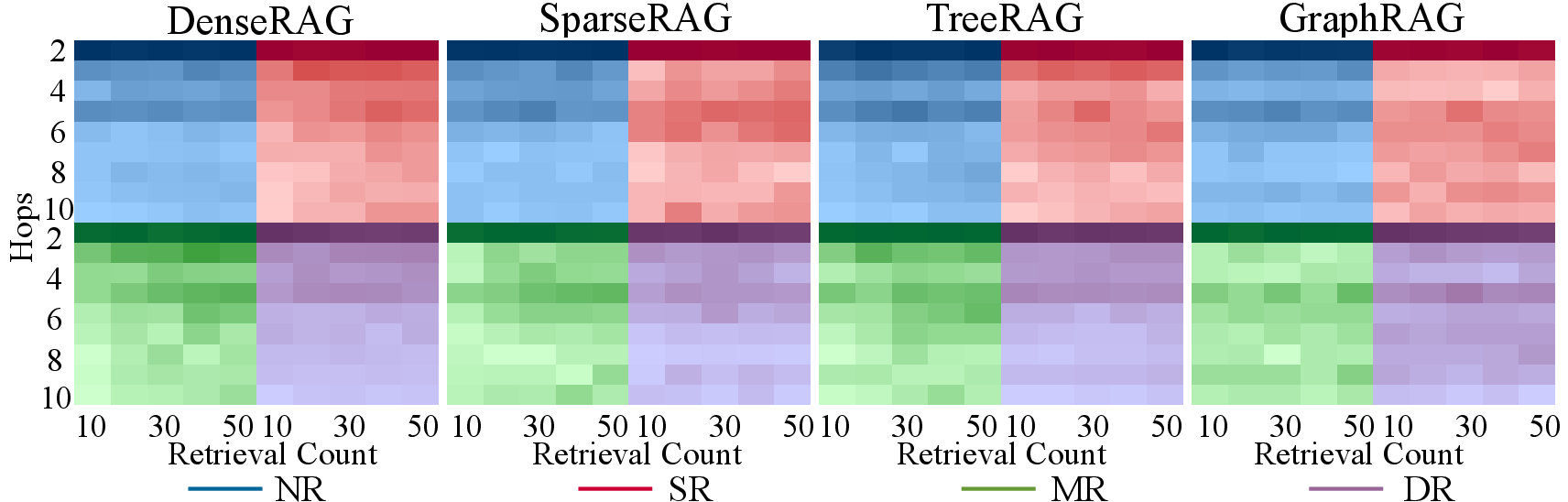

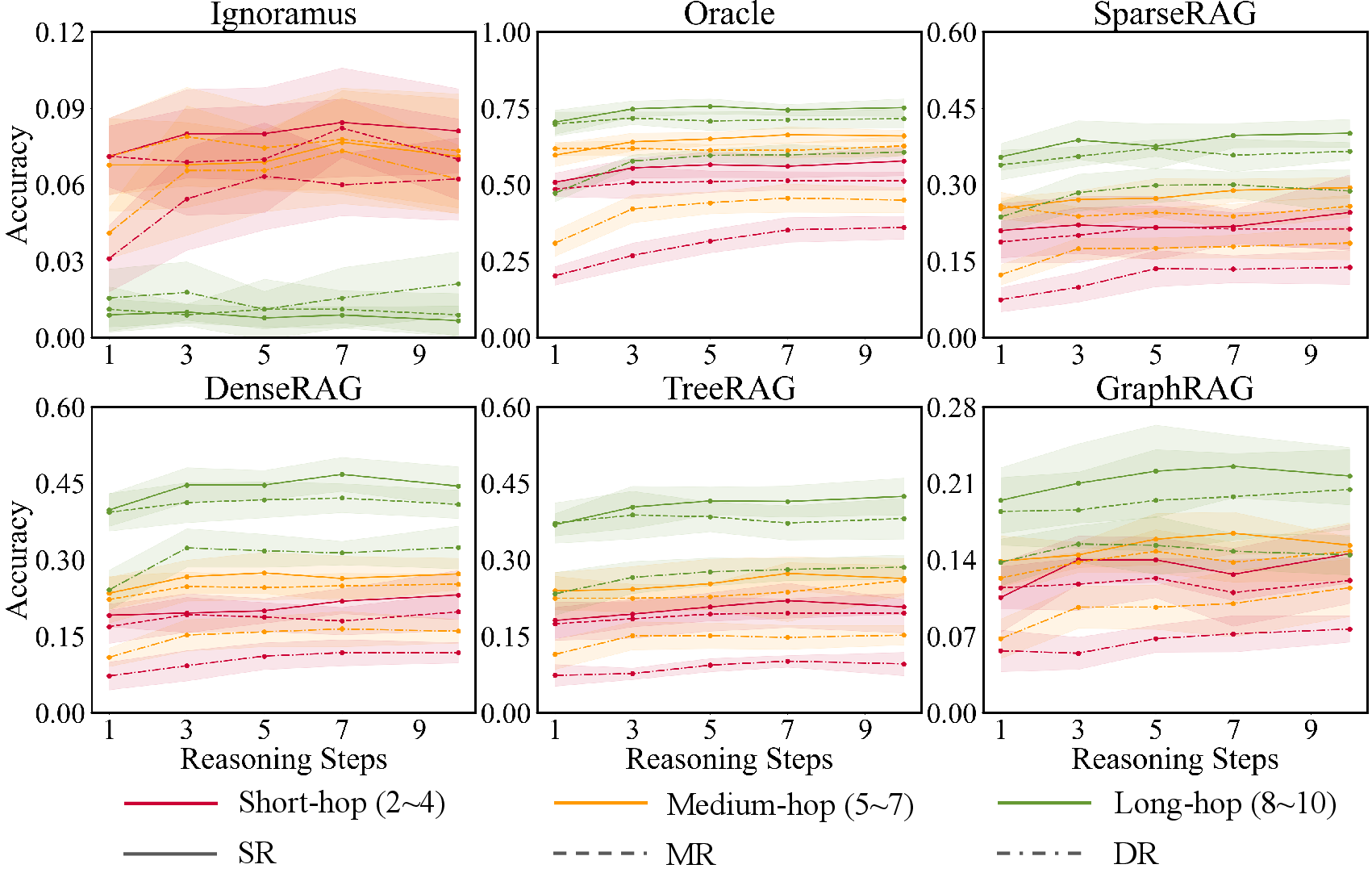

Experiments with explicit memory reveal significant sensitivity to reasoning structures, with sequential and multi-path approaches outperforming naive and decomposition-based reasoning. Results also depend strongly on the number of retrieved statements: performance peaks at intermediate counts, which balance coverage of relevant details against the noise introduced by irrelevant information. Figures 4 and 5 show how performance varies with the number of retrieved statements and the number of reasoning steps, respectively.
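The sequential structure mentioned above can be illustrated with a toy sketch in which each hop retrieves one fact and the result becomes the subject of the next hop. This is an assumption-laden simplification: a list of (subject, relation, object) triples stands in for both the retriever and the LLM's reasoning step, and the function names and example facts are hypothetical rather than taken from the paper.

```python
def retrieve(memory, subject, relation):
    """Return the objects of all stored triples matching (subject, relation)."""
    return [o for s, r, o in memory if s == subject and r == relation]


def sequential_reason(memory, start, relations):
    """Resolve a chain of relations one hop at a time.

    The entity found at each hop is used as the subject of the next
    retrieval. Returns (answer, trace); answer is None if a hop fails.
    """
    entity = start
    trace = []
    for relation in relations:
        hits = retrieve(memory, entity, relation)
        if not hits:
            return None, trace
        entity = hits[0]  # toy choice: take the first matching fact
        trace.append((relation, entity))
    return entity, trace


# Invented personal facts; no single triple answers the question below.
memory = [
    ("user", "best_friend", "Bob"),
    ("Bob", "favorite_cuisine", "Thai"),
    ("user", "favorite_cuisine", "Italian"),
]

# "What cuisine does my best friend like?" needs two hops.
answer, trace = sequential_reason(memory, "user", ["best_friend", "favorite_cuisine"])
```

A naive single-step lookup of `favorite_cuisine` for the user would return the user's own preference, whereas the two-hop chain first resolves the friend and then that friend's cuisine.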

Figure 4: Performance of various retrieved statement counts. Darker colors indicate higher accuracy in MPR tasks.

Figure 5: Performance of various reasoning steps, with mean values (line) and standard deviation values (shading).

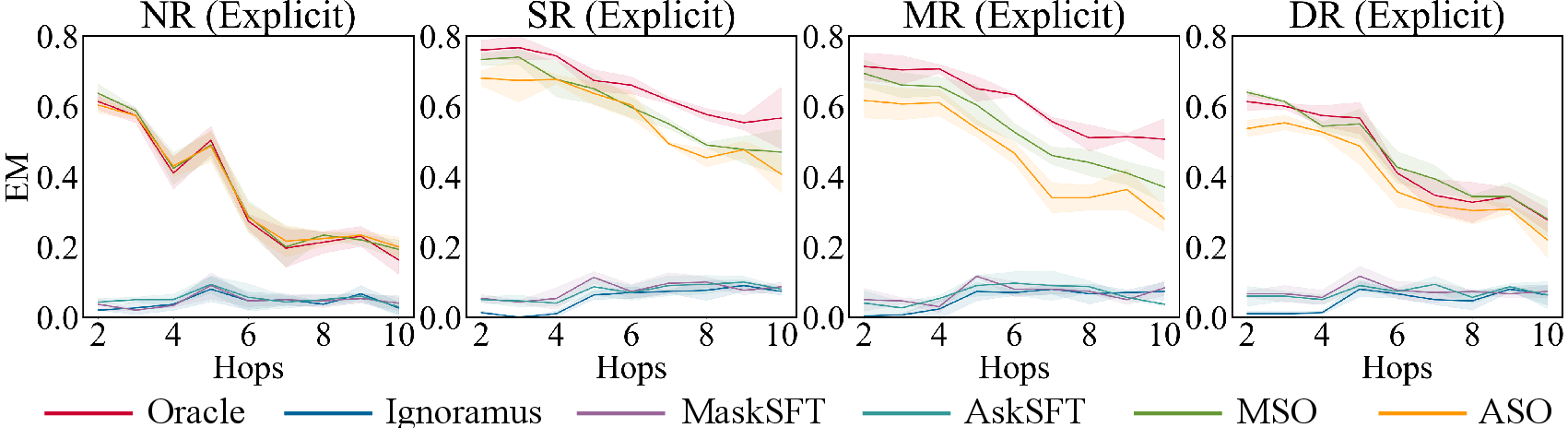

Implicit Memory

The paper finds limitations in implicit memory's ability to handle complex, detailed interactions necessary for MPR tasks. Results suggest potential for implicit mechanisms to enhance explicit memory, particularly when combined in hybrid approaches. Figure 6 highlights the overall performance of implicit memory.

Figure 6: Overall performances of implicit memory.

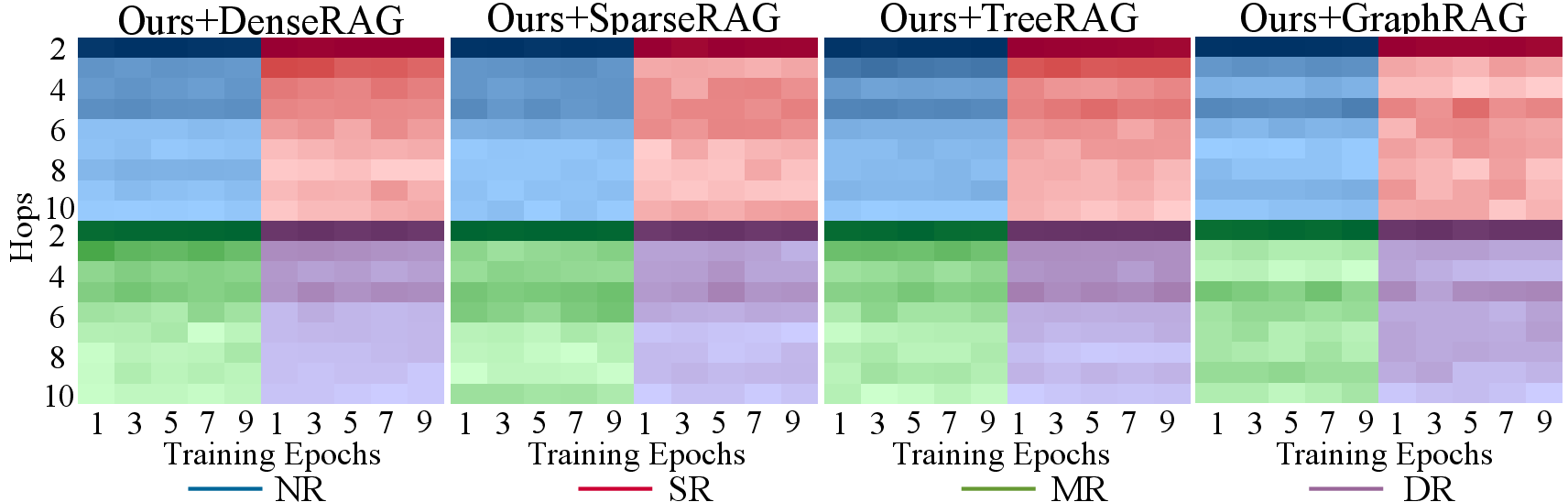

Proposed Hybrid Solutions

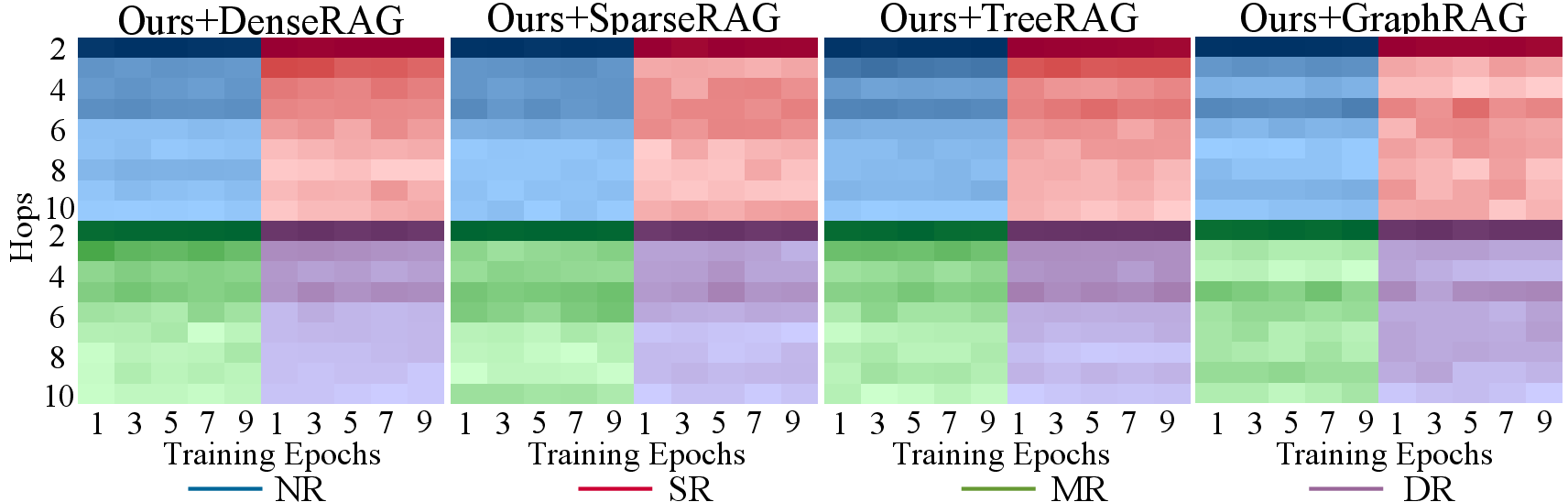

Acknowledging the limitations inherent to standalone approaches, the paper introduces HybridMem, a method that combines the explicit and implicit paradigms. HybridMem applies K-means clustering to organize user data and fine-tunes a separate LoRA adapter for each cluster; at task time, an adapter is selected dynamically based on its relevance to the retrieved statements. This method significantly enhances reasoning capability, as illustrated in Figure 7.
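The clustering and selection steps can be sketched roughly as follows. This is not the authors' implementation: it assumes statements are already embedded as small numeric vectors, uses a plain K-means with deterministic initialization, and picks an adapter by majority vote over the nearest centroids of the retrieved statements. The selection rule, the adapter names, and the toy embeddings are all hypothetical, and no LoRA training is performed.

```python
import math


def dist(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean(points):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def nearest(point, centroids):
    """Index of the centroid closest to the point."""
    return min(range(len(centroids)), key=lambda i: dist(point, centroids[i]))


def kmeans(points, k, iters=10):
    """Plain K-means; the first k points serve as initial centroids."""
    centroids = list(points[:k])
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids


def select_adapter(retrieved_embeddings, centroids):
    """Assumed rule: majority vote of retrieved statements over clusters."""
    votes = [0] * len(centroids)
    for e in retrieved_embeddings:
        votes[nearest(e, centroids)] += 1
    return votes.index(max(votes))


# Toy 2-D "embeddings" of user statements, forming two obvious groups.
embeddings = [(0.0, 0.1), (5.0, 5.1), (0.2, 0.0), (5.2, 4.9)]
centroids = kmeans(embeddings, k=2)

# One hypothetical adapter per cluster; names are placeholders only.
adapters = ["lora_cluster_0", "lora_cluster_1"]
chosen = adapters[select_adapter([(4.8, 5.0), (5.3, 5.2)], centroids)]
```

In a real system each cluster's statements would be used to fine-tune its adapter, and the chosen adapter would be loaded before the model answers with the retrieved statements in context.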

Figure 7: Performance of training epochs. Darker colors indicate higher accuracy in MPR tasks.

Conclusion

The paper presents a framework for evaluating MPR tasks and highlights the substantial challenges they pose to standard memory mechanisms. In its experiments, explicit memory systems coupled with multi-hop reasoning structures perform best, while hybrid approaches offer promising directions for future research. The authors propose continued exploration of adaptive hybrid models and multimodal data integration to further improve personalized reasoning.

These findings underscore the intricacies of integrating personalized information into LLMs, paving the way for advanced applications in user-specific complex task solving. The release of the dataset and code provides a valuable resource for ongoing investigation and development within this domain.