- The paper introduces a structured pipeline that decomposes action reasoning into depth perception, trajectory planning, and action token generation.

- It employs a ViT-based visual encoder and autoregressive decoding, reaching an 86.6% average success rate on the LIBERO benchmark.

- The model demonstrates improved robustness and interpretability, enabling interactive policy steering and precise real-world manipulation.

MolmoAct: Structured Action Reasoning in Space for Robotic Manipulation

Introduction and Motivation

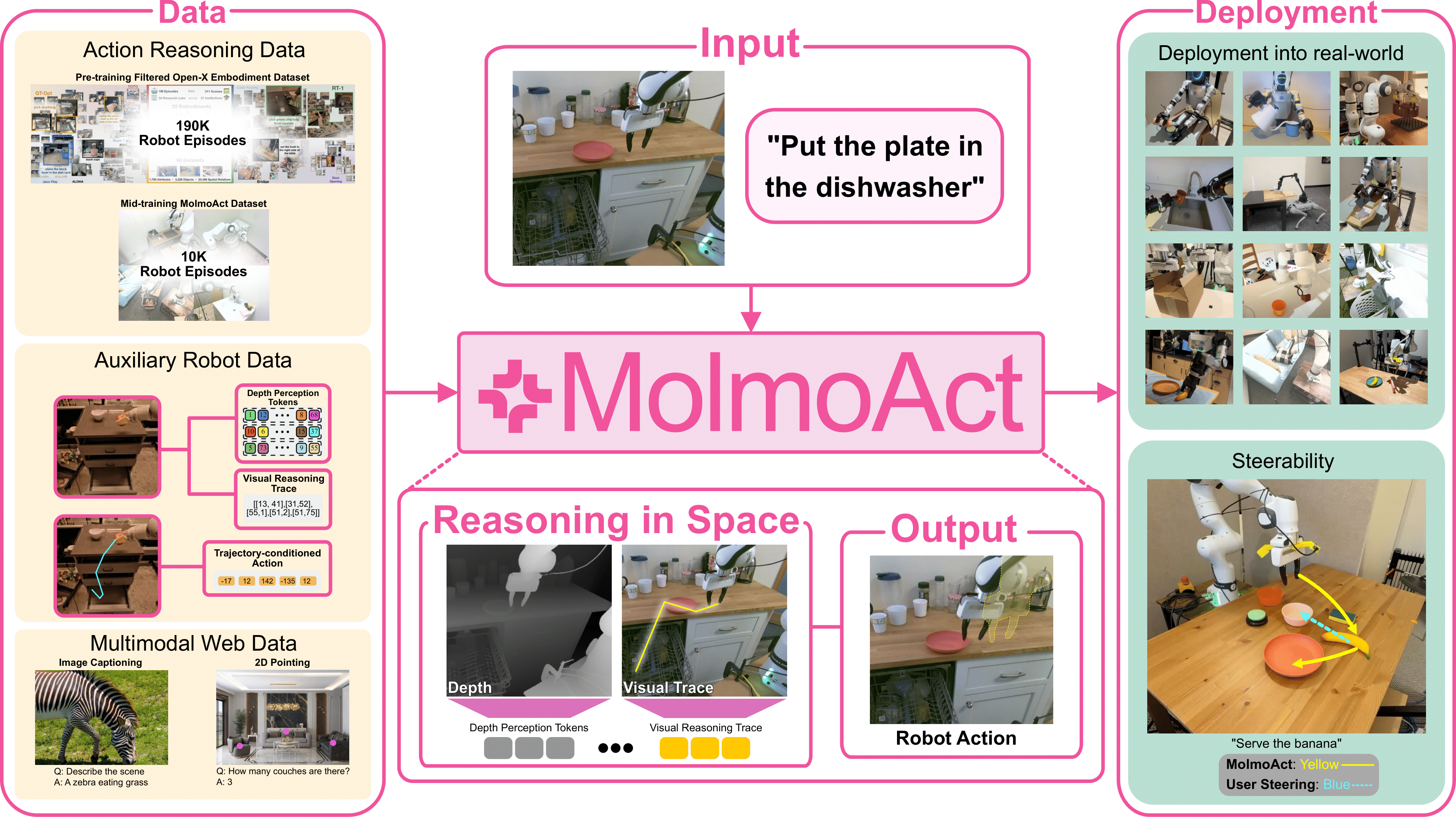

MolmoAct introduces a new class of open Action Reasoning Models (ARMs) for robotic manipulation, addressing the limitations of current Vision-Language-Action (VLA) models that directly map perception and language to control without explicit intermediate reasoning. The core hypothesis is that explicit, spatially grounded reasoning—incorporating depth perception and mid-level trajectory planning—enables more adaptable, generalizable, and explainable robotic behavior. MolmoAct operationalizes this by decomposing the action generation process into three structured, autoregressive stages: depth perception, visual reasoning trace, and action token prediction.

Figure 1: Overview of MolmoAct’s structured reasoning pipeline, with explicit depth, trajectory, and action token chains, each independently decodable for interpretability and spatial grounding.

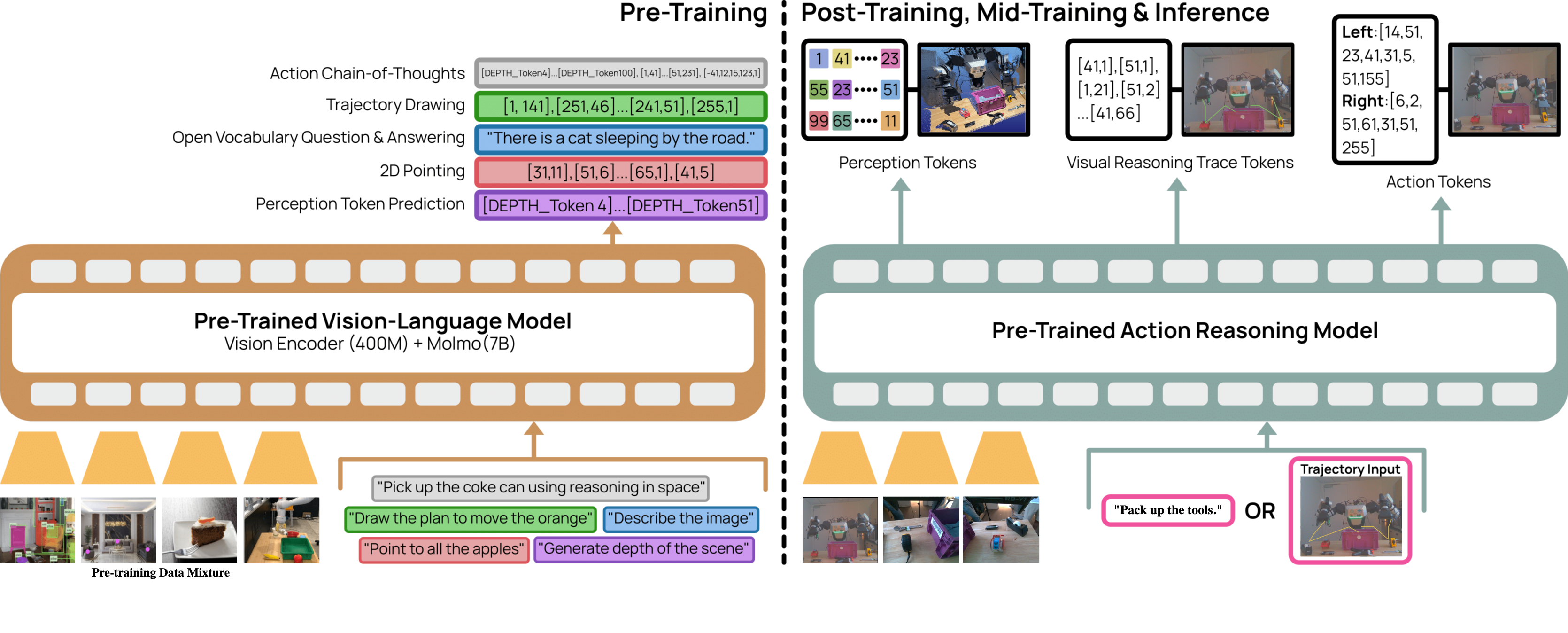

Model Architecture and Reasoning Pipeline

MolmoAct builds on the Molmo vision-language backbone, comprising a ViT-based visual encoder, a vision-language connector, and a decoder-only LLM (OLMo2-7B or Qwen2.5-7B). The model is extended to support structured action reasoning by introducing two auxiliary token streams:

- Depth Perception Tokens: Discrete tokens summarizing the 3D structure of the scene, obtained by VQ-VAE quantization of depth maps predicted by a specialist depth estimator.

- Visual Reasoning Trace Tokens: Polyline representations of the planned end-effector trajectory, projected onto the image plane and discretized as token sequences.
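The two auxiliary tokenizations can be illustrated with a minimal sketch, assuming NumPy. It is a simplification, not MolmoAct's code: a real VQ-VAE snaps learned encoder latents to its codebook, whereas here raw depth patches stand in for those latents. The codebook, the patch size, and the 256-bin coordinate grid are hypothetical choices. The trace function snaps each pixel-space waypoint of the projected polyline to an integer grid cell, which is one common way to turn coordinates into discrete tokens.

```python
import numpy as np


def quantize_depth(depth_map: np.ndarray, codebook: np.ndarray, patch: int) -> np.ndarray:
    """Return one codebook index per non-overlapping patch of a 2D depth map.

    Simplification: raw patches stand in for VQ-VAE encoder latents.
    codebook has shape (num_codes, patch * patch).
    """
    h, w = depth_map.shape
    if h % patch or w % patch:
        raise ValueError("depth map size must be divisible by the patch size")
    # Rearrange (h, w) into row-major flattened patches: (num_patches, patch * patch)
    patches = (
        depth_map.reshape(h // patch, patch, w // patch, patch)
        .transpose(0, 2, 1, 3)
        .reshape(-1, patch * patch)
    )
    # Squared Euclidean distance from every patch to every codebook vector
    dists = ((patches[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)


def discretize_trace(points, width: int, height: int, bins: int = 256) -> list:
    """Snap (x, y) pixel waypoints of a 2D trajectory polyline to integer grid cells."""
    pts = np.asarray(points, dtype=float)
    cols = np.clip((pts[:, 0] / width * bins).astype(int), 0, bins - 1)
    rows = np.clip((pts[:, 1] / height * bins).astype(int), 0, bins - 1)
    return [(int(c), int(r)) for c, r in zip(cols, rows)]


depth = np.zeros((4, 4))
depth[:2, :2] = 1.0  # a raised region in the top-left patch
codebook = np.array([[0.0] * 4, [1.0] * 4])  # 2 hypothetical codes for 2x2 patches
print(quantize_depth(depth, codebook, patch=2))  # [1 0 0 0]
print(discretize_trace([(0, 0), (320, 240), (640, 480)], width=640, height=480))
```

Each depth patch becomes a single token id, and each waypoint becomes a pair of bin indices; the resulting integers can then be mapped into the language model's vocabulary.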

The action reasoning process is autoregressive: given an image and instruction, the model first predicts depth tokens, then trajectory tokens, and finally action tokens, with each stage conditioned on the outputs of the previous. This factorization enforces explicit spatial grounding and enables independent decoding and inspection of each reasoning stage.
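The stage-by-stage conditioning can be sketched as greedy decoding over one shared token prefix. The next-token interface, the end-of-stage marker ids, and the scripted stand-in model below are hypothetical; the point is only that each stage sees every token emitted before it, and that each stage's tokens are returned separately so they can be inspected on their own.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface: maps the full token prefix to the next token id.
NextToken = Callable[[Sequence[int]], int]


@dataclass
class ReasoningChain:
    depth: List[int]
    trace: List[int]
    action: List[int]


def decode_stage(next_token: NextToken, prefix: List[int], end_id: int, max_len: int) -> List[int]:
    """Greedily decode one stage into the shared prefix; stop at end_id or max_len."""
    out: List[int] = []
    for _ in range(max_len):
        tok = next_token(prefix)
        prefix.append(tok)  # later stages condition on every emitted token
        if tok == end_id:
            break
        out.append(tok)
    return out


def reason(next_token: NextToken, prompt: Sequence[int],
           end_ids: Tuple[int, int, int], max_len: int = 64) -> ReasoningChain:
    prefix = list(prompt)  # image + instruction tokens
    depth = decode_stage(next_token, prefix, end_ids[0], max_len)
    trace = decode_stage(next_token, prefix, end_ids[1], max_len)   # sees depth
    action = decode_stage(next_token, prefix, end_ids[2], max_len)  # sees depth + trace
    return ReasoningChain(depth, trace, action)


def make_scripted_model(prompt_len: int, script: Sequence[int]) -> NextToken:
    """Toy stand-in for the decoder: replays a fixed token script."""
    def next_token(prefix: Sequence[int]) -> int:
        return script[len(prefix) - prompt_len]
    return next_token


prompt = [100, 101]
model = make_scripted_model(len(prompt), [5, 6, -1, 7, -2, 8, 9, -3])
print(reason(model, prompt, end_ids=(-1, -2, -3)))
# ReasoningChain(depth=[5, 6], trace=[7], action=[8, 9])
```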

Figure 2: MolmoAct’s two-stage training process, with pre-training on diverse multimodal and robot data, and post-training for task-specific adaptation using multi-view images and either language or visual trajectory inputs.

Data Curation and Training Regime

MolmoAct is trained on a mixture of action reasoning data, auxiliary robot data, and multimodal web data. The action reasoning data is constructed by augmenting standard robot datasets (e.g., RT-1, BridgeData V2, BC-Z) with depth and trajectory token annotations, generated by specialist depth models and by vision-language pointing, respectively. The MolmoAct Dataset, curated specifically for this work, provides over 10,000 high-quality trajectories across 93 manipulation tasks in both home and tabletop environments, with explicit depth and trajectory-trace annotations.

Figure 3: Data mixture composition for pre-training, highlighting the increased proportion of auxiliary depth and trace data in the sampled subset used for MolmoAct.

Figure 4: Example tasks and verb distribution in the MolmoAct Dataset, illustrating the diversity and long-tail action coverage.

The training pipeline consists of three stages:

- Pre-training: On a 26.3M-sample mixture, emphasizing action reasoning and auxiliary spatial data.

- Mid-training: On the MolmoAct Dataset, focusing on high-quality, domain-aligned action reasoning.

- Post-training: Task-specific adaptation via LoRA, with action chunking for efficient manipulation.
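Two ingredients of the post-training stage can be sketched in a few lines, assuming NumPy. The LoRA layer follows the standard low-rank formulation: a frozen weight plus a scaled product of two small trainable matrices, with one factor zero-initialized. The rank, scale, and initialization values are illustrative, not MolmoAct's settings. The chunking helper builds targets of `horizon` consecutive actions per timestep; padding the episode end by repeating the last action is an assumption made here for simplicity.

```python
import numpy as np


class LoRALinear:
    """y = x W^T + (alpha / r) * x A^T B^T, with W frozen and only A, B trained."""

    def __init__(self, W: np.ndarray, r: int, alpha: float, rng: np.random.Generator):
        d_out, d_in = W.shape
        self.W = W  # frozen pretrained weight, shape (d_out, d_in)
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))  # trainable down-projection
        self.B = np.zeros((d_out, r))  # trainable up-projection, zero-initialized
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size


def chunk_actions(actions: np.ndarray, horizon: int) -> np.ndarray:
    """For each timestep t, stack actions[t : t + horizon]; past the end, repeat the last action.

    Returns shape (T, horizon, action_dim).
    """
    T = len(actions)
    chunks = [actions[np.minimum(np.arange(t, t + horizon), T - 1)] for t in range(T)]
    return np.stack(chunks)


rng = np.random.default_rng(0)
layer = LoRALinear(np.eye(3), r=2, alpha=4.0, rng=rng)
x = np.ones((1, 3))
print(np.allclose(layer(x), x))  # True: zero-initialized B leaves the base output unchanged
print(chunk_actions(np.arange(5).reshape(5, 1), horizon=3)[:, :, 0])
```

Only `A` and `B` would receive gradients, which is why LoRA is cheap for task-specific adaptation; predicting a whole chunk per forward pass reduces how often the model must be queried during execution.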

Experimental Evaluation

MolmoAct demonstrates strong zero-shot performance on the SimplerEnv visual-matching benchmark, achieving 70.5% accuracy and outperforming closed-source baselines despite using an order of magnitude less pre-training data. Fine-tuning improves performance further, with MolmoAct-7B-D reaching 71.6% accuracy.

Generalization and Adaptation

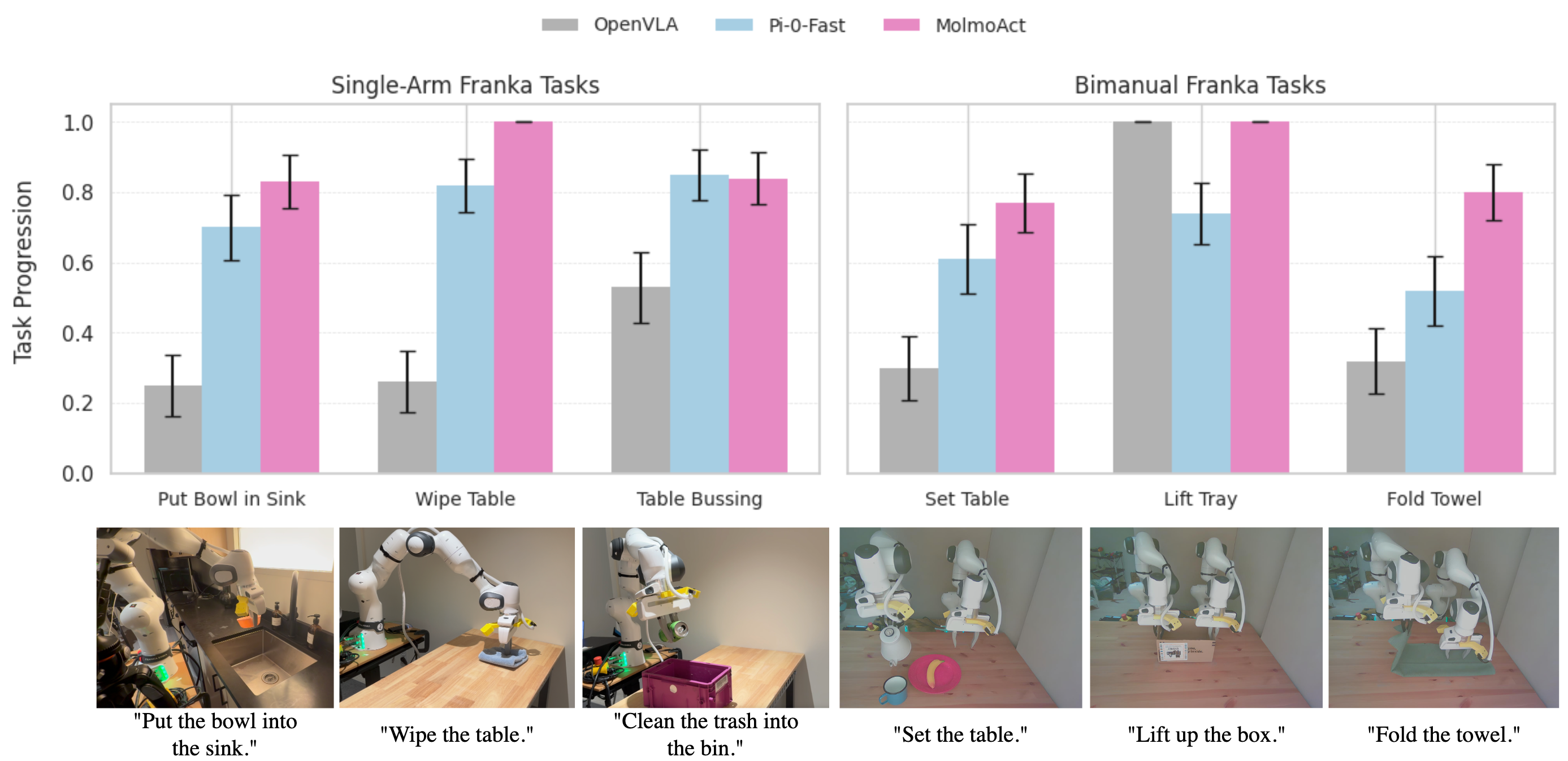

On the LIBERO benchmark, MolmoAct achieves an average success rate of 86.6%, outperforming all baselines, with particularly strong results on long-horizon tasks (+6.3% over ThinkAct). In real-world evaluations on Franka single-arm and bimanual setups, MolmoAct surpasses baselines by 10% and 22.7% in task progression, respectively.

Figure 5: Real-world task progression for single-arm and bimanual Franka tasks, with MolmoAct consistently outperforming OpenVLA and π0 baselines.

Out-of-Distribution Robustness

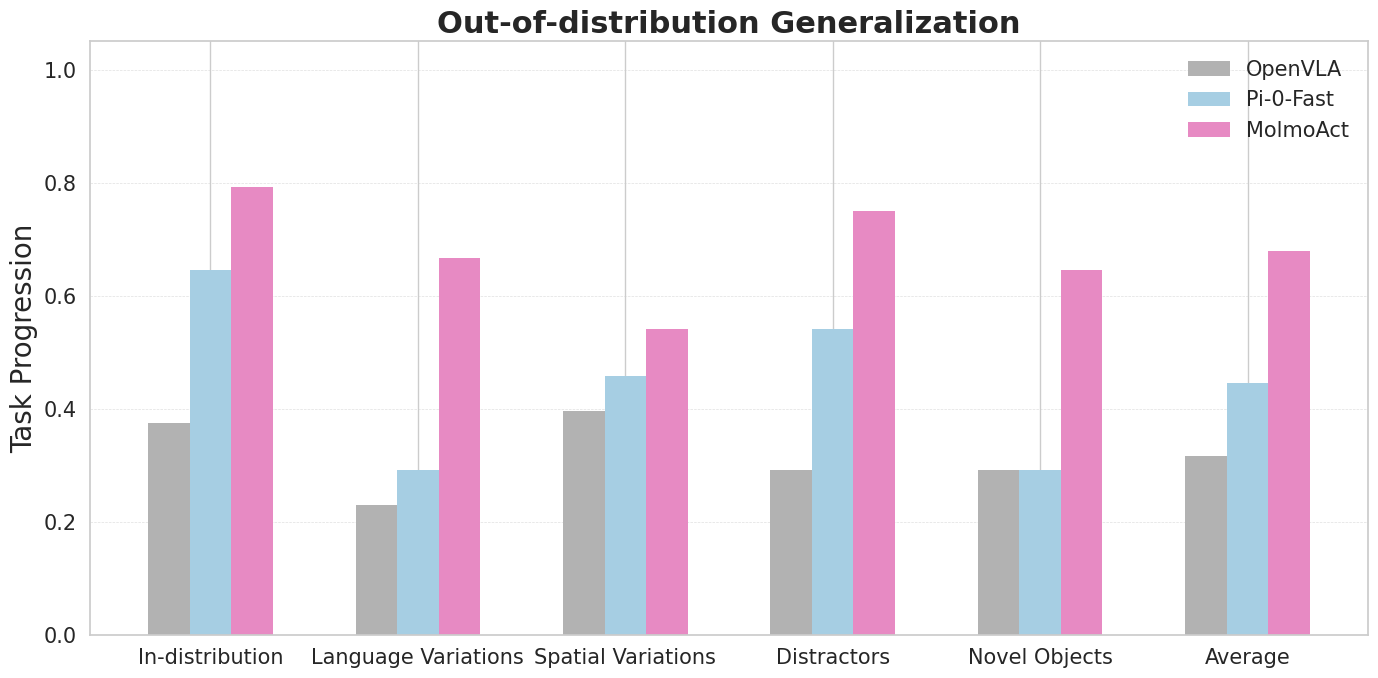

MolmoAct exhibits robust generalization to distribution shifts, achieving a 23.3% improvement in real-world task progression over π0 on axes including language variation, spatial variation, distractors, and novel objects.

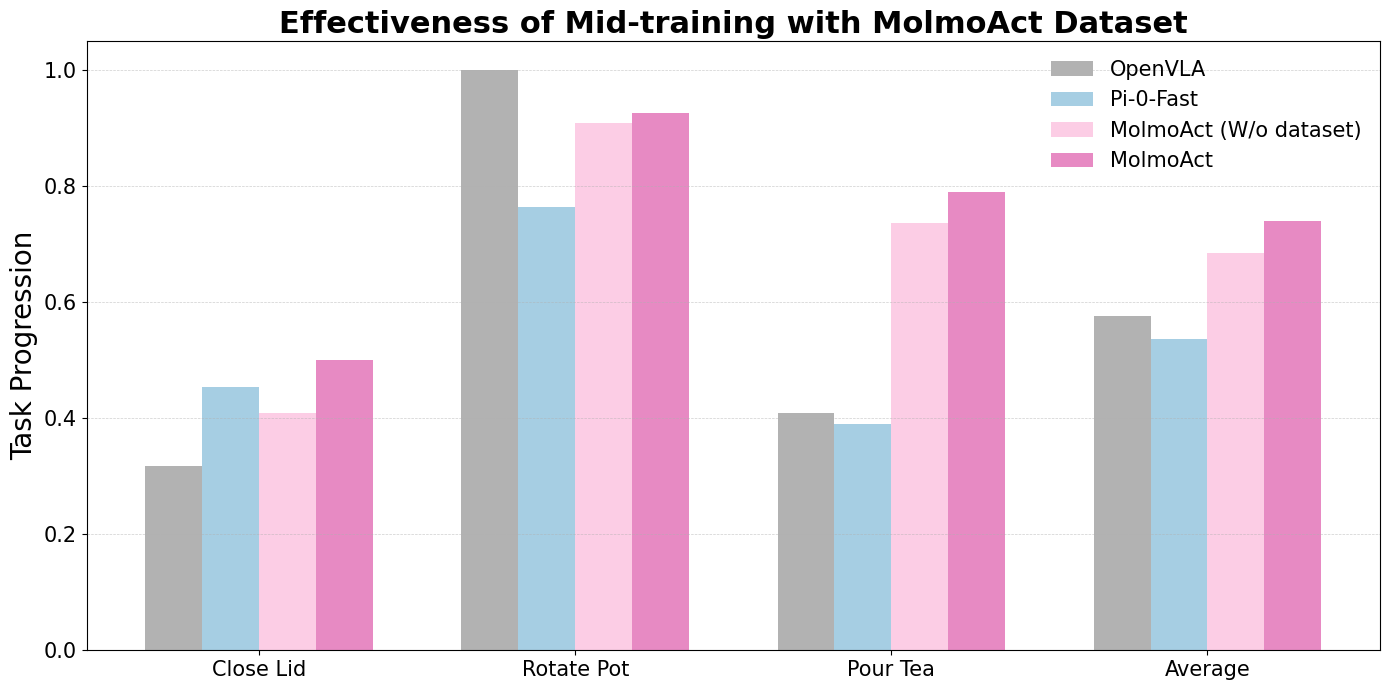

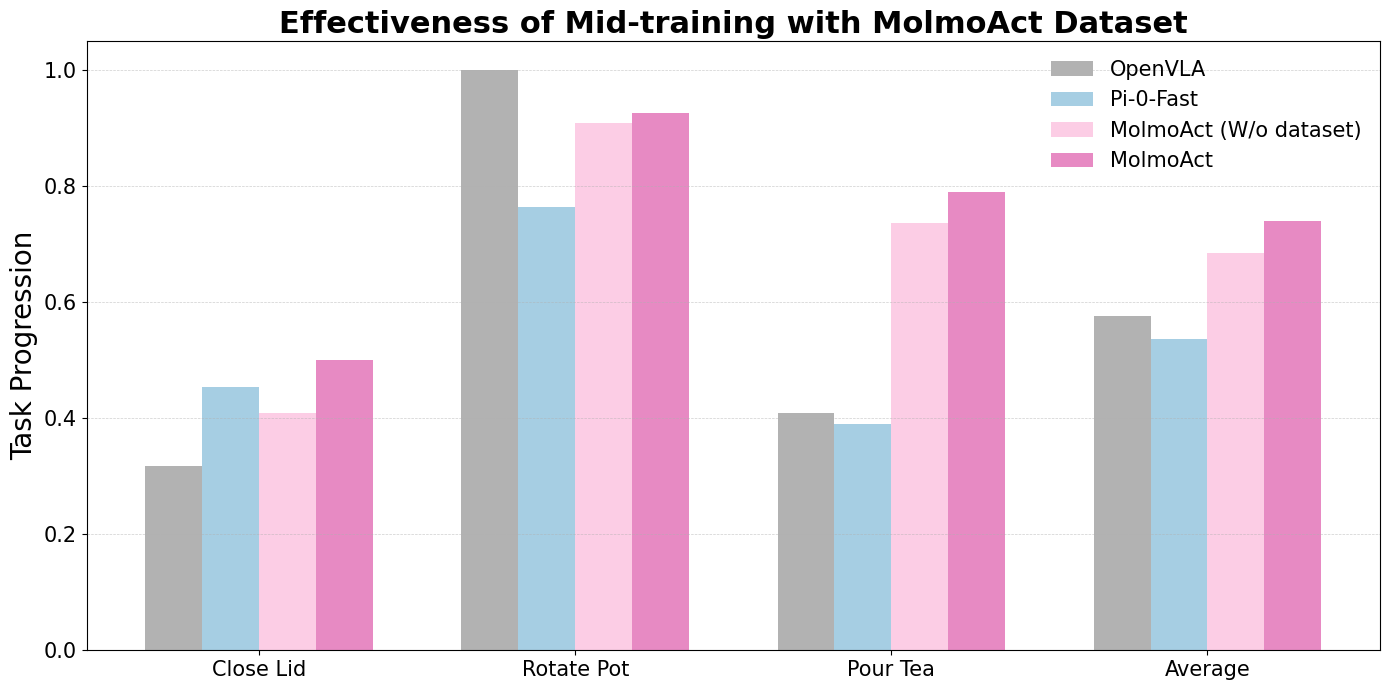

Figure 6: MolmoAct’s generalization beyond training distributions and the impact of mid-training with the MolmoAct Dataset on real-world task performance.

Ablation: Impact of the MolmoAct Dataset

Mid-training on the MolmoAct Dataset yields a consistent 5.5% performance boost across complex real-world tasks, demonstrating the value of high-quality, spatially annotated data for generalist manipulation.

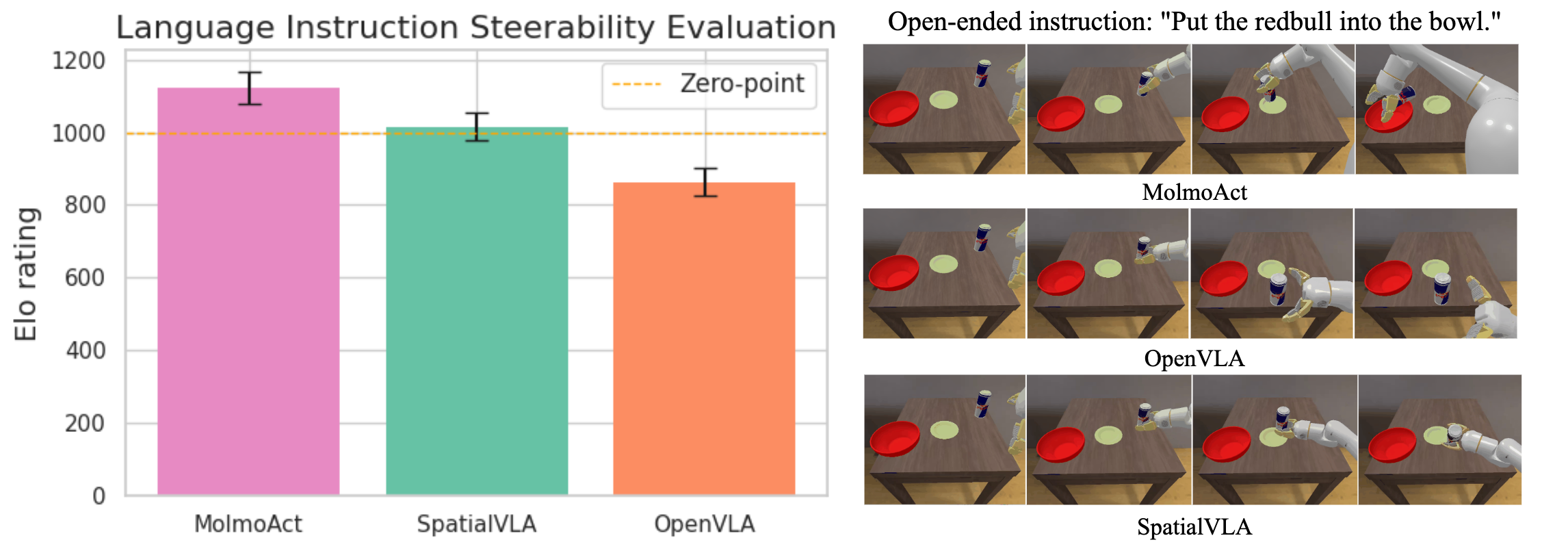

Instruction Following and Steerability

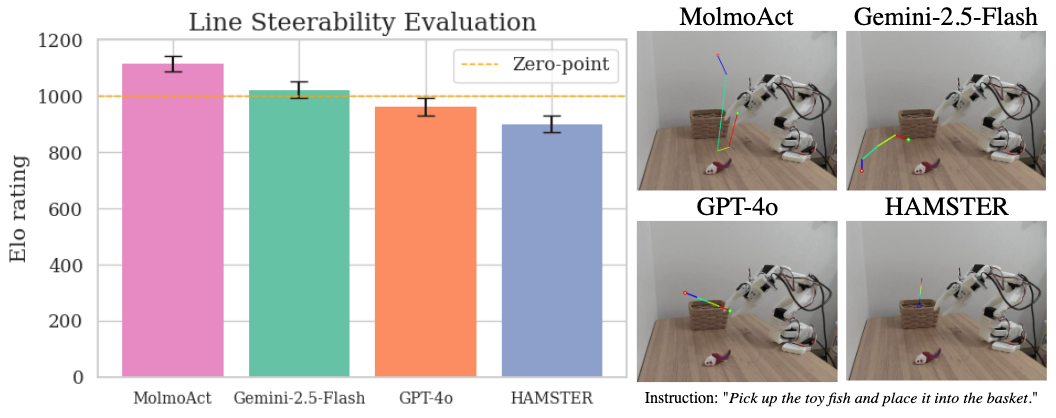

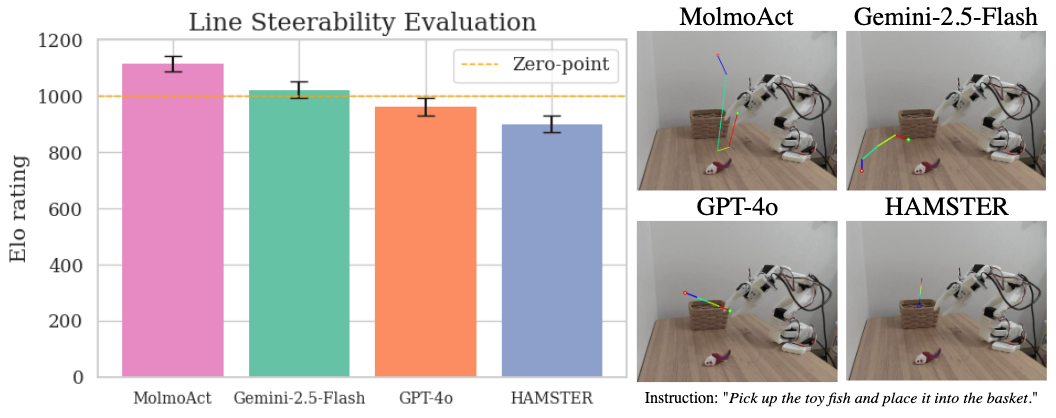

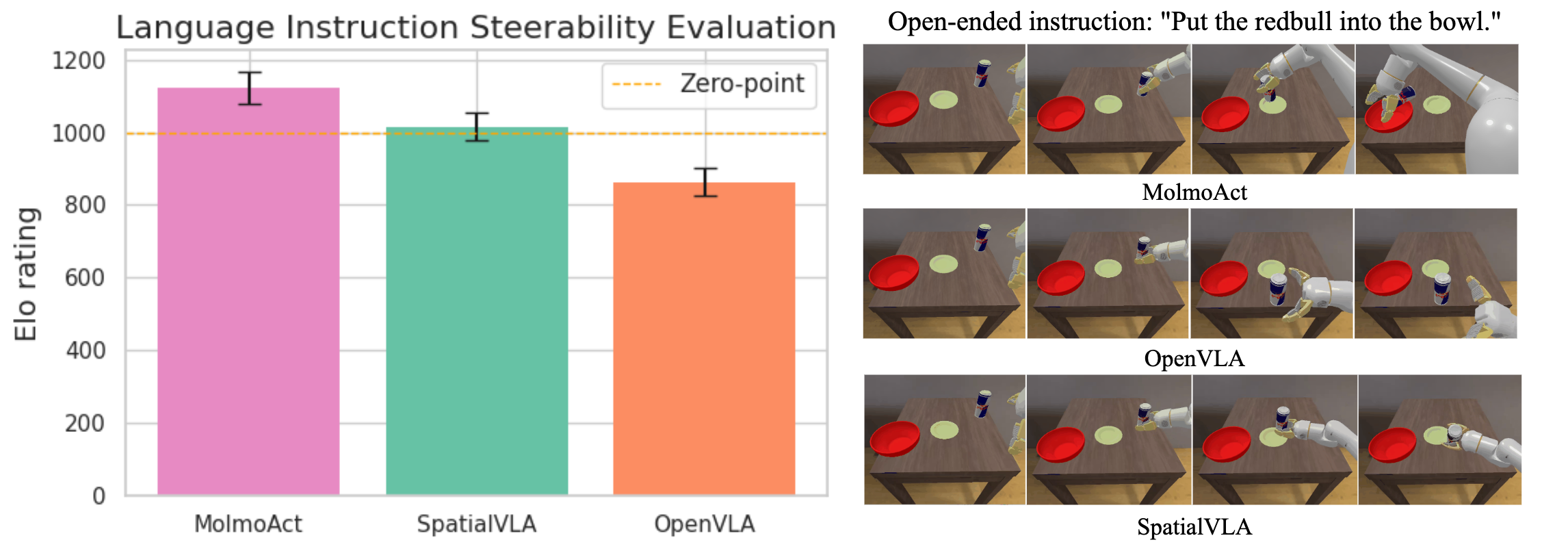

MolmoAct achieves the highest Elo ratings in both open-ended instruction following and visual trace generation, outperforming Gemini-2.5-Flash, GPT-4o, HAMSTER, SpatialVLA, and OpenVLA. Human evaluators consistently prefer MolmoAct’s execution traces and spatial reasoning.
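Elo ratings summarize pairwise human preferences as a single score per model. A minimal sketch of the classic sequential Elo update follows. The source does not state which rating variant, K-factor, or initial rating was used, so `k=32` and `init=1000` are conventional placeholder values and the model names are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A is preferred over B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_from_comparisons(comparisons: Iterable[Tuple[str, str, float]],
                         k: float = 32.0, init: float = 1000.0) -> Dict[str, float]:
    """Sequential Elo over (model_a, model_b, score_a) records.

    score_a is 1.0 if a human preferred A, 0.0 if B, 0.5 for a tie.
    """
    ratings: Dict[str, float] = {}
    for a, b, score_a in comparisons:
        r_a = ratings.setdefault(a, init)
        r_b = ratings.setdefault(b, init)
        e_a = expected_score(r_a, r_b)
        ratings[a] = r_a + k * (score_a - e_a)
        ratings[b] = r_b + k * ((1.0 - score_a) - (1.0 - e_a))  # zero-sum update
    return ratings


print(elo_from_comparisons([("model_x", "model_y", 1.0)]))
# {'model_x': 1016.0, 'model_y': 984.0}
```

Because sequential Elo depends on the order of comparisons, evaluations often shuffle or bootstrap the comparison list; whether that was done here is not stated in the source.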

Figure 7: Line steerability evaluation, with MolmoAct achieving the highest Elo ratings and superior qualitative visual trace predictions.

Figure 8: Language instruction following, with MolmoAct’s execution traces more closely aligning with intended instructions than competing models.

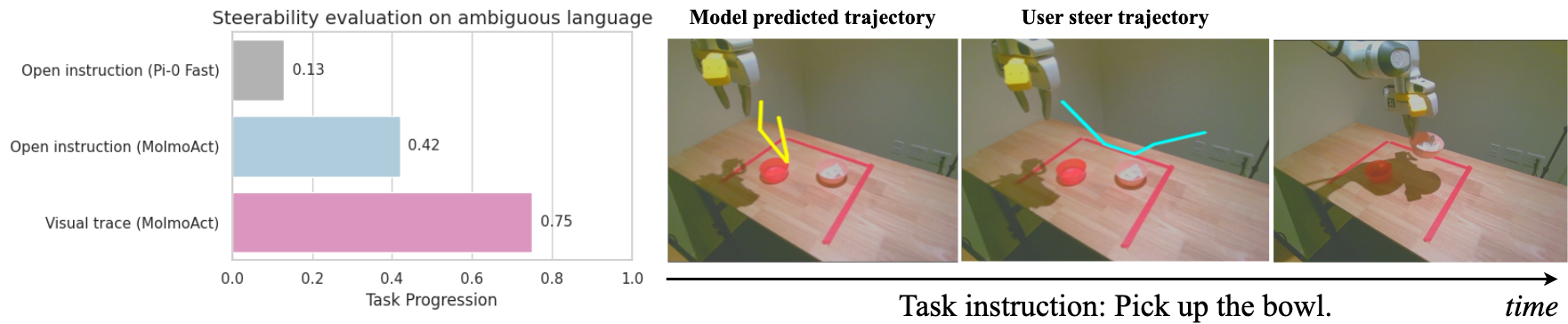

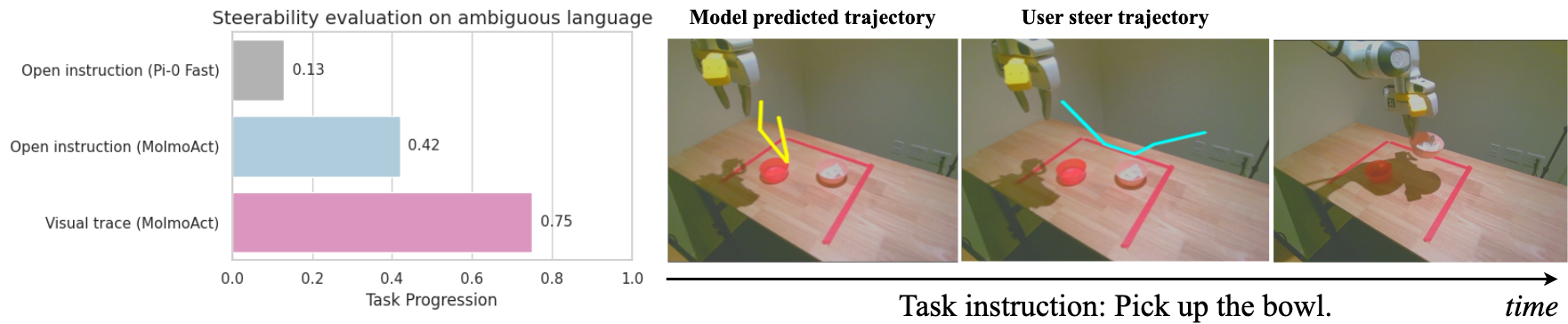

Interactive Policy Steering

MolmoAct’s visual trace interface enables precise, interactive policy steering, outperforming language-only steering by 33% in success rate and achieving a 75% success rate in ambiguous scenarios. This dual-modality control enhances both explainability and user interaction.
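Steering by visual trace amounts to replacing the model's own trace with a user-drawn one before the action stage is decoded. A minimal sketch follows, with a hypothetical rule-based stub standing in for the model and hypothetical end-of-stage marker ids. The user trace is teacher-forced into the prefix, so the action tokens are conditioned on it rather than on a predicted trace.

```python
from typing import Callable, List, Optional, Sequence, Tuple

NextToken = Callable[[Sequence[int]], int]  # hypothetical: prefix -> next token id


def decode_until(next_token: NextToken, prefix: List[int], end_id: int, max_len: int) -> List[int]:
    """Greedily decode into the shared prefix until end_id or max_len tokens."""
    out: List[int] = []
    for _ in range(max_len):
        tok = next_token(prefix)
        prefix.append(tok)
        if tok == end_id:
            break
        out.append(tok)
    return out


def steer(next_token: NextToken, prompt: Sequence[int], end_ids: Tuple[int, int, int],
          max_len: int = 64, user_trace: Optional[Sequence[int]] = None):
    prefix = list(prompt)
    depth = decode_until(next_token, prefix, end_ids[0], max_len)
    if user_trace is None:
        trace = decode_until(next_token, prefix, end_ids[1], max_len)  # model's own plan
    else:
        trace = list(user_trace)  # teacher-force the user-drawn trace tokens
        prefix.extend(trace + [end_ids[1]])
    action = decode_until(next_token, prefix, end_ids[2], max_len)  # conditioned on trace
    return depth, trace, action


def stub(prefix: Sequence[int]) -> int:
    """Toy model (assumes a 1-token prompt, end markers -1/-2/-3).

    Its action token is 10x the first trace token.
    """
    if -1 not in prefix:
        return 5 if len(prefix) == 1 else -1
    if -2 not in prefix:
        return 7 if prefix[-1] == -1 else -2
    if prefix[-1] == -2:
        return prefix[prefix.index(-1) + 1] * 10
    return -3


print(steer(stub, [100], (-1, -2, -3)))                  # ([5], [7], [70])
print(steer(stub, [100], (-1, -2, -3), user_trace=[3]))  # ([5], [3], [30])
```

The same model produces a different action once the trace is overridden, which is the mechanism that lets a user correct a plan without retraining.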

Figure 9: Steerability evaluation, showing the effectiveness of visual trace steering in correcting and guiding robot actions.

Theoretical and Practical Implications

MolmoAct’s explicit spatial reasoning pipeline advances the field by bridging the gap between high-level semantic reasoning and low-level control in embodied agents. The model’s architecture enforces interpretability and enables direct user intervention at the planning stage, addressing the opacity and brittleness of prior VLA models. The demonstrated data efficiency—achieving state-of-the-art results with significantly less robot data—suggests that structured intermediate representations can mitigate the need for massive teleoperation datasets.

The open release of model weights, code, and the MolmoAct Dataset establishes a reproducible foundation for further research in action reasoning, spatial grounding, and interactive robot control. The approach is extensible to multi-embodiment and multi-modal settings, and the modularity of the reasoning chain supports future integration of additional spatial or semantic modalities.

Future Directions

Potential avenues for future research include:

- Scaling the reasoning chain to incorporate richer 3D representations, semantic maps, or object-centric abstractions.

- Extending the visual trace interface to support multi-agent or collaborative scenarios.

- Investigating the integration of reinforcement learning or model-based planning atop the structured reasoning pipeline.

- Exploring transfer to non-robotic embodied domains, such as AR/VR agents or autonomous vehicles.

Conclusion

MolmoAct establishes a new paradigm for action reasoning in robotics, demonstrating that explicit, spatially grounded intermediate representations enable robust, explainable, and adaptable manipulation policies. The model’s strong empirical results, data efficiency, and open-source ethos provide a blueprint for the next generation of generalist embodied agents that reason in space.